Authority metrics are SEO measurements that evaluate the credibility and strength of a website. Domain Power (DP), Domain Rating (DR), and Domain Authority (DA) quantify domain strength using backlinks, trust signals, and traffic data. Authority metrics matter because SEO teams use them as proxies for how search engines interpret domain value and assign visibility.

SEO professionals often assume that high-authority domains dominate every search environment. Others argue that AI-driven discovery has reshaped visibility by rewarding context and semantic relevance. The missing piece is evidence showing how authority metrics behave inside large language models (LLMs).

This study analyzes the relationship between DP, DR, DA, and the LLM Visibility Score across 21,767 domains. The analysis measures how authority scores align with citation frequency and visibility in LLM-generated responses. The results reveal that SEO authority metrics show weak, mostly negative correlations with LLM Visibility, suggesting that LLMs distribute exposure based on contextual relevance rather than authority dominance.

Methodology – How Did We Measure Authority vs. Visibility?

This experiment evaluates whether high-authority domains maintain a visibility advantage inside LLM responses and clarifies how traditional SEO authority metrics translate to AI-driven discovery.

This experiment matters because it tests whether backlink-based authority continues to shape visibility in the new LLM ecosystem.

The dataset integrates 3 primary components listed below.

- Domain-level authority data for DP, DR, and DA collected from verified SEO metric providers.

- LLM Visibility Scores gathered from ChatGPT, Gemini, and Perplexity between August 25 and October 24, 2025.

- Cross-model response logs containing domain citations, visibility percentages, and co-mention frequencies that capture how often and how prominently each domain appeared in model outputs.

The preprocessing steps are listed below.

- Merge domain metrics (DP, DR, DA) with the LLM Visibility dataset by domain name.

- Exclude rows where visibility_score = 0 to isolate meaningful citations for correlation analysis.

- Retain all rows for the Co-Mention Frequency analysis and the Google and YouTube case studies.

- Standardize identifiers, timestamps, and field names for consistency.

- Remove invalid or unverified domains.
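The preprocessing steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's pipeline: the record fields (`domain`, `model`, `visibility_score`), the helper names `normalize_domain`, `is_valid_domain`, and `merge_for_correlation`, and the crude validity rule are all hypothetical.

```python
from dataclasses import dataclass


# Hypothetical record layouts; field names are illustrative, not the study's schema.
@dataclass
class AuthorityRow:
    domain: str
    dp: float
    dr: float
    da: float


@dataclass
class VisibilityRow:
    domain: str
    model: str
    visibility_score: float


def normalize_domain(raw: str) -> str:
    """Lower-case a domain and strip the scheme, a leading 'www.', and trailing slashes."""
    d = raw.strip().lower()
    for prefix in ("https://", "http://"):
        if d.startswith(prefix):
            d = d[len(prefix):]
    if d.startswith("www."):
        d = d[len("www."):]
    return d.rstrip("/")


def is_valid_domain(d: str) -> bool:
    """Crude validity check (assumption): contains a dot and no whitespace."""
    return "." in d and not any(ch.isspace() for ch in d)


def merge_for_correlation(authority, visibility):
    """Join authority metrics to visibility rows by normalized domain name.

    Invalid domains are dropped, and rows with visibility_score == 0 are
    excluded, mirroring the filter used for the correlation analysis.
    """
    by_domain = {}
    for row in authority:
        d = normalize_domain(row.domain)
        if is_valid_domain(d):
            by_domain[d] = row
    merged = []
    for v in visibility:
        d = normalize_domain(v.domain)
        if d in by_domain and v.visibility_score != 0:
            a = by_domain[d]
            merged.append({"domain": d, "model": v.model, "dp": a.dp, "dr": a.dr,
                           "da": a.da, "visibility_score": v.visibility_score})
    return merged


# Hypothetical sample rows, not study data.
authority = [AuthorityRow("https://www.Example.com/", 40, 55, 50),
             AuthorityRow("bad domain", 10, 10, 10)]
visibility = [VisibilityRow("example.com", "gemini", 80),
              VisibilityRow("example.com", "openai", 0)]
rows = merge_for_correlation(authority, visibility)
```

Normalizing before the join matters because the same domain often arrives with different schemes or `www.` prefixes from different providers; without it, matching rows would silently fail to merge.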

The analytical steps are listed below.

- Compute Pearson correlations between DP, DR, DA, and visibility indicators such as Average Visibility Score and Win Rate (the share of appearances with a visibility score of 100).

- Visualize the relationships with scatter plots and box plots across the 3 LLMs.

- Segment results by competition level, defined by the number of co-mentioned domains within each LLM response.

- Apply IQR-based filtering to remove statistical outliers and improve the robustness of the results.
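A minimal sketch of the correlation, Win Rate, and outlier steps, using only the Python standard library. The helper names, the toy numbers, and the choice to apply Tukey's 1.5 × IQR fences to the visibility score alone are assumptions; the study does not state which variable it filtered or which multiplier it used.

```python
import statistics
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def win_rate(scores):
    """Share of appearances with a full visibility score of 100."""
    return sum(1 for s in scores if s == 100) / len(scores)


def iqr_filter(pairs, k=1.5):
    """Keep (authority, visibility) pairs whose visibility lies inside the
    Tukey fences [Q1 - k*IQR, Q3 + k*IQR] (k = 1.5 is an assumption)."""
    q1, _, q3 = statistics.quantiles([y for _, y in pairs], n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [(x, y) for x, y in pairs if lo <= y <= hi]


# Toy values for one model (hypothetical, not study data): DA vs. visibility.
da = [20, 35, 50, 65, 80, 90]
vis = [90, 85, 88, 70, 75, 60]
kept = iqr_filter(list(zip(da, vis)))
r = pearson_r([x for x, _ in kept], [y for _, y in kept])
print(f"r = {r:.2f}, win rate = {win_rate([100, 80, 100, 50]):.0%}")
```

Running the filter before computing `r` matters because Pearson correlation is sensitive to a handful of extreme points; the same `pearson_r` call would be repeated per metric (DP, DR, DA) and per model.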

The dataset covers 21,767 unique domains with available authority metrics and verified visibility data. This design tests whether SEO authority metrics (DP, DR, DA) predict domain prominence within LLM-generated responses or whether new, context-based signals define visibility in AI search.

What Is the Final Takeaway?

The analysis demonstrates that DP, DR, and DA are weak predictors of LLM Visibility. Higher authority scores do not guarantee exposure in AI-generated results. Across all 3 metrics and 3 models, correlations range from roughly 0.00 (DR on OpenAI) to –0.21 (DA on Perplexity), indicating that traditional authority signals have limited influence on how often domains appear in LLM responses.

Domains with lower authority often achieve equal or higher visibility, suggesting that LLMs distribute exposure based on contextual relevance rather than backlink weight. The Win Rate metric reinforces this pattern, as top-visibility positions favor domains that match topic intent rather than those with the strongest authority metrics.

LLMs appear to treat authority and relevance as separate factors, creating a more balanced visibility landscape where smaller domains perform alongside high-authority sites when their content matches query intent. The results indicate that authority-based hierarchies carry limited influence inside AI search environments. The pattern is consistent across models: authority carries less weight, contextual alignment carries more, and exposure is more evenly distributed.

SEO teams need to treat LLM Visibility as a new performance baseline. Brands that optimize for contextual accuracy and entity clarity are better positioned for stronger discovery, broader reach, and sustained presence across AI-generated results.

How Do Authority Metrics Correlate with LLM Visibility?

I, Manick Bhan, together with the Search Atlas research team, analyzed correlations between authority metrics and LLM Visibility across 21,767 domains. The breakdown below shows how DP, DR, and DA relate to visibility.

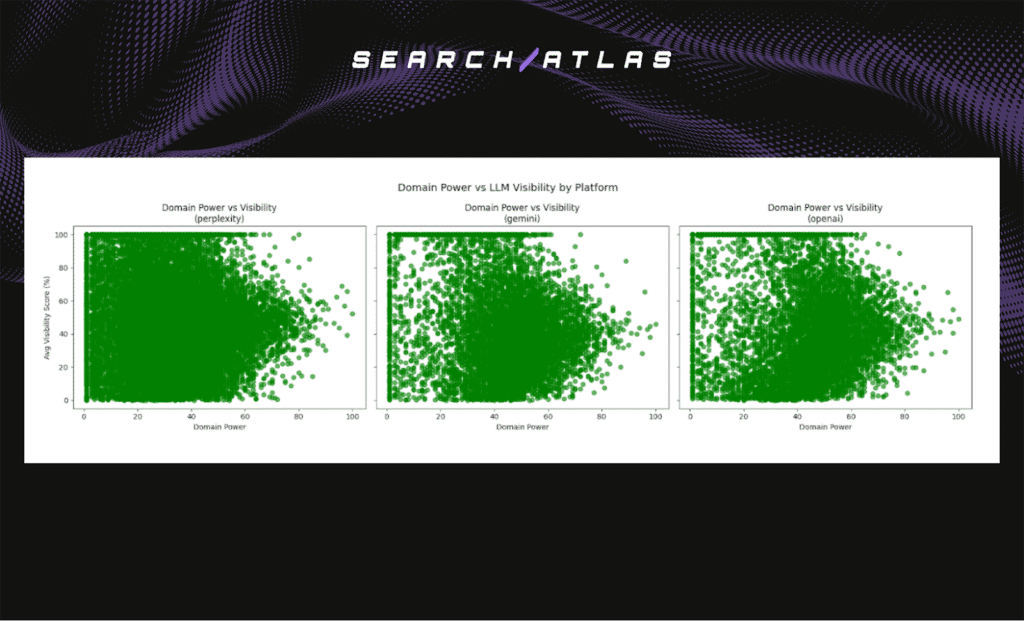

Domain Power

Domain Power measures verified performance using live data from Google Search Console. DP reflects real search strength by combining ranking reach and keyword coverage. Domain Power matters because it represents measurable visibility from verified Google data rather than estimated backlinks.

The headline results are shown below.

- OpenAI correlation. r = –0.12

- Perplexity correlation. r = –0.18

- Gemini correlation. r = –0.09

- Direction of trend. Mild negative slope across all models

High-DP domains occasionally underperform, while mid-tier domains maintain steadier visibility across LLM responses. The trend indicates that search-derived authority does not guarantee AI citation frequency. The pattern is consistent with contextual precision and topical relevance outweighing historical ranking strength, suggesting that LLMs evaluate authority differently from traditional search engines.

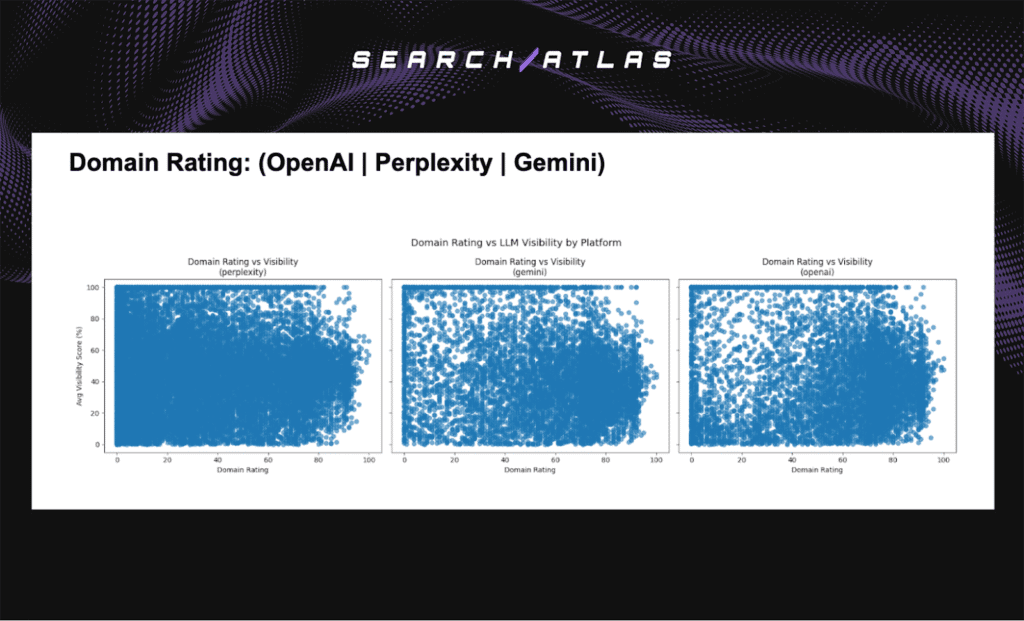

Domain Rating

Domain Rating measures backlink quantity and quality to estimate overall domain authority. DR reflects a site’s link profile and influence within the web graph. DR matters because it has long been treated as a leading predictor of SEO strength.

The headline results are shown below.

- OpenAI correlation. r ≈ 0.00 (neutral)

- Perplexity correlation. r = –0.17

- Gemini correlation. r = –0.14

- Direction of trend. Weak negative slope across most models

High-DR domains vary widely across the visibility spectrum, showing no consistent advantage in generative outputs. The data indicates that backlink-weighted authority fails to predict prominence inside large language model responses. LLM visibility appears to rely on information quality and contextual alignment rather than backlink count or referring domain volume.

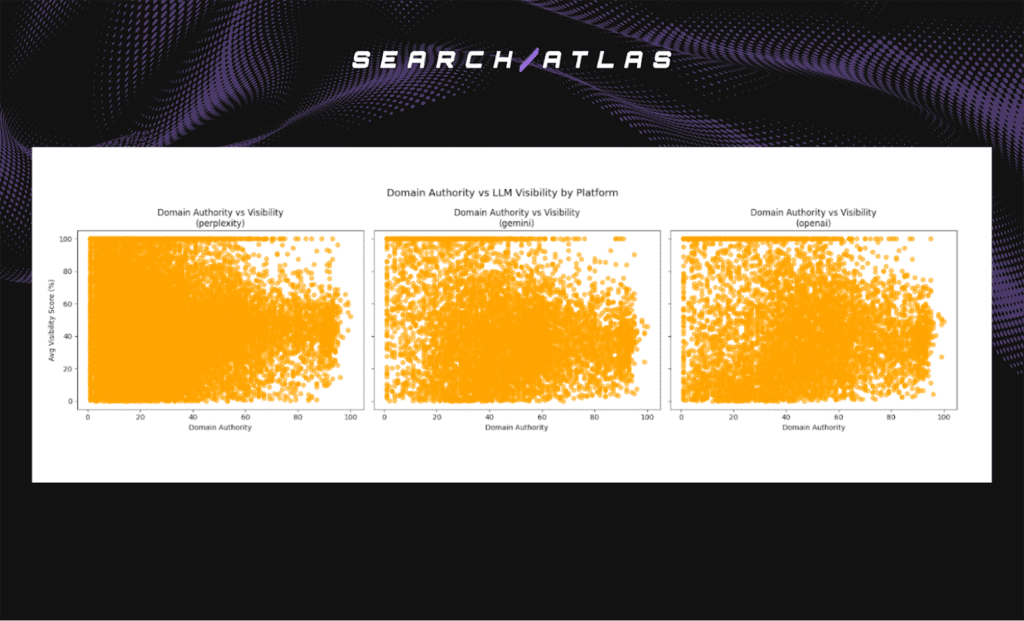

Domain Authority

Domain Authority aggregates link metrics and estimated trust into a single 0-100 index. DA summarizes external linking patterns and historical credibility. DA matters because it has been one of the most recognized authority benchmarks in SEO performance analysis.

The headline results are shown below.

- OpenAI correlation. r = –0.10

- Perplexity correlation. r = –0.21

- Gemini correlation. r = –0.13

- Direction of trend. Consistent weak-to-moderate negative correlation across all models

Domains above DA 80 display the greatest volatility, with no stable advantage in visibility or win rate. Broad backlink portfolios fail to ensure consistent appearance in LLM citations.

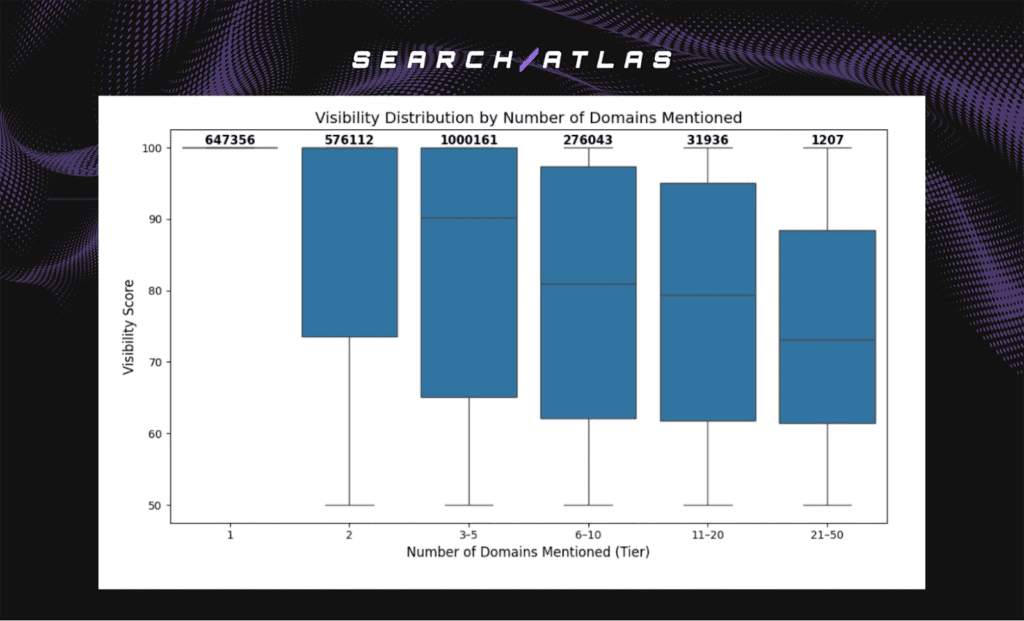
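Squaring a Pearson coefficient gives r², the share of variance in one variable that a simple linear fit on the other accounts for. Applying that to the 9 coefficients reported above shows how little authority explains: the largest magnitude, DA on Perplexity (r = –0.21), corresponds to r² = 0.0441, or about 4.4% of the variance in visibility. The sketch below assumes the reported "r ≈ 0.00" for DR on OpenAI can be treated as exactly 0.00.

```python
# Pearson r values reported above; "r ≈ 0.00" for DR on OpenAI is treated as 0.00.
reported_r = {
    "DP": {"OpenAI": -0.12, "Perplexity": -0.18, "Gemini": -0.09},
    "DR": {"OpenAI": 0.00, "Perplexity": -0.17, "Gemini": -0.14},
    "DA": {"OpenAI": -0.10, "Perplexity": -0.21, "Gemini": -0.13},
}


def variance_explained(r: float) -> float:
    """r squared: share of variance a simple linear fit on one variable explains in the other."""
    return r * r


for metric, by_model in reported_r.items():
    for model, r in by_model.items():
        print(f"{metric} / {model}: r = {r:+.2f}, r^2 = {variance_explained(r):.1%}")

strongest = max(abs(r) for by_model in reported_r.values() for r in by_model.values())
print(f"largest |r| = {strongest:.2f} -> r^2 = {variance_explained(strongest):.1%}")
```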

Does Co-Mention Frequency Affect Visibility?

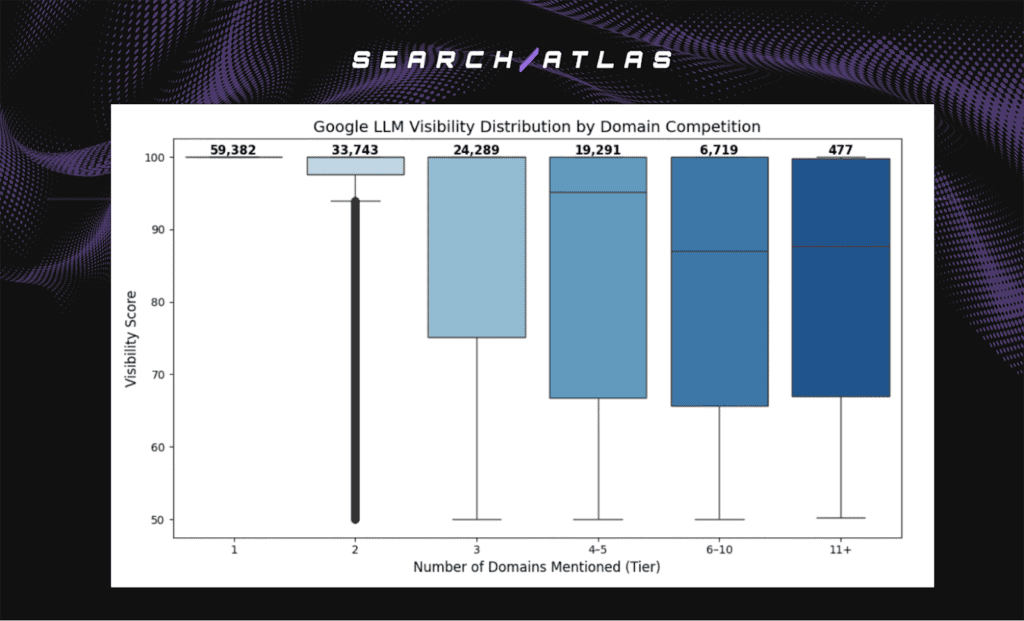

Co-mention frequency measures how many domains appear together in a single LLM response. The analysis covers 368,972 unique domains with visibility scores between 50 and 100 and examines how competition within LLM responses influences visibility. The breakdown below shows how co-mention frequency relates to exposure.

Co-Mention Frequency

Co-mention frequency represents competition intensity within each answer and determines how attention is distributed across cited sources. Co-mention frequency matters because it reveals whether reduced competition improves domain prominence across models.

The headline results are shown below.

- Fewer co-mentions correspond with higher median visibility.

- Increased competition reduces visibility across all models.

- Single-domain responses achieve the highest win rates.

- Visibility declines steadily from 2 to 10 co-mentions.

- Beyond 10 domains, visibility variance widens as LLMs diversify citations.

Each competition tier behaves differently. Low competition amplifies visibility because attention within a response is split among fewer cited sources. Medium tiers introduce variability, balancing diversity and relevance. High competition disperses exposure more evenly, suggesting that prominence depends on contextual precision rather than backlink strength.

Competition intensity emerges as the strongest predictor of exposure in LLM environments. Domains achieve their highest visibility when cited alone or alongside few competitors, indicating that response density, not authority metrics, determines prominence inside AI-generated search results.
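Co-mention tiers can be computed by counting the domains cited in each response and grouping every cited domain's visibility score under that count. The sketch below is hypothetical: it represents each response as a dict mapping domain to visibility score and borrows the tier boundaries used in the Google and YouTube case studies (1, 2 to 5, 6 to 10, 11+).

```python
import statistics
from collections import defaultdict


def tier(co_mentions: int) -> str:
    """Bucket a response by how many domains it cites."""
    if co_mentions <= 1:
        return "1"
    if co_mentions <= 5:
        return "2-5"
    if co_mentions <= 10:
        return "6-10"
    return "11+"


def visibility_by_tier(responses):
    """responses: iterable of dicts, each mapping domain -> visibility score
    for one LLM answer. Returns {tier: (median visibility, win rate)}."""
    scores = defaultdict(list)
    for cited in responses:
        # Co-mention frequency = number of distinct domains cited in the answer.
        scores[tier(len(cited))].extend(cited.values())
    return {
        t: (statistics.median(v), sum(s == 100 for s in v) / len(v))
        for t, v in scores.items()
    }


# Hypothetical responses, not study data.
responses = [
    {"a.com": 100},
    {"a.com": 100, "b.com": 80, "c.com": 60},
    {f"site{i}.com": 50 + i for i in range(12)},
]
summary = visibility_by_tier(responses)
for t in ("1", "2-5", "6-10", "11+"):
    if t in summary:
        print(t, summary[t])
```

The median is used rather than the mean so that a few full-visibility citations in a crowded response do not dominate a tier's summary.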

Case Studies: Google and YouTube

I, Manick Bhan, together with the Search Atlas research team, analyzed Google and YouTube to understand how competition intensity affects visibility across large language model responses. The analysis used all responses in which google.com or youtube.com appeared, covering visibility scores between 50 and 100.

The breakdown below shows how these domains perform across co-mention tiers.

Google

Google maintains near-perfect visibility (~100%) when it is the only cited domain in an LLM response. Visibility remains high across low-competition tiers (2 to 5 domains) but gradually declines as domain density increases.

The headline results are shown below.

- Single-domain visibility. ~100% median score

- 2 to 5 co-mentions. Stable visibility

- 6 to 10 co-mentions. Decline in visibility

- 11+ co-mentions. Wider variance but continued inclusion

Query-level analysis reveals that Google achieves 100% visibility in technical and product-specific prompts (“Google Sheets API,” “Google Search Console setup”) but falls to 0% visibility in broader or competitive topics (“best AI tools,” “top search platforms”).

These results show that Google retains strong authority signals but shares space when LLMs diversify sources, plausibly to maintain neutrality.

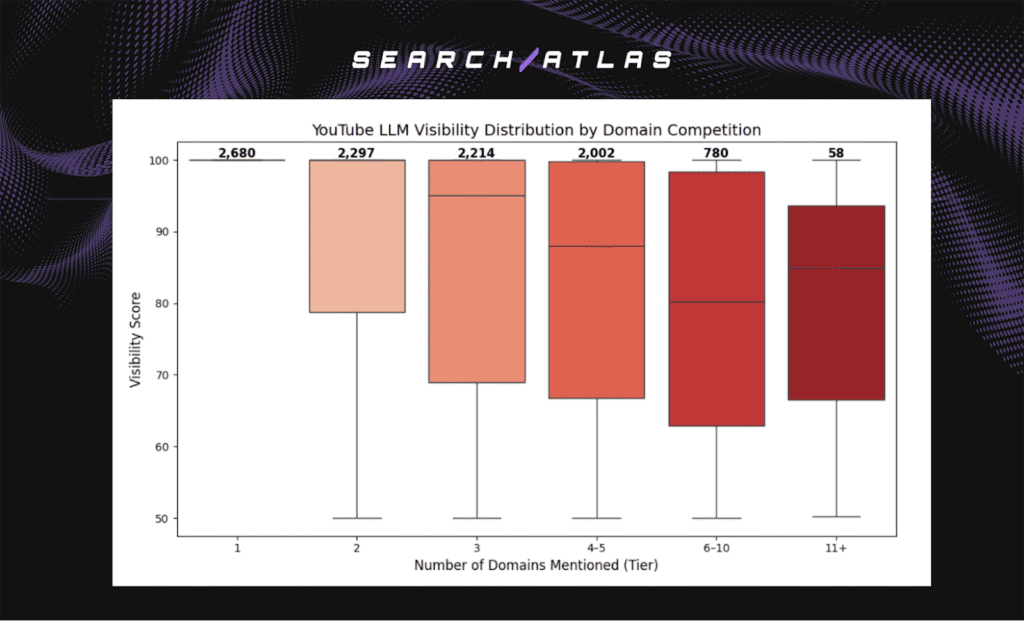

YouTube

YouTube follows a similar trend but exhibits stronger resilience under high competition. It achieves 100% visibility when cited alone and sustains high exposure across low to mid competition levels.

The headline results are shown below.

- Single-domain visibility. 100% median score

- 2 to 5 co-mentions. High stability (median 90 to 95%)

- 6 to 10 co-mentions. Moderate decline (median ~80%)

- 11+ co-mentions. Median visibility remains near 80%

Queries driving 100% visibility often relate to tutorials or video content (“YouTube Shorts monetization,” “how to upload a video”), while 0% visibility appears in text-heavy queries where reference sites dominate.

Both domains perform strongest in low-competition contexts and remain present even as competition rises. The analysis suggests that LLM visibility depends more on contextual relevance than on absolute authority, with Google and YouTube illustrating how brand trust sustains inclusion but does not guarantee dominance.

Which Factors Best Predict LLM Visibility?

The 3 core predictors were analyzed to understand what drives exposure within large language model responses. These variables measure authority, competition, and prominence, capturing how LLMs allocate visibility across different domains.

The headline results are shown below.

- Authority Metrics (DP, DR, DA). Weakly negative correlation with visibility across all models.

- Co-Mention Frequency. Strong negative correlation, confirming that competition intensity has the highest influence.

- Win Rate (Visibility = 100). Modest positive correlation, showing that consistent top appearances slightly reinforce visibility patterns.

Each factor contributes differently. Authority metrics reflect traditional SEO hierarchy but fail to predict performance in generative environments. Co-Mention Frequency captures competitive density and proves to be the most reliable indicator of visibility outcomes. Win Rate highlights citation consistency but lacks predictive strength compared to competition dynamics.

Competitive context emerges as the strongest measured predictor of visibility, and the query-level results are consistent with topical alignment outweighing authority. The results suggest that LLMs reward contextual relevance and diversity over authority, reshaping discovery around information quality rather than backlink-derived reputation.

What Should SEO and AI Teams Do with These Findings?

The analysis confirms that DP, DR, and DA have weak, mostly negative relationships with visibility inside large language models. Traditional authority metrics retain value for Google Search, but they lose predictive strength in AI-generated responses. SEO and AI teams need to adapt measurement, strategy, and optimization focus accordingly.

1. Treat LLM Visibility as a New Performance Layer

Monitor the LLM Visibility Score alongside traditional metrics like Domain Power and traffic data. Authority continues to influence organic rankings, but visibility across LLMs represents a separate layer of brand exposure that operates under different logic.

2. Optimize for Contextual Relevance

Increase visibility by refining topic alignment, semantic clarity, and structured context within content. LLMs appear to prioritize relevance and informational precision over backlink quantity. Pages that answer prompts comprehensively and align closely with query intent are more likely to be cited.

3. Rethink Link-Building Priorities

Re-evaluate link-building investments. Focus on contextual and thematic connections instead of pursuing authority inflation. The findings show that high Domain Rating or Domain Authority alone does not increase citation likelihood inside AI models.

4. Build Entity-Focused Content Clusters

Develop content clusters that define entity relationships between concepts, brands, and topics. Consistent internal structure, schema markup, and topical depth improve how LLMs interpret subject expertise and determine relevance within generated responses.
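As one illustration of schema markup for entity clarity, the sketch below builds a schema.org `Organization` object as JSON-LD with Python's `json` module, using the `sameAs` and `knowsAbout` properties to tie the brand to its profiles and topics. The brand name, URLs, and topics are placeholders, and the study did not measure the effect of schema markup on LLM citations, so this shows the format rather than a tested tactic.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str], topics: list[str]) -> str:
    """Return a schema.org Organization description serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,      # other profiles that refer to the same entity
        "knowsAbout": topics,   # topics the organization covers
    }
    return json.dumps(data, indent=2)


# Placeholder values, not a real brand.
markup = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    same_as=["https://www.linkedin.com/company/example-co"],
    topics=["technical SEO", "LLM visibility"],
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```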

5. Benchmark Across Platforms

Compare visibility trends across ChatGPT, Gemini, and Perplexity. Cross-model variation allows teams to identify where content structure and topic representation perform strongest. Tracking these signals reveals how models differ in evaluating trust and relevance.
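A per-model benchmark can be as simple as grouping one domain's appearances by model and comparing mean visibility and Win Rate. The tuple layout `(model, domain, visibility_score)`, the function name `benchmark_by_model`, and the sample records are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def benchmark_by_model(records, domain):
    """records: iterable of (model, domain, visibility_score) tuples.

    Returns {model: (mean visibility, win rate, appearances)} for one domain.
    """
    per_model = defaultdict(list)
    for model, d, score in records:
        if d == domain:
            per_model[model].append(score)
    return {
        m: (mean(s), sum(x == 100 for x in s) / len(s), len(s))
        for m, s in per_model.items()
    }


# Hypothetical records, not study data.
records = [
    ("ChatGPT", "example.com", 100),
    ("ChatGPT", "example.com", 60),
    ("Gemini", "example.com", 40),
    ("Perplexity", "example.com", 100),
    ("Perplexity", "other.com", 90),
]
report = benchmark_by_model(records, "example.com")
best = max(report, key=lambda m: report[m][0])
print(report, "strongest model:", best)
```

Keeping the appearance count next to each average helps flag models where a high mean rests on only one or two citations.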

What Are the Limitations of the Study?

Every dataset carries scope and timing constraints. The limitations of this analysis are listed below.

- Visibility data was collected from only 3 large language models (OpenAI, Perplexity, and Gemini) within a two-month period.

- The study did not assess sentiment or contextual tone of domain mentions within model responses.

- The Win Rate metric captured only full-visibility appearances (score = 100), omitting partial or weighted inclusions.

- Cross-model comparison excludes Claude and SearchGPT, which limits generalization across the broader LLM landscape.

Despite these limits, the findings remain consistent across all analyzed systems. The correlations between authority and visibility stay neutral to weakly negative, reinforcing that LLM visibility depends more on contextual relevance than on traditional authority metrics.