Large language models (LLMs) cite external domains constantly and across many industries, but the industry distribution behind these citations remains unclear. Citation patterns matter because they influence credibility, source diversity, and GEO strategy inside AI-generated answers.

SEO professionals often assume that LLMs balance institutional, academic, and commercial sources. Others believe that retrieval-based systems amplify news, reviews, and product ecosystems. The missing piece is large-scale evidence showing which industries dominate citations across major LLM platforms.

This study analyzes 5.17 million citations across 907,003 unique domains generated by OpenAI, Gemini, and Perplexity between August 25 and September 26, 2025. The analysis classifies each domain into 24 major industry categories and measures industry share across platforms, query intents, and content formats.

The results reveal that LLMs rely primarily on commercial domains, while academic and government domains remain underrepresented. The findings confirm that LLM citations reflect the structure of the public web rather than institutional authority.

Methodology – How Did We Measure Industry Distribution in LLM Citations?

This experiment measures how LLMs cite external domains across different industries. The analysis evaluates whether LLMs reference commercial ecosystems, institutional sources, or media networks when generating answers.

This experiment matters because it shows which sectors of the web gain visibility inside AI-generated answers. The industry distribution of citations reveals whether LLMs elevate institutional sources or default to commercial ecosystems.

The dataset integrates 2 main components listed below.

- LLM response dataset. Contains 5.17 million citations from OpenAI, Gemini, and Perplexity between August 25 and September 26, 2025.

- Domain classification dataset. Provides industry labels for 907,003 unique domains mapped into 24 categories.

The preprocessing steps are listed below.

1. Normalize every URL to its base domain to ensure consistent matching.

2. Classify each domain with an LLM prompted to assign an industry.

3. Validate 200 randomly sampled classifications to confirm accuracy.

4. Remove domains labeled as Other or with missing labels.

5. Merge normalized domains with industry assignments to form the final dataset.
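The preprocessing steps above can be sketched roughly as follows. This is a minimal illustration, not the pipeline used in the study: the function names, the sample URLs, and the sample labels are hypothetical, and the normalization only lowercases the host and strips a leading `www.` (a production pipeline would likely resolve the registrable domain with the Public Suffix List).

```python
import random
from urllib.parse import urlsplit


def base_domain(url: str) -> str:
    """Reduce a URL to a lowercase host without a leading 'www.' (simplified)."""
    # urlsplit only fills the host when a scheme or a leading '//' is present.
    if "://" not in url and not url.startswith("//"):
        url = "//" + url
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def validation_sample(labels: dict, k: int = 200, seed: int = 0) -> list:
    """Draw up to k random (domain, industry) pairs for manual review."""
    rng = random.Random(seed)
    items = sorted(labels.items())
    return rng.sample(items, min(k, len(items)))


def build_dataset(citation_urls: list, labels: dict) -> list:
    """Join normalized domains to labels, dropping 'Other' and missing labels."""
    rows = []
    for url in citation_urls:
        domain = base_domain(url)
        industry = labels.get(domain)
        if not industry or industry == "Other":
            continue
        rows.append((domain, industry))
    return rows


# Hypothetical inputs for illustration only.
citation_urls = [
    "https://www.reuters.com/world/",
    "http://bbb.org/us/ca",
    "unknown.example/page",
]
labels = {
    "reuters.com": "News and Media",
    "bbb.org": "Review and Directory",
    "unknown.example": "Other",
}
dataset = build_dataset(citation_urls, labels)
```

In this sketch, the domain labeled Other is dropped before the merge, which mirrors step 4.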

The feature engineering integrates 3 steps.

1. Group domains by top-level extensions for .com, .org, .gov, .edu, and regional TLDs.

2. Map industries into 4 source groups (Commercial, Academic and Government, News and Media, Social and Blog).

3. Convert timestamps into monthly periods to support temporal segmentation.
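A rough sketch of these three features is shown below. It rests on assumptions that the study does not state: two-letter TLDs are treated as regional, every industry outside the three named non-commercial groups is mapped to Commercial, and timestamps are assumed to be ISO 8601 strings. All function names are hypothetical.

```python
from datetime import datetime

GENERIC_TLDS = {"com", "org", "gov", "edu"}

# Assumption: any industry not listed here belongs to the Commercial group.
NON_COMMERCIAL_GROUPS = {
    "Academic and Government",
    "News and Media",
    "Social and Blog",
}


def tld_group(domain: str) -> str:
    """Bucket a domain by its top-level extension."""
    tld = domain.rsplit(".", 1)[-1].lower()
    if tld in GENERIC_TLDS:
        return "." + tld
    # Assumption: two-letter TLDs are country codes and count as regional.
    return "regional" if len(tld) == 2 else "other"


def source_group(industry: str) -> str:
    """Collapse an industry label into one of the 4 source groups."""
    return industry if industry in NON_COMMERCIAL_GROUPS else "Commercial"


def month_period(timestamp: str) -> str:
    """Convert an ISO 8601 timestamp into a YYYY-MM period label."""
    return datetime.fromisoformat(timestamp).strftime("%Y-%m")


example = (
    tld_group("productreview.com.au"),
    source_group("Healthcare"),
    month_period("2025-09-26T08:00:00"),
)
```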

This methodological design enables both high-level and granular analysis. The structure isolates whether LLMs select sources from commercial ecosystems or institutional environments and reveals how citation patterns shift across platforms and query intents.

What Is the Final Takeaway?

The analysis demonstrates that LLMs construct their answers from commercial ecosystems. The study shows that industry distribution remains anchored in Technology, Consumer and Retail, Healthcare, Business and Finance, and Construction and Manufacturing.

These sectors dominate because they publish the largest volume of accessible, indexable content. LLMs do not assign these industries special weight. LLMs mirror what exists on the public web.

Platform architecture shapes citation behavior. Each model retrieves, stores, and prioritizes information differently.

Perplexity produces the strongest commercial concentration due to real-time retrieval. OpenAI draws from a broader set of categories and cites News and Media more often. Gemini references Technology and institutional sources at a higher proportion than the other 2 models, but at a smaller overall scale.

Institutional domains remain limited. Academic and government sources appear infrequently across every platform. Their low representation reflects the scarcity and accessibility constraints of institutional material on the public web rather than model preference.

The direction of these findings remains consistent. Commercial ecosystems drive citation volume, media outlets provide contextual grounding, and institutional sources remain secondary.

Search Atlas networks participate in this structure.

Signal Genesys reaches 86% network visibility (258 of its 299 partner domains appear in the sample), and 3,512 Link Laboratory publisher domains appear across 85,357 citations. These results show that syndicated news partners and large publisher networks align with the types of domains LLMs reference most often.

LLMs reproduce the structure of the open web, and that pattern holds across platforms and query intents.

How Do Domain Industries Appear Across LLM Platforms?

I, Manick Bhan, together with the Search Atlas research team, analyzed 5.17M LLM citations to understand how industries appear across OpenAI, Gemini, and Perplexity. The breakdown of how models distribute citations across sectors is outlined below.

Data Distribution

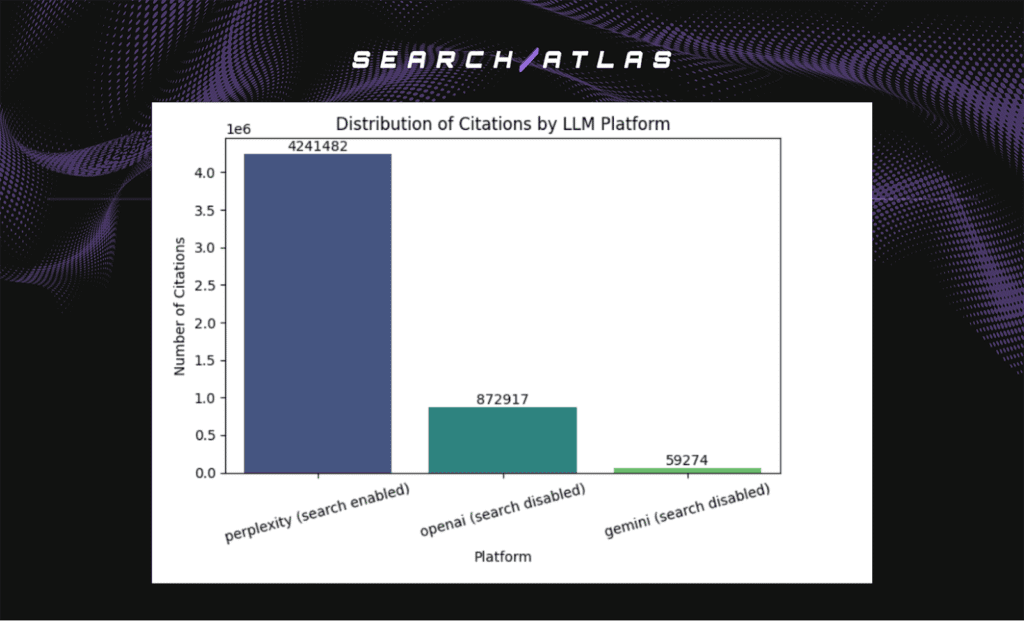

Citation volume varies across platforms. The distribution matters because it shapes every pattern in the results. The distribution of citations by LLM platform is shown below.

- Perplexity distribution. 80%

- OpenAI distribution. 15%

- Gemini distribution. 5%

Perplexity generates the largest pool of citations in the dataset. OpenAI contributes a smaller but stable fraction. Gemini contributes the smallest share. This distribution informs every platform-level comparison in the sections below.
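To give a sense of scale, the shares above can be converted into approximate citation counts. Both the 5.17 million total and the percentages are rounded figures from this study, so the counts below are estimates only, and the variable names are hypothetical.

```python
TOTAL_CITATIONS = 5_170_000  # rounded total reported in the study

# Rounded platform shares, in percent.
PLATFORM_SHARE_PCT = {"Perplexity": 80, "OpenAI": 15, "Gemini": 5}

# Integer arithmetic avoids floating-point noise in the estimates.
approx_counts = {
    platform: TOTAL_CITATIONS * pct // 100
    for platform, pct in PLATFORM_SHARE_PCT.items()
}

# How many times larger the Perplexity pool is than the Gemini pool.
perplexity_to_gemini = PLATFORM_SHARE_PCT["Perplexity"] / PLATFORM_SHARE_PCT["Gemini"]

for platform, count in approx_counts.items():
    print(f"{platform}: ~{count:,} citations")
```

Because the Perplexity pool is roughly 16 times the size of the Gemini pool, pooled totals are dominated by Perplexity, which is one reason to compare within-platform shares rather than raw counts.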

Industry Representation in LLM Citations

Industry coverage reveals which sectors appear most often in model responses. Industry presence matters because it determines whose information enters AI-generated visibility.

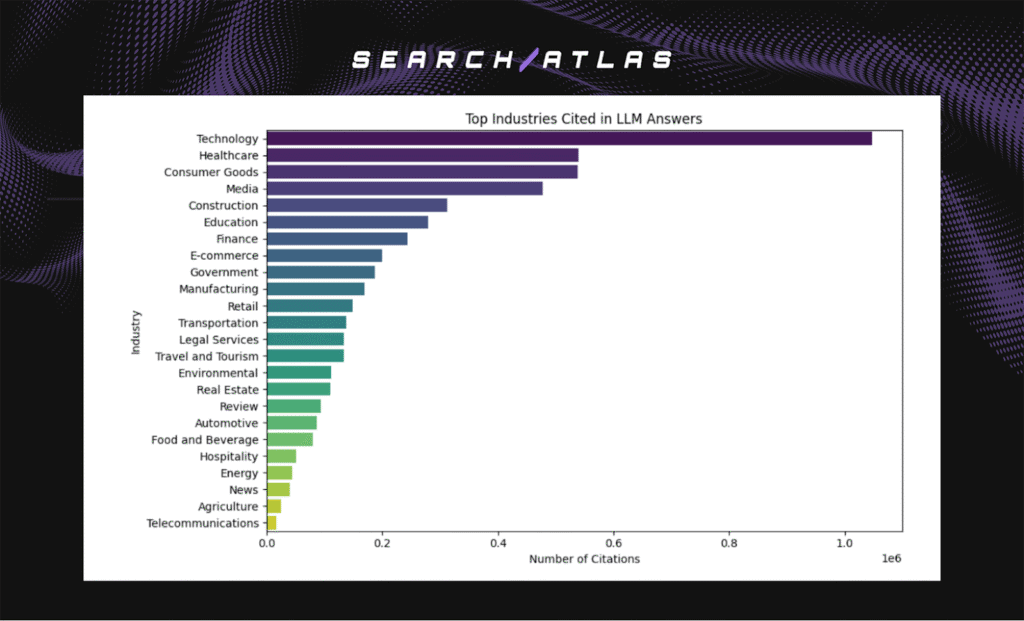

The headline results are shown below.

- Dominant sectors. Technology, Healthcare, Consumer Goods

- Secondary sectors. Media, Construction

- Sparse sectors. Academic, Government, Agriculture, Telecommunications

Technology, Healthcare, and Consumer Goods dominate the citations across the dataset. Media and Construction follow with moderate representation. Academic and government domains appear infrequently, which shows that institutional material receives far less exposure than commercial ecosystems.

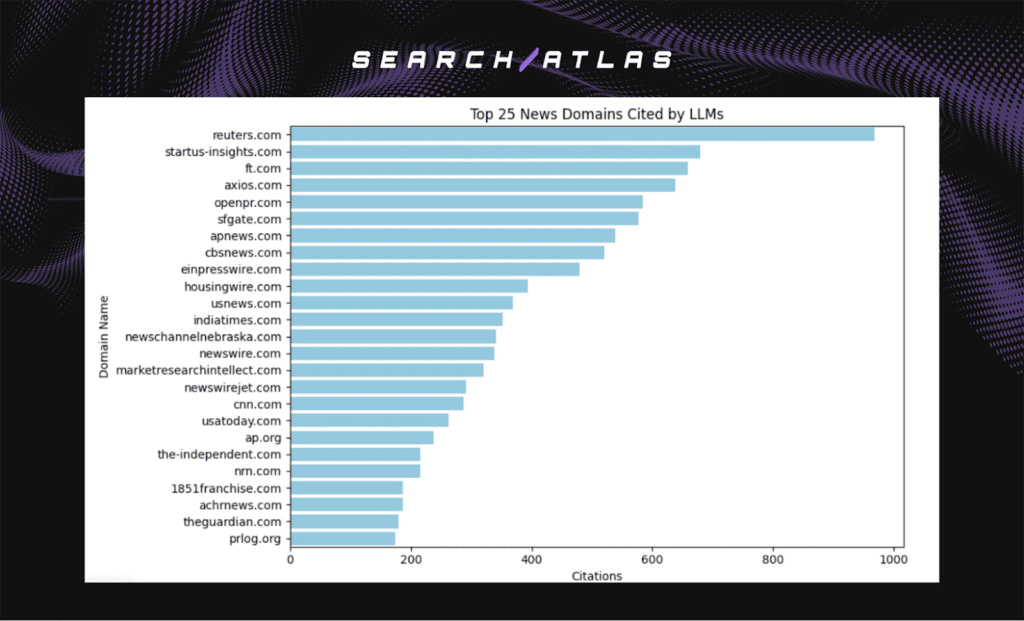

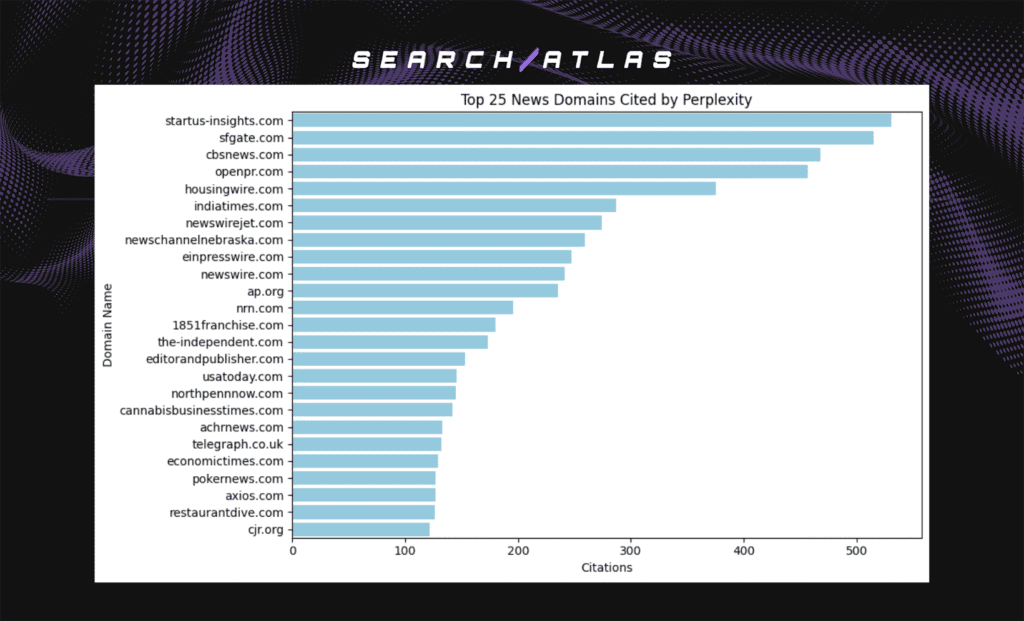

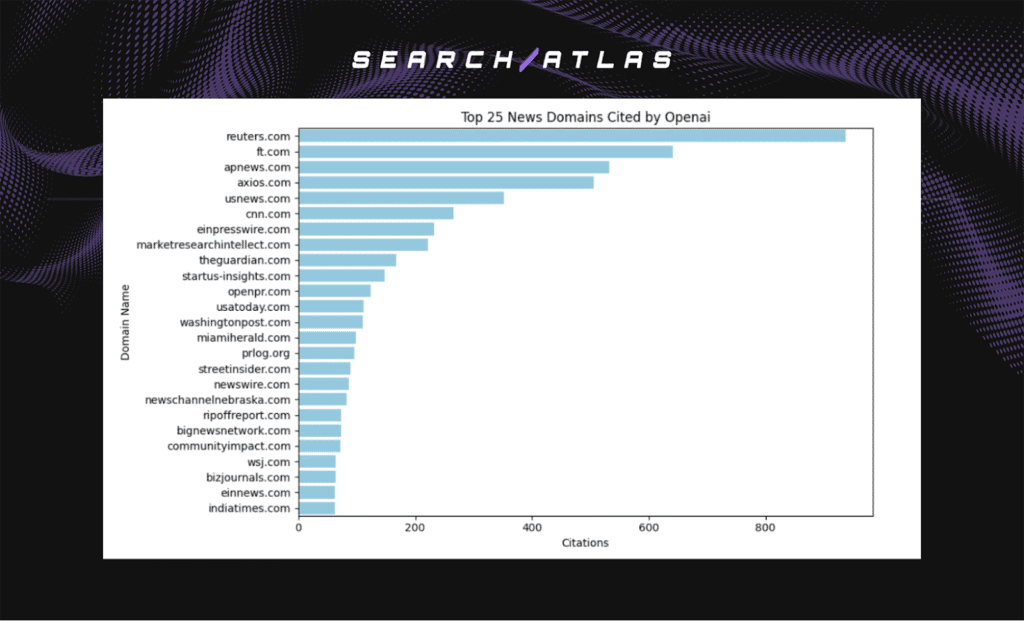

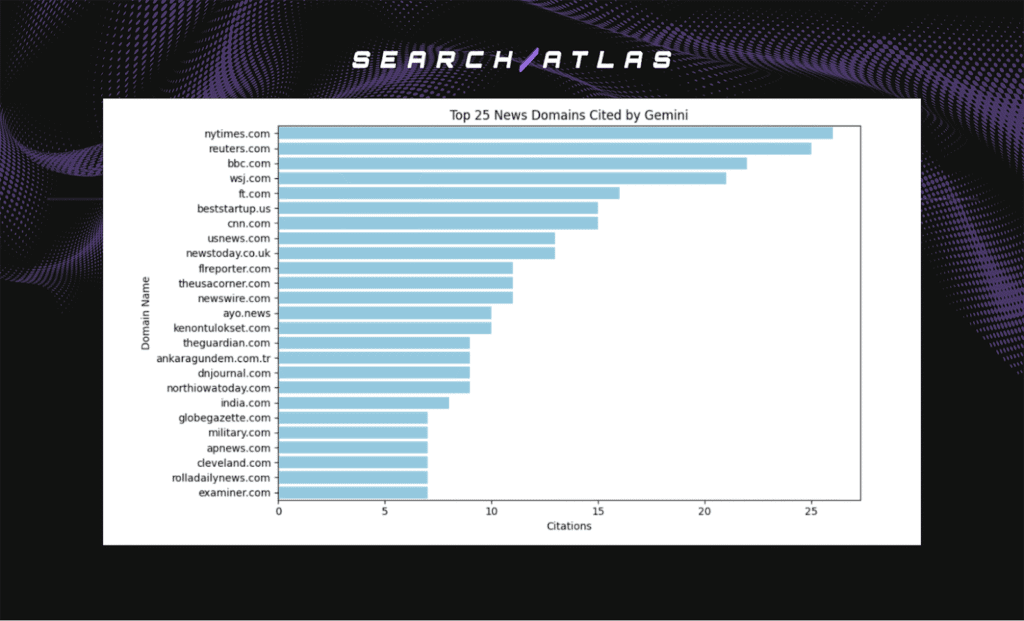

News Domains

News citations show which publishers shape factual grounding across platforms. News sourcing matters because it influences how models present timely or authoritative information.

The headline results are shown below.

- All 3 models. reuters.com, ft.com, cnn.com, apnews.com, usnews.com

- Perplexity pattern. Broad pattern of global, regional, and niche outlets

- OpenAI pattern. Concentrated set of established international publishers

- Gemini pattern. Narrow set of top-tier outlets with minimal long-tail reach

Perplexity cites a wide spectrum of news sources, which range from global publications to regional outlets and industry-specific sites. OpenAI references a tighter group of prominent publishers known for editorial strength. Gemini shows the smallest citation pool and focuses on major global outlets, which reflects narrower retrieval breadth.

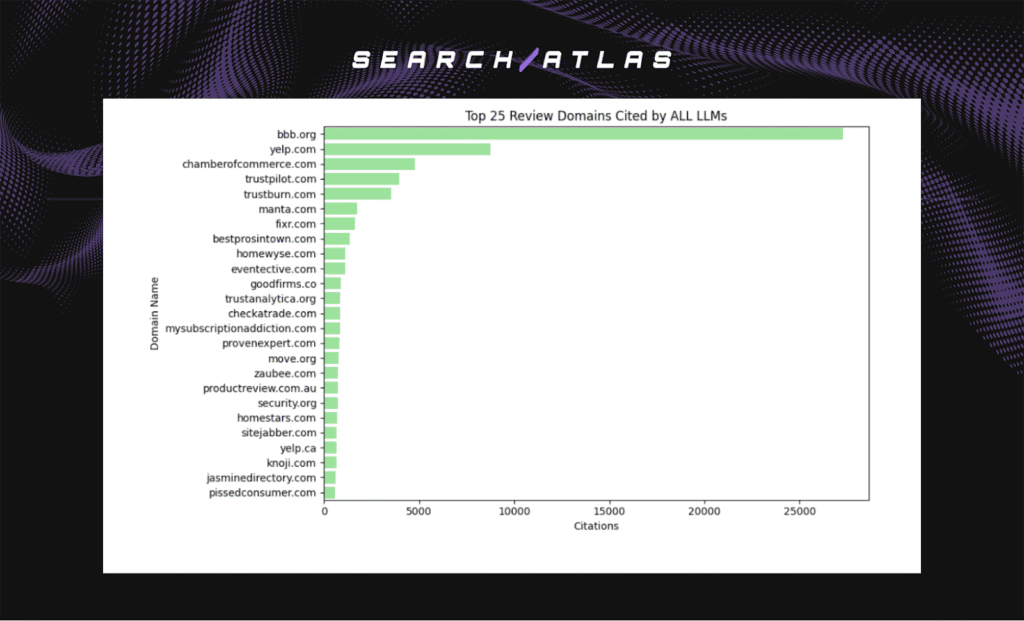

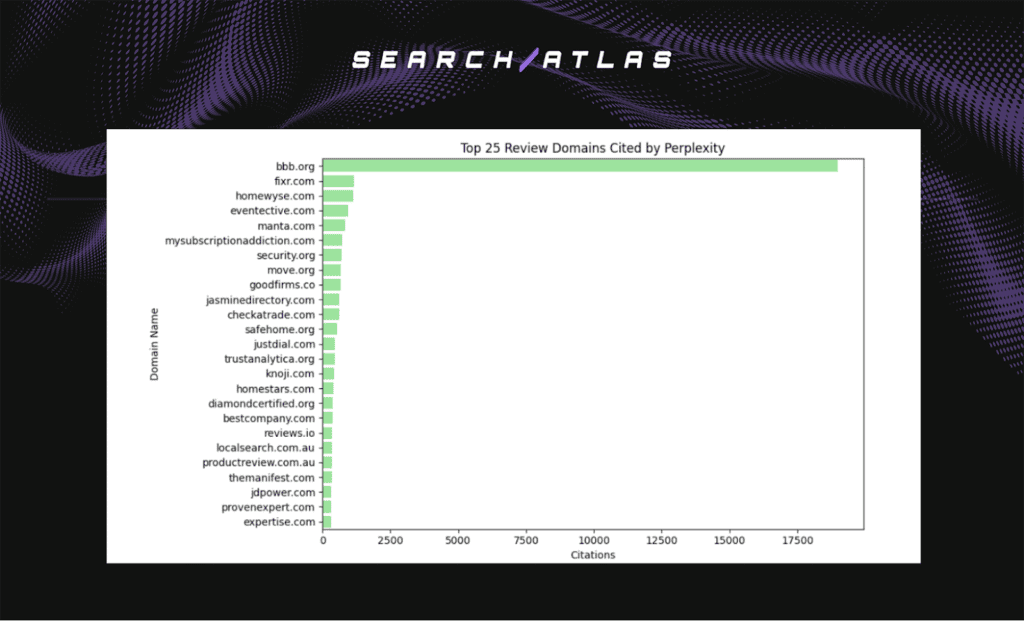

Review Domains

Review citations reveal how models reference evaluations of businesses, products, or services. Review sources matter because they influence credibility signals inside generated answers.

The headline results are shown below.

- Dominant domain. bbb.org

- Secondary domains. Yelp.com, chamberofcommerce.com

- Broader set. Trustpilot, Manta, Trustburn

- Direction of trend. Strong reliance on structured review directories

BBB.org dominates every platform in the review category. ChamberofCommerce.com and Yelp.com follow at smaller but consistent levels. Trustpilot, Manta, and Trustburn appear across many queries, which shows consistent reliance on verified review ecosystems rather than informal or unmoderated commentary.

How Do News Citations Differ Across Platforms?

News domains appear across all 3 models, but each platform shows a distinct pattern in how it references journalism and syndicated reporting. The distribution reveals whether a model leans toward global and regional publishers or press-release networks when grounding its answers.

The top 25 News Domains cited per platform are listed below.

Perplexity

Perplexity cites the widest range of news sources.

Perplexity distribution includes global outlets, regional publishers, and a long tail of PR syndication networks. This pattern shows that Perplexity draws from a broad public web footprint rather than a narrow set of authoritative news domains.

OpenAI

OpenAI focuses on established journalism.

Reuters, FT, APNews, and Axios dominate OpenAI citations, which shows a consistent preference for high-credibility global publishers. OpenAI also references analytical and industry commentary sites (MarketResearchIntellect.com and TheGuardian.com).

Gemini

Gemini shows the most selective pattern.

NYTimes.com, Reuters.com, BBC.com, WSJ.com, and FT.com appear frequently in Gemini, while the overall citation volume remains low. This selectivity points to tighter retrieval filters that prioritize editorially verified news over breadth.

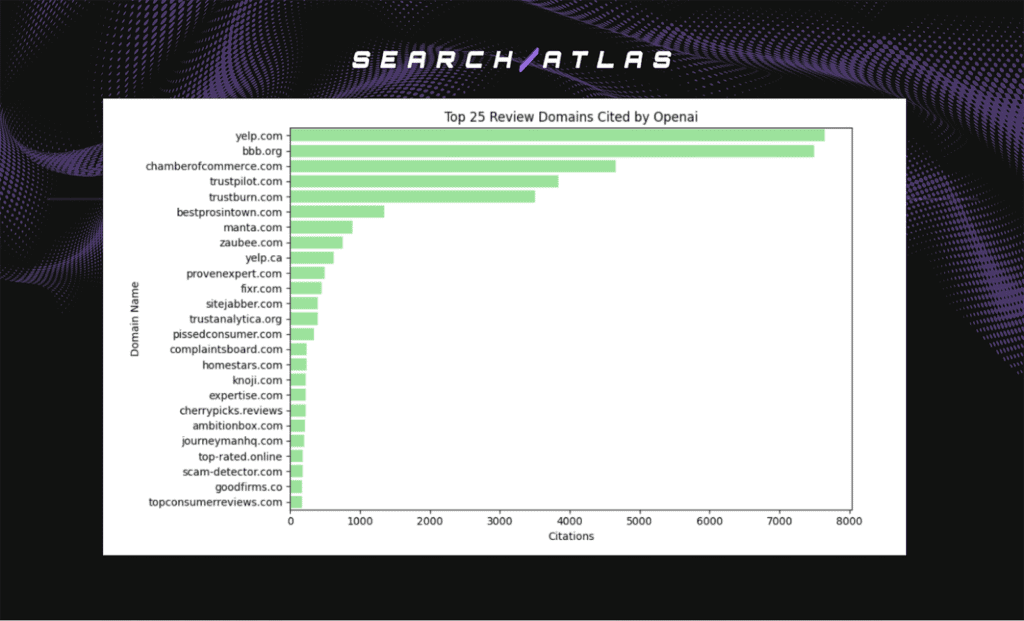

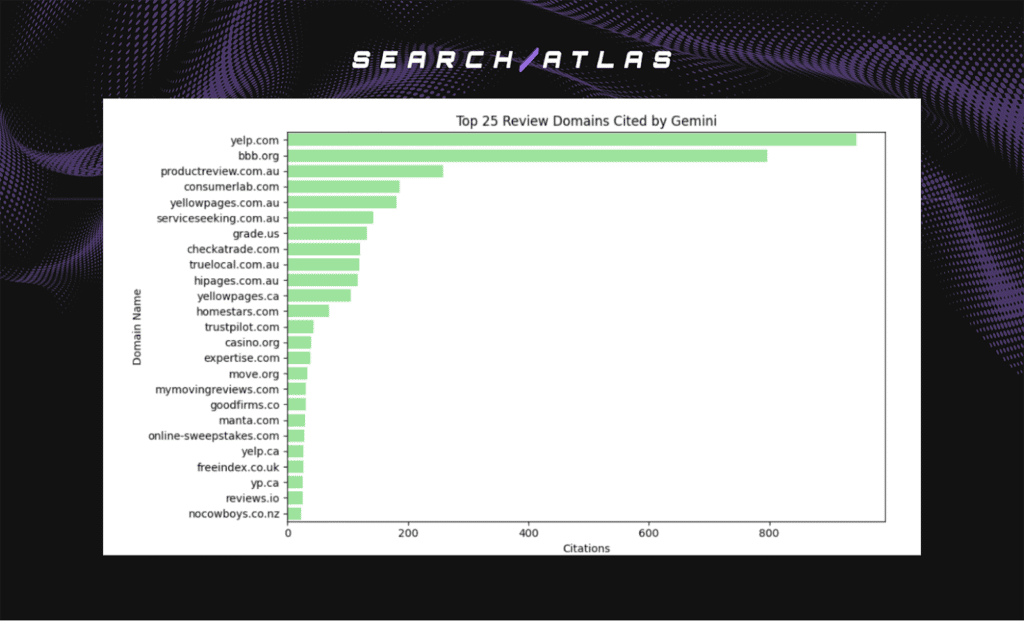

How Do Review Citations Differ Across Platforms?

Review domains appear across LLM responses as credibility anchors for products, services, and local businesses. Each model references different types of evaluation sources, which reveal whether it favors structured business directories or consumer-oriented feedback platforms.

The top 25 review domains cited per platform are listed below.

Perplexity

Perplexity centers its review citations on BBB.org.

The volume far exceeds all other review domains in its distribution. Fixr.com, Homewyse.com, and Eventective.com appear at much lower frequencies, which shows that Perplexity relies primarily on structured business and contractor directories rather than user-driven review ecosystems.

OpenAI

OpenAI spreads its citations across consumer and business review platforms.

Yelp.com and BBB.org lead the distribution, followed by ChamberofCommerce.com, Trustpilot.com, and Trustburn.com. This pattern reflects a balanced orientation toward public feedback aggregators and business credibility sources.

Gemini

Gemini presents a smaller but distinctive review pattern.

Yelp.com and BBB.org remain prominent, and several Australian platforms (ProductReview.com.au and ServiceSeeking.com.au) appear in meaningful positions. This distribution indicates that Gemini emphasizes localized and service-based review sources over large global aggregators.

How Do Industry Patterns Appear Across LLM Outputs?

Industry patterns reveal which sectors gain visibility inside AI-generated answers. This analysis measures how often each industry appears and how that presence shifts across platforms and query intents. Industry distribution matters because it shapes which parts of the web influence responses in major LLM systems.

This section outlines the main findings below.

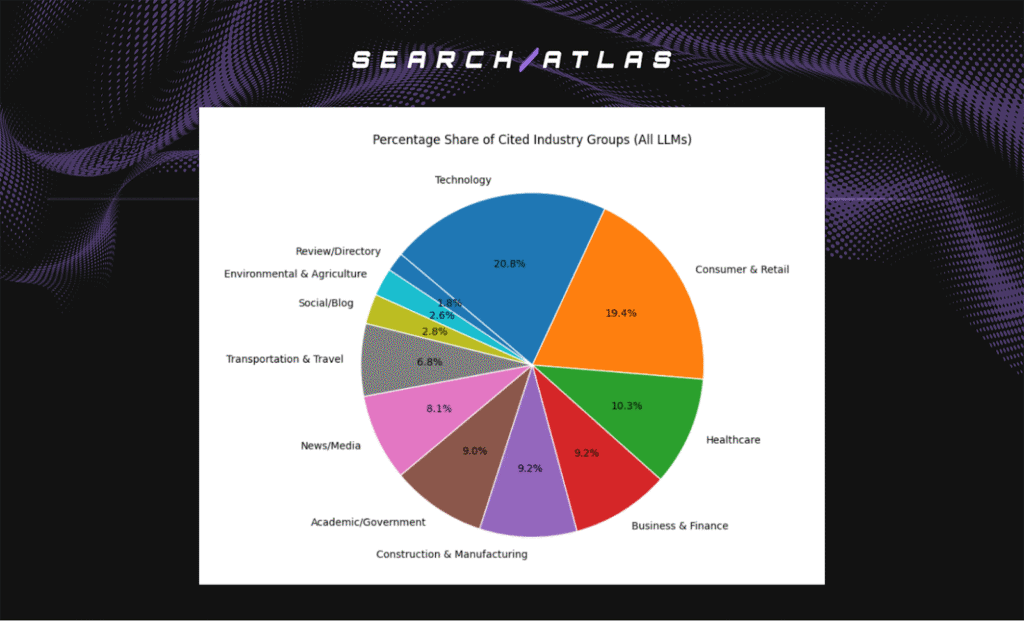

Which Industries Do LLMs Cite Most?

The analysis measures which industries appear most often in LLM responses. Industry presence shapes which sectors of the web gain visibility inside AI-generated answers.

The headline results are shown below.

Dominant sectors are listed below.

- Technology. 20.8%

- Consumer and Retail. 19.4%

- Healthcare. 10.3%

- Business and Finance. 9.2%

- Construction and Manufacturing. 9.2%

Secondary sectors are listed below.

- News and Media. 8.1%

- Transportation and Travel. 6.8%

Sparse sectors are listed below.

- Academic and Government. 9.0%

- Environmental and Agriculture. 2.6%

- Social and Blog. 2.8%

- Review and Directory. 1.8%

Technology holds the largest share of citations across the dataset. Consumer and Retail, Healthcare, Business and Finance, and Construction and Manufacturing follow with strong representation. Together, these sectors account for nearly 70% of all citations.
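The shares listed above can be checked with a few lines of arithmetic. The percentages are copied from the lists in this section, and the variable names are only illustrative.

```python
# Industry shares in percent, as reported in this section.
INDUSTRY_SHARE_PCT = {
    "Technology": 20.8,
    "Consumer and Retail": 19.4,
    "Healthcare": 10.3,
    "Business and Finance": 9.2,
    "Construction and Manufacturing": 9.2,
    "News and Media": 8.1,
    "Transportation and Travel": 6.8,
    "Academic and Government": 9.0,
    "Environmental and Agriculture": 2.6,
    "Social and Blog": 2.8,
    "Review and Directory": 1.8,
}

DOMINANT = [
    "Technology",
    "Consumer and Retail",
    "Healthcare",
    "Business and Finance",
    "Construction and Manufacturing",
]

# Combined share of the five dominant sectors.
dominant_total = round(sum(INDUSTRY_SHARE_PCT[name] for name in DOMINANT), 1)

# Sanity check that the listed shares cover the whole dataset.
overall_total = round(sum(INDUSTRY_SHARE_PCT.values()), 1)

print(dominant_total)  # 68.9
print(overall_total)   # 100.0
```

The five dominant sectors sum to 68.9%, which matches the "nearly 70%" figure, and the listed shares add up to 100%.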

Academic and Government sources appear far less often, which shows that institutional material receives limited visibility inside LLM outputs. News and Media appear at mid levels, while Social, Blog, Review, and niche industry categories make up a small portion of total citations.

The distribution confirms that LLMs rely on commercial and industry-driven ecosystems more than institutional or peer-reviewed sources.

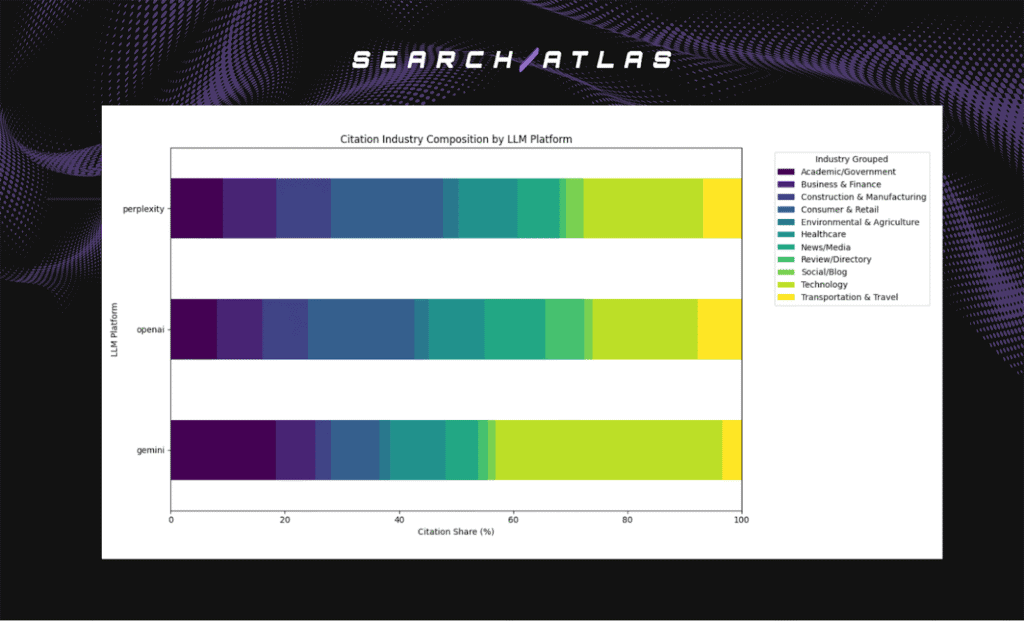

What Is the Industry Citation Composition by LLM Platform?

Industry composition defines which parts of the web gain visibility inside AI-generated responses. The analysis shows which sectors each model relies on when generating answers and how platform design shapes the mix of commercial, institutional, and media sources.

The breakdown of how each platform allocates citations across industries is shown below.

Each platform shows a distinct industry signature shaped by its system design.

- Perplexity relies on live retrieval.

- OpenAI blends internal reasoning with curated sources.

- Gemini produces narrower but more institution-oriented citations.

- Technology, Consumer and Retail, and Business and Finance dominate all 3 platforms.

- Academic and Government remain under 10% everywhere.

Perplexity: Commercial Industries Dominate

- Consumer and Retail, Construction and Manufacturing, and Technology account for 70%+ of Perplexity citations.

- Academic and Government remain below 5%.

- Social and Blog categories appear rarely.

Perplexity shows the strongest commercial skew because real-time retrieval pulls from the public web’s most abundant sectors.

OpenAI: Broadest Industry Spread With Higher News Presence

- News and Media exceed 10%, the highest of any platform.

- Technology, Consumer and Retail, and Business and Finance form the core of its distribution.

- Academic and Government remain below 10%, but appear more frequently than in Perplexity.

OpenAI produces the most balanced mix, combining commercial sectors with a notable concentration of journalistic sources.

Gemini: Narrower Footprint With Higher Institutional Weight

- Technology holds the largest share of citations.

- News and Media remain low, typically under 5%.

- Academic and Government rise to just under 10%, higher than Perplexity and OpenAI.

Gemini emphasizes Technology and institutional domains, creating a citation base with stronger editorial and authoritative signals.

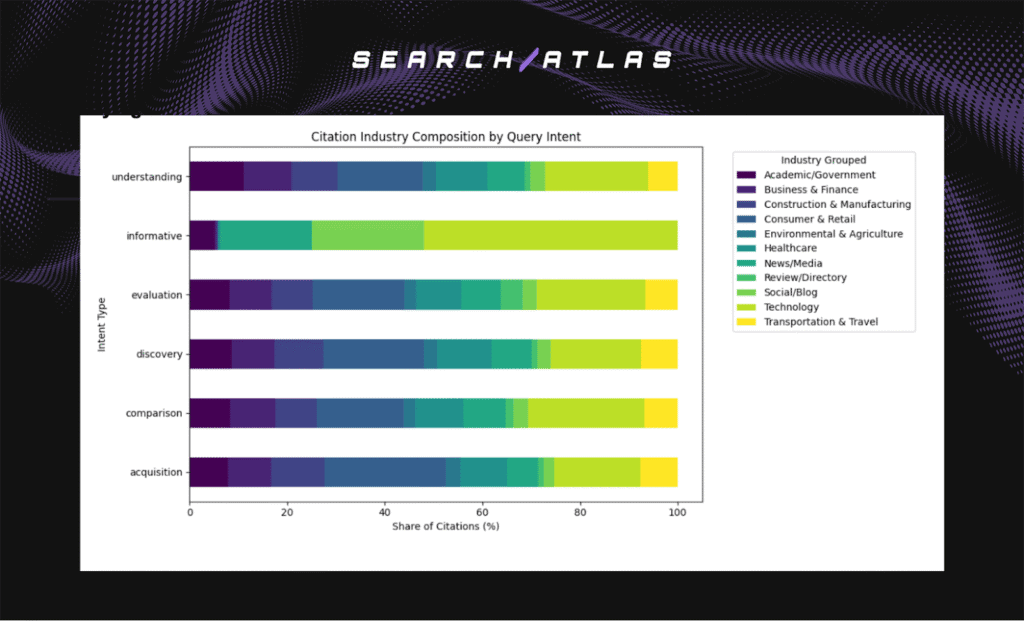

Which Industries Appear Across Different Query Intents?

Query intent shapes how models gather information, yet the overall industry mix remains anchored in the structure of the public web. Intent matters because it reveals which sectors LLMs rely on for different informational goals.

The headline results are shown below.

- News and Media rise for informative and understanding intents.

- Academic and Government stay low across every intent type.

- Consumer and Retail and Business and Finance remain strong secondary contributors.

- Healthcare, Environmental, and Construction and Manufacturing appear at moderate levels between 5% and 15%.

- Technology is the largest cited industry for every intent category. This happens because technology content is abundant, well-structured, and relevant to a wide range of questions.
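One way to produce an intent-level breakdown like the one above is to count industries within each intent and normalize by the intent total. The sketch below uses a handful of hypothetical records, not data from the study, and the function name is an assumption.

```python
from collections import Counter, defaultdict


def share_by_intent(records):
    """Return {intent: {industry: share}} from (intent, industry) pairs."""
    counts = defaultdict(Counter)
    for intent, industry in records:
        counts[intent][industry] += 1
    shares = {}
    for intent, industry_counts in counts.items():
        total = sum(industry_counts.values())
        shares[intent] = {
            industry: n / total for industry, n in industry_counts.items()
        }
    return shares


# Hypothetical records for illustration only.
records = [
    ("Comparison", "Technology"),
    ("Comparison", "Technology"),
    ("Comparison", "Consumer and Retail"),
    ("Comparison", "Business and Finance"),
    ("Informative", "Technology"),
    ("Informative", "Technology"),
    ("Informative", "News and Media"),
]

shares = share_by_intent(records)

# Most-cited industry for each intent.
top_industry = {
    intent: max(by_industry, key=by_industry.get)
    for intent, by_industry in shares.items()
}
```

Normalizing within each intent keeps high-volume intents from dominating the comparison.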

Understanding Queries

Understanding prompts mirror informative queries and include increased presence from News/Media and Social/Blog. The industries listed below appear most often for this intent.

- Journalism

- Reporting and informational outlets

- Publicly authored explanations

- Social platforms

Technology remains the dominant category. Academic/Government contributes slightly more often than in other intents, though still at a very small overall share.

Informative Queries

Informative prompts continue to draw heavily from Technology, but show a greater presence of News/Media and Social/Blog than other intents.

The industries listed below appear more frequently in this category.

- Journalism

- Reporting and informational outlets

- Public commentary and social platforms

- Software companies and tech platforms

Institutional sources remain rare, and Academic/Government appears only at very small levels.

Evaluation Queries

Evaluation prompts lean toward Technology, Consumer and Retail, and Business and Finance. These queries focus on quality, performance, or suitability. The industries listed below appear most often.

- Software companies

- Tech platforms

- Product pages and e-commerce sources

- Corporate and financial explanations

Healthcare, Environmental and Agriculture, and Construction and Manufacturing contribute at low percentages for evaluations involving specialized or regulated topics.

Discovery Queries

Discovery prompts show a broad footprint but still center on Technology. These queries prompt models to introduce options or explore categories.

The industries listed below appear most often.

- Software companies

- Developer tools

- Tech platforms

- Product documentation

Consumer and Retail appear when discovery involves brands or product categories, while Healthcare, Environmental and Agriculture, and Construction and Manufacturing remain present at low levels.

Comparison Queries

Comparison prompts emphasize Technology most strongly, followed by Consumer and Retail, and Business and Finance. These industries provide the bulk of citations because comparisons involve evaluating alternatives.

The industries listed below appear across this intent.

- Software companies

- Developer tools

- Tech platforms

- Product pages and corporate information

Healthcare, Environmental and Agriculture, and Construction and Manufacturing appear at lower levels when comparisons require subject-specific context.

Acquisition Queries

Acquisition prompts focus on selecting tools, products, or services. These queries draw heavily from Technology, Consumer and Retail, and Business and Finance, since LLMs rely on sources that provide clear decision-making information.

Citations for this intent come from the sources listed below.

- Software companies

- Tech platforms

- Product documentation

- Product pages, e-commerce sites, shopping guides

- Corporate pages and financial service providers

Healthcare, Environmental and Agriculture, and Construction and Manufacturing appear in smaller proportions when the topic involves physical goods or regulated services.

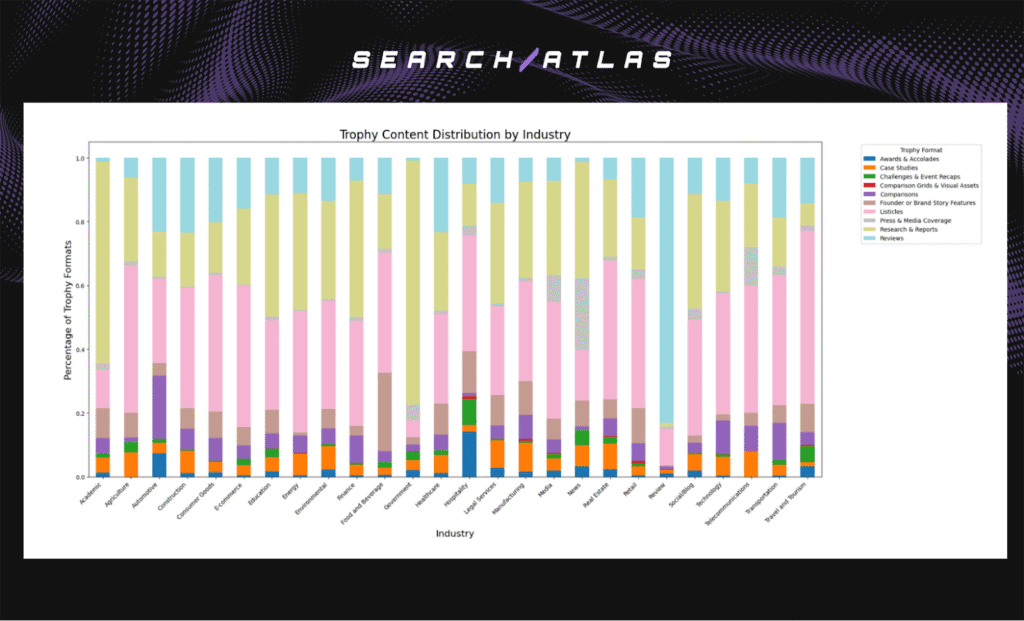

How Do Trophy Content Types Vary Across Industries?

I, Manick Bhan, together with the Search Atlas research team, analyzed 17,351 cited URLs to measure how Trophy Content formats distribute across industries. Trophy Content describes high authority page formats that anchor brand entities across the web.

The breakdown of how listicles, reviews, research content, press coverage, and other formats appear by sector is outlined below.

- Listicles dominate almost every industry. LLMs most frequently cite “Top X” and “Best Y” style pages, regardless of sector. Listicles act as a universal evaluation format across consumer and B2B environments.

- Research and reports concentrate in regulated and technical sectors. Academic, Government, Healthcare, Finance, and Environmental and Agriculture account for the largest share of research-driven citations. These industries rely on structured evidence, formal reports, and documented findings.

- Press coverage spikes in media-heavy categories. Media, News, and Real Estate show noticeably higher proportions of press-oriented citations. LLMs draw on coverage, announcements, and editorial pieces when these industries appear in responses.

- Reviews cluster in consumer-facing verticals. Retail, Consumer Goods, Hospitality, and eCommerce contain the strongest presence of review content. Citation patterns reflect heavy use of experience-based pages where customers evaluate products or services.

- Awards, case studies, and comparisons remain low across most sectors. These formats appear in the dataset but contribute only a small share of citations in most industries. Their role is targeted rather than dominant.

- Each industry follows a predictable Trophy Content profile. Regulated industries lean toward research formats. Consumer industries rely on reviews and listicles. Media categories concentrate on press coverage. Services and B2B sectors introduce case studies and comparisons at lower but consistent levels.

Together, these patterns show that LLMs reference Trophy Content formats that match industry communication habits. The type of authoritative page a sector publishes at scale shapes which citations appear inside LLM-generated answers.

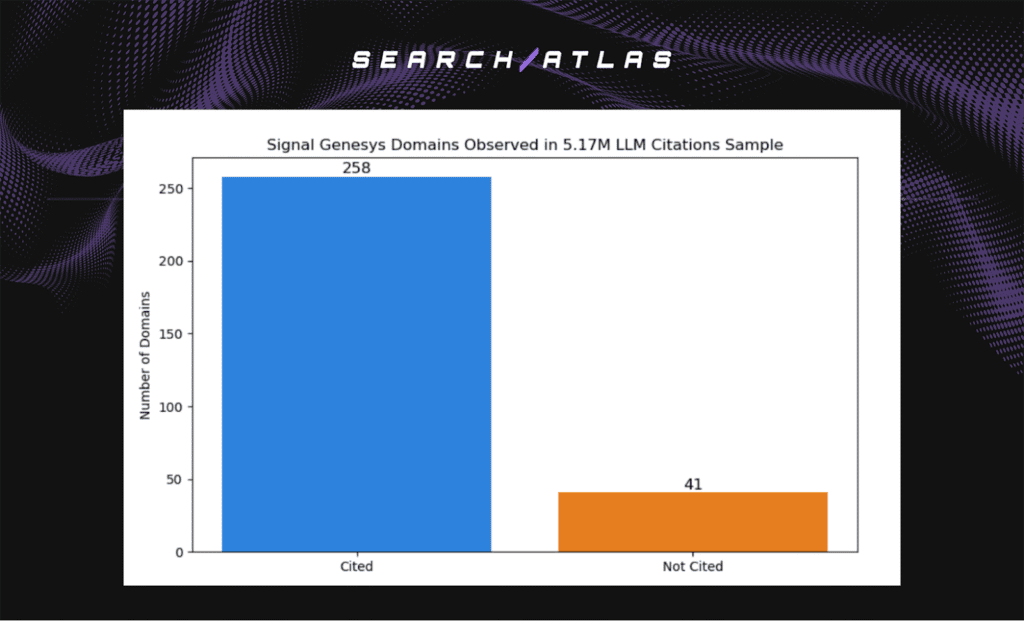

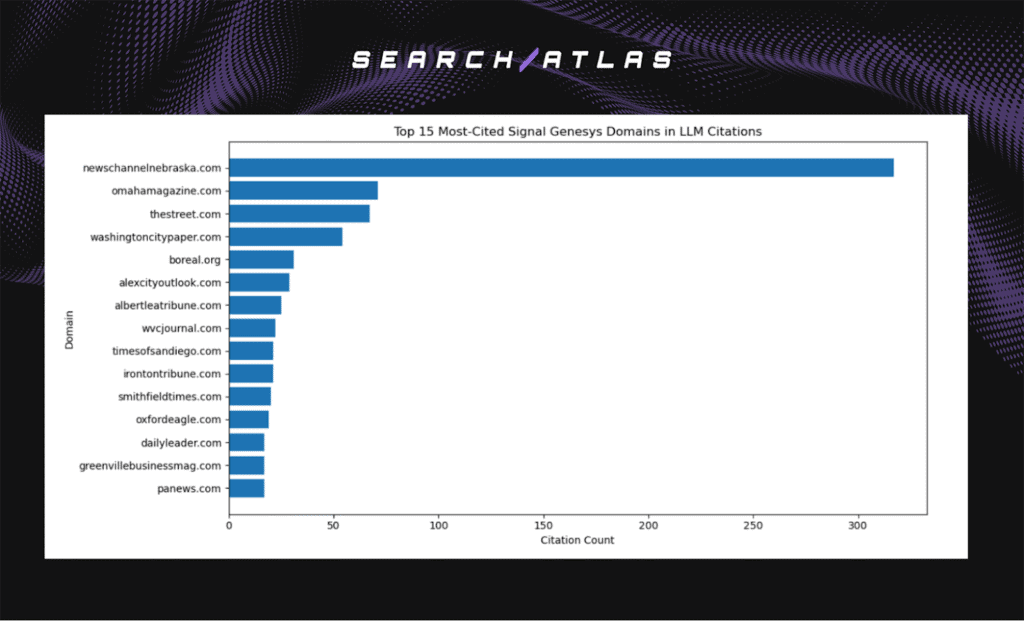

How Often Do Search Atlas Networks Appear in LLM Citations?

I, Manick Bhan, together with the Search Atlas research team, analyzed 5.17M LLM citations to measure how often Search Atlas distribution networks appear in model outputs. The breakdown of how Signal Genesys and Link Laboratory surface in citations is outlined below.

Signal Genesys Visibility

Signal Genesys includes 299 total distribution partners across broadcast outlets, regional news sites, financial networks, and digital publishers.

- 258 of 299 domains appeared at least once in the dataset.

- 86% total network visibility across 5.17M citations.

- 41 domains did not appear in this dataset but surfaced in other prompt contexts.

Across all appearances, LLMs cited Signal Genesys domains 1,258 times. The 15 most-cited Signal Genesys domains fall into the categories listed below.

- Broadcast news affiliates

- Regional and city magazines

- Local newspapers

- Business journals

Signal Genesys domains appear prominently because LLMs often cite regional news outlets and syndicated editorial content, which aligns with where SG-distributed stories frequently publish.

Link Laboratory Visibility

Link Laboratory connects brands to 50,902 publisher domains across a wide network of high-authority websites. The platform functions as a publisher exchange marketplace, enabling sponsored and editorial placements that strengthen brand reach and domain authority.

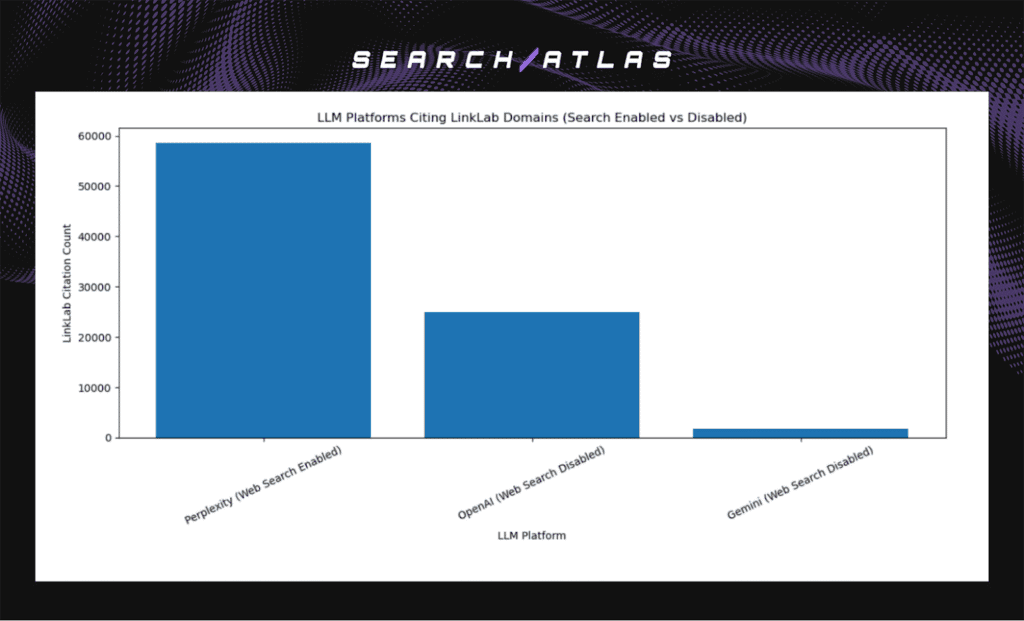

To measure how often these publishers appear organically in LLM responses, we matched all 50,902 LinkLab domains against the 713,615 unique domains from OpenAI, Gemini, and Perplexity.

- 3,512 of 50,902 domains appeared at least once in the dataset.

- 85,357 total URL citations referenced LinkLab publishers across 5.17M citations.
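The matching step described above amounts to a set intersection between a network's domain list and the set of cited domains. The sketch below illustrates this with hypothetical toy domains and a hypothetical function name, then recomputes the coverage rates from the counts reported in this section.

```python
def network_coverage(network_domains, cited_domains):
    """Return the matched domains and the share of the network they represent."""
    network = set(network_domains)
    matched = network & set(cited_domains)
    rate = len(matched) / len(network) if network else 0.0
    return matched, rate


# Hypothetical toy inputs for illustration only.
partner_domains = ["news-a.example", "news-b.example", "news-c.example", "news-d.example"]
cited_domains = ["news-a.example", "bbb.org", "news-c.example", "reuters.com"]
matched, rate = network_coverage(partner_domains, cited_domains)

# Coverage rates recomputed from the counts reported in the study.
signal_genesys_pct = round(258 / 299 * 100, 1)   # 86.3
link_lab_pct = round(3_512 / 50_902 * 100, 1)    # 6.9
```

The two networks differ sharply in size, so the domain-level coverage rates (about 86% of 299 Signal Genesys domains versus about 7% of 50,902 LinkLab domains) are best read alongside the absolute counts.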

The platform-level distribution is listed below.

- Perplexity. Contributed the largest share of citations, driven by its real-time retrieval engine.

- OpenAI (search disabled). Cited LinkLab domains frequently, showing the inherent authority these publishers carry inside model training data.

- Gemini. Surfaced LinkLab domains at a lower volume, reflecting its narrower retrieval pattern.

Link Laboratory domains appear across LLM outputs because models recurrently cite editorial, informational, and authority-weighted publishers, which align with the types of sites where LinkLab content placements commonly occur.

What Should SEO and AI Teams Do with These Findings?

The results show that LLM citations concentrate heavily in commercial web ecosystems, news media, and technology-driven domains. Academic and governmental sources appear infrequently, and each platform favors different industry mixes. These patterns reshape how teams evaluate visibility inside AI-generated environments.

1. Focus on Industries That LLMs Cite Most Often

Technology, Consumer and Retail, and Business and Finance anchor the majority of citations. Teams strengthen visibility by increasing structured coverage in these sectors, where LLMs consistently pull information.

2. Strengthen Media Presence Across News Ecosystems

OpenAI and Perplexity cite news outlets at higher rates than Gemini. Brands expand reach inside AI responses by maintaining steady publication through press networks, syndicated articles, and newsroom-friendly formats.

3. Align Content With Intent-Based Citation Patterns

Acquisition, comparison, and evaluation queries rely on commercial sectors, while informative and understanding prompts introduce more News and Media domains. Designing content around these distinct intent categories increases match likelihood.

4. Produce Content Types That LLMs Commonly Cite

Listicles, reviews, research pages, and press coverage appear across industry groups at different frequencies. Using these high-visibility formats reinforces entity clarity and offers broader citation coverage.

What Are the Limitations of the Study?

Every study has limitations. The limitations of this study are listed below.

- One-Month Timeframe. The dataset covers a single month of LLM responses, which restricts the ability to observe seasonal patterns or long-term shifts.

- Citation ≠ Accuracy. Citations measure visibility and presence but do not indicate factual correctness, endorsement, or sentiment.

- Model Design Differences. Retrieval-enabled and non-retrieval systems operate differently, which affects comparability in citation distribution.

Despite these limits, the analysis establishes a clear baseline showing that LLM citations concentrate in commercial and media ecosystems while institutional sources remain small. Future work needs to extend the timeframe, expand platform coverage, and connect citation presence directly to LLM Visibility scoring and downstream SEO performance.