Schema markup is structured data that helps search engines read and organize information on web pages. It adds machine-readable labels to content, such as business details, products, and articles, so machines can understand what a page contains. As large language models (LLMs) increasingly generate answers directly for users, it remains unclear whether this structured data influences how these systems choose which websites to cite.

Many SEO professionals assume that adding schema markup makes a domain more visible inside AI-generated answers. However, there is limited empirical evidence showing whether schema adoption actually affects how often LLMs reference a domain, or whether LLMs rely on different signals when selecting sources.

This study analyzes the relationship between schema markup coverage and domain visibility across OpenAI, Gemini, and Perplexity. The analysis combines extracted HTML schema data with measurements of how frequently domains appear in LLM-generated responses, comparing visibility across five levels of schema adoption.

The findings show that higher schema coverage does not result in higher visibility within LLM responses. Domains with extensive schema markup are cited no more frequently than domains with little or no schema, which indicates that schema markup alone does not influence how LLMs select sources.

Methodology – How Was Schema Impact Measured?

This study measures whether schema markup influences how often LLMs cite web domains. The analysis evaluates whether domains with higher structured data coverage achieve greater visibility inside AI-generated responses across major LLM platforms.

This analysis matters because schema markup is widely treated as a signal that improves machine understanding. To test that assumption, the dataset integrates 2 primary components, described below.

1. Schema Coverage Dataset. HTML outputs were analyzed to determine whether each sampled URL contained schema markup. Supported formats included JSON-LD, microdata, and RDFa. Each URL was labeled as either containing schema or not, and domains were extracted from URLs so that schema usage could be evaluated at the domain level.
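A minimal sketch of URL-level labeling, assuming raw HTML is available for each sampled URL. The names `SchemaDetector`, `detect_schema`, and `has_schema` are hypothetical, and the attribute checks are heuristics rather than the study's actual extractor: JSON-LD is detected from `<script type="application/ld+json">`, microdata from `itemscope`/`itemtype`, and RDFa from `vocab`/`typeof`. The RDFa `property` attribute is deliberately ignored here, because Open Graph `<meta property="og:...">` tags would otherwise mark nearly every page as containing RDFa.

```python
from html.parser import HTMLParser


class SchemaDetector(HTMLParser):
    """Heuristic detector for JSON-LD, microdata, and RDFa markers in raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.formats: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; boolean attributes
        # such as itemscope arrive with a value of None.
        attr_map = {name: (value or "") for name, value in attrs}
        # JSON-LD lives in <script type="application/ld+json"> blocks.
        if tag == "script" and attr_map.get("type", "").strip().lower() == "application/ld+json":
            self.formats.add("json-ld")
        # Microdata marks items with itemscope / itemtype attributes.
        if "itemscope" in attr_map or "itemtype" in attr_map:
            self.formats.add("microdata")
        # RDFa: only vocab / typeof are checked, to avoid Open Graph false positives.
        if "vocab" in attr_map or "typeof" in attr_map:
            self.formats.add("rdfa")


def detect_schema(html: str) -> set[str]:
    """Return the set of structured-data formats found in one HTML document."""
    parser = SchemaDetector()
    parser.feed(html)
    parser.close()
    return parser.formats


def has_schema(html: str) -> bool:
    """Binary URL-level label: True if any supported format is present."""
    return bool(detect_schema(html))
```

Because each URL only receives a yes/no label, a page with one JSON-LD block and a page with complete markup count the same, which matches the "presence, not type" scope described in the study limitations.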

2. LLM Visibility Dataset. Domain-level visibility data measured how often each domain appeared in LLM-generated responses across OpenAI, Gemini, and Perplexity. Each record included platform identifiers, appearance counts, and visibility scores derived from multiple responses.

URL-level schema labels were aggregated to compute domain-level schema coverage, defined as the proportion of sampled URLs within each domain that contained schema markup. This process produced a schema percentage indicating how widely structured data was implemented across a domain.
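Written as a formula, using notation introduced here only for illustration ($U_d$ is the set of sampled URLs for domain $d$, and $s(u)$ is 1 if URL $u$ contains schema markup and 0 otherwise), the coverage metric is:

$$
\text{coverage}_d = 100 \times \frac{\left|\{\, u \in U_d : s(u) = 1 \,\}\right|}{|U_d|}
$$

For example, a domain with 3 schema-bearing URLs out of 4 sampled URLs has a coverage of $100 \times 3/4 = 75\%$.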

To enable meaningful comparison, domains were categorized into 5 schema adoption buckets listed below.

- No Schema: 0%.

- Minimal Schema: 1 to 30%.

- Moderate Schema: 31 to 70%.

- High Schema: 71 to 99%.

- Full Schema: 100%.
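The published ranges use whole percentages, while coverage can be fractional (for example, 1 of 3 URLs gives 33.3%). A minimal sketch of the bucketing, assuming the ranges are read as half-open intervals (0, 30], (30, 70], and (70, 100) so that every value lands in exactly one bucket; `schema_bucket` is a hypothetical helper, not the study's code.

```python
def schema_bucket(coverage_pct: float) -> str:
    """Map a domain's schema coverage (0-100) to one of the five adoption buckets.

    Assumption: the ranges are treated as half-open intervals so that
    non-integer percentages such as 0.5 or 30.5 still land in one bucket.
    """
    if not 0 <= coverage_pct <= 100:
        raise ValueError(f"coverage must be between 0 and 100, got {coverage_pct}")
    if coverage_pct == 0:
        return "No Schema"
    if coverage_pct <= 30:
        return "Minimal Schema"
    if coverage_pct <= 70:
        return "Moderate Schema"
    if coverage_pct < 100:
        return "High Schema"
    return "Full Schema"
```

Computing coverage as `100 * k / n` rather than `k / n * 100` matters at the boundaries: `3 / 10 * 100` evaluates to `30.000000000000004` in floating point and would fall into Moderate Schema, while `100 * 3 / 10` is exactly `30.0`.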

The analytical steps are listed below.

1. Normalize domain names into a consistent domain.tld format across datasets.

2. Aggregate repeated domain appearances using weighted averages, where visibility scores are weighted by the number of responses in which the domain competed.

3. Merge schema coverage metrics with LLM visibility records at the domain level.

4. Compare visibility score distributions across schema adoption categories.

5. Evaluate platform-specific consistency across OpenAI, Gemini, and Perplexity.

6. Visualize visibility distributions using box plots and density plots.
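Steps 1 to 3 can be sketched as below. This is an illustration under stated assumptions, not the study's pipeline: the record shapes and helper names are hypothetical, visibility is aggregated per (domain, platform) pair so that step 5 can compare platforms, and `normalize_domain` naively keeps the last two host labels, which is wrong for multi-part suffixes such as `example.co.uk` (a Public Suffix List lookup would handle those).

```python
from collections import defaultdict
from urllib.parse import urlsplit


def normalize_domain(url_or_host: str) -> str:
    """Step 1: reduce a URL or hostname to a naive domain.tld form."""
    text = url_or_host.strip().lower()
    if "//" not in text:
        text = "//" + text  # let urlsplit treat a bare host as the netloc
    host = urlsplit(text).hostname or ""
    labels = [label for label in host.split(".") if label]
    return ".".join(labels[-2:])  # naive: ignores multi-part public suffixes


def domain_coverage(url_labels):
    """url_labels: iterable of (url, has_schema) pairs -> {domain: coverage %}."""
    totals = defaultdict(lambda: [0, 0])  # domain -> [urls_with_schema, urls_sampled]
    for url, labeled in url_labels:
        counts = totals[normalize_domain(url)]
        counts[0] += int(labeled)
        counts[1] += 1
    # Multiply before dividing so 3 of 10 yields exactly 30.0.
    return {d: 100 * hits / sampled for d, (hits, sampled) in totals.items()}


def weighted_visibility(records):
    """Step 2: records are (domain, platform, visibility_score, responses) tuples.

    Returns {(domain, platform): mean score weighted by responses competed in}.
    """
    sums = defaultdict(lambda: [0.0, 0])  # key -> [sum(score * responses), sum(responses)]
    for domain, platform, score, responses in records:
        acc = sums[(normalize_domain(domain), platform)]
        acc[0] += score * responses
        acc[1] += responses
    return {key: total / weight for key, (total, weight) in sums.items() if weight > 0}


def merge(coverage, visibility):
    """Step 3: inner-join coverage and visibility on the normalized domain."""
    return [
        {"domain": d, "platform": p, "coverage": coverage[d], "visibility": v}
        for (d, p), v in visibility.items()
        if d in coverage  # domains without sampled HTML are dropped
    ]
```

For instance, two Gemini records for the same domain, one with score 0.2 over 10 responses and one with score 0.5 over 30 responses, combine to (0.2 × 10 + 0.5 × 30) / 40 = 0.425, so the record backed by more responses dominates the average.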

The target variables are listed below.

- Schema Coverage (%). Measures the proportion of sampled URLs within a domain that contain schema markup.

- LLM Visibility Score. Measures how frequently a domain is cited within LLM-generated responses, weighted by competition volume.

This framework enables direct comparison of visibility outcomes across varying levels of schema adoption. The design isolates whether structured data implementation correlates with increased LLM citation frequency, or whether schema markup has no measurable effect on how LLMs reference web domains.
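This section does not name a specific test statistic. As one hedged illustration of how the correlation question could be quantified, the sketch below computes Spearman's rank correlation between domain coverage and visibility. Spearman's rho is a swapped-in technique, not necessarily what the authors computed; it suits bounded, heavily clustered data like coverage because it only uses ranks. A value near 0 would be consistent with the absence of an upward trend, while a clearly positive value would not.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / (var_x * var_y) ** 0.5
```

Average ranks matter here because many domains share exactly 0% or 100% coverage; without tie handling, the arbitrary order among tied domains would leak into the coefficient.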

What Is the Final Takeaway?

The analysis indicates that schema markup does not influence how often LLMs cite web domains. Visibility inside LLM-generated responses remains consistent across all levels of schema adoption, which suggests that structured data coverage does not act as a citation signal for AI search systems.

Domains with complete schema coverage perform no better than domains with minimal or no schema across OpenAI, Gemini, and Perplexity. On each platform, visibility distributions remain nearly identical across schema levels, which indicates that LLM citation behavior does not respond to increased structured data implementation.

Schema coverage reflects how consistently structured data is applied across a domain, but it does not translate into higher visibility within AI-generated answers. High-visibility and low-visibility domains appear in every schema category, which shows that schema adoption does not distinguish strong performers from weak ones in LLM environments.

The direction of these findings remains consistent across all analyses. Schema can improve machine parsing for traditional search systems, but it does not appear to affect LLM citation frequency. Visibility inside AI search more likely depends on semantic relevance and model retrieval behavior than on structured markup.

How Does Schema Coverage Relate to LLM Visibility?

I, Manick Bhan, together with the Search Atlas research team, analyzed domain-level schema coverage and LLM visibility data to evaluate whether structured data adoption influences how frequently domains are cited by LLMs. The analysis compares visibility patterns across schema adoption levels for OpenAI, Gemini, and Perplexity.

Schema Coverage Distribution

The schema coverage distribution measures how widely structured data is implemented across sampled domains. This distribution matters because uneven schema adoption shapes how results cluster and how comparisons need to be interpreted.

The headline patterns are shown below.

- A large share of domains fall into the 0% or 100% schema categories.

- Many domains have only a small number of sampled URLs, which naturally pushes them toward the extremes.

- Larger domains with hundreds of sampled URLs show more variation, but schema adoption still clusters near the boundaries.

This pattern indicates that the observed distribution reflects differences in sampling density as much as differences in schema implementation practices.
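The sampling effect follows from simple arithmetic: a domain with n sampled URLs can only reach the n + 1 coverage values 100·k/n for k = 0..n. A domain with a single sampled URL must therefore land in either No Schema or Full Schema. The helper name below is hypothetical.

```python
def possible_coverages(n_sampled: int) -> list[float]:
    """All coverage percentages a domain with n sampled URLs can take: 100*k/n, k = 0..n."""
    if n_sampled < 1:
        raise ValueError("a domain needs at least one sampled URL")
    return [100 * k / n_sampled for k in range(n_sampled + 1)]
```

With 1 sampled URL, both possible values are extremes; with 2 URLs, two of the three values are extremes; only as n grows do intermediate buckets become reachable at all.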

Impact of Schema Coverage on LLM Visibility

This analysis measures how often domains appear in LLM-generated responses across schema adoption categories. Visibility reflects citation frequency inside AI-generated answers rather than traditional search rankings.

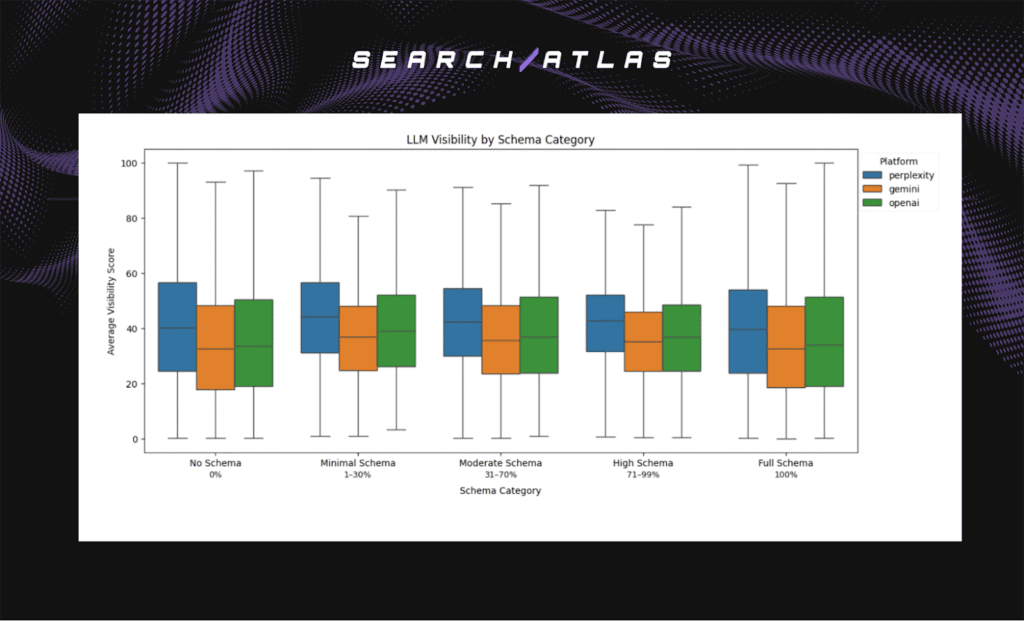

Box Plot – Visibility Distributions by Schema Level

The box plot compares visibility score distributions across schema categories for each LLM platform. The headline results are shown below.

- Perplexity. Visibility distributions remain stable across all schema categories.

- OpenAI. Visibility distributions remain stable across all schema categories.

- Gemini. Visibility distributions remain stable across all schema categories.

The medians, interquartile ranges, and overall spread appear nearly identical from No Schema to Full Schema. There is no consistent upward trend showing that domains with higher schema coverage achieve higher visibility scores on any platform.
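A minimal sketch of the per-bucket statistics a box plot summarizes (median, first and third quartiles, interquartile range), assuming rows of (bucket, visibility score) pairs. The helper names and `BUCKET_ORDER` are hypothetical, and the study's exact plotting settings are not stated; `method="inclusive"` matches NumPy's default linear percentile interpolation. `medians_increase` is a crude check for the "consistent upward trend" that the box plot does not show.

```python
from statistics import median, quantiles

BUCKET_ORDER = ["No Schema", "Minimal Schema", "Moderate Schema", "High Schema", "Full Schema"]


def summarize_by_bucket(rows):
    """rows: iterable of (bucket, visibility_score). Returns per-bucket box-plot stats."""
    groups = {}
    for bucket, score in rows:
        groups.setdefault(bucket, []).append(score)
    summary = {}
    for bucket, scores in groups.items():
        if len(scores) < 2:
            continue  # quantiles() needs at least two points on older Python versions
        q1, _, q3 = quantiles(scores, n=4, method="inclusive")
        summary[bucket] = {
            "n": len(scores),
            "median": median(scores),
            "q1": q1,
            "q3": q3,
            "iqr": q3 - q1,
        }
    return summary


def medians_increase(summary):
    """True only if medians rise strictly from No Schema toward Full Schema."""
    meds = [summary[b]["median"] for b in BUCKET_ORDER if b in summary]
    return all(a < b for a, b in zip(meds, meds[1:]))
```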

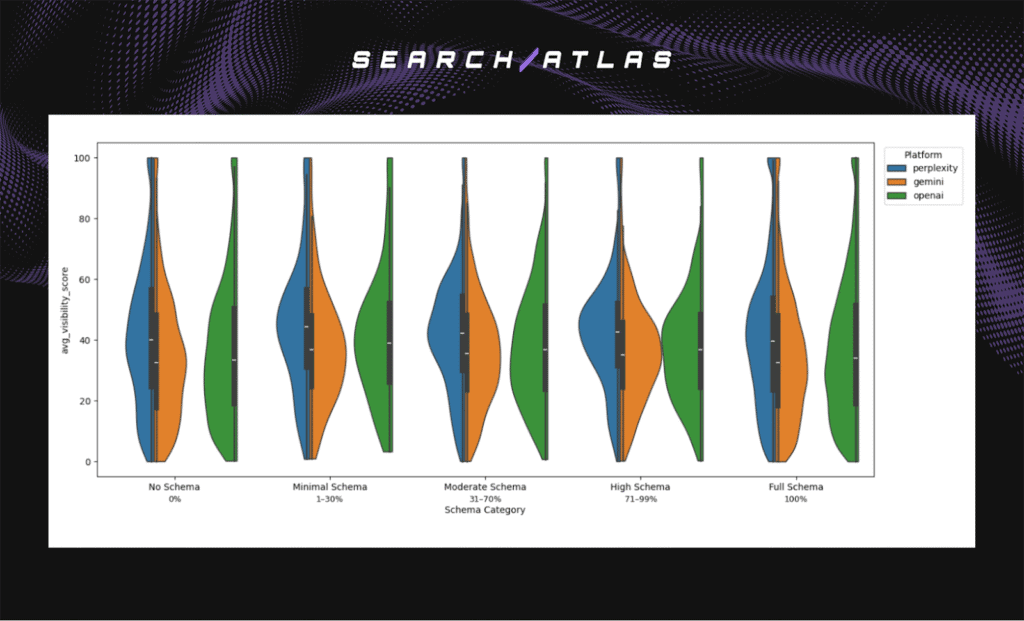

Violin Plot – Density and Spread of Visibility Scores

The violin plot visualizes the density and distribution of visibility scores within each schema category, highlighting how values spread across domains. The violin plot is below.
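Violin plots typically draw each category's outline from a kernel density estimate. A minimal Gaussian KDE sketch follows; the default bandwidth uses Scott's rule for one dimension (h = σ · n^(-1/5)), which is also matplotlib's default for `violinplot`. Whether the study's figure used these settings is not stated, and `gaussian_kde` here is a hypothetical pure-Python helper, not SciPy's class of the same name.

```python
import math


def gaussian_kde(samples, bandwidth=None):
    """Return a function estimating the density of `samples` at a point x.

    Default bandwidth follows Scott's rule in one dimension: h = sigma * n ** (-1/5),
    with sigma the sample standard deviation (n - 1 denominator).
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / n
    sigma = math.sqrt(sum((x - mean) ** 2 for x in samples) / (n - 1))
    h = bandwidth if bandwidth is not None else sigma * n ** (-1 / 5)
    if h <= 0:
        raise ValueError("bandwidth must be positive (are all samples identical?)")
    norm = 1 / (n * h * math.sqrt(2 * math.pi))

    def density(x):
        # Sum of Gaussian bumps centered on each sample, scaled to integrate to 1.
        return norm * sum(math.exp(-0.5 * ((x - s) / h) ** 2) for s in samples)

    return density
```

A wide violin at a given visibility level means many domains in that schema bucket have scores near it, so overlapping violin shapes across buckets carry the same message as the overlapping boxes.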

Which Patterns Best Explain LLM Visibility Behavior?

The analysis examines how LLM visibility scores vary across schema adoption levels and platforms. Visibility measures how often a domain appears in AI-generated responses, reflecting citation frequency rather than traditional rankings.

Visibility patterns remain consistent across all schema categories. High- and low-visibility domains appear at every level of schema adoption, which shows that structured data does not distinguish strong performers from weak ones.

Platform-level differences remain stable regardless of schema coverage. Perplexity shows the highest median visibility, Gemini the lowest, and OpenAI falls in between across all schema buckets.
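The platform-consistency check (analysis step 5) can be sketched as ranking platforms by median visibility within each schema bucket and testing whether that ranking ever changes. The row format and helper names are hypothetical assumptions, and the test data is synthetic, not taken from the study.

```python
from statistics import median


def platform_order_by_bucket(rows):
    """rows: iterable of (platform, bucket, score).

    Returns {bucket: platforms sorted by median visibility, highest first}.
    """
    groups = {}
    for platform, bucket, score in rows:
        groups.setdefault(bucket, {}).setdefault(platform, []).append(score)
    return {
        bucket: sorted(by_platform, key=lambda p: median(by_platform[p]), reverse=True)
        for bucket, by_platform in groups.items()
    }


def order_is_stable(orders):
    """True if every bucket ranks the platforms identically."""
    return len({tuple(order) for order in orders.values()}) <= 1
```

A stable ordering in every bucket, as the study reports for Perplexity, OpenAI, and Gemini, means platform explains differences in visibility while schema level does not.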

The wide overlap of visibility distributions across categories confirms that schema markup does not predict which domains LLMs choose to cite. Visibility outcomes reflect model behavior rather than structured data implementation.

What Should SEO and AI Teams Do with These Findings?

SEO and AI teams need to separate traditional SEO assumptions from AI search behavior. Visibility inside LLM-generated answers reflects how models interpret and synthesize information, not how completely a page implements structured markup. Measuring AI visibility directly provides a clearer view of brand exposure within generative systems.

Teams need to prioritize semantic clarity and topical authority. LLMs reference meaning, context, and explanatory depth rather than markup completeness. Pages that clearly explain concepts, maintain topical focus, and present consistent facts align more closely with how LLMs select sources.

Content depth and informational quality are more plausible drivers of LLM visibility than technical enhancements, although this study did not measure them directly. Domains with high and low schema adoption appear across the full visibility range, which shows that structured data does not separate authoritative sources from non-authoritative ones in AI-generated responses.

Schema markup remains relevant for search engine features and SERP presentation, but it does not influence how often LLMs cite a domain. SEO and AI teams need to evaluate schema as a search-focused optimization while assessing AI visibility through separate, LLM-specific measurement frameworks.

What Are the Study Limitations?

Every empirical analysis has limitations. The limitations of this study are listed below.

- Schema Type Granularity. The analysis measured the presence of schema markup, not the type, completeness, or quality of structured data. Differences between schema implementations were not evaluated.

- Observational Design. The study identifies correlations between schema coverage and LLM visibility but does not establish causation. Schema adoption and citation behavior are likely influenced by additional, unmeasured factors.

- Platform Scope. The analysis focuses on OpenAI, Gemini, and Perplexity. Citation behavior on other LLM platforms may differ.

Despite these constraints, the results remain consistent across platforms and schema adoption levels. The analysis establishes a clear baseline showing that higher schema coverage is not associated with higher LLM citation frequency.

Future research needs to expand platform coverage, incorporate schema type differentiation, and examine longitudinal effects as AI search systems evolve.