You may also read a concise version of this research on our blog: How GPT Results Differ from Google Search: LLM-SERP Overlap Study

Overview

This report investigates the overlap between Search Engine Results Pages (SERPs) and large language model (LLM) responses, such as those from Generative Pre-trained Transformer (GPT) models. The goal is to understand how closely the two systems align in the URLs and domains they cite.

Data Sources

- SERP Dataset: Collected between September and October 2025

- LLM Dataset: LLM-generated responses (OpenAI, Perplexity, and Gemini) collected in October 2025

Each LLM query title was compared to all SERP keywords using the cosine similarity of their embeddings. Pairs with a similarity above 0.82 (82%) were retained as “analogous keywords”, meaning they expressed closely matching information demand.

A total of 18,377 analogous LLM–SERP keyword pairs (semantic similarity > 0.82) were used for analysis.

Methodology

1. Data Collection

Two datasets were used for this analysis:

- LLM Query Dataset: Contained responses from OpenAI, Perplexity, and Gemini collected in October 2025. Each record included the query title, platform, timestamps (created, modified), and lists of cited URLs and domains.

- SERP Dataset: Contained keyword-level search results from September and October 2025, with each row holding the keyword and its corresponding search result data in JSON format.

These datasets provided the basis for comparing what LLMs referenced against what appeared in real search results during the same period.

2. SERP Parsing and Transformation

Each SERP record was parsed from its JSON field to extract:

- URLs – the links returned for each keyword.

- Domains – derived from each URL using urlparse.

The timestamps were converted to date/time format and used to filter for the target months (September–October 2025).

This produced a clean SERP dataset containing keywords, URLs, domains, and associated timestamps ready for embedding.
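The parsing step can be sketched as follows. This is a minimal illustration, not the pipeline used for this report: the JSON layout (an `organic` list of objects with a `url` field), the column names `serp_json` and `timestamp`, and the sample rows are all hypothetical, since the raw SERP schema is not documented here.

```python
import json
from datetime import datetime
from urllib.parse import urlparse

# Target window: September and October 2025 (end is exclusive).
START, END = datetime(2025, 9, 1), datetime(2025, 11, 1)

# Hypothetical raw rows; "serp_json", "organic", and "url" are illustrative names.
raw_rows = [
    {"keyword": "best running shoes", "timestamp": "2025-09-14T10:22:00",
     "serp_json": json.dumps({"organic": [
         {"url": "https://www.example.com/guides/shoes/"},
         {"url": "https://news.example.org/a/1"}]})},
    {"keyword": "old keyword", "timestamp": "2025-07-01T08:00:00",
     "serp_json": json.dumps({"organic": [{"url": "https://example.com/"}]})},
]

def parse_serp_rows(rows):
    """Parse each row's JSON, extract URLs and hosts, and keep rows in the window."""
    parsed = []
    for row in rows:
        ts = datetime.fromisoformat(row["timestamp"])
        if not (START <= ts < END):
            continue  # outside September-October 2025
        results = json.loads(row["serp_json"]).get("organic", [])
        urls = [r["url"] for r in results if r.get("url")]
        # netloc is the raw host (e.g. "www.example.com"); reducing it to
        # domain.suffix happens later, in the normalization step.
        domains = [urlparse(u).netloc for u in urls]
        parsed.append({"keyword": row["keyword"], "urls": urls,
                       "domains": domains, "timestamp": ts})
    return parsed

serp = parse_serp_rows(raw_rows)
```

Here the July row is dropped by the date filter, and the remaining row keeps its URLs together with their raw hosts.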

3. LLM Response Aggregation

LLM responses were grouped by query_response_id and platform to produce one consolidated record per response.

For each record, we retained:

- The response title (query),

- The platform name,

- The creation and modification timestamps, and

- The complete lists of cited URLs and domains.

This ensured that each LLM output was uniquely represented for embedding and overlap comparison.
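A minimal sketch of this aggregation follows. It assumes, hypothetically, that the raw LLM export has one row per cited URL, with columns named `title`, `created`, `modified`, `url`, and `domain`. Only `query_response_id` and `platform` come from the report. Order-preserving de-duplication keeps each response's citation lists unique.

```python
# Hypothetical raw layout: one row per cited URL, repeating the response metadata.
raw_citations = [
    {"query_response_id": "r1", "platform": "perplexity", "title": "best running shoes 2025",
     "created": "2025-10-02T09:00:00", "modified": "2025-10-02T09:05:00",
     "url": "https://www.example.com/guides/shoes/", "domain": "example.com"},
    {"query_response_id": "r1", "platform": "perplexity", "title": "best running shoes 2025",
     "created": "2025-10-02T09:00:00", "modified": "2025-10-02T09:05:00",
     "url": "https://blog.example.net/shoes", "domain": "example.net"},
    {"query_response_id": "r2", "platform": "gemini", "title": "how do vaccines work",
     "created": "2025-10-03T11:00:00", "modified": "2025-10-03T11:00:00",
     "url": "https://health.example.org/vaccines", "domain": "example.org"},
]

def aggregate_responses(rows):
    """Collapse citation rows into one record per (query_response_id, platform)."""
    grouped = {}
    for row in rows:
        key = (row["query_response_id"], row["platform"])
        rec = grouped.setdefault(key, {
            "title": row["title"], "platform": row["platform"],
            "created": row["created"], "modified": row["modified"],
            "urls": [], "domains": [],
        })
        # Keep citation order but skip duplicates within a single response.
        if row["url"] not in rec["urls"]:
            rec["urls"].append(row["url"])
        if row["domain"] not in rec["domains"]:
            rec["domains"].append(row["domain"])
    return list(grouped.values())

llm_records = aggregate_responses(raw_citations)
```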

4. Semantic Matching with Embeddings

To align semantically similar LLM responses and SERP keywords:

- Text embeddings were generated using OpenAI’s text-embedding-3-small model.

- LLM titles (queries) and SERP keywords were both embedded.

- Cosine similarity between the embedding vectors was computed on the GPU using PyTorch.

- For each LLM query, the most similar SERP keyword was identified.

- Only pairs with a similarity score > 0.82 were retained as semantically matched.

This step produced a refined dataset where LLM responses were directly comparable to closely related SERP keywords.
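The matching rule can be illustrated in plain Python: each LLM query takes its nearest SERP keyword, and the pair is kept only if the similarity exceeds 0.82. The report computed similarities on the GPU with PyTorch over text-embedding-3-small vectors. This sketch instead assumes the embeddings are already available and uses toy 3-dimensional vectors. The helper names `cosine` and `match_queries` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def match_queries(llm_embs, serp_embs, threshold=0.82):
    """Pair each LLM query with its most similar SERP keyword if sim > threshold."""
    if not serp_embs:
        return []
    matches = []
    for query, q_vec in llm_embs.items():
        best_kw, best_sim = max(
            ((kw, cosine(q_vec, k_vec)) for kw, k_vec in serp_embs.items()),
            key=lambda pair: pair[1],
        )
        if best_sim > threshold:  # strict ">" as in the report
            matches.append((query, best_kw, round(best_sim, 3)))
    return matches

# Toy vectors standing in for real (1,536-dimensional) embeddings.
llm_embs = {"best running shoes 2025": [0.9, 0.1, 0.0],
            "history of the printing press": [0.0, 0.2, 0.9]}
serp_embs = {"top running shoes": [0.85, 0.15, 0.05],
             "marathon training plan": [0.5, 0.8, 0.1]}

matches = match_queries(llm_embs, serp_embs)
```

The second query's best candidate scores well below 0.82, so only the running-shoes pair survives. At the report's scale, this logic would normally be vectorized: L2-normalize both embedding matrices, take a single matrix product, and read off each row's maximum and its index.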

5. URL and Domain Normalization

To ensure accurate comparison:

- Domains were standardized using tldextract to retain only domain.suffix (e.g., news.google.com to google.com).

- URLs were lower-cased, stripped of the www. prefix, query strings, and fragments, and rewritten in a consistent normalized format (scheme://domain/path).

These transformations were applied to both LLM-cited and SERP-listed links, resulting in consistent normalized lists.
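Both normalizations can be sketched with the standard library. The report used tldextract for domains. Its `tldextract.extract(url)` returns an object exposing `.domain` and `.suffix`, and it relies on the Public Suffix List to handle multi-part suffixes such as `co.uk`. To keep the example dependency-free, `registered_domain` below is a hypothetical and deliberately naive stand-in that keeps the last two host labels. It is therefore only correct for single-label suffixes such as `.com`.

```python
from urllib.parse import urlsplit, urlunsplit

def normalize_url(url):
    """Lower-case, drop 'www.', the query string, and the fragment -> scheme://domain/path."""
    parts = urlsplit(url.strip().lower())
    host = parts.netloc
    if host.startswith("www."):
        host = host[4:]
    return urlunsplit((parts.scheme, host, parts.path, "", ""))

def registered_domain(url):
    """Naive domain.suffix: keep the last two host labels.

    Caveat: wrong for multi-part suffixes such as 'co.uk'; tldextract
    handles those via the Public Suffix List.
    """
    host = urlsplit(url.lower()).hostname or ""
    return ".".join(host.split(".")[-2:])

print(normalize_url("HTTPS://WWW.Example.com/Path/Page?utm=1#top"))
print(registered_domain("https://news.google.com/articles/x"))
```

Running this prints `https://example.com/path/page` and then `google.com`. The second line matches the `news.google.com` to `google.com` example above.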

Baseline for Comparison

Because the modern web is predominantly commercial (.com), with roughly 75–80% of indexed domains belonging to .com TLDs, a high share of commercial citations in LLM responses is expected.

Therefore, a predominance of .com domains in LLM citations should not be read as inherent bias. It more likely reflects real-world web composition and Google SERP distributions.
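To check observed citations against this baseline, one can compute the share of each top-level domain among a platform's unique cited domains. The sketch below assumes the domains have already been normalized as in step 5. The domain list is illustrative. The 75–80% reference figure is the report's own; this code only measures the observed share.

```python
from collections import Counter

def tld_shares(domains):
    """Percent of unique domains per top-level domain (the last host label)."""
    unique = {d.lower() for d in domains}
    if not unique:
        return {}
    # Naive: "example.co.uk" is counted under "uk", not "co.uk".
    counts = Counter(d.rsplit(".", 1)[-1] for d in unique)
    return {tld: 100 * n / len(unique) for tld, n in counts.most_common()}

# Illustrative, already-normalized citation domains (not study data).
cited = ["example.com", "example.org", "sample.com", "example.com",
         "example.co.uk", "demo.com"]
shares = tld_shares(cited)
```

With these inputs, 3 of the 5 unique domains are .com, so `shares["com"]` is 60.0.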

6. Overlap Calculation

For each analogous LLM–SERP keyword pair (i.e., a matched query and its most similar SERP keyword), two overlap metrics were computed at the pair level:

- Domain Overlap (%) = (shared domains ÷ total unique LLM domains) × 100

- URL Overlap (%) = (shared URLs ÷ total unique LLM URLs) × 100

Each LLM–SERP pair therefore produced its own overlap percentages, capturing how closely the model’s cited domains and URLs aligned with the corresponding SERP results for that specific query.

These pair-level overlaps were stored for distribution analysis and platform-level aggregation.
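The two metrics share one formula applied to different sets, so a single helper covers both. This is a sketch with invented example sets. Returning `None` for a response with no citations is an assumption, since the report does not say how such pairs were handled. The example also shows why URL overlap runs lower than domain overlap: two different pages on the same site count as a domain match but not as a URL match.

```python
def overlap_pct(llm_items, serp_items):
    """Percent of the LLM's unique items that also appear in the SERP.

    Returns None when the LLM cited nothing, since the ratio is undefined.
    """
    llm_set, serp_set = set(llm_items), set(serp_items)
    if not llm_set:
        return None
    return 100 * len(llm_set & serp_set) / len(llm_set)

# Illustrative pair: normalized URLs and their domains on each side.
llm_urls = ["https://example.com/a", "https://example.org/b", "https://example.net/c"]
llm_domains = ["example.com", "example.org", "example.net"]
serp_urls = ["https://example.com/x", "https://example.net/c", "https://sample.com/y"]
serp_domains = ["example.com", "example.net", "sample.com"]

domain_overlap = overlap_pct(llm_domains, serp_domains)  # 2 of 3 domains shared
url_overlap = overlap_pct(llm_urls, serp_urls)           # 1 of 3 URLs shared
```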

7. Aggregation and Visualization

Pair-level overlap values were then aggregated to compute average and distribution statistics by platform and query intent.

- Distributions of per-pair domain overlaps were visualized with boxplots to show variation across queries and platforms.

- Average overlap percentages were summarized with bar charts grouped by platform and intent.

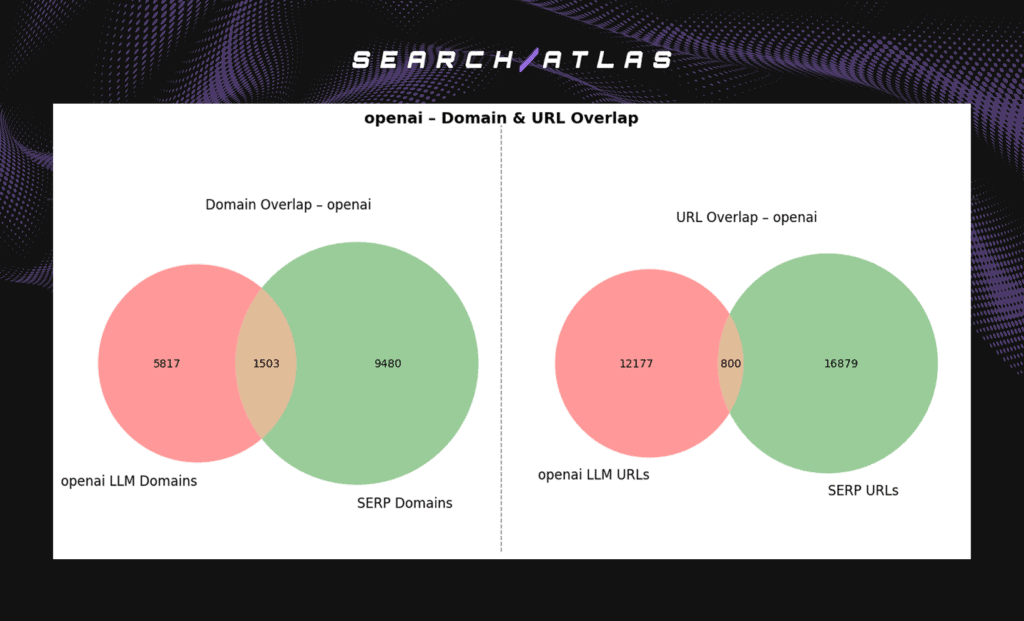

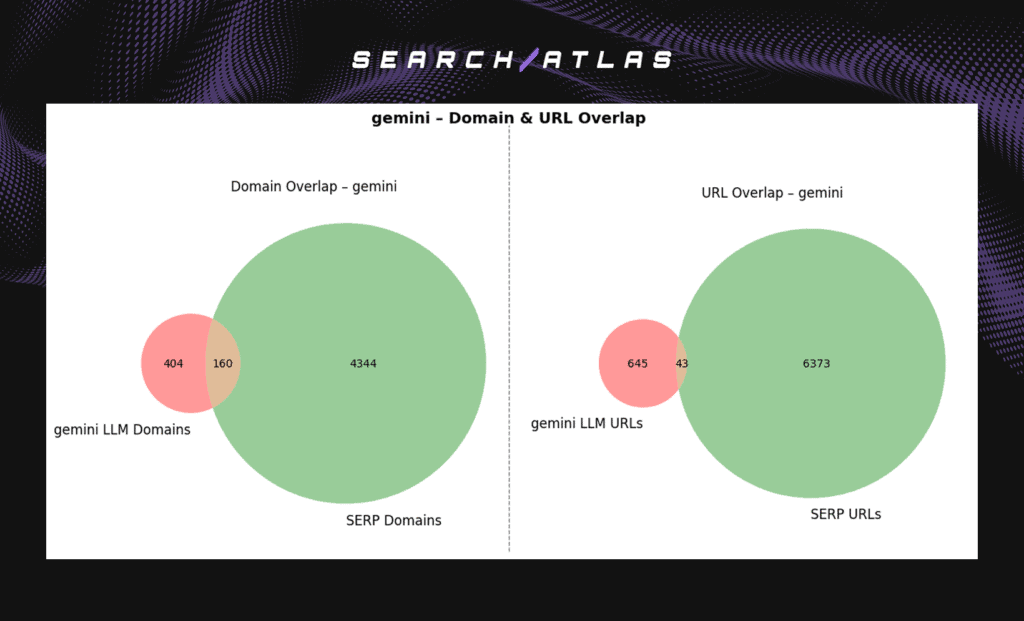

- Venn diagrams were included only as high-level visuals, showing the overall intersection of unique domains and URLs between each LLM and SERP, but were not used for metric computation.

This visualization strategy allows both micro-level analysis (per-query overlap) and macro-level insight (total overlap) of LLM–SERP alignment.
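Aggregation by platform and intent amounts to grouping the pair-level values and summarizing each group. The sketch below uses only the standard library. The rows are illustrative values, not results from this study. Skipping `None` (undefined) overlaps is an assumed convention.

```python
from collections import defaultdict
from statistics import mean, median

# Illustrative pair-level rows: (platform, intent, domain overlap %). Not study data.
pairs = [
    ("perplexity", "Evaluation", 40.0),
    ("perplexity", "Evaluation", 20.0),
    ("perplexity", "Understanding", 30.0),
    ("openai", "Understanding", 0.0),
    ("gemini", "Comparison", None),  # response with no citations
]

def summarize(rows):
    """Count, mean, and median overlap per (platform, intent), skipping None."""
    groups = defaultdict(list)
    for platform, intent, value in rows:
        if value is not None:
            groups[(platform, intent)].append(value)
    return {key: {"n": len(vals), "mean": mean(vals), "median": median(vals)}
            for key, vals in groups.items()}

summary = summarize(pairs)
```

The per-group means feed bar charts like the ones above. Collecting each platform's raw values, instead of reducing them, gives the input for the boxplots.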

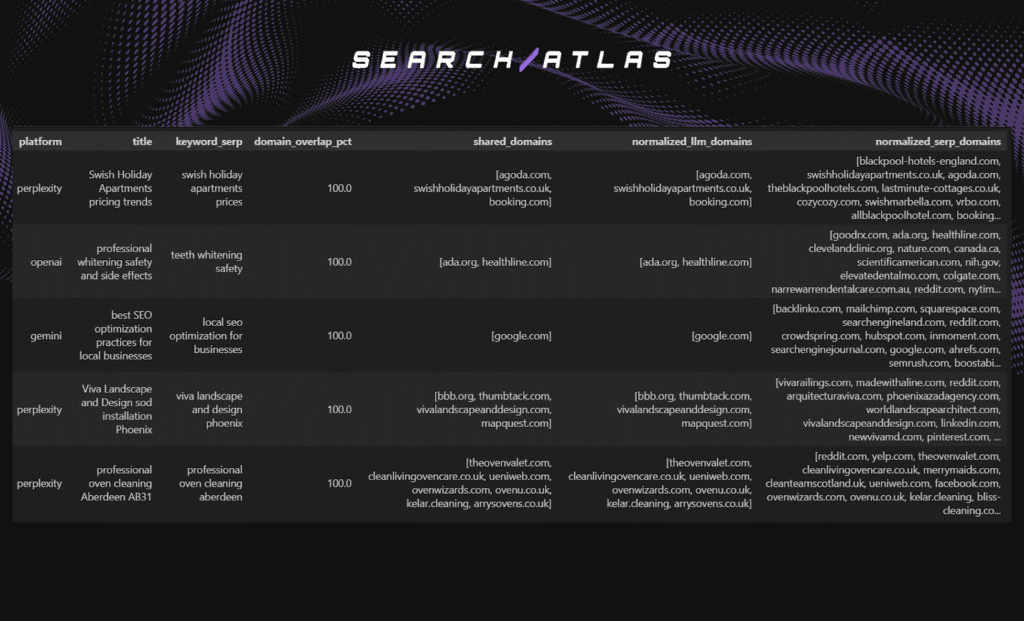

Examples of Semantically Matched Rows (Similarity Score > 82%)

Below is a list of 20 sample rows after applying the semantic similarity filter, showing the LLM query (title) alongside the corresponding SERP search keyword (keyword_serp) for each matched pair.

Dataset Composition

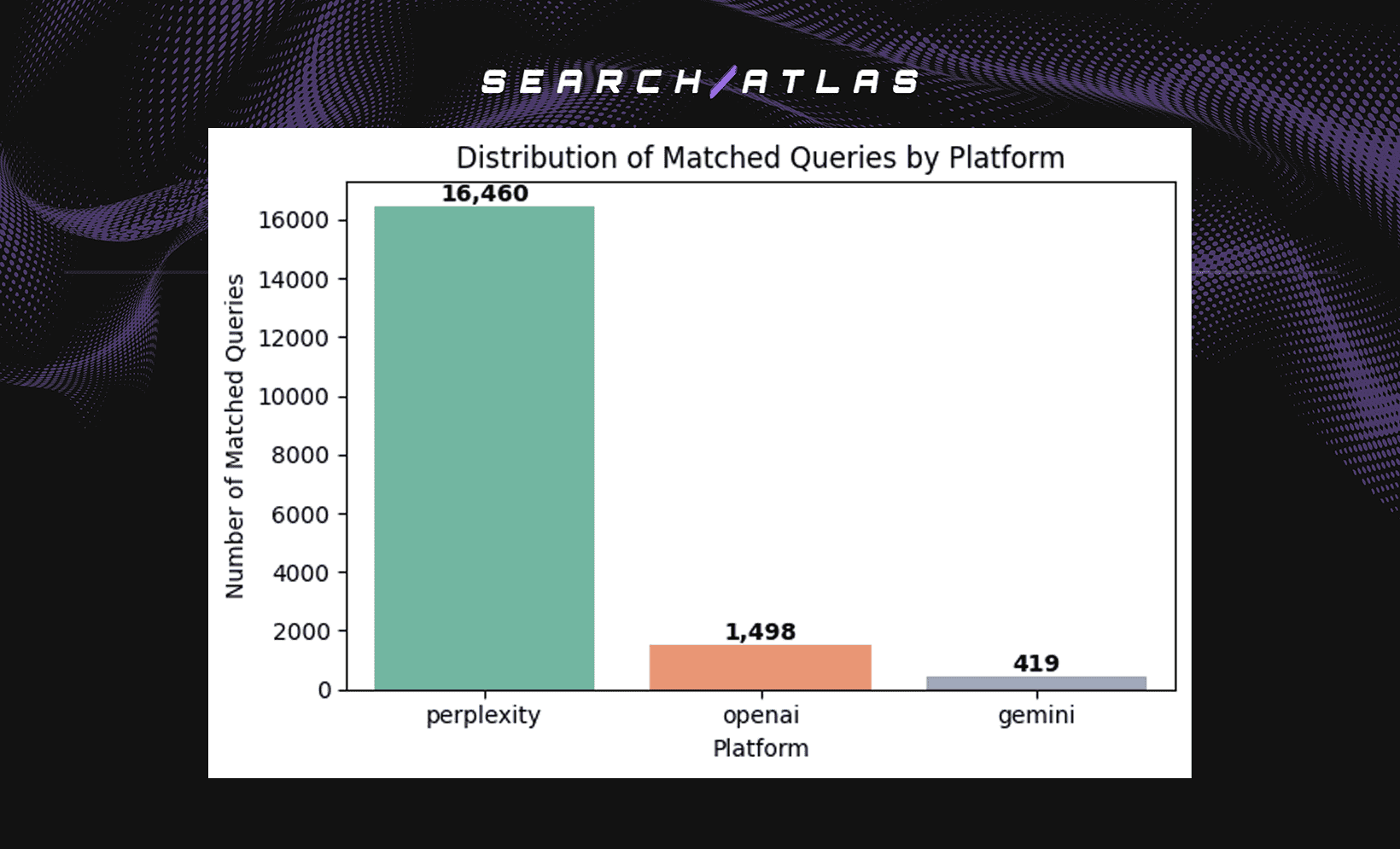

The figure shows the number of matched query–response pairs contributed by each model.

It is important to note that Perplexity accounts for the majority of matched queries (~89%), followed by OpenAI (~8%) and Gemini (~3%).

This imbalance may introduce bias in the overall results.

To ensure fair comparison and reduce bias, subsequent analyses are presented at the platform level, examining each model’s overlap with SERP independently.

Per-Pair Platform-Level Comparison of LLM–SERP Alignment

To understand how closely each large language model (LLM) aligns with search results, we calculated domain- and URL-level overlap percentages for each analogous LLM–SERP keyword pair across Perplexity (WebSearch enabled), OpenAI (GPT) (WebSearch disabled), and Gemini (WebSearch disabled).

Domain Overlap Analysis

- The domain-overlap boxplot illustrates the per-query distribution of domain overlap percentages between each LLM’s cited sources (Perplexity, OpenAI (GPT), and Gemini) and the corresponding SERP results.

- Perplexity, which actively integrates live WebSearch retrieval, shows the highest and most consistent domain overlap with SERP. Its median overlap falls around 25–30%. This is consistent with real-time search augmentation enabling it to cite many of the same authoritative sources that appear in Google results.

- OpenAI (GPT) generally shows lower overlap (median ~10–15%), suggesting greater reliance on internal pre-trained knowledge rather than live web retrieval.

- Gemini displays high variability; some responses show near-zero overlap, while others align closely with SERP. This suggests a more selective or context-dependent retrieval pattern rather than consistent web citation.

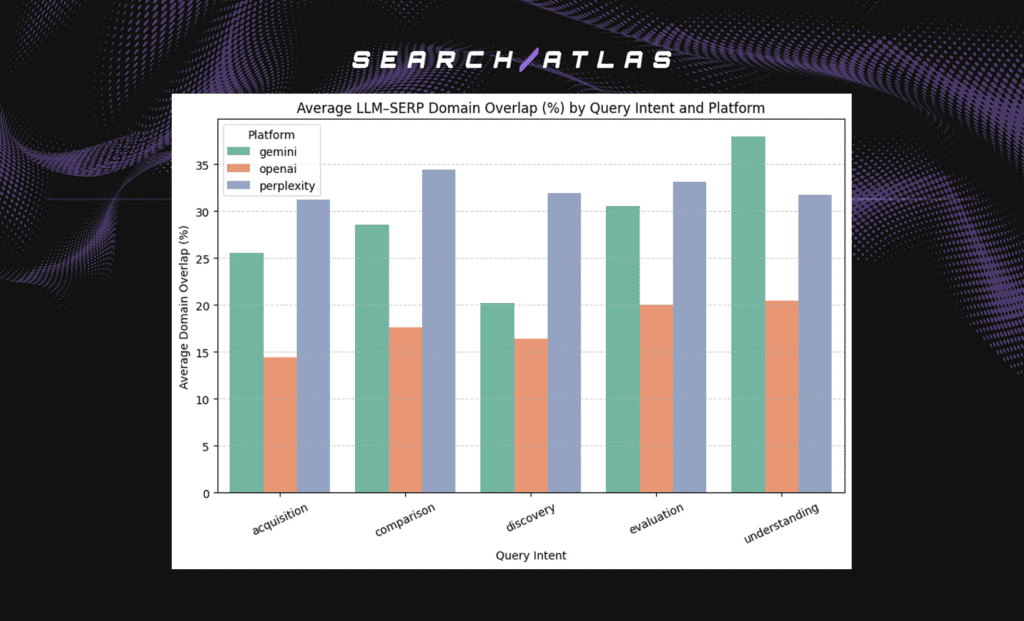

Average Domain LLM-SERP Overlap by Query Intent

- The chart below shows the average domain overlap between LLM outputs and SERP results across five query intents.

- Perplexity maintains the most consistent domain alignment across all intents, averaging around 30 to 35%, reflecting the influence of its live web retrieval.

- Gemini also shows relatively high alignment, particularly for Understanding queries. This possibly reflects its more verbose generation style and its tendency toward detailed, precision-focused explanations.

- OpenAI (GPT) shows lower domain overlap across all query intents. This suggests that its responses rely more on pre-trained knowledge, with answers synthesized conceptually rather than retrieved from live data.

- Overall, overlap patterns vary by intent and model design. Retrieval-augmented systems such as Perplexity align more closely with SERP, while non-retrieval models like Gemini and OpenAI show greater variability depending on the query type.

URL Overlap Analysis

- At the URL level, overlap values drop substantially across all models. URLs require exact page matches, which are more sensitive to time and content variations.

- Again, Perplexity’s retrieval-augmented web-search design yields the highest overlap, with a median near 20% and occasional near-perfect matches (80–100%) with the URLs found in SERP.

- OpenAI (GPT) and Gemini show limited average URL overlap, typically below 10%. This is consistent with their generation-focused design and lack of direct retrieval.

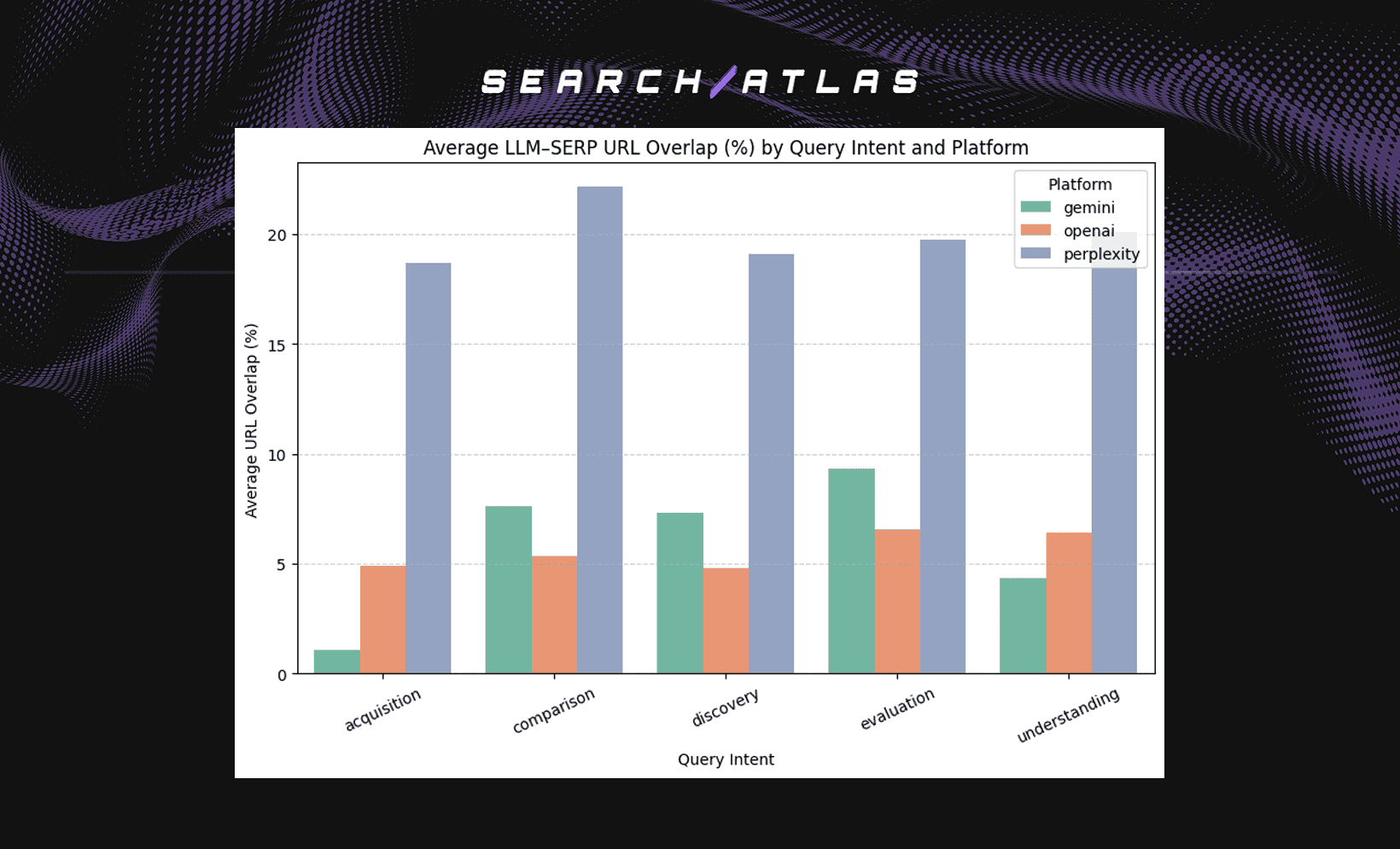

Average URL LLM-SERP Overlap by Query Intent

- The average overlap by query intent again highlights Perplexity’s consistent lead, maintaining relatively steady performance across all categories.

- Gemini shows moderate overlap for Evaluation and Comparison queries, suggesting occasional alignment with SERP sources, while OpenAI (GPT) remains the lowest across intents.

- This underscores that while domain-level overlap captures general topical consistency, URL-level overlap measures true retrieval fidelity, where only Perplexity, equipped with live search, demonstrates strong alignment with SERP’s exact sources.

- In contrast, non-retrieval LLMs like GPT and Gemini rely on reasoning and paraphrased synthesis, explaining their low URL overlap even when they conceptually address similar topics.

Per-Platform Aggregate Comparison of LLM–SERP Overlap

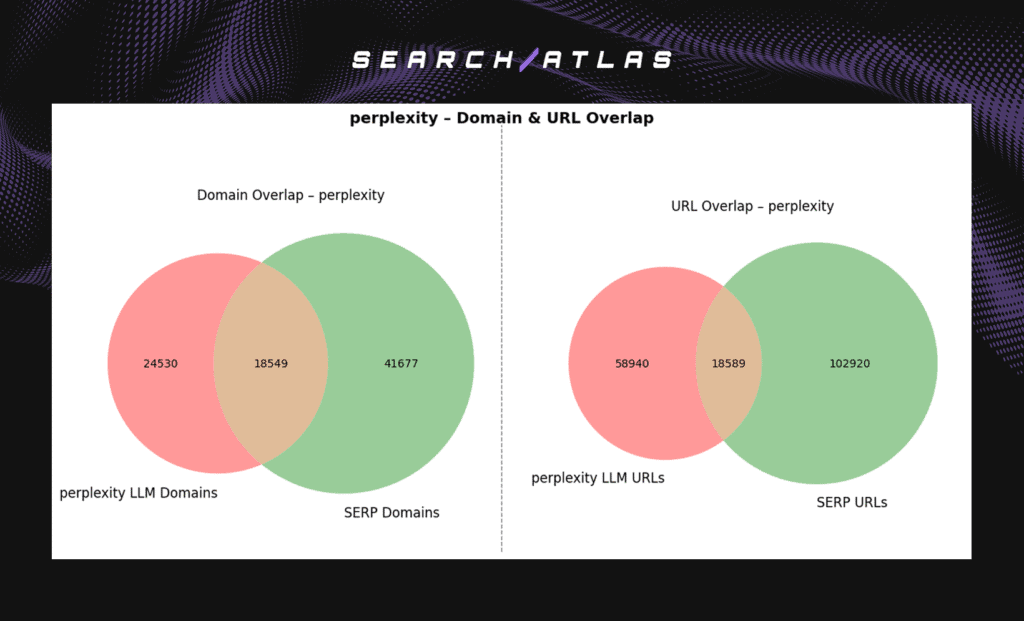

These Venn diagrams summarize the total unique domains and URLs cited by each LLM versus those present in Google’s SERP, showing how much each model’s overall knowledge or citation base overlaps with the search ecosystem.
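Unlike the per-pair metrics in step 6, the Venn figures pool unique domains (or URLs) across all of a platform's matched pairs. Each shared count is then reported twice: as a share of the platform's unique items and as a share of SERP's unique items. The sketch below shows that arithmetic, assuming simple set pooling, since the exact pooling rule is not spelled out. The sets are illustrative. The second percentage explains how a platform with a small citation pool, such as Gemini, can have a sizeable share of its own domains in SERP while covering only a few percent of SERP's domains.

```python
def venn_summary(llm_items, serp_items):
    """Aggregate (not per-pair) overlap between all unique LLM and SERP items."""
    llm_set, serp_set = set(llm_items), set(serp_items)
    shared = llm_set & serp_set
    return {
        "shared": len(shared),
        "pct_of_llm": 100 * len(shared) / len(llm_set) if llm_set else 0.0,
        "pct_of_serp": 100 * len(shared) / len(serp_set) if serp_set else 0.0,
    }

# Illustrative pooled domains for one platform and for SERP (not study data).
llm_pool = {"a.com", "b.com", "c.com", "d.com"}
serp_pool = {"a.com", "b.com", "e.com", "f.com", "g.com", "h.com", "i.com", "j.com"}
result = venn_summary(llm_pool, serp_pool)
```

Here 2 shared domains are 50% of the platform's 4 unique domains but only 25% of SERP's 8.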


Perplexity: Search-Aligned Retrieval

- Domains: Shares 18,549 domains (≈43% of its LLM domains, ≈31% of SERP’s).

- URLs: Shares 18,589 URLs (≈24% of its LLM URLs, ≈15% of SERP’s).

- Perplexity shows the strongest alignment with SERP among all models.

- Its retrieval-based architecture directly searches the web, so its citation pool mirrors the SEO-visible web more closely.

- This suggests it is search-continuous, picking up new or trending sites as they appear in search results.


OpenAI (GPT): Reasoning over Retrieval

- Domains: Shares 1,503 domains (~21% of its LLM domains, ~14% of SERP’s).

- URLs: Shares 800 URLs (~7% of its LLM URLs, ~5% of SERP’s).

- This indicates moderate overlap: GPT occasionally references web sources that align with SERP but largely synthesizes from internal knowledge.

- This pattern suggests semantic abstraction and reasoning rather than literal web citation.

- In this setting, GPT behaves more like a knowledge summarizer than a retriever.


Gemini: Minimal Overlap, Selective Sources

- Domains: Shares 160 domains (~28% of its LLM domains, ~4% of SERP’s).

- URLs: Shares 43 URLs (~6% of its LLM URLs, ~1% of SERP’s).

- Gemini shows a small absolute overlap with SERP, both at the domain and URL level.

- While roughly 28% of its cited domains appear in SERP, they make up only about 4% of SERP’s total domains. This reflects Gemini’s highly selective and filtered citation pattern compared to the other models.

- Despite being a Google model, Gemini seems to filter aggressively and rely on a limited subset of web sources, potentially emphasizing precision over breadth.

Examples

Examples of Query Intents

Below are two example queries for each intent category.

Examples of High vs. Low LLM–SERP Overlap

High-Overlap Examples

Below are examples of LLM–SERP pairs with 100% overlap scores.

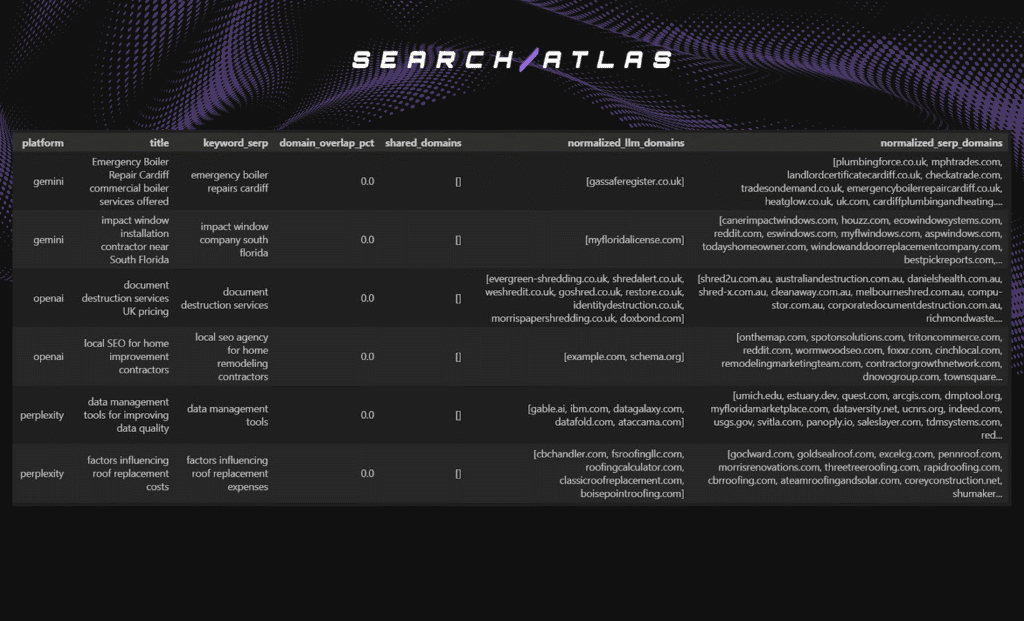

Low-Overlap Examples

Below are two examples from each platform showing LLM–SERP pairs with 0% domain overlap, where none of the domains cited by the LLM appeared in the corresponding SERP results.

Conclusion

This analysis shows clear differences in how large language models (LLMs) align with search engine results. Perplexity, which performs live web retrieval, achieves the highest and most consistent domain and URL overlap with Google SERP, reflecting its ability to access and cite current web content. OpenAI (GPT) and Gemini, in contrast, show lower overlap, consistent with their reliance on internal reasoning and pre-trained knowledge.

Domain-level overlap indicates general topical alignment, while URL-level overlap remains low, showing that LLMs often reference similar themes rather than identical pages. The 0.82 semantic similarity threshold ensures linguistic proximity but not identical intent, so overlaps should be interpreted as topic-level correspondence rather than perfect query equivalence.

Limitations and Future Work

- Query Intent Coverage: Some query types may be unevenly represented, influencing overlap results.

- Semantic Similarity Threshold: The 0.82 similarity score captures semantic resemblance, not necessarily identical user intent.

- Temporal Scope: The two-month window (September–October 2025) provides a limited snapshot.

- Model Design Differences: Retrieval-enabled and non-retrieval systems differ architecturally, affecting comparability.

Future work should extend the timeframe and explore how overlap patterns vary over time and across query intents. Increasing the number of samples with higher semantic similarity scores (e.g., 0.95–1.00) would help validate whether stronger query alignment produces more consistent LLM–Search correspondence.