You may also read a concise version of this research in our blog: How LLMs Rank Local Businesses: A Study of “Near Me” Query Citations

Executive Summary

Large Language Models (LLMs) are increasingly used as decision engines for local intent queries such as “plumber near me,” “restaurants near me,” or “urgent care near me.” As LLMs become embedded across search interfaces, smart assistants, and AI agents, understanding how local businesses are selected, ranked, and cited has become a critical visibility question for SEOs, local marketers, multi-location brands, and citation platforms.

This study analyzes the Local LLM Ranking Factors that influence which businesses and domains LLMs reference when responding to “near me” queries. Using a dataset of 104,855 URL citations collected from leading LLM platforms (OpenAI, Gemini, Perplexity, Grok, Copilot, and Google AI Mode), we examined how different classes of signals correlate with LLM ranking outcomes.

Methodology

Data Collection

Local-Intent Query Sample (“Near Me” Queries)

To evaluate how LLMs rank local businesses and local-intent sources, we collected all model responses generated for queries containing “near me” in the query text. Only broad, non-branded queries were included, to keep query intent clean and ensure the dataset reflected real local discovery searches rather than niche or branded lookups.

After filtering, we extracted 104,855 URL citations generated by six major LLM platforms:

- OpenAI

- Gemini

- Perplexity

- Grok

- Google AI Mode

- Copilot

Time Range: October 27, 2025 – December 3, 2025

Each citation record contained:

- Query metadata: query title, intent, search volume, timestamp

- Citation metadata: domain, URL path, LLM ranking position, mentions, share of voice

- Platform metadata: LLM platform, model, token usage, response creation timestamp

This dataset served as the foundation for all ranking-factor assessments conducted in this study.
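The record layout described above can be pictured as a simple typed structure. The following is only a sketch: the field names, the Python types, and the sample values are assumptions made for illustration, not the schema actually used in the study.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class CitationRecord:
    """One cited URL in one LLM response (hypothetical field names)."""
    # Query metadata
    query_title: str
    intent: str
    search_volume: int
    query_timestamp: datetime
    # Citation metadata
    domain: str
    url_path: str
    llm_rank: int          # position of the citation in the response
    mentions: int
    share_of_voice: float
    # Platform metadata
    platform: str
    model: str
    token_usage: int
    response_created_at: datetime


# Placeholder values, invented purely to show the shape of a record.
record = CitationRecord(
    query_title="plumber near me",
    intent="local",
    search_volume=1000,
    query_timestamp=datetime(2025, 11, 1, 9, 0),
    domain="example-plumbing.com",
    url_path="/services/emergency",
    llm_rank=1,
    mentions=2,
    share_of_voice=0.25,
    platform="example-platform",
    model="example-model",
    token_usage=850,
    response_created_at=datetime(2025, 11, 1, 9, 0, 5),
)
print(record.domain, record.llm_rank)
```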

Analysis

1. Domain Metrics Analysis

To test whether traditional SEO authority metrics influence LLM ranking, each cited domain was enriched with:

- Domain Power (DP)

- Domain Authority (DA)

- Domain Rating (DR)

Only the most recent recorded metric per domain was retained. Domains lacking valid measurements were excluded from the correlation analysis to maintain reliability.

This enabled a direct comparison between SEO domain authority and LLM citation rank.
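As an illustration of the enrichment step, the sketch below keeps the most recent metric per domain and then drops domains without a valid value. The record layout, domain names, and values are hypothetical. It also assumes that "most recent" is decided before checking validity, which the study does not specify.

```python
from datetime import date

# Hypothetical metric history: (domain, recorded_on, domain_rating or None).
records = [
    ("example-plumbing.com", date(2025, 9, 1), 31.0),
    ("example-plumbing.com", date(2025, 11, 15), 34.0),
    ("example-directory.com", date(2025, 10, 2), 78.0),
    ("example-clinic.com", date(2025, 11, 20), None),  # no valid measurement
]


def latest_valid_metric(rows):
    """Keep the newest record per domain, then drop missing values."""
    latest = {}
    for domain, recorded_on, value in rows:
        if domain not in latest or recorded_on > latest[domain][0]:
            latest[domain] = (recorded_on, value)
    return {d: v for d, (_, v) in latest.items() if v is not None}


domain_rating = latest_valid_metric(records)
print(domain_rating)
```

Only the two domains with a usable latest value remain, and these are the ones that would enter the correlation analysis.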

2. Schema Markup Coverage Analysis

To evaluate whether structured data improves LLM visibility, we merged a large-scale HTML schema dataset that captured schema usage across millions of URLs.

To compute a domain-level score:

- Each cited URL received a schema detection flag indicating whether structured data was present.

- Schema coverage was calculated as the proportion of URLs belonging to each domain that contained valid structured data.

This produced a schema percentage score for every cited domain, reflecting the breadth of structured data implementation across that site. This allowed us to measure whether higher schema adoption correlates with stronger LLM ranking performance.
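The domain-level score can be sketched as a simple ratio. In the example below, the URLs and detection flags are invented, and taking the domain from the URL's host component is an assumption about how URLs were grouped.

```python
from collections import defaultdict
from urllib.parse import urlsplit

# Hypothetical (url, has_schema) detection flags.
url_flags = [
    ("https://example-dentist.com/services", True),
    ("https://example-dentist.com/contact", False),
    ("https://example-dentist.com/locations/austin", True),
    ("https://example-directory.com/plumbers", False),
]


def schema_coverage(flags):
    """Share of each domain's URLs that carry structured data (0.0 to 1.0)."""
    totals = defaultdict(int)
    with_schema = defaultdict(int)
    for url, has_schema in flags:
        domain = urlsplit(url).netloc.lower()
        totals[domain] += 1
        with_schema[domain] += int(has_schema)
    return {d: with_schema[d] / totals[d] for d in totals}


coverage = schema_coverage(url_flags)
for domain, share in coverage.items():
    print(f"{domain}: {share:.0%}")
```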

3. Google Business Profile (GBP) Signal Integration

Many cited domains corresponded to real-world local businesses. To test whether local prominence signals influence LLM ranking, we merged a dedicated GBP dataset that included:

- GBP Score

- Heatmap average rank

- Heatmap keyword coverage

- Citation count

- Posts count

- Review count and average rating

- Review-reply behavior (Reviews replied vs not replied)

- Questions count

All rows missing GBP data or containing zero-value metrics were removed to prevent distortion in the correlation analysis.

This integration enabled us to test whether LLMs implicitly reward businesses with stronger local reputation signals.
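The filtering rule above might look like the following sketch. The field names are hypothetical and cover only a subset of the GBP metrics listed. The sketch also assumes a row is dropped when any single metric is missing or zero, which is one possible reading of the rule.

```python
# Hypothetical subset of GBP metric names used for the completeness check.
GBP_FIELDS = ("gbp_score", "review_count", "average_rating")

rows = [
    {"domain": "a.example", "gbp_score": 72, "review_count": 140, "average_rating": 4.6},
    {"domain": "b.example", "gbp_score": None, "review_count": 12, "average_rating": 4.1},
    {"domain": "c.example", "gbp_score": 55, "review_count": 0, "average_rating": 0.0},
]


def has_complete_gbp(row, fields=GBP_FIELDS):
    """True when every listed metric is present and non-zero."""
    return all(row.get(field) not in (None, 0) for field in fields)


kept = [row["domain"] for row in rows if has_complete_gbp(row)]
print(kept)
```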

4. Semantic Relevance Analysis

To estimate how closely each cited URL aligned with the user’s intent, we performed two steps:

- Generated vector embeddings for both the query text and the scraped page content using modern embedding models.

- Measured cosine similarity between these embeddings to quantify their semantic alignment.

Each citation then received a Semantic Relevance Score, representing how closely the retrieved URL content matched the user’s request.

This allowed us to evaluate how relevance influences ranking across platforms.
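For reference, cosine similarity between two embedding vectors can be computed as in the sketch below. The three-dimensional vectors are toy values. Real embeddings have hundreds or thousands of dimensions, and the embedding model used in the study is not named here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for empty or all-zero embeddings
    return dot / (norm_a * norm_b)


# Toy vectors standing in for a query embedding and a page embedding.
query_vec = [0.2, 0.7, 0.1]
page_vec = [0.25, 0.6, 0.3]
score = cosine_similarity(query_vec, page_vec)
print(round(score, 3))
```

Values near 1 indicate that the two vectors point in almost the same direction, which is what a high Semantic Relevance Score represents.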

5. URL Freshness Analysis

5.1 Web Scraping for Content & Metadata

For every cited URL (excluding homepages), we performed structured scraping to retrieve:

- Readable page text of the cited URL (URL content)

- Publication dates (using trusted metadata fields such as article:published_time, og:published_time, datePublished, and similar standardized publishing tags).

Invalid, missing, or auto-generated timestamps were removed to preserve temporal accuracy.
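A minimal sketch of the date-extraction idea, using only Python's standard library, is shown below. It reads the meta tags named above and discards values that do not parse as ISO 8601 timestamps. It is not the study's scraper: it ignores JSON-LD blocks (where datePublished often appears), and it does not attempt to detect auto-generated timestamps.

```python
from datetime import datetime
from html.parser import HTMLParser

# Meta keys treated as publication-date carriers (compared in lowercase).
DATE_KEYS = {"article:published_time", "og:published_time", "datepublished"}


class PublishedDateParser(HTMLParser):
    """Collects the content of <meta> tags whose key looks like a publish date."""

    def __init__(self):
        super().__init__()
        self.candidates = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: v for k, v in attrs if v is not None}
        key = attr.get("property") or attr.get("name") or attr.get("itemprop") or ""
        if key.lower() in DATE_KEYS and "content" in attr:
            self.candidates.append(attr["content"])


def extract_published(html):
    """Return the first parseable publication datetime, or None."""
    parser = PublishedDateParser()
    parser.feed(html)
    for raw in parser.candidates:
        try:
            # Older Python versions do not accept a trailing "Z".
            return datetime.fromisoformat(raw.strip().replace("Z", "+00:00"))
        except ValueError:
            continue  # invalid timestamp: skip it
    return None


page = '<head><meta property="article:published_time" content="2024-03-05T08:30:00Z"></head>'
print(extract_published(page))
```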

5.2 Freshness Score Calculation & Correlation Analysis

Using the extracted publication date, we computed a freshness score for every valid citation:

Freshness (days) = LLM Citation Timestamp – URL Publication Date

We removed all URLs where our publication-date extraction script failed to retrieve a valid timestamp, resulting in a final sample of 9,805 URL citations with verified publication dates.
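The freshness formula amounts to a date subtraction, as sketched below. The timestamps are made up, and treating a publication date later than the citation as invalid is an assumption added for the example.

```python
from datetime import datetime, timezone


def freshness_days(cited_at, published_at):
    """Content age in whole days at citation time, or None if inconsistent."""
    delta = cited_at - published_at
    if delta.total_seconds() < 0:
        return None  # published after it was cited: treated as invalid here
    return delta.days


cited = datetime(2025, 11, 10, tzinfo=timezone.utc)
published = datetime(2022, 11, 10, tzinfo=timezone.utc)
print(freshness_days(cited, published))  # 1096 (three years, one of them a leap year)
```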

This allowed us to measure the overall distribution of content age, compare recency tendencies across platforms, and determine whether each LLM showed a preference for recent, moderately old, or long-standing sources. Finally, we computed the correlation between freshness and LLM ranking position, with both variables oriented so that a positive correlation means fresher URLs rank higher.

Domain Metrics Analysis

From a total of 104,855 URL citations, we identified 35,385 unique domains, of which 2,878 had valid domain authority metrics available.

We evaluated whether traditional SEO authority metrics (Domain Power, Domain Authority, and Domain Rating) correlate with higher LLM ranking positions.

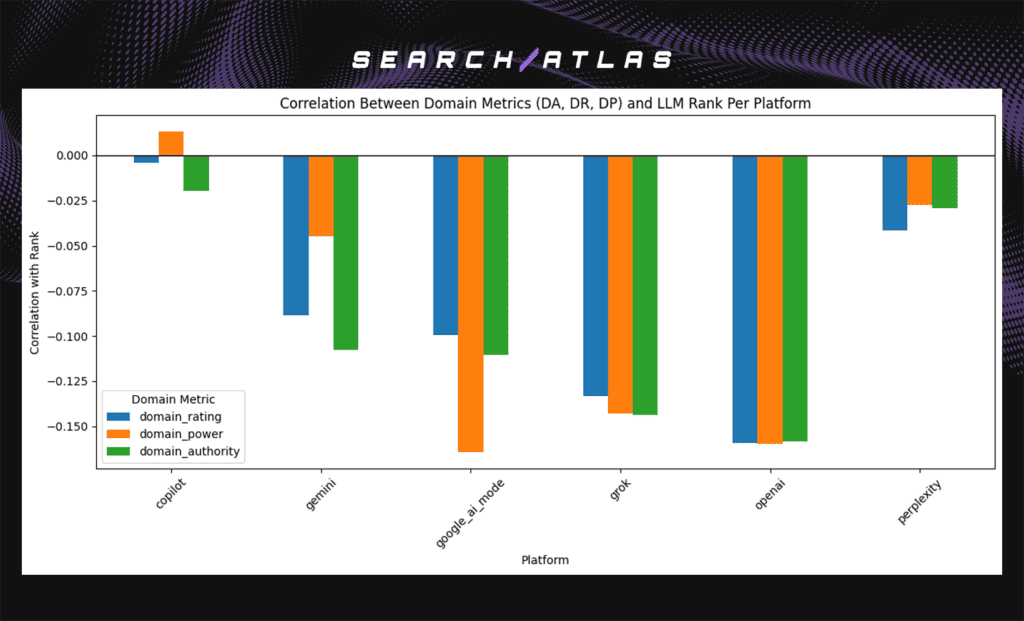

Below is the chart showing how each metric relates to LLM rank for every platform.

Key Insights

- Across most platforms, Domain Authority, Domain Power, and Domain Rating show negative correlations with LLM ranking position, indicating that higher-authority domains do not consistently appear in higher-ranked citation positions.

- OpenAI, Grok, and Google AI Mode exhibit the strongest negative relationships, suggesting that traditional SEO authority signals play a limited role in how these platforms order citations.

- Perplexity shows a weaker but still negative association, implying that domain authority has some influence but is not a dominant ranking signal.

- Overall, LLM citation ranking appears largely decoupled from classical domain authority metrics, with ranking behavior likely driven more by semantic relevance, retrieval context, or query-level factors than by domain strength.

Schema Markup Analysis

We analyzed schema presence across 62,465 URL citations that successfully matched to domains with available schema coverage data and evaluated whether structured data correlates with improved ranking in LLM-generated results.

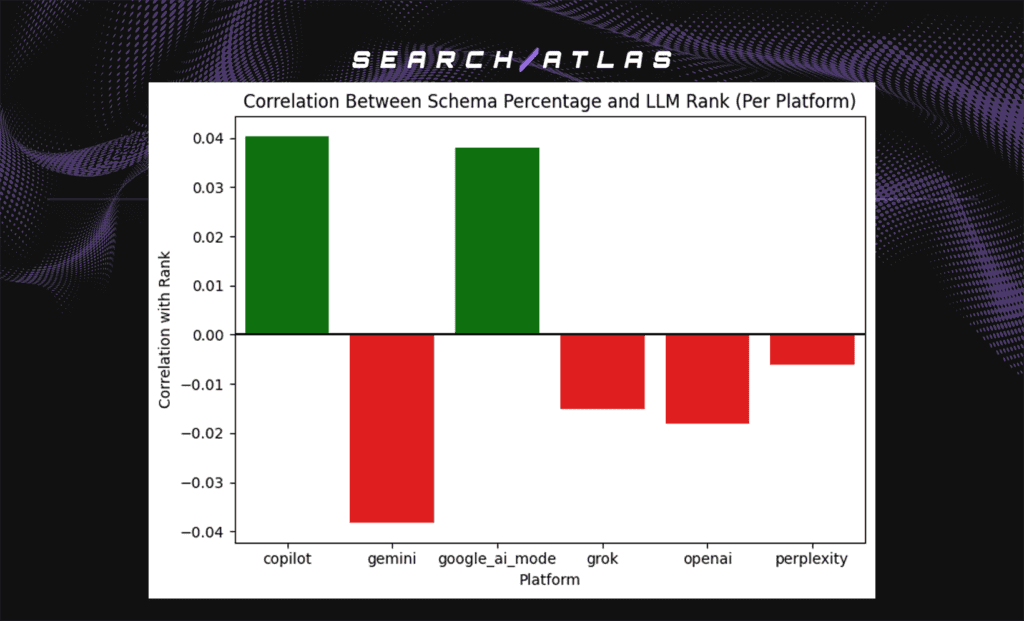

The chart below shows the correlation between domain-level schema usage and LLM ranking performance.

Key Insights

- Schema usage shows only a weak relationship with LLM ranking across all platforms, with correlations clustered close to zero. This indicates that structured data is not a primary ranking signal in LLM-generated responses.

- Foundational LLM platforms (OpenAI, Gemini, Grok, and Perplexity) show neutral to negative correlations, indicating that schema markup provides little to no ranking advantage where citation ordering is driven primarily by factors other than search-style signals.

- Copilot and Google AI Mode are the only platforms showing small positive correlations, suggesting that domains with broader schema adoption are slightly more likely to rank higher on these systems.

- This divergence likely reflects system design differences: Copilot and Google AI Mode are tightly integrated with traditional search infrastructure (Bing and Google Search, respectively), where structured metadata (schema) has historically influenced indexing and ranking.

- Overall, schema markup does not function as a consistent or decisive ranking factor in LLM responses. Any observed positive effects appear limited to platforms operating within hybrid LLM + search engine environments, and correlations remain minimal in both directions (approximately –0.04 to +0.04).

Google Business Profile (GBP) Signal Analysis

We merged the URL citation dataset with Google Business Profile (GBP) data, yielding 14,300 URL citations with business-level GBP metrics including heatmap rank, keyword count, citation count, review volume, review quality, reply behavior, and posting frequency. This allowed us to evaluate whether local prominence signals influence LLM ranking.

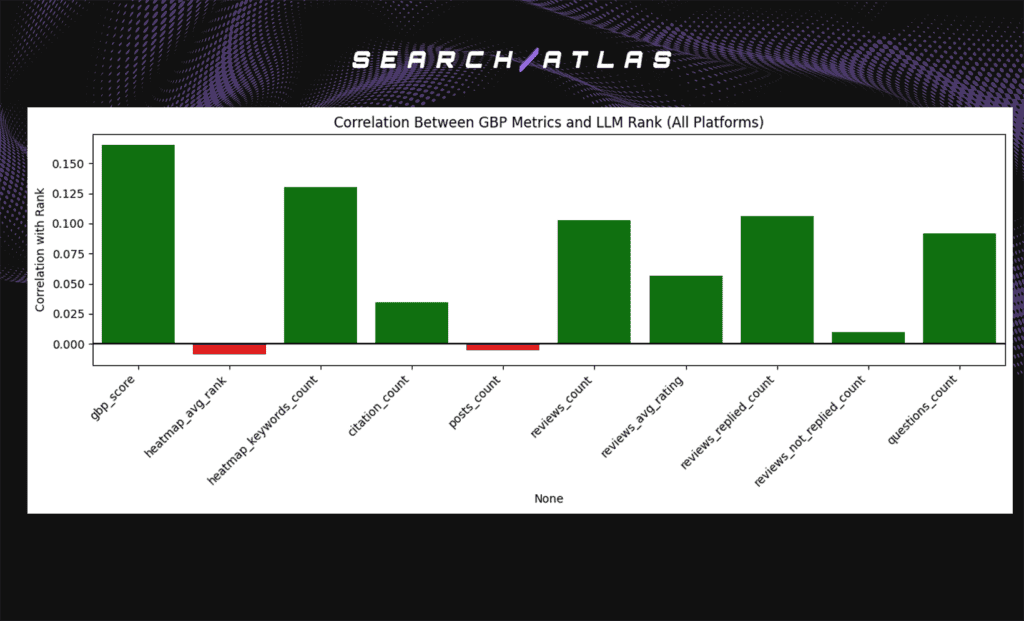

The chart below summarizes how GBP-derived local prominence signals correlate with LLM citation ranking across all platforms.

Key Insights

- GBP Score shows the strongest positive correlation (~+0.16), making it the most informative GBP signal in this analysis. This suggests that overall GBP profile quality has a modest association with better LLM citation ranking.

- Review and engagement signals show small positive correlations. Review count (~+0.10), replied reviews (~+0.11), and average rating (~+0.06) are all slightly positive, indicating LLMs slightly favor businesses with active, well-managed profiles.

- Posting activity and citation count have limited influence. Posts count (~–0.01) and citation count (~+0.03) show near-zero correlations, suggesting minimal impact on ranking.

- Traditional local SEO visibility signals contribute little or negatively. Heatmap average rank and posts count show weak negative correlations, implying that map-based visibility does not translate directly into LLM ranking behavior.

- Overall effect sizes are small. All correlations fall within a narrow range (approximately –0.01 to +0.16), indicating that GBP signals provide supplementary context rather than decisive ranking power in LLM-generated results.

Semantic Relevance Analysis

We generated vector embeddings for both the LLM query text and the content of each cited webpage, then measured the cosine similarity between them.

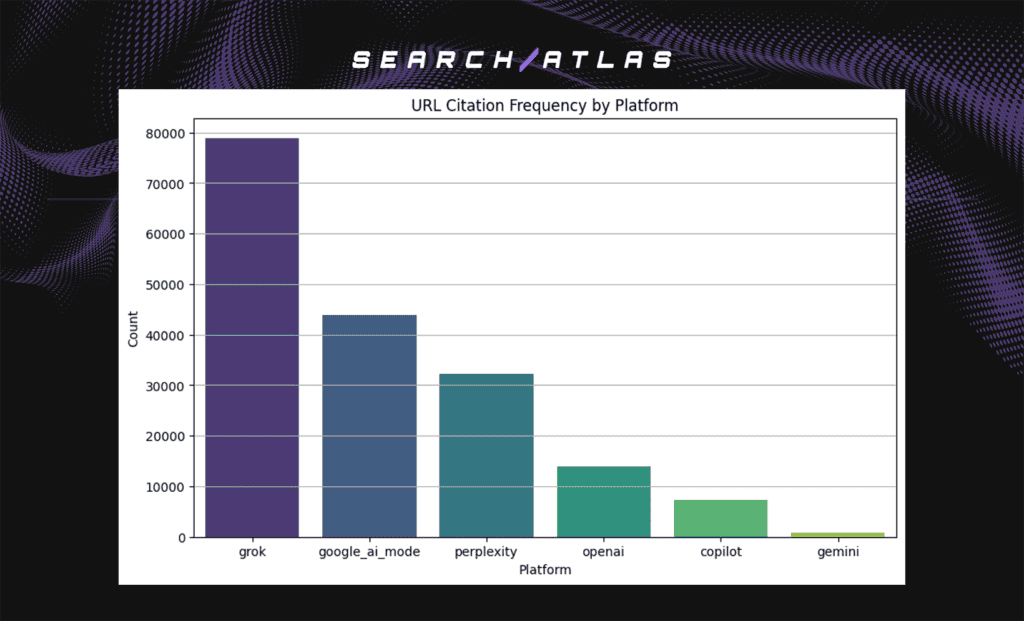

URL Citation Frequency by Platform

The chart below shows how frequently each platform appears in the citation sample dataset.

Distribution of Semantic Relevance

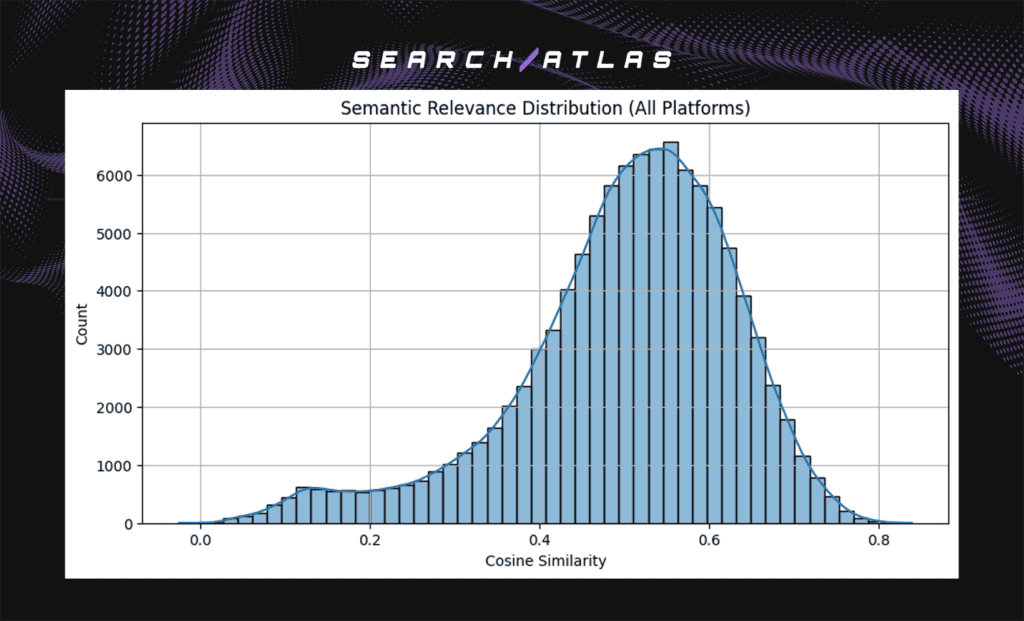

The chart below shows the distribution of semantic relevance scores across all LLM platforms.

From the distribution above, we observe a single dominant cluster:

- A large concentration of citations between approximately 0.35 and 0.75, representing URLs that are meaningfully relevant to the user query.

In addition to the main cluster, the distribution exhibits a gradual left tail extending toward lower relevance scores, rather than a distinct secondary cluster.

Further analysis shows that this left-skewed tail is driven primarily by cases where LLMs cite homepage-level or domain-level URLs, rather than specific article or landing pages.

Homepage citations often contain broad navigational, branding, or multi-topic content, which dilutes their semantic alignment with narrowly defined queries and results in lower cosine similarity scores.

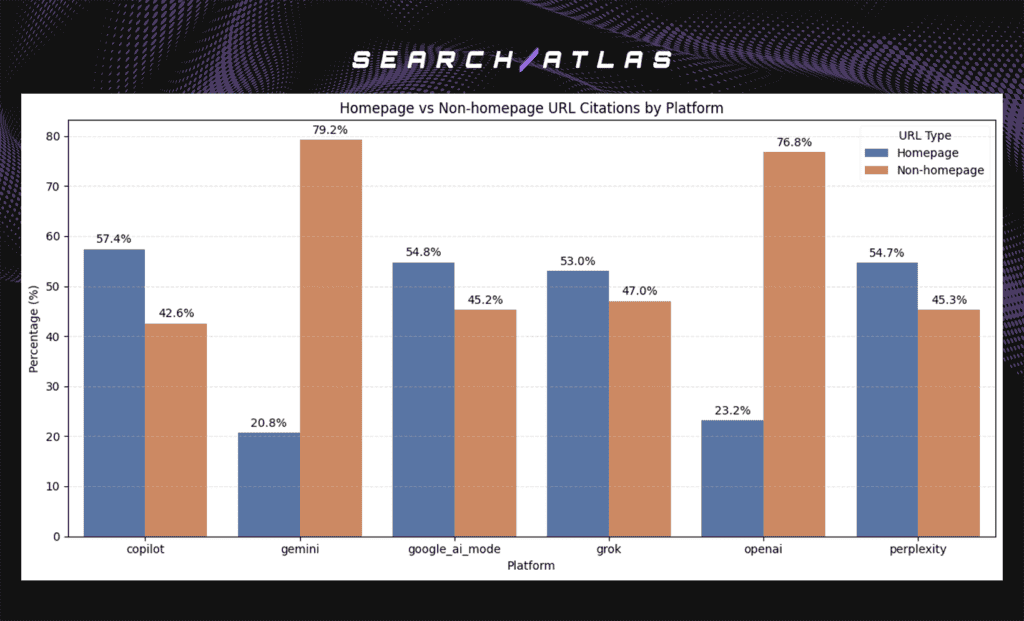

To illustrate this effect, the chart below shows the proportion of homepage versus non-homepage URL citations retrieved by each platform.

Homepage vs Non-homepage URL Citations by Platform

The chart reveals clear differences in citation granularity across platforms:

- Gemini and OpenAI retrieve a substantially higher share of non-homepage URLs, indicating a stronger tendency to cite deeper, page-specific content that is closely aligned with the query intent.

- Copilot and Google AI Mode show a more balanced mix of homepage and non-homepage citations, reflecting a combination of domain-level authority signals and page-level relevance.

- Perplexity retrieves a relatively higher share of homepage citations, consistent with its tendency to surface authoritative domains rather than individual articles.
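The homepage versus non-homepage split can be approximated with a path check, as in the sketch below. Defining a homepage as a URL whose path is empty or "/" (ignoring any query string) is an assumption made for the example, and the platform labels and URLs are placeholders.

```python
from collections import Counter
from urllib.parse import urlsplit


def is_homepage(url):
    """Treat a URL as a homepage when its path is empty or just '/'."""
    return urlsplit(url).path.strip("/") == ""


def homepage_share(citations):
    """Fraction of homepage citations per platform."""
    totals, homepages = Counter(), Counter()
    for platform, url in citations:
        totals[platform] += 1
        homepages[platform] += int(is_homepage(url))
    return {p: homepages[p] / totals[p] for p in totals}


# Placeholder citations: (platform label, cited URL).
citations = [
    ("platform_a", "https://example-plumbing.com/"),
    ("platform_a", "https://example-plumbing.com/services/drain-cleaning"),
    ("platform_b", "https://example-directory.com"),
    ("platform_b", "https://example-clinic.com/?utm_source=assistant"),
]
print(homepage_share(citations))
```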

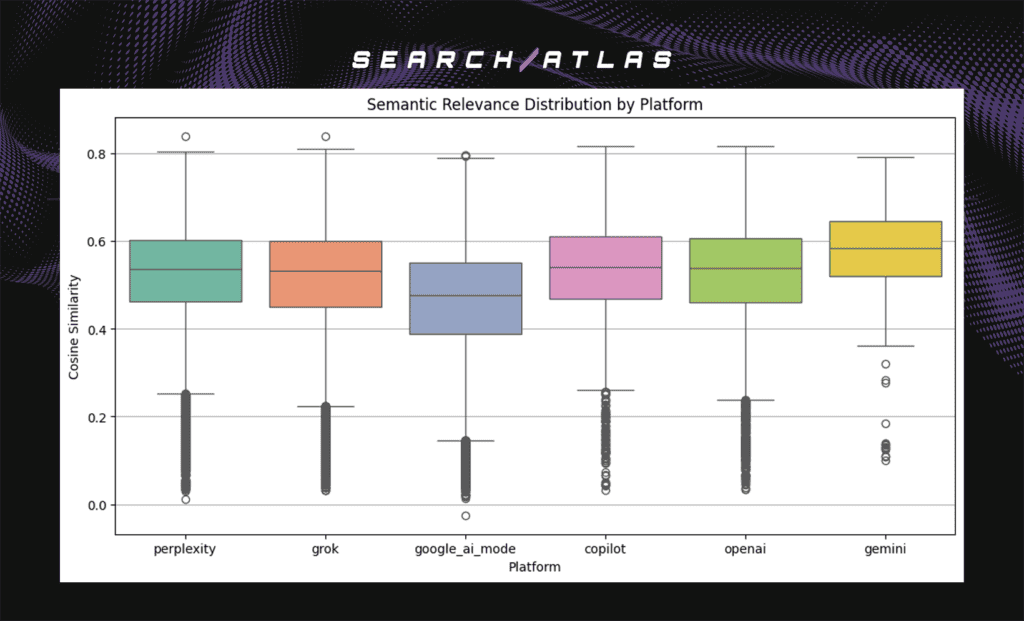

Semantic Relevance Distribution by Platform

Below is a chart illustrating the distribution of semantic relevance scores across all platforms, highlighting differences in how consistently each LLM retrieves relevant content.

Key Insights

- Semantic relevance scores cluster between 0.40 and 0.70 across all platforms, indicating generally strong alignment between cited URLs and user queries.

- Gemini has the highest median relevance, reflecting a stronger tendency to surface highly relevant citations.

- OpenAI and Copilot show the most consistent relevance, with narrow interquartile ranges centered in the mid-to-high relevance band (~0.50–0.60).

- Perplexity and Grok exhibit greater spread and heavier lower tails, indicating less consistent citation relevance.

- Google AI Mode exhibits a lower median relevance with moderate dispersion, indicating weaker average alignment rather than higher variability.

- Low-relevance outliers (≈0.00–0.20) occur on all platforms, driven by domain- or homepage-level citations rather than content-specific URLs.
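The median and interquartile range referred to in these insights can be computed per platform as sketched below. The scores and platform labels are invented, and the quartile method is Python's default for `statistics.quantiles`, which may differ from the one behind the chart.

```python
from collections import defaultdict
from statistics import median, quantiles

# Invented relevance scores: (platform label, cosine similarity).
scores = [
    ("platform_a", 0.42), ("platform_a", 0.50), ("platform_a", 0.55),
    ("platform_a", 0.58), ("platform_a", 0.61),
    ("platform_b", 0.10), ("platform_b", 0.35), ("platform_b", 0.52),
    ("platform_b", 0.66), ("platform_b", 0.71),
]


def summarize(rows):
    """Median and interquartile range of relevance scores per platform."""
    grouped = defaultdict(list)
    for platform, score in rows:
        grouped[platform].append(score)
    summary = {}
    for platform, values in grouped.items():
        q1, _, q3 = quantiles(values, n=4)  # the three quartile cut points
        summary[platform] = {"median": median(values), "iqr": q3 - q1}
    return summary


summary = summarize(scores)
for platform, stats in summary.items():
    print(platform, stats)
```

A narrow IQR corresponds to the "consistent relevance" described above, while a wide IQR with a low first quartile corresponds to a heavier lower tail.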

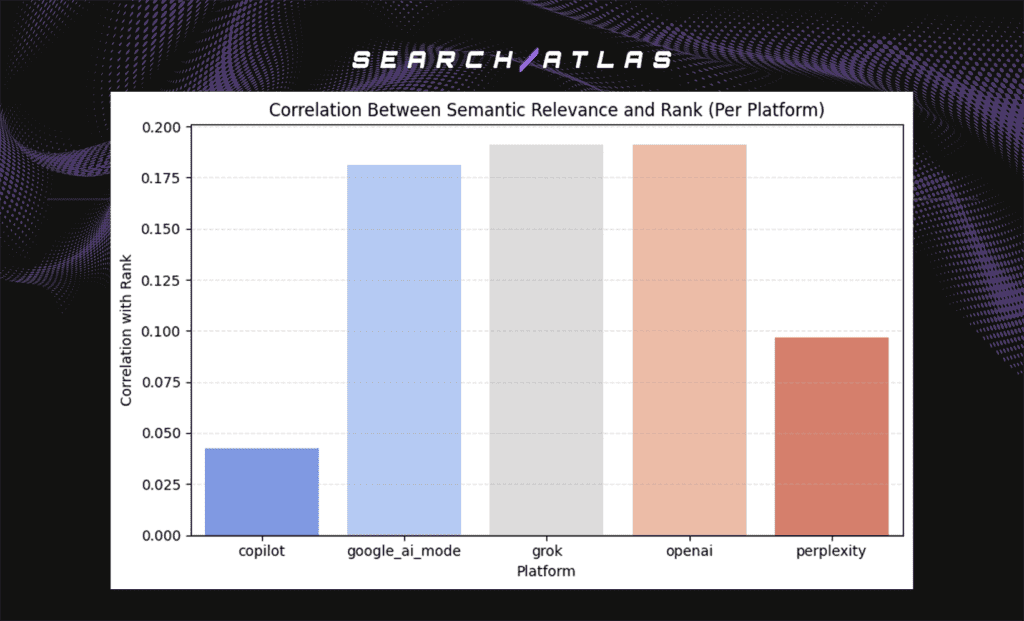

Correlation Between Semantic Relevance and LLM Rank

To determine whether LLMs rank more relevant citations higher, we measured the correlation between each citation’s Semantic Relevance Score and its LLM-assigned rank. Gemini was excluded from this analysis due to its low volume of valid webpage citations from our sample data.

Below is the chart showing the correlation between semantic relevance and ranking position for each platform.

Key Insights

- OpenAI, Grok, and Google AI Mode exhibit the strongest positive correlations, at similar levels (approximately 0.18–0.19), indicating that all three platforms tend to rank more semantically relevant citations higher. No single platform clearly outperforms the others in this regard.

- This similarity suggests a shared ranking behavior across these platforms, where semantic relevance plays a meaningful and comparable role in citation ordering.

- Perplexity shows a weaker but still positive correlation (~0.10), indicating that relevance influences ranking, but less strongly than for OpenAI, Grok, or Google AI Mode.

- Copilot exhibits the weakest correlation (~0.04), suggesting that semantic relevance has a relatively limited influence on how citations are ranked, with other signals likely playing a larger role.
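A per-platform correlation of this kind can be sketched as follows. Two points are assumptions, since the study does not state them for this analysis: the coefficient used here is Spearman's rank correlation, and the rank position is negated so that a positive value means more relevant citations sit higher in the list. The sample rows are invented.

```python
import math
from collections import defaultdict


def average_ranks(values):
    """1-based ranks, with ties receiving the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / math.sqrt(vx * vy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def relevance_rank_correlation(citations):
    """citations: iterable of (platform, relevance_score, rank_position)."""
    by_platform = defaultdict(lambda: ([], []))
    for platform, relevance, position in citations:
        rel, inverted = by_platform[platform]
        rel.append(relevance)
        inverted.append(-position)  # negate: larger now means ranked higher
    return {p: spearman(rel, inv) for p, (rel, inv) in by_platform.items()}


sample = [
    ("platform_a", 0.80, 1), ("platform_a", 0.60, 2), ("platform_a", 0.40, 3),
    ("platform_b", 0.30, 1), ("platform_b", 0.50, 2), ("platform_b", 0.70, 3),
]
result = relevance_rank_correlation(sample)
print(result)
```

In the toy data, platform_a orders citations exactly by relevance and platform_b does the opposite, so the two coefficients land at the extremes of the scale. Real data, as reported above, sits much closer to zero.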

URL Freshness Analysis

For this analysis, we only included URLs with valid publication dates, meaning actual content pages (not homepages or non-HTML assets such as images or icons).

This ensures that the analysis reflects real webpage citations where a publication timestamp could be extracted. In total, we successfully retrieved 9,771 valid URL publication dates from the dataset.

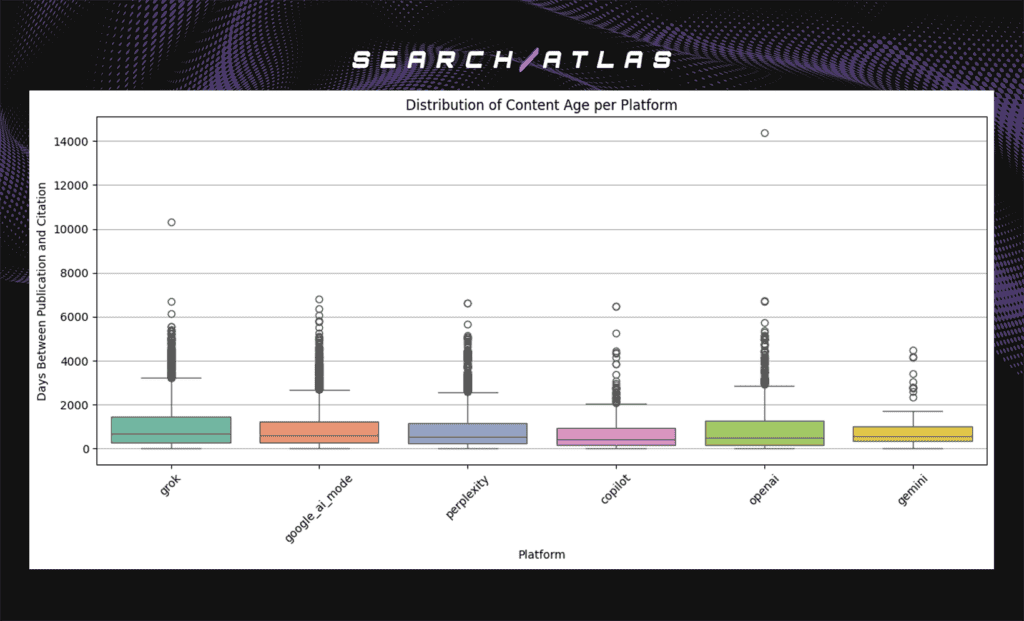

Below is the chart showing the Distribution of Content Freshness (Age) per Platform, measured as the number of days between each URL’s publication date and its citation by the LLM.

Distribution of Content Freshness (Age) per Platform

Key Insights

- Gemini cites the freshest URLs overall, indicating stronger reliance on recently published content.

- OpenAI and Grok often cite fresh pages but also reference older, evergreen URLs, suggesting their retrieval balances recent content with established, long-standing sources.

- Perplexity, Copilot, and Google AI Mode show a balanced freshness range, combining both recent and established web sources.

- Across platforms, the median content age typically falls within 3–4 years, with a long tail of much older citations indicating occasional reliance on legacy web material.

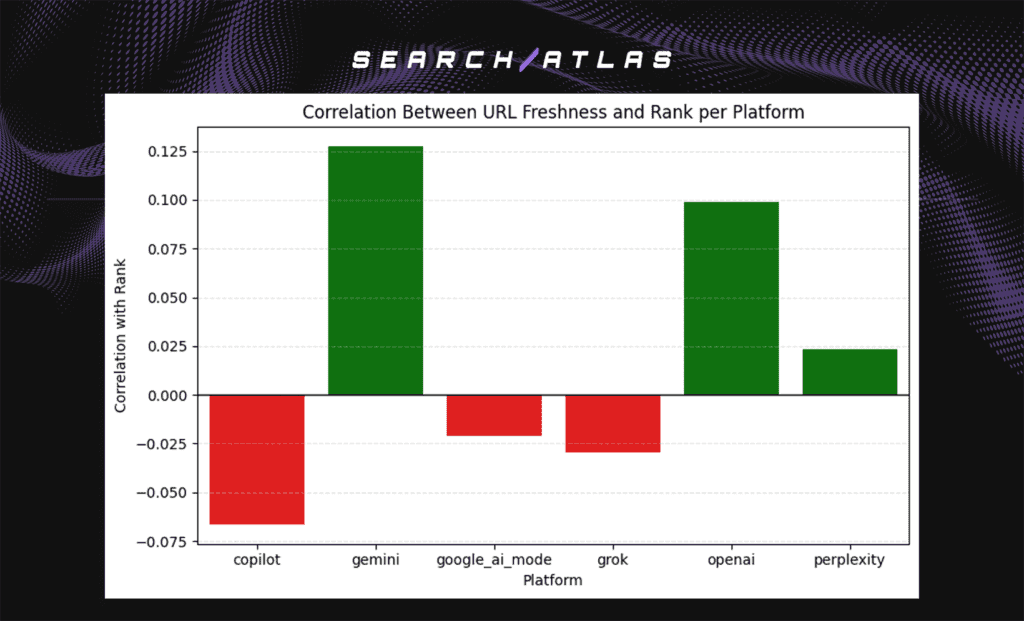

Correlation Between URL Freshness and Rank per Platform

For this analysis, we measured whether fresher URLs (recently published pages) tend to appear higher in each LLM’s ranking.

Below is the chart showing the correlation between URL freshness and ranking across LLM platforms.

Key Insights

- Gemini shows the strongest positive correlation (~0.13), indicating it most consistently ranks fresher URLs higher, favoring recent web content.

- OpenAI also shows a positive correlation (~0.09), suggesting moderate preference for recent pages, though not as strong as Gemini.

- Perplexity shows a weak positive correlation (~0.02), implying a slight bias toward URL freshness, but other factors likely dominate its ranking logic.

- Copilot, Grok, and Google AI Mode show negative correlations, indicating they do not prioritize freshness, and their top-ranked citations often include older or evergreen pages.

Conclusion

Across all analyses, local LLM ranking behavior is driven primarily by semantic relevance, with freshness and traditional SEO signals playing secondary, platform-dependent roles.

Semantic relevance shows the strongest and most consistent relationship with ranking. OpenAI, Grok, and Google AI Mode display similar positive alignment between relevance and rank, indicating that citation ordering is meaningfully influenced by how closely content matches query intent. Perplexity shows weaker alignment, while Copilot exhibits minimal sensitivity to semantic relevance.

Traditional authority signals and structured data play a limited role. Domain authority metrics and schema markup show weak or inconsistent correlations with ranking, suggesting they are not decisive factors in LLM citation ordering.

Freshness effects vary by platform. Gemini shows the strongest preference for recently published content, while OpenAI and Grok balance newer pages with established evergreen sources. Other platforms show limited or inconsistent freshness bias.

Google Business Profile signals show modest influence. Composite and quality-oriented GBP metrics correlate slightly with higher rankings, but effect sizes remain small, indicating that GBP acts as supporting context rather than a primary ranking driver.

Overall, LLMs primarily reward semantic alignment, with limited secondary influence from credibility-related signals, marking a shift toward rankings driven by contextual relevance rather than traditional SEO link equity.