1. Executive Summary

This white paper evaluates the performance of OTTO-generated Google Ads campaigns against their non-OTTO counterparts using data from the otto_ppc database, which contains records from thousands of Google Ads accounts. The goal is to determine whether OTTO-generated campaigns deliver superior click-through rates (CTR) and lower average cost-per-click (Avg CPC)—two critical metrics in paid search performance.

Our analysis uses a structured data science approach to compare campaign, ad group, and ad-level performance across two periods: before and after OTTO's keyword-generation update of April 22, 2025. For each period, we compute descriptive statistics (mean, median, min, max) and the percentage difference (ΔCTR%, ΔCPC%) between OTTO and non-OTTO campaigns. We then use Wilcoxon rank-sum tests to assess whether the observed differences are statistically significant.

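Key findings indicate that OTTO-generated campaigns consistently achieve higher CTRs than non-OTTO benchmarks, suggesting stronger audience engagement, but they also incur higher average CPCs. After the update, both gaps narrowed: OTTO's CTR advantage fell from +17.86% to +10.46%, and its CPC premium fell from +51.29% to +25.23%. Within OTTO campaigns, average CPC dropped by about 9% while CTR declined by about 6%. This indicates that the update improved cost-efficiency at a modest cost in engagement.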

These results provide quantifiable evidence that the OTTO campaign generation system adds value to advertiser accounts through stronger engagement. However, its higher CPCs and its not-yet-measured conversion impact warrant continued monitoring. We recommend continued development and scaling of OTTO automation, with an emphasis on tracking post-launch effects to guide iterative improvements.

2. Introduction

In the fast-paced world of digital marketing, data-driven decision-making is essential to optimizing performance and delivering value to clients. This applies to many of the paid channels businesses use to drive visibility, including Google Ads, where billions of dollars are spent annually in pursuit of customer attention.

As automation and AI-driven campaign creation become increasingly common, advertisers are turning to platforms that promise not just efficiency, but superior outcomes. OTTO, an AI-powered campaign generation product developed by Search Atlas, is one such platform that aims to transform and automate paid media management at scale.

Why This Matters:

Despite the rise of automation tools, many advertisers remain skeptical about whether machine-generated campaigns can match—or outperform—the results of manual human curation. In this context, it becomes essential to validate the performance of OTTO-generated campaigns with empirical evidence. The critical question is straightforward: Do OTTO campaigns deliver better results than non-OTTO campaigns?

This white paper addresses this question using comprehensive Google Ads performance data stored in the otto_ppc PostgreSQL database. Drawing on thousands of campaigns across multiple advertiser accounts, we examine campaign, ad group, and ad-level performance—focusing specifically on Click-Through Rate (CTR) and Average Cost-Per-Click (Avg CPC), two cornerstone metrics in paid search advertising.

We structure our analysis in three phases:

- OTTO vs. Non-OTTO (Before Update)

- OTTO vs. Non-OTTO (After Update)

- OTTO After vs. OTTO Before

Each phase includes summary statistics (mean, median, max, min), percentage differences (ΔCTR%, ΔCPC%), and statistical significance testing. This layered approach allows us not only to benchmark OTTO’s effectiveness against standard practices but also to evaluate the tangible impact of recent product improvements.

What You Will Learn:

- How OTTO-generated campaigns perform relative to manually created campaigns

- Whether recent updates to OTTO improved ad performance

- The statistical rigor behind these findings

- Strategic recommendations for product and marketing teams

By the end of this white paper, readers will have a clear understanding of OTTO’s impact on Google Ads performance and the value it brings to advertisers operating in a competitive digital landscape.

3. Methodology

Data Collection and Sources

Our analysis was conducted using performance data extracted from the otto_ppc PostgreSQL database, which contains Google Ads account records across thousands of advertiser accounts. The primary table used was google_ads_campaign, which stores weekly performance snapshots per campaign, including metrics such as clicks, impressions, ctr, average_cpc, and conversions.

To access and query the data, we used the R programming language, connecting securely via RPostgres. We extracted the relevant fields and parsed the weekly_performance_data JSON field into a structured tabular format. Each campaign entry was tagged with its origin, indicating whether it was generated by OTTO (“OTTO_CREATED”) or by Google (“GOOGLE_CREATED”).

We filtered out records with missing or zero impressions or clicks, because CTR is undefined without impressions and CPC is undefined without clicks. Weekly records were retained for further segmentation and time-based comparison.
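The extraction and filtering steps above were implemented in R. The following minimal Python sketch shows the same logic, for illustration only. It assumes that `weekly_performance_data` is a JSON array of weekly objects. The per-week keys (`week_start`, `impressions`, `clicks`, `cost`), the `id` and `origin` campaign fields, and the helper name `expand_weekly_rows` are hypothetical, because the actual schema is not documented here.

```python
import json
from typing import Any


def expand_weekly_rows(campaign: dict[str, Any]) -> list[dict[str, Any]]:
    """Expand one campaign's weekly JSON into flat rows (hypothetical schema),
    dropping weeks with missing or zero impressions or clicks."""
    weeks = json.loads(campaign.get("weekly_performance_data") or "[]")
    rows = []
    for week in weeks:
        impressions = week.get("impressions")
        clicks = week.get("clicks")
        # `not x` is True for both None and 0, so CTR and CPC stay defined.
        if not impressions or not clicks:
            continue
        rows.append({
            "campaign_id": campaign["id"],
            "origin": campaign["origin"],  # "OTTO_CREATED" or "GOOGLE_CREATED"
            "week_start": week.get("week_start"),
            "impressions": impressions,
            "clicks": clicks,
            "cost": week.get("cost"),
        })
    return rows


# Synthetic example: the second week has no impressions and is dropped.
campaign = {
    "id": 1,
    "origin": "OTTO_CREATED",
    "weekly_performance_data": json.dumps([
        {"week_start": "2025-04-14", "impressions": 1000, "clicks": 60, "cost": 240.0},
        {"week_start": "2025-04-21", "impressions": 0, "clicks": 0, "cost": 0.0},
    ]),
}
rows = expand_weekly_rows(campaign)
```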

Data Preprocessing

To make the statistical comparisons more robust and limit the influence of outliers, we applied the Interquartile Range (IQR) method to both CTR and CPC. A campaign record was retained only if its metrics fell between Q1 − 1.5 × IQR and Q3 + 1.5 × IQR. Here, Q1 and Q3 are the first and third quartiles, and IQR = Q3 − Q1.
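The following minimal Python sketch implements the IQR rule, assuming Tukey's fences with k = 1.5. `statistics.quantiles` with `method="inclusive"` uses the same linear interpolation as R's default `quantile()` (type 7), so the fences should agree with an R implementation. The paper does not state whether the fences were computed on pooled data or separately per origin and period. The helper names `iqr_bounds` and `filter_iqr` are hypothetical.

```python
import statistics


def iqr_bounds(values: list[float], k: float = 1.5) -> tuple[float, float]:
    """Return Tukey's fences (Q1 - k*IQR, Q3 + k*IQR)."""
    # "inclusive" = linear interpolation between order statistics (R type 7).
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def filter_iqr(values: list[float], k: float = 1.5) -> list[float]:
    """Keep only the values that fall inside the fences (inclusive)."""
    lo, hi = iqr_bounds(values, k)
    return [v for v in values if lo <= v <= hi]
```

For example, `filter_iqr([1, 2, 3, 4, 5, 6, 7, 8, 100])` has fences of −3 and 13, so it drops the 100. For the paper's purposes, the rule would be applied to CTR and CPC separately, and a record would be kept only if both values pass.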

For each retained campaign record, we extracted:

- Click-Through Rate (CTR) = clicks / impressions

- Average Cost-Per-Click (Avg CPC) = cost / clicks
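The two metric definitions translate directly into code. The function names below are illustrative, and the example record (60 clicks from 1,000 impressions at a cost of 240€) is made up.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction: clicks / impressions."""
    return clicks / impressions


def avg_cpc(cost: float, clicks: int) -> float:
    """Average cost-per-click in the account currency: cost / clicks."""
    return cost / clicks


example_ctr = ctr(60, 1000)       # 0.06, i.e. 6%
example_cpc = avg_cpc(240.0, 60)  # 4.0 currency units per click
```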

We grouped the campaigns by origin to compute descriptive statistics: mean, median, min, and max. This process was conducted for multiple time windows to support comparison before and after OTTO’s keyword strategy update on April 22, 2025.
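The grouping step can be sketched as follows, assuming each weekly record carries an origin tag, a week start date, and precomputed metrics. The following are all assumptions rather than details taken from the original R code: treating a week that starts on April 22, 2025 as "after" the update, the field names, and the function names. The sample rows are synthetic.

```python
import statistics
from collections import defaultdict
from datetime import date

# Date of OTTO's keyword strategy update.
UPDATE_DATE = date(2025, 4, 22)


def period_of(week_start: date) -> str:
    """Label a weekly record as before/after the update.
    Assumption: a week starting on the update date counts as "after"."""
    return "after" if week_start >= UPDATE_DATE else "before"


def describe(rows: list[dict], metric: str) -> dict[tuple[str, str], dict[str, float]]:
    """Mean, median, min and max of `metric` for each (origin, period) group."""
    groups: dict[tuple[str, str], list[float]] = defaultdict(list)
    for row in rows:
        groups[(row["origin"], period_of(row["week_start"]))].append(row[metric])
    return {
        key: {
            "mean": statistics.mean(values),
            "median": statistics.median(values),
            "min": min(values),
            "max": max(values),
        }
        for key, values in groups.items()
    }


rows = [
    {"origin": "OTTO_CREATED", "week_start": date(2025, 4, 14), "ctr": 0.25},
    {"origin": "OTTO_CREATED", "week_start": date(2025, 4, 14), "ctr": 0.75},
    {"origin": "OTTO_CREATED", "week_start": date(2025, 4, 28), "ctr": 0.5},
    {"origin": "GOOGLE_CREATED", "week_start": date(2025, 4, 22), "ctr": 0.25},
]
summary = describe(rows, "ctr")
# summary[("OTTO_CREATED", "before")] -> {"mean": 0.5, "median": 0.5, "min": 0.25, "max": 0.75}
```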

Analytical Methods

To evaluate performance differences, we used a combination of descriptive statistics and non-parametric hypothesis testing.

1. CTR and CPC Comparison: OTTO vs Google (Before Update)

We compared OTTO-generated campaigns with their Google-created counterparts using summary statistics and Wilcoxon rank-sum tests. This non-parametric test is appropriate because CTR and CPC distributions are skewed rather than normal.
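For readers who want to reproduce the test outside R, here is a minimal, standard-library-only Python sketch of the two-sided Wilcoxon rank-sum test. It uses the large-sample normal approximation with tie and continuity corrections, which is what R's `wilcox.test` falls back to for large samples or tied data. In Python, the library equivalent is `scipy.stats.mannwhitneyu`. The function name `rank_sum_test` is hypothetical. Like the test itself, the sketch assumes the two samples consist of independent observations.

```python
import math
from typing import Sequence


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (W, p_value). W is the rank sum of `x` minus n1*(n1+1)/2,
    i.e. the Mann-Whitney U statistic reported by R's wilcox.test.
    Uses a tie-corrected variance and a 0.5 continuity correction.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool both samples, remembering which sample each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average of the 1-based ranks i+1..j+1
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    w = r1 - n1 * (n1 + 1) / 2
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:
        raise ValueError("variance is zero (all values tied)")
    diff = w - n1 * n2 / 2
    correction = math.copysign(0.5, diff) if diff != 0 else 0.0
    z = (diff - correction) / math.sqrt(var_w)
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return w, math.erfc(abs(z) / math.sqrt(2))
```

For example, `rank_sum_test([1, 2, 3, 4, 5], [6, 7, 8, 9, 10])` gives W = 0 and p ≈ 0.012. With thousands of weekly records per group, even small shifts in location produce very small p-values.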

2. CTR and CPC Comparison: OTTO vs Google (After Update)

The same approach was applied to campaigns active after April 22, 2025. We calculated the percent difference in CTR and CPC between OTTO and Google groups and tested for statistical significance.
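The paper does not spell out the percent-difference formula. This sketch assumes the natural reading: the relative change against the comparison group, which is the Google-created campaigns (or, for the OTTO-only comparison, OTTO before the update). It is also unclear whether means or medians were used. Applied to the rounded OTTO CPC means reported in the results (5.87€ before, 5.34€ after), the formula gives about −9.03%, which matches the reported −9.04% up to rounding. The function name `pct_diff` is hypothetical.

```python
def pct_diff(value: float, baseline: float) -> float:
    """Percentage difference of `value` relative to `baseline`.

    Assumed definition: (value - baseline) / baseline * 100.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (value - baseline) / baseline * 100


# OTTO average CPC before (5.87) vs after (5.34) the April 22 update.
delta_cpc = pct_diff(5.34, 5.87)  # about -9.03
```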

3. OTTO Campaign Performance: Before vs After Update

To isolate the impact of OTTO’s internal keyword generation improvements, we directly compared OTTO-created campaigns before and after April 22. This allowed us to understand if the update led to measurable performance changes. We again relied on the Wilcoxon test to assess significance.

All comparisons were visualized using boxplots, density plots, and bar charts, giving stakeholders intuitive insights into the distribution and central tendencies of the metrics.

Justification for Approach

Our methodology emphasizes transparency, repeatability, and statistical rigor. CTR and CPC typically follow skewed distributions, which makes parametric tests such as the t-test less suitable. We therefore used the Wilcoxon rank-sum test, a non-parametric alternative, to assess the significance of observed differences.

Moreover, using the IQR method to exclude outliers reduces the risk that conclusions are driven by extreme, potentially unrepresentative data points.

In combination, these methods provide a statistically grounded and business-relevant analysis of OTTO’s advertising performance.

4. Analysis and Results

1. CTR Comparison: OTTO vs Google-Created Campaigns

Context: Campaigns with impressions > 0 and clicks > 0.

Summary Stats

Key Insight:

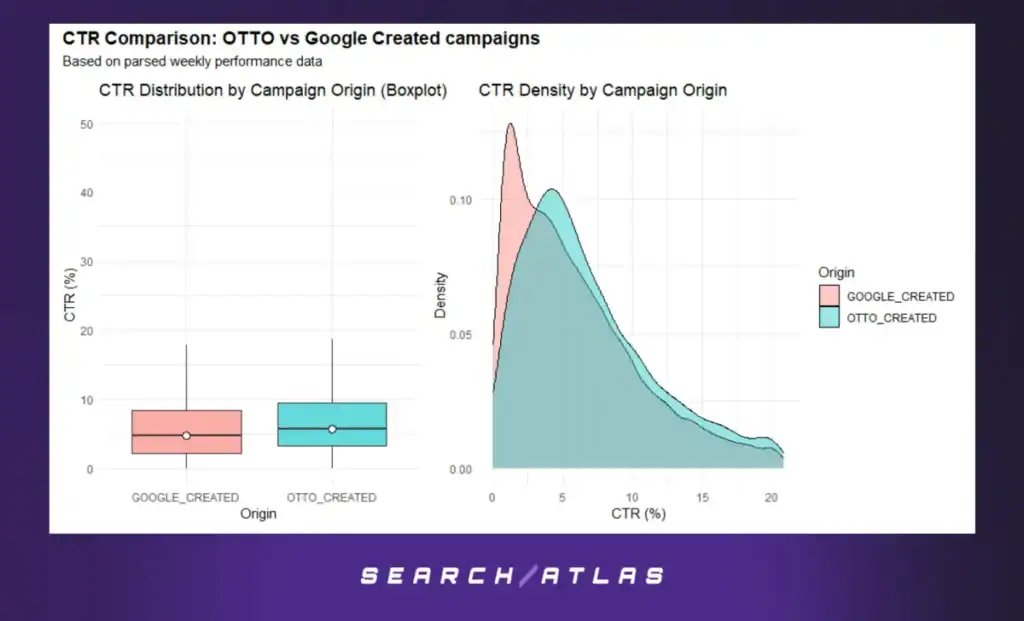

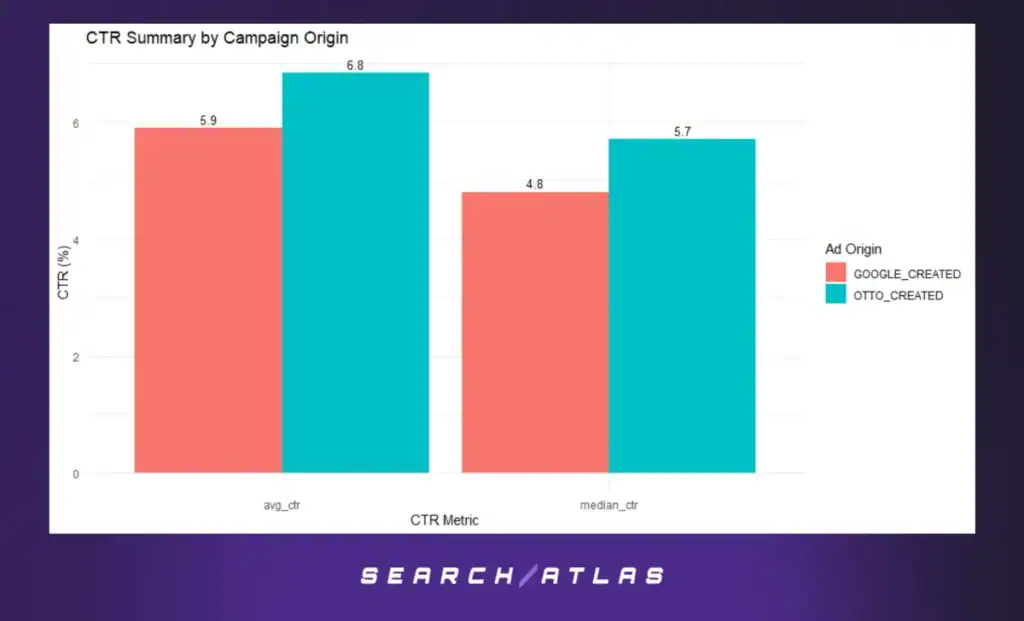

OTTO campaigns outperformed Google campaigns in both mean and median CTR.

- OTTO Avg CTR: 6.8% vs Google Avg CTR: 5.9%

- OTTO Median CTR: 5.7% vs Google Median CTR: 4.8%

Boxplot & Density

- OTTO campaigns show a higher and more stable CTR distribution.

- Google-created campaigns peak at lower CTR values, with more of their distribution concentrated at low CTRs.

Figure: Dual boxplot and density chart of CTR, OTTO vs Google-created campaigns.

Statistical Test

- Wilcoxon test: p-value < 2.2e-16

Statistically significant difference in CTR between OTTO and Google.

2. CPC Comparison: OTTO vs Google-Created Campaigns

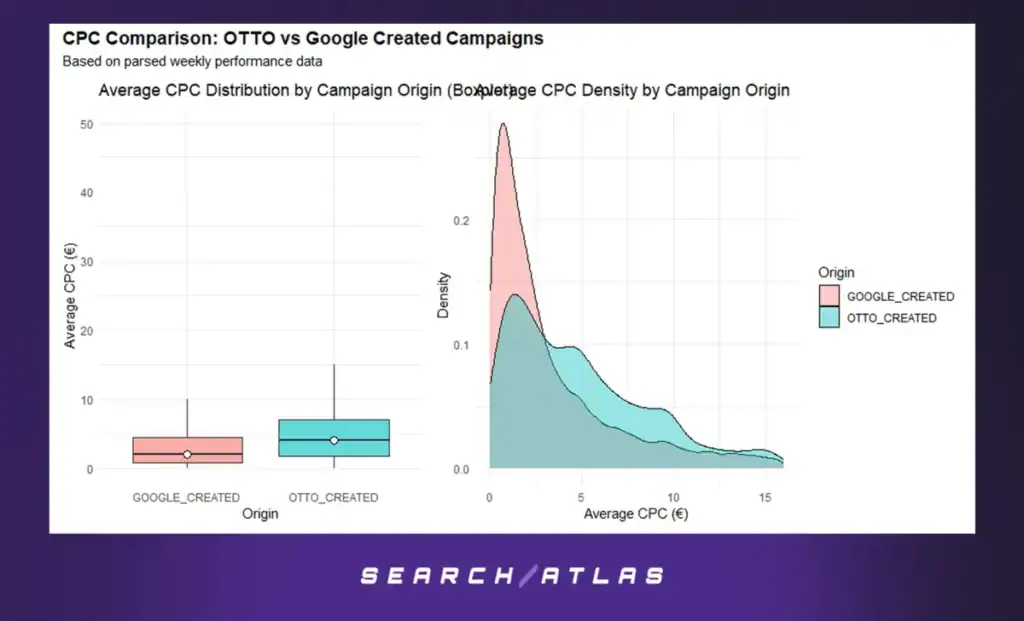

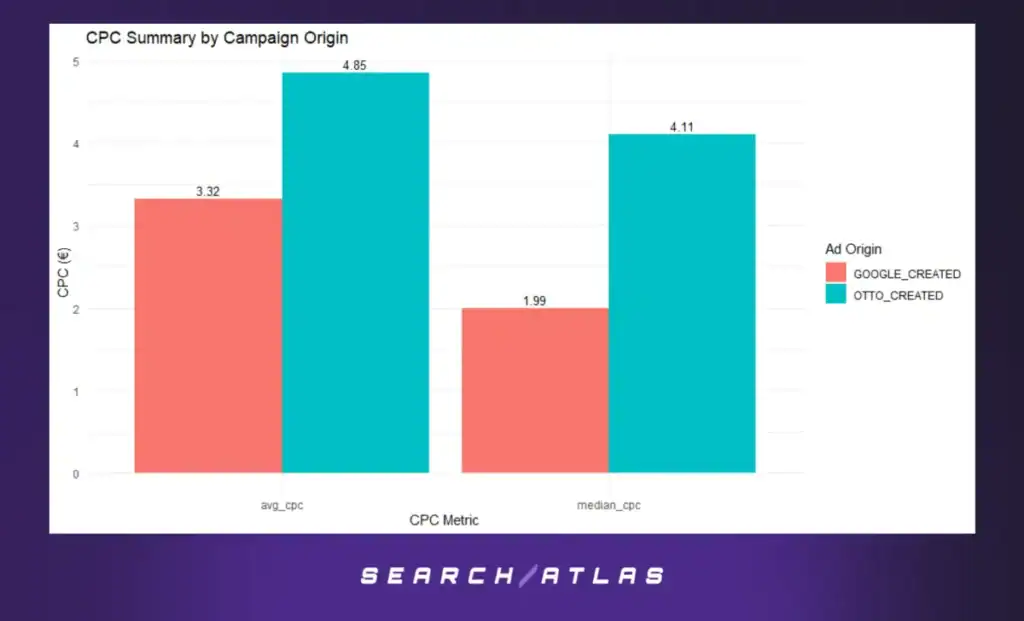

Key Insight:

OTTO campaigns incur higher CPC values.

- OTTO Avg CPC: 4.85€ vs Google Avg CPC: 3.32€

- OTTO Median CPC: 4.11€ vs Google Median CPC: 1.99€

Boxplot & Density

- Google-created campaigns cluster at lower CPCs (1–3€), whereas OTTO campaigns are spread across a wider range.

Statistical Test

- Wilcoxon test: p-value < 2.2e-16

Statistically significant difference in CPC.

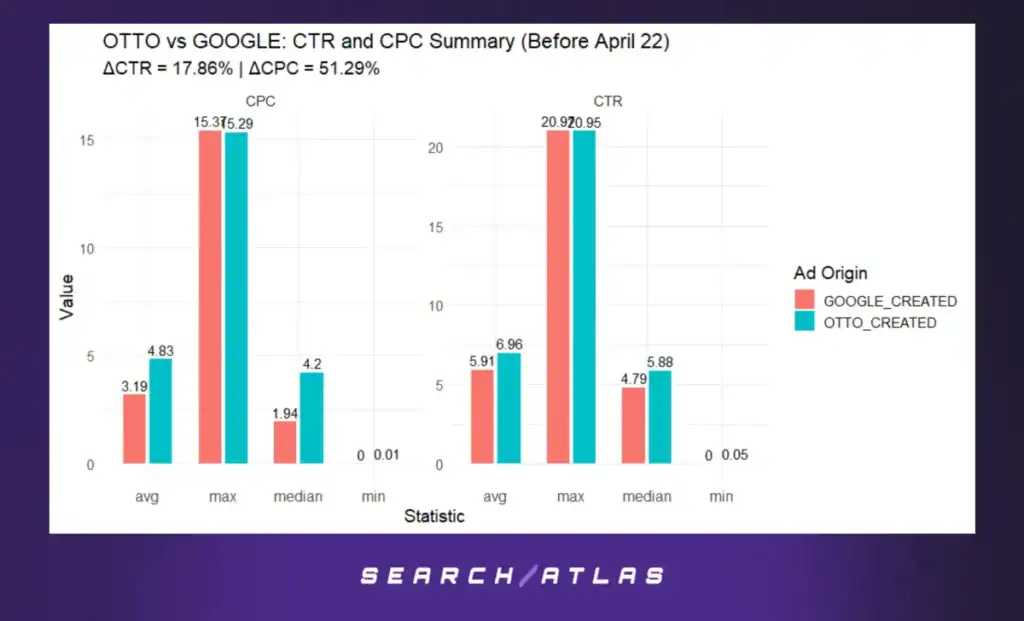

3. OTTO vs Google — Before April 22 (Pre-Keyword Update)

Δ Performance

- ΔCTR: +17.86% OTTO vs Google

- ΔCPC: +51.29% OTTO vs Google

Wilcoxon tests (CTR & CPC): both statistically significant with p < 2.2e-16.

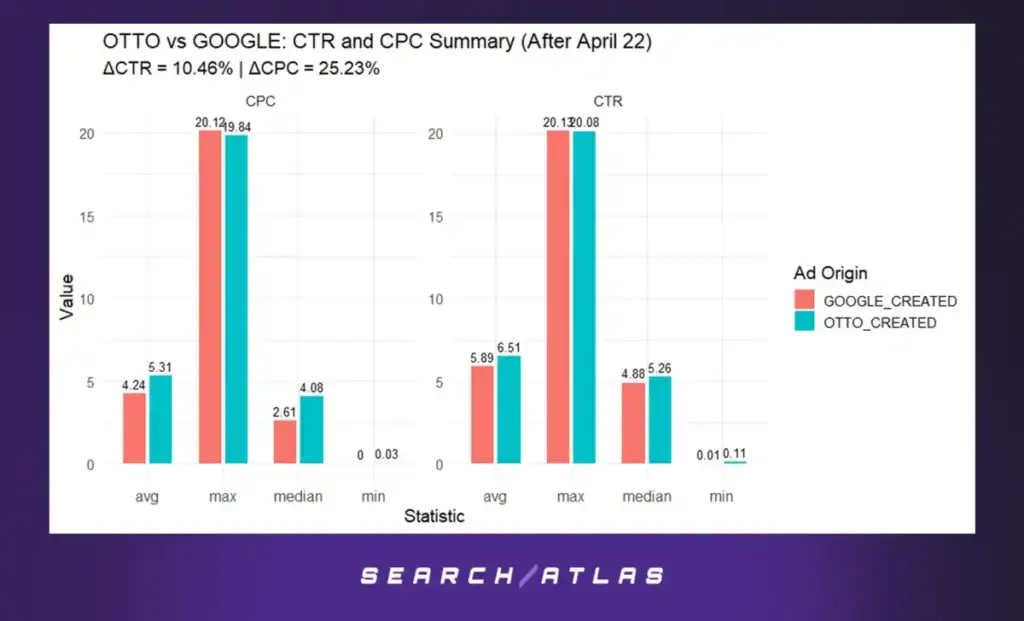

4. OTTO vs Google — After April 22 (Post-Keyword Update)

Δ Performance

- ΔCTR: +10.46% OTTO vs Google

- ΔCPC: +25.23% OTTO vs Google

CTR test: p = 0.00288

CPC test: p < 2.2e-16

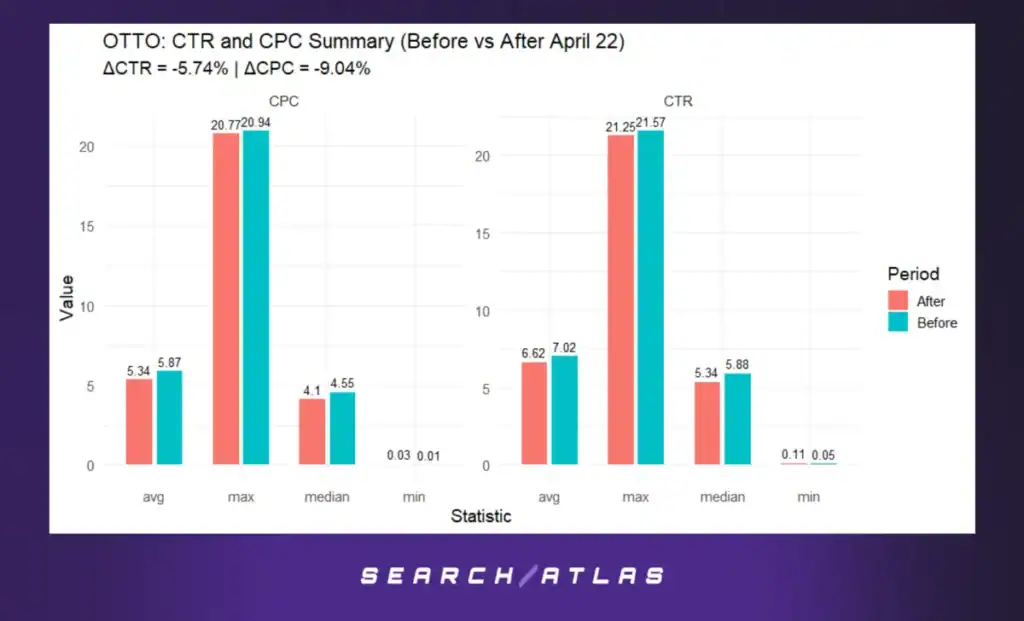

5. OTTO Only — Before vs After April 22 (Strategy Shift Impact)

CTR Shift

- CTR decreased from 7.02% to 6.62%

ΔCTR: –5.74% (p = 0.0009871)

CPC Shift

- CPC decreased from 5.87€ to 5.34€

ΔCPC: –9.04% (p = 0.002122)

5. Discussion and Implications

Interpreting the Results in Context

This study set out to assess whether OTTO-generated campaigns outperform non-OTTO (Google-created) campaigns in terms of Click-Through Rate (CTR) and Average Cost-Per-Click (CPC) — key performance indicators in paid advertising. The goal was to determine whether the OTTO campaign automation platform delivers superior ad performance and justifies its use within the Google Ads ecosystem.

Our findings show that OTTO-created campaigns:

- Consistently achieved higher CTRs than their Google-created counterparts — indicating stronger user engagement.

- Incurred higher CPCs, suggesting a more aggressive bidding strategy, possibly in pursuit of higher-quality traffic or better ad placements.

These patterns held both before and after the April 22 keyword generation strategy update, although both OTTO's CTR advantage and its CPC premium narrowed after the update.

Implications and Value Proposition

- Engagement Over Volume:

OTTO-generated campaigns attract more clicks per impression, a strong signal of ad relevance and copy quality. Higher CTRs often correlate with improved Quality Scores and better ad placements, which may offset some of the CPC increase over the long term.

- Trade-off Between Efficiency and Reach:

The higher CPCs of OTTO campaigns imply a trade-off: higher spend per click for (presumably) more qualified traffic. Although this is costlier, it may align better with long-term customer value strategies (e.g., higher conversion quality).

- Post-Update Optimization:

After the April 22 strategy update, OTTO's CTR advantage narrowed but remained positive. More notably, OTTO's CPCs declined by about 9%, indicating improved cost-efficiency. This suggests that the update made OTTO's keyword matching more cost-efficient, at the price of only a modest decline in CTR (about 6%).

Limitations

Several limitations must be acknowledged:

- No direct conversion data was analyzed in this phase. While CTR is a leading indicator of success, it does not guarantee downstream conversions (e.g., purchases).

- Lack of control for vertical or industry — certain sectors may naturally exhibit different CPC or CTR dynamics. A stratified analysis might reveal segment-specific performance.

- Campaign goals were not controlled — some may be brand-focused while others are conversion-driven, which affects interpretation.

- Google’s algorithmic influence — Google-created campaigns may benefit from proprietary adjustments not visible through raw data alone.

Implications for the Broader Field

The findings provide empirical evidence that algorithmically generated advertising campaigns (like OTTO's) can outperform traditional setups, especially on engagement metrics. In a landscape increasingly dominated by automation (e.g., Performance Max, Smart Bidding), these results support the value of third-party platforms that bring custom optimization strategies.

This also reinforces a broader trend: personalized, rule-driven automation may be better suited to certain business models than default Google tools.

Future Research and Recommendations

To build upon these findings, we recommend the following:

- Conversion-Based Analysis: Evaluate how CTR and CPC ultimately translate into ROI or ROAS. This is essential to understanding whether higher CPCs are justified by more conversions.

- Segmented Benchmarking: Compare OTTO vs. Google campaigns across verticals (e.g., e-commerce vs. local services).

- Longitudinal Study: Measure performance across multiple months post-update to ensure trends are stable and not seasonal.

- A/B Testing in Controlled Environments: Run parallel OTTO and Google campaigns with identical goals and budgets to isolate performance lift.

- Integrate Additional Metrics: Consider bounce rate, conversion rate, and customer lifetime value (CLV) to develop a more holistic view of ad quality.

6. Conclusion

This white paper investigated whether OTTO-generated Google Ads campaigns outperform their Google-created counterparts in two critical performance metrics: Click-Through Rate (CTR) and Average Cost-Per-Click (CPC). Using a comprehensive dataset from the otto_ppc database, we analyzed tens of thousands of campaign instances before and after a major keyword generation update on April 22, 2025.

Our analysis revealed that:

- OTTO campaigns consistently achieved higher CTRs than Google-created campaigns, both before and after the strategy update — demonstrating stronger user engagement.

- However, OTTO campaigns also showed significantly higher CPCs, indicating a potential trade-off between engagement quality and cost-efficiency.

- After the April 22 update, OTTO’s CPCs decreased by ~9%, while CTR dipped slightly (~6%) — a sign of improving cost-efficiency without a major performance penalty.

Importantly, these findings were tested with Wilcoxon rank-sum tests. Their small p-values indicate that the differences are unlikely to be explained by chance alone.

In summary, OTTO’s automated campaign generation offers clear engagement benefits, particularly in CTR. Although it comes at a higher cost-per-click, recent improvements suggest that the system is evolving toward better cost control. These insights are crucial for stakeholders aiming to balance ad performance and budget efficiency, and they underscore the strategic value of OTTO’s campaign automation in competitive paid search environments.

7. References

Citations

- Google Ads Help. (2023). About CTR (Click-through Rate). Retrieved from https://support.google.com/google-ads/answer/2615875

- Google Ads Help. (2023). About Average CPC (Cost-per-Click). Retrieved from https://support.google.com/google-ads/answer/116495

- Wilcoxon, F. (1945). Individual comparisons by ranking methods. Biometrics Bulletin, 1(6), 80–83.

- Wickham, H., & Grolemund, G. (2016). R for Data Science. O’Reilly Media.

- Kuhn, M., & Johnson, K. (2013). Applied Predictive Modeling. Springer.

- James, G., Witten, D., Hastie, T., & Tibshirani, R. (2021). An Introduction to Statistical Learning (2nd ed.). Springer.

- XGBoost Documentation. (2024). XGBoost: Scalable and Flexible Gradient Boosting. Retrieved from https://xgboost.readthedocs.io