A concise version of this research is also available on our blog: The Limits of Schema Markup for AI Search: LLM Citation Analysis

This study examines whether implementing schema markup on webpages influences how often domains are cited by major large language models (LLMs). Using a dataset of extracted HTML schema information and a separate dataset measuring domain visibility in LLM responses, we compare schema coverage with visibility metrics across OpenAI, Gemini, and Perplexity.

Overall, the analysis shows that schema adoption varies widely across domains, but higher schema coverage does not reliably lead to higher visibility scores. This suggests that schema markup alone is not a major driver of LLM citation behavior, despite common industry assumptions.

Methodology

1. Schema Data Extraction

We analyzed the HTML of each sampled webpage to determine whether the URL contained schema markup in a format such as JSON-LD, microdata, or RDFa.

Each page was labeled as either containing schema markup or not. Domain names were then extracted from the URLs so that schema usage could be evaluated at the domain level and compared with LLM visibility scores.
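The study does not specify how schema markup was detected. The sketch below shows one way to do it with Python's standard-library `html.parser`. It assumes detection means looking for common markers in the raw HTML:

- a `<script type="application/ld+json">` block for JSON-LD;
- an `itemscope` or `itemtype` attribute for microdata;
- a `typeof` or `vocab` attribute for RDFa.

The names `SchemaDetector`, `page_has_schema` and `domain_of` are hypothetical. Dropping a leading `www.` from the host is also an assumed normalization.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class SchemaDetector(HTMLParser):
    """Sets has_schema if any assumed schema marker appears in the HTML.

    Markers (an illustrative heuristic, not the study's confirmed method):
      - JSON-LD:   <script type="application/ld+json">
      - Microdata: an element with an itemscope or itemtype attribute
      - RDFa:      an element with a typeof or vocab attribute
    """

    def __init__(self) -> None:
        super().__init__()
        self.has_schema = False

    def handle_starttag(self, tag, attrs):
        # Boolean attributes such as itemscope arrive with value None.
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if tag == "script" and attr_map.get("type", "").strip().lower() == "application/ld+json":
            self.has_schema = True
        elif "itemscope" in attr_map or "itemtype" in attr_map:
            self.has_schema = True
        elif "typeof" in attr_map or "vocab" in attr_map:
            self.has_schema = True


def page_has_schema(html: str) -> bool:
    """Label a single page as containing schema markup (True) or not (False)."""
    detector = SchemaDetector()
    detector.feed(html)
    detector.close()
    return detector.has_schema


def domain_of(url: str) -> str:
    """Lowercased hostname of a URL with a leading "www." removed (assumption)."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


pages = {
    "https://www.example.com/a": '<script type="application/ld+json">{"@type": "Article"}</script>',
    "https://example.com/b": '<div itemscope itemtype="https://schema.org/Product"></div>',
    "https://example.org/c": "<p>No structured data here.</p>",
}
for url, html in pages.items():
    print(domain_of(url), page_has_schema(html))
# example.com True
# example.com True
# example.org False
```

The RDFa check deliberately ignores the `property` attribute. Open Graph `<meta property="og:...">` tags use that attribute, so checking it would mark almost every page as having schema. A marker-based heuristic like this also does not validate the markup itself.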

2. Computing Schema Coverage

Schema coverage was calculated as the proportion of URLs within each domain that contained schema markup. This resulted in a domain-level schema percentage indicating how widely structured data was implemented.
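Given the page-level labels from step 1, coverage is a grouped proportion: coverage(d) = 100 × (URLs of d with schema) / (sampled URLs of d).

Below is a minimal sketch. It assumes the labels arrive as hypothetical `(domain, has_schema)` pairs.

```python
from collections import defaultdict


def schema_coverage(labeled_pages):
    """Return {domain: percentage of that domain's sampled URLs with schema}.

    labeled_pages: iterable of (domain, has_schema) pairs; this input shape
    is an assumption made for illustration.
    """
    totals = defaultdict(int)       # sampled URLs per domain
    with_schema = defaultdict(int)  # URLs per domain labeled as having schema
    for domain, has_schema in labeled_pages:
        totals[domain] += 1
        if has_schema:
            with_schema[domain] += 1
    return {d: 100.0 * with_schema[d] / totals[d] for d in totals}


labels = [
    ("example.com", True),
    ("example.com", True),
    ("example.com", False),
    ("example.org", False),
]
print(schema_coverage(labels))  # example.com ≈ 66.7, example.org = 0.0
```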

3. Categorizing Domains into Schema Buckets

To enable meaningful comparisons, each domain was assigned to one of five schema usage categories:

- No Schema: 0%

- Minimal Schema: 1–30%

- Moderate Schema: 31–70%

- High Schema: 71–99%

- Full Schema: 100%

These buckets allowed us to compare visibility patterns across different levels of schema adoption.
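The bucket edges above are written as whole percentages, but coverage is a ratio and is often fractional. For example, a domain with 1 of 3 pages marked up sits at 33.3%.

The sketch below assumes the ranges are meant as contiguous half-open intervals. Under that reading, 30.5% counts as Moderate and 0.4% as Minimal. The function name `schema_bucket` is hypothetical.

```python
def schema_bucket(pct: float) -> str:
    """Map a domain's schema coverage (0-100) to one of the five categories.

    Assumption: the published ranges are read as 0 < p <= 30 (Minimal),
    30 < p <= 70 (Moderate) and 70 < p < 100 (High), so every fractional
    percentage falls into exactly one bucket.
    """
    if not 0.0 <= pct <= 100.0:
        raise ValueError(f"coverage must be within 0-100, got {pct}")
    if pct == 0.0:
        return "No Schema"
    if pct <= 30.0:
        return "Minimal Schema"
    if pct <= 70.0:
        return "Moderate Schema"
    if pct < 100.0:
        return "High Schema"
    return "Full Schema"


for pct in (0, 25, 66.7, 99.5, 100):
    print(pct, schema_bucket(pct))
```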

4. LLM Visibility Data Preparation

The visibility dataset measured how often each domain appeared in LLM-generated responses. Some domains appeared in multiple records for the same platform, so additional preprocessing was needed to aggregate them correctly.

This included:

- Normalizing all domain names into a consistent domain.tld format to allow correct matching across datasets.

- Aggregating repeated domain records using weighted averages, where visibility scores were weighted by the number of responses the domain competed in. This ensured that visibility measurements based on a large number of LLM responses carried more influence than one-off or low-sample observations.

- Ensuring cross-platform comparability, so that each domain had one consistently defined visibility record for each of OpenAI, Gemini, and Perplexity.

This produced a single, reliable visibility record per domain, per platform.
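The normalization and weighted-averaging steps can be sketched as follows. A few details are assumptions:

- The record layout `(platform, raw_domain, visibility, responses)` and both function names are hypothetical.
- `responses` stands for the number of responses the domain competed in.
- Normalization only strips the scheme, port, path, a trailing dot and a leading `www.`. It does not collapse other subdomains or handle multi-part suffixes such as `.co.uk`, which would need the Public Suffix List.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def normalize_domain(raw: str) -> str:
    """Reduce a URL or bare host string to a lowercase domain.tld-style host."""
    raw = raw.strip()
    if "//" not in raw:
        raw = "//" + raw  # make urlsplit treat a bare host as the network location
    host = (urlsplit(raw).hostname or "").rstrip(".")
    return host[4:] if host.startswith("www.") else host


def aggregate_visibility(records):
    """Collapse repeated (platform, domain) rows into one weighted score.

    Each score is sum(visibility * responses) / sum(responses) for its
    (platform, normalized domain) key, so rows measured over many responses
    dominate one-off observations. Keys whose total weight is 0 are skipped.
    """
    weighted = defaultdict(float)
    weights = defaultdict(int)
    for platform, raw_domain, visibility, responses in records:
        key = (platform, normalize_domain(raw_domain))
        weighted[key] += visibility * responses
        weights[key] += responses
    return {k: weighted[k] / weights[k] for k in weights if weights[k] > 0}


rows = [
    ("Perplexity", "https://www.example.com/page", 80.0, 90),
    ("Perplexity", "example.com", 20.0, 10),
    ("Gemini", "Example.com", 50.0, 5),
]
print(aggregate_visibility(rows))
```

With these rows, the Perplexity score for `example.com` is (80 × 90 + 20 × 10) / 100 = 74.0. An unweighted mean of the same two rows would give 50.0.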

5. Merging Schema and Visibility Data

Schema coverage metrics were matched with LLM visibility records using normalized domain names.

This produced a unified dataset containing each domain’s schema percentage, schema category, visibility scores across platforms, and supporting metrics such as competition counts and appearance frequency.

This merged dataset formed the basis of all downstream analysis.
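Below is a minimal sketch of the merge. It assumes both inputs are dictionaries keyed by already-normalized domains. It also assumes domains missing from either dataset are dropped (an inner join), because the study does not say how unmatched domains were handled.

The schema category can be attached afterwards using the bucketing rule from step 3. The function name is hypothetical.

```python
def merge_schema_and_visibility(coverage, visibility):
    """Inner-join domain-level schema coverage with per-platform visibility.

    coverage:   {domain: schema percentage}
    visibility: {(platform, domain): aggregated visibility score}
    Returns {domain: {"schema_pct": ..., "visibility": {platform: score}}}.
    Domains absent from either input are dropped.
    """
    merged = {}
    for (platform, domain), score in visibility.items():
        if domain in coverage:
            row = merged.setdefault(domain, {"schema_pct": coverage[domain], "visibility": {}})
            row["visibility"][platform] = score
    return merged


coverage = {"example.com": 100.0, "example.org": 0.0}
visibility = {
    ("OpenAI", "example.com"): 42.0,
    ("Gemini", "example.com"): 30.0,
    ("OpenAI", "example.net"): 55.0,
}
print(merge_schema_and_visibility(coverage, visibility))
# {'example.com': {'schema_pct': 100.0, 'visibility': {'OpenAI': 42.0, 'Gemini': 30.0}}}
```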

6. Analysis Framework

The analysis examined how visibility scores varied across schema categories. The schema buckets served as the primary comparison groups. We evaluated:

- Distribution of visibility scores across schema levels

- Whether higher schema adoption correlated with better visibility

- Platform-specific behavior and consistency

This framework enabled a direct assessment of whether schema markup influences LLM citation behavior.
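The study does not name a correlation statistic. One reasonable choice for the second question, swapped in here as an assumption, is Spearman's rank correlation. It measures monotonic rather than linear association. With average ranks for ties, it also copes with the many domains sitting at exactly 0% or 100% coverage.

The sketch below uses only the standard library. A rho close to zero would be consistent with the findings reported here, but this code does not reproduce the study's numbers.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks in which tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one input is constant")
    return cov / sqrt(var_x * var_y)


schema_pct = [0, 25, 50, 100]
visibility = [10.0, 20.0, 30.0, 40.0]
print(spearman(schema_pct, visibility))  # 1.0 for a perfectly monotonic pair
```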

Distribution of Schema Coverage Across Domains

Schema coverage across domains is highly polarized, with domains concentrated at or near 0% and 100%. This polarization is driven in part by uneven sample sizes across domains.

Many domains in the dataset had only a small number of sampled URLs. When all of those pages contained schema, or all of them lacked it, the domain necessarily fell into the 100% or 0% bucket, respectively.

Larger domains with hundreds of sampled URLs showed more variation, but even in those cases, schema adoption tended to be consistent enough that their percentages still clustered near the extremes.

As a result, the distribution reflects differences in sampling density as much as differences in schema adoption practices.
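A simple model shows why small samples push domains to the extremes. Assume, purely for illustration, that each of a domain's n sampled pages independently carries schema with probability p. The domain lands in the No Schema or Full Schema bucket only when all n labels agree:

```latex
P\left(\text{coverage} \in \{0\%,\,100\%\}\right) = p^{n} + (1-p)^{n}
```

Some worked values:

- With n = 1, this probability is 1 for any p.
- With n = 2 and p = 0.5, it is 0.25 + 0.25 = 0.5.
- With n = 10 and p = 0.5, it drops to 2 × 0.5^10 ≈ 0.002.

Pages on one domain usually share templates, so their labels are positively correlated rather than independent. That would make extreme values more common than this baseline, consistent with the clustering seen even for larger domains.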

Impact of Schema Coverage on LLM Visibility

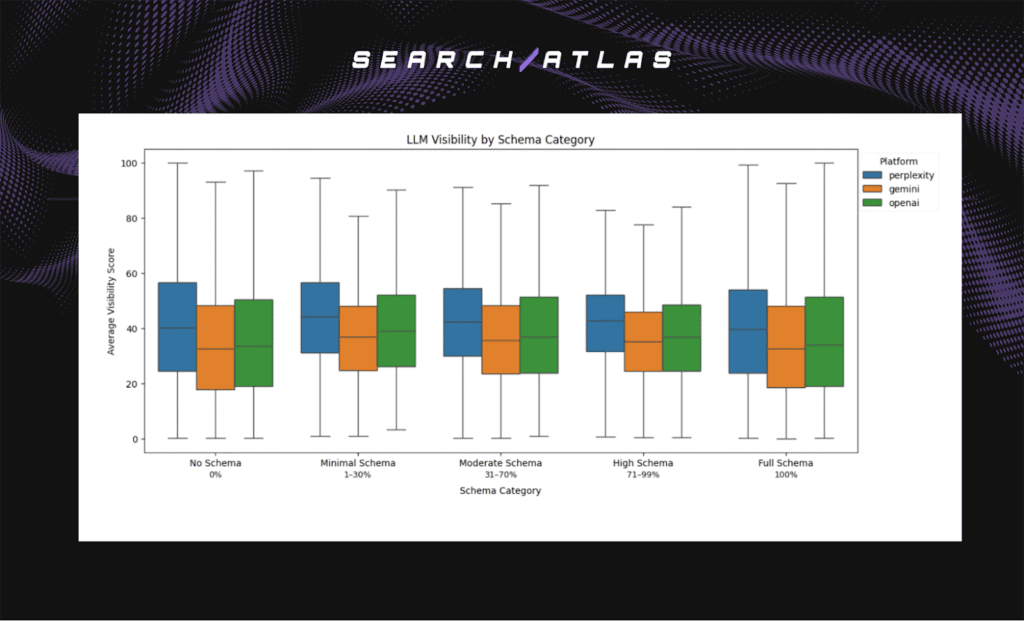

Box Plot

Key Insights

For each of Perplexity, Gemini, and OpenAI, the visibility distribution looks highly similar across all schema categories.

There is no consistent upward trend showing that domains with more schema markup achieve higher visibility scores.

The medians, interquartile ranges, and overall spread look nearly identical from “No Schema” to “Full Schema,” indicating that schema adoption does not appear to be a determining factor in how prominently a domain is cited by any of the three LLM platforms.
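The quantities a box plot encodes can be computed directly, so the comparison above can be checked in numbers rather than by eye. Below is a minimal sketch using Python's `statistics` module.

It assumes rows of the hypothetical shape `(platform, schema_bucket, visibility)`. Quartiles use the `"inclusive"` method; other quartile conventions will draw slightly different boxes.

```python
from collections import defaultdict
from statistics import median, quantiles


def box_summary(rows):
    """Return n, median and quartiles of visibility per (platform, bucket).

    rows: iterable of (platform, schema_bucket, visibility) tuples; this
    layout is an assumption. Groups with a single score get no quartiles.
    """
    groups = defaultdict(list)
    for platform, bucket, score in rows:
        groups[(platform, bucket)].append(score)

    summary = {}
    for key, scores in groups.items():
        q1 = q3 = None
        if len(scores) >= 2:
            # "inclusive" treats the data as the whole population: the minimum
            # is the 0th percentile and the maximum the 100th.
            q1, _, q3 = quantiles(scores, n=4, method="inclusive")
        summary[key] = {"n": len(scores), "median": median(scores), "q1": q1, "q3": q3}
    return summary


rows = [
    ("Gemini", "Full Schema", 10.0),
    ("Gemini", "Full Schema", 20.0),
    ("Gemini", "Full Schema", 30.0),
    ("Gemini", "No Schema", 40.0),
]
for key, stats in box_summary(rows).items():
    print(key, stats)
```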

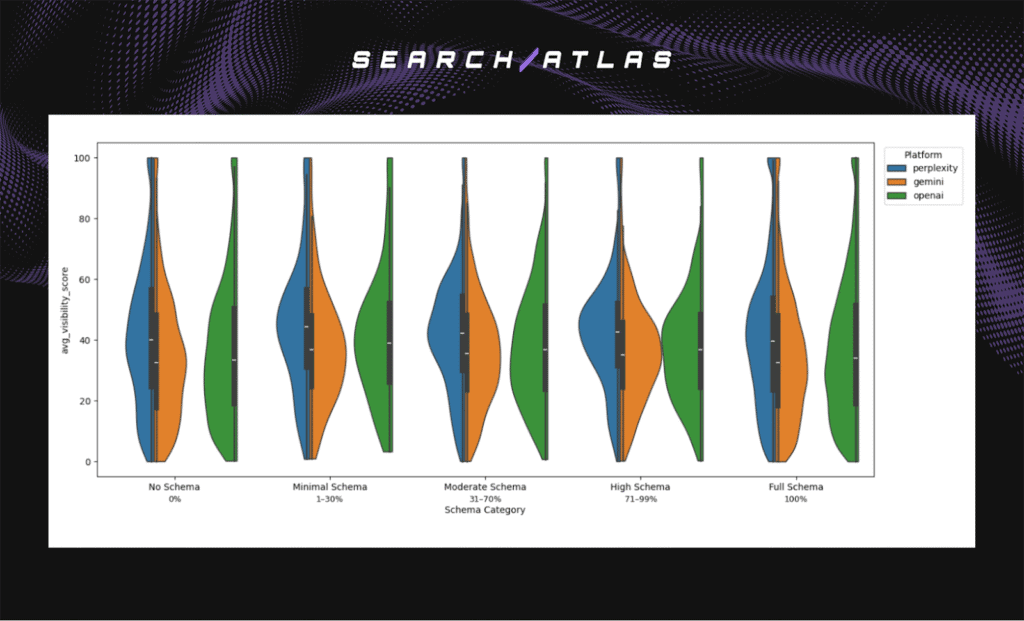

Violin Plot

This visualization highlights the density and spread of visibility scores within each schema category.

Key Insights

1. Distributions remain unchanged across schema levels

The density shapes, medians, and spread look almost identical across all schema categories, indicating that schema percentage has no meaningful effect on the distribution of visibility scores.

2. High- and low-performing domains appear in every category

Domains with very high visibility (70–100) and very low visibility (0–20) are present in all schema buckets. This shows that schema usage does not distinguish strong performers from weak ones.

3. Platform patterns remain stable

Perplexity consistently has the highest median visibility, Gemini the lowest, and OpenAI falls in between. These platform differences stay the same regardless of schema adoption level.

4. No upward trend with higher schema adoption

If schema improved visibility, higher-schema categories would show higher medians or distributions shifted toward higher scores. Instead, the shapes remain virtually the same across all buckets.

5. Wide overlap across all categories

All platforms show broad visibility ranges (0 to 100) within every schema group, demonstrating that schema coverage alone does not explain or predict visibility outcomes.

Conclusion

The findings show that schema markup has no measurable effect on LLM visibility across OpenAI, Gemini, or Perplexity.

Domains with complete schema coverage perform no better than those with minimal or no schema, and visibility distributions remain almost identical across all schema categories. While schema markup continues to play a role in traditional SEO, it does not appear to influence how frequently LLMs cite a domain.

This indicates that the common belief that schema markup improves LLM visibility is overstated. Other factors, such as content quality, topical relevance, and LLM retrieval behavior, are likely far more important in determining which sources LLMs reference.