You may also read a concise version of this research in our blog: URL Freshness in LLM Responses: Search-Enabled vs Disabled Comparison

Executive Summary

This study evaluates how large language models (LLMs) reference external web content by analyzing the freshness of URLs cited across two major conditions:

- Web Search Enabled (OpenAI, Gemini, Perplexity)

- Web Search Disabled (OpenAI and Gemini)

We sampled 90,000 citations with web search enabled from OpenAI, Gemini, and Perplexity, and 60,000 citations with web search disabled from OpenAI and Gemini. Publication dates were successfully extracted for 10,329 URLs in the web-search-enabled dataset and 4,352 URLs in the web-search-disabled dataset.

In the web-search-enabled condition, all platforms show a clear recency bias, with most citations referencing content published within a few hundred days of the LLM response.

In the web-search-disabled condition, OpenAI still surfaces relatively recent content, though not as fresh as with search enabled. In contrast, Gemini shifts sharply toward older material and relies more on knowledge acquired during training.

Methodology

Dataset Construction

1. Web Search Enabled Sample

- Total: 90,000 URLs

- Platforms: 30,000 each from OpenAI, Gemini, and Perplexity

- Time Range: October 28, 2025 – November 6, 2025

- Note: All three platforms had search enabled during generation.

2. Web Search Disabled Sample

- Total: 60,000 URLs

- Platforms: 30,000 each from OpenAI and Gemini (Note: Perplexity cannot disable its web retrieval mode, so no “disabled” sample exists for Perplexity)

- Time Range: October 1, 2025 – October 19, 2025

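In total, the two analyzed datasets comprise 150,000 sampled citations (90,000 with web search enabled and 60,000 with web search disabled). A further 30,000 Perplexity citations were collected during the search-disabled window but excluded from that analysis, bringing the total collected to 180,000.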

Publication Date Extraction

We removed all URLs that were homepages, and for each remaining cited URL we performed targeted web scraping to identify publication timestamp metadata. The extraction process checked the following sources:

- Trusted <meta> publication tags, such as article:published_time, og:published_time, datePublished, and similar fields.

When a valid date was found, it was parsed and recorded as the publication timestamp.

Using this method, publication dates were successfully extracted for 21,412 URLs across both datasets.
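The extraction step described above can be sketched roughly as follows. This is a minimal illustration using only the Python standard library, not the scraper used in the study. The class and function names are hypothetical, and the rule that only `property`, `name`, or `itemprop` attributes are inspected is an assumption of this sketch.

```python
from datetime import datetime
from html.parser import HTMLParser
from typing import Optional

# Metadata keys treated as trusted. The names come from the text above;
# matching them case-insensitively is an assumption of this sketch.
TRUSTED_KEYS = {"article:published_time", "og:published_time", "datepublished"}


class PublishedMetaParser(HTMLParser):
    """Collects the content of the first trusted <meta> publication tag."""

    def __init__(self) -> None:
        super().__init__()
        self.raw_date: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.raw_date is not None:
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        key = (attr.get("property") or attr.get("name") or attr.get("itemprop") or "").lower()
        if key in TRUSTED_KEYS and attr.get("content"):
            self.raw_date = attr["content"].strip()


def extract_publication_date(html: str) -> Optional[datetime]:
    """Return the parsed publication timestamp, or None if no valid date is found."""
    parser = PublishedMetaParser()
    parser.feed(html)
    if parser.raw_date is None:
        return None
    try:
        # Older Python versions do not accept a trailing "Z" in fromisoformat.
        return datetime.fromisoformat(parser.raw_date.replace("Z", "+00:00"))
    except ValueError:
        # Ambiguous or malformed values are rejected rather than guessed.
        return None
```

Returning `None` for anything that does not parse cleanly mirrors the strict approach described in this report: a URL without a trustworthy date is dropped from the freshness analysis rather than assigned an approximate one.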

Freshness Measurement

By comparing the publication dates of the cited URLs with the timestamps of their corresponding LLM responses, we measured how recently each referenced source was published relative to when it was cited.

This indicates whether each platform tends to reference newer content or relies more heavily on older material.

Formula:

Freshness (days) = LLM Response Timestamp – URL Publication Date

This metric reveals how “recent” the cited information was at the moment the model generated its output.
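As a small worked example of the formula, the sketch below computes freshness in days from two timestamps. The function name is hypothetical, and treating timezone-naive timestamps as UTC is an assumption made here so that mixed inputs can be compared.

```python
from datetime import datetime, timezone


def freshness_days(response_ts: datetime, published_ts: datetime) -> float:
    """Freshness (days) = LLM response timestamp - URL publication date."""
    # Assumption: naive timestamps are interpreted as UTC.
    if response_ts.tzinfo is None:
        response_ts = response_ts.replace(tzinfo=timezone.utc)
    if published_ts.tzinfo is None:
        published_ts = published_ts.replace(tzinfo=timezone.utc)
    return (response_ts - published_ts).total_seconds() / 86400


# A page published on October 2, 2025 and cited on November 1, 2025
# has a freshness of 30 days.
print(freshness_days(datetime(2025, 11, 1), datetime(2025, 10, 2)))  # 30.0
```

Smaller values mean fresher citations. A negative value would indicate a publication date later than the response timestamp, which usually points to a metadata error.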

The analysis includes:

- Freshness distribution across all LLMs

- Per-platform comparisons

- Comparisons between web search enabled and disabled conditions

Only queries and responses generated in 2025 were included to maintain temporal consistency. The cited URLs themselves were not limited to 2025 publications: they may have been published at any time prior to their citation.

Web Search Enabled

Homepage vs Non-Homepage / Content URLs – Web Search Enabled

To compare URL-response freshness, we exclude homepage URLs because they are not article or content pages and therefore do not contain meaningful publication-date metadata.
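The report does not spell out the exact rule used to classify a URL as a homepage. One plausible heuristic, shown here purely as an assumption, is to treat a URL as a homepage when it has no path beyond `/` and no query string. The function name is hypothetical.

```python
from urllib.parse import urlsplit


def is_homepage(url: str) -> bool:
    """Heuristic: True when the URL has no path beyond "/" and no query string."""
    # Add a scheme if it is missing so that urlsplit parses the host correctly.
    parts = urlsplit(url if "://" in url else "https://" + url)
    return parts.path in ("", "/") and not parts.query


urls = [
    "https://example.com/",
    "https://example.com/blog/some-article",
    "example.org",
]
content_urls = [u for u in urls if not is_homepage(u)]
print(content_urls)  # ['https://example.com/blog/some-article']
```

A stricter or looser rule (for example, also treating `/index.html` or language roots such as `/en/` as homepages) would shift the homepage percentages reported below.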

The chart below shows the proportion of homepage vs non-homepage URLs cited by each LLM when web search is enabled.

From the chart, we see that when web search is enabled, Gemini (92.95%) and OpenAI (88.72%) overwhelmingly cite non-homepage URLs, indicating that these models tend to surface specific content pages rather than top-level domain homepages.

Perplexity, however, cites a much more balanced mix of homepage (48.56%) and non-homepage/content URLs (51.44%), suggesting that its retrieval system often surfaces domain-level entry points alongside specific content pages.

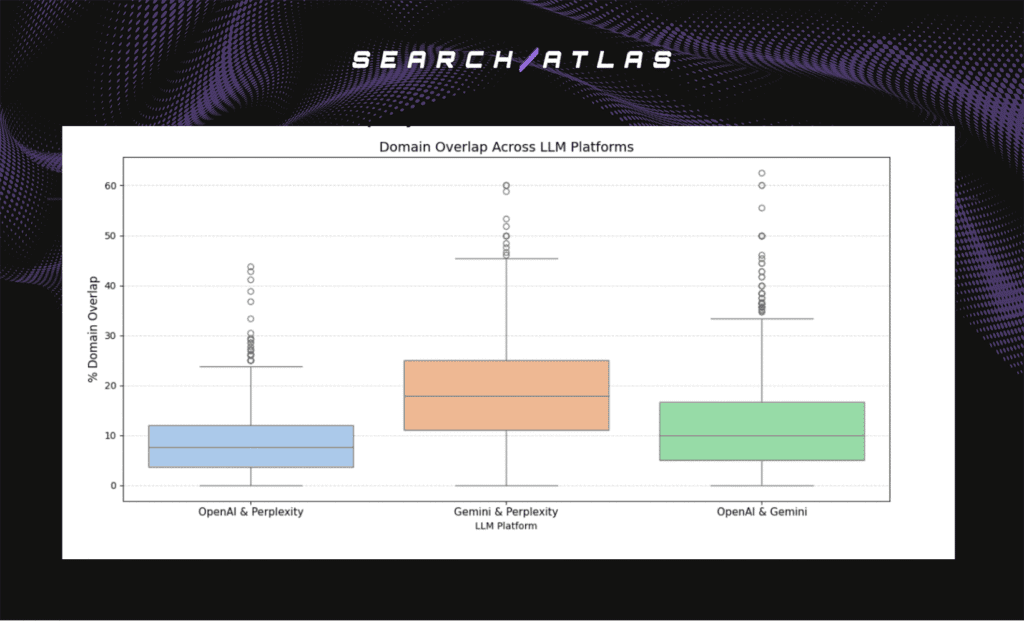

Domain Overlap Across LLM Platforms (Web Search Enabled)

To measure how similarly the models retrieve external sources, we computed domain-level overlap per query across pairs of LLMs.

For each query, we collected the set of domains cited by OpenAI, Gemini, and Perplexity. We then calculated the symmetric overlap as the intersection of two models’ domain sets divided by the union of those sets.

This process was repeated for every query, producing three overlap distributions: OpenAI & Perplexity, Gemini & Perplexity, and OpenAI & Gemini.

We also compared the average number of domains cited per query to assess each model’s overall retrieval breadth.
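The overlap measure described above (intersection divided by union) is the Jaccard index. A minimal sketch for a single query follows. The helper names are hypothetical, and stripping a leading `www.` before comparing domains is an assumption of this example, not a documented step of the study.

```python
from typing import Iterable
from urllib.parse import urlsplit


def domain_of(url: str) -> str:
    """Return the host of a URL, without a leading "www." (assumed normalization)."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def domain_overlap(urls_a: Iterable[str], urls_b: Iterable[str]) -> float:
    """Jaccard overlap: |A intersect B| / |A union B| over the cited domains."""
    a = {domain_of(u) for u in urls_a}
    b = {domain_of(u) for u in urls_b}
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical citations from two models for the same query.
model_a = ["https://www.example.com/a", "https://news.example.org/b"]
model_b = ["https://example.com/c", "https://other.net/d"]
print(domain_overlap(model_a, model_b))  # one shared domain out of three
```

Repeating this for every query and every model pair yields the three overlap distributions discussed below.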

Below is the distribution of domain overlap between the LLMs, showing how often they cite the same domain for the same query.

Key Insights

- All model pairs show low domain overlap, meaning each LLM retrieves largely different sources even when answering the same query.

- OpenAI & Perplexity have the lowest overlap (typically around 5–12 percent), indicating the strongest divergence in domain selection.

- Gemini & Perplexity show the highest overlap but also the widest spread (0–60 percent), indicating very inconsistent alignment across queries.

- OpenAI & Gemini show consistently low overlap (about 5–15 percent), confirming that they rarely cite the same domains.

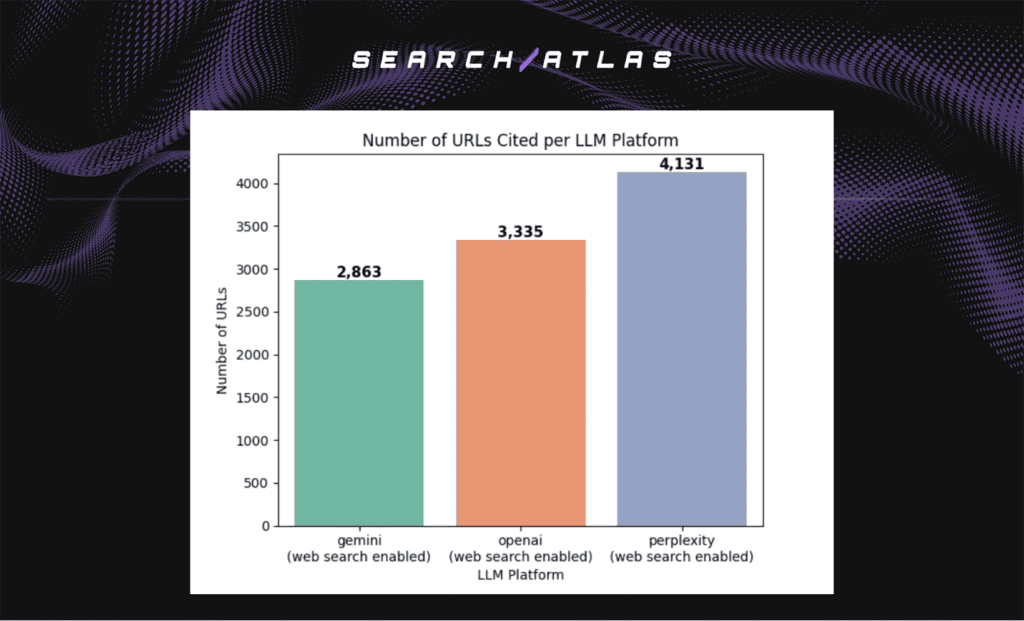

Distribution of Extracted Publication Dates by Platform – Web Search Enabled

All three LLMs analyzed (Gemini, OpenAI, and Perplexity) had web search enabled.

Out of the 30,000 URL citations analyzed for each platform, the chart below shows the number of non-homepage URLs from which a publication date could be extracted.

When web search is enabled, OpenAI and Perplexity yield more URLs with extractable publication dates than Gemini, with Perplexity producing the highest volume.

This reflects Perplexity’s strong reliance on live retrieval and OpenAI’s broad citation behavior.

Gemini, by comparison, yields fewer article-level URLs with extractable publication dates, despite having the highest share of non-homepage citations (≈93 percent), due to our strict publication-date extraction logic.

Perplexity is also one of the most citation-dense models, generating a high number of citations relative to its typically shorter responses, whereas Gemini produces longer outputs with fewer citations.

Normalized Sampling for Fair Cross-Platform Comparison

To make the analysis comparable, we normalized the sample sizes by randomly selecting an equal number of URLs from each platform, using the smallest platform (Gemini with 2,863 URLs) as the baseline.

This ensures a fair comparison across all models.
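The normalization step amounts to downsampling every platform to the size of the smallest one. A minimal sketch, assuming the URLs are held in a dictionary keyed by platform name and using a fixed seed for reproducibility (both are assumptions of this example):

```python
import random
from typing import Dict, List


def downsample_equal(urls_by_platform: Dict[str, List[str]], seed: int = 0) -> Dict[str, List[str]]:
    """Randomly sample the same number of URLs from each platform.

    The sample size is the size of the smallest platform, so no platform
    contributes more observations than any other.
    """
    rng = random.Random(seed)
    n = min(len(urls) for urls in urls_by_platform.values())
    return {name: rng.sample(list(urls), n) for name, urls in urls_by_platform.items()}


# Toy data: platform "b" is the smallest, so every platform is reduced to 3 URLs.
toy = {
    "a": [f"https://a.example/{i}" for i in range(10)],
    "b": [f"https://b.example/{i}" for i in range(3)],
    "c": [f"https://c.example/{i}" for i in range(7)],
}
balanced = downsample_equal(toy)
print({name: len(urls) for name, urls in balanced.items()})
```

Sampling without replacement keeps each URL at most once, which matters when the goal is to compare distributions rather than raw counts.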

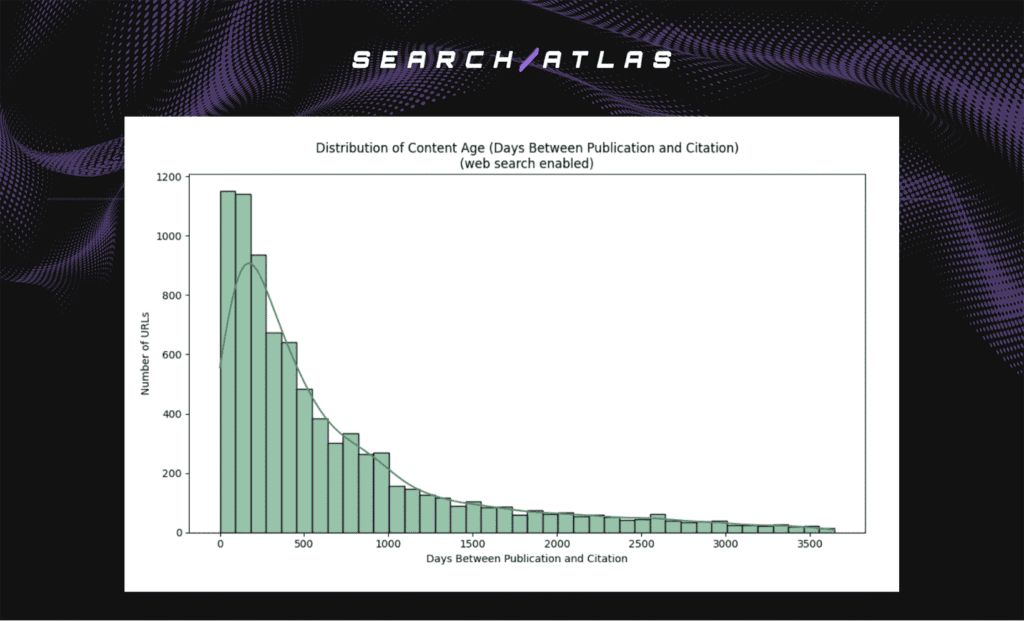

Distribution of Content Age Across All LLMs – Web Search Enabled

The chart shows the overall distribution of days between publication and citation for all LLMs combined, restricted to content published within the last 10 years.

Insight:

The distribution of content age is strongly right-skewed, with most cited URLs published within the first few hundred days. Citation activity peaks sharply between 0 and roughly 300 days, indicating that LLMs tend to reference recent material.

A large concentration of URLs have freshness values close to zero, meaning they were published within a few weeks or months before citation.

Beyond this peak, the distribution gradually tapers into a long tail, demonstrating that while recent content dominates LLM citations, a meaningful portion of sources are several years old.

Overall, the pattern indicates that LLMs prioritize newer content but still incorporate older material when it remains relevant to the topic.
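A distribution like this can be summarized with a few numbers. The sketch below is illustrative only: the function name is hypothetical, the 10-year window is approximated as 3,650 days, and the 300-day threshold is taken from the peak range mentioned above. The input values are made up, not study data.

```python
from statistics import median
from typing import Dict, List, Optional


def summarize_ages(
    ages_days: List[float],
    window_days: float = 3650,  # roughly 10 years (approximation)
    recent_days: float = 300,   # threshold for "recent" content
) -> Optional[Dict[str, float]]:
    """Summarize content ages that fall inside the analysis window."""
    in_window = [a for a in ages_days if 0 <= a <= window_days]
    if not in_window:
        return None
    recent = sum(1 for a in in_window if a <= recent_days)
    return {
        "count": len(in_window),
        "median_days": median(in_window),
        "share_recent": recent / len(in_window),
    }


# Made-up ages in days; 4000 falls outside the 10-year window and is dropped.
summary = summarize_ages([5, 40, 120, 290, 900, 2500, 4000])
print(summary)
```

In a right-skewed distribution the median sits well below the mean, so the median and the share of recent content describe the typical citation better than an average would.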

Distribution of Content Age per LLM Platform – Web Search Enabled

The chart shows the distribution of content age segmented by platform (Gemini, OpenAI, and Perplexity), indicating how recently published the material cited by each model tends to be, within the last 10 years.

Insight:

All three LLM platforms show a right-skewed distribution of content age, indicating that most cited URLs were published recently, with citation activity peaking between 0 and roughly 300 days.

Gemini shows the strongest preference for fresh content, with the highest density near zero days and the steepest drop-off afterward. This means Gemini most consistently cites recently published pages.

Perplexity sits in the middle, citing a healthy amount of fresh content but with a moderate spread into older publication dates.

OpenAI surfaces older material more frequently than Gemini and Perplexity, reflected in its broader distribution and heavier tail, while still demonstrating strong performance in retrieving fresh content.

Overall, Gemini retrieves the freshest content, Perplexity provides a balanced mix of fresh and moderately old sources, and OpenAI surfaces the widest range of publication ages including the largest share of older URLs.

Semantic Relationship Between Queries and Cited URL Content

To measure how relevant each citation was to the user’s query, we computed the semantic similarity between:

- the query text, and

- the scraped content from each cited URL.

We scraped content from 69,017 cited URLs and generated embeddings for both the query text and the scraped URL content. The data used contained samples from OpenAI, Gemini and Perplexity, all with web search enabled.

For each row, we then computed cosine similarity between the two embeddings, giving a score from 0 to 1, where higher values indicate stronger semantic alignment between the question and the cited URL.
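For reference, cosine similarity between two embedding vectors can be computed as in the sketch below. The report does not name the embedding model, so short toy vectors stand in for real embeddings here. Note that cosine similarity can in principle be negative (down to -1), although scores for text embeddings usually fall in the positive range.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: dot(u, v) / (|u| * |v|)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        # Similarity is undefined for a zero vector; returning 0.0 is a convention of this sketch.
        return 0.0
    return dot / (norm_u * norm_v)


# Toy vectors standing in for a query embedding and a page embedding.
query_vec = [1.0, 1.0, 0.0]
page_vec = [1.0, 0.0, 0.0]
print(round(cosine_similarity(query_vec, page_vec), 3))  # 0.707
```

Because the measure depends only on direction and not on vector length, a long page and a short query can still score highly when they cover the same topic.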

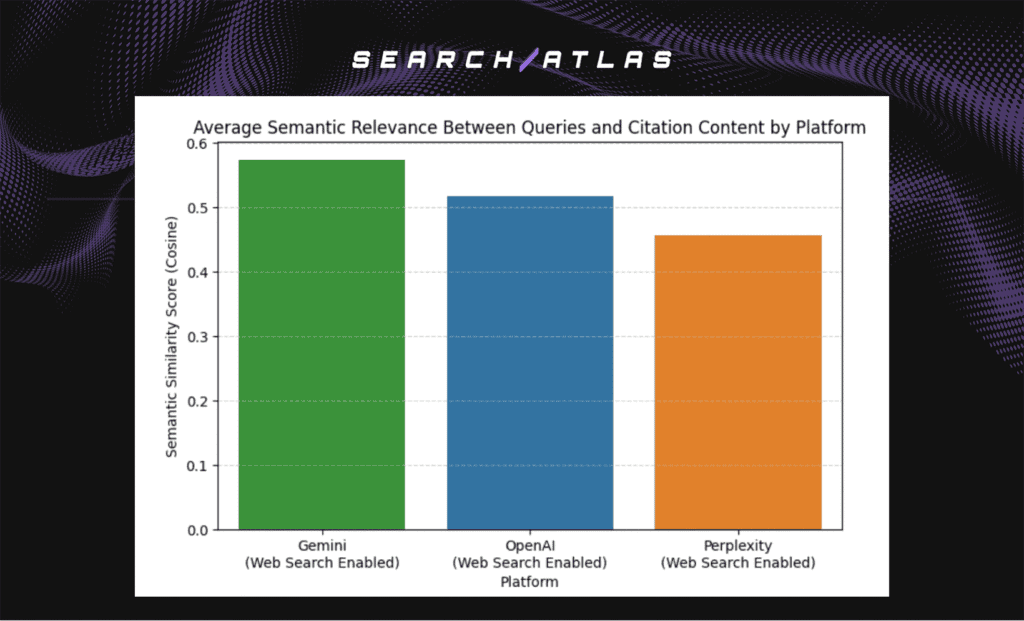

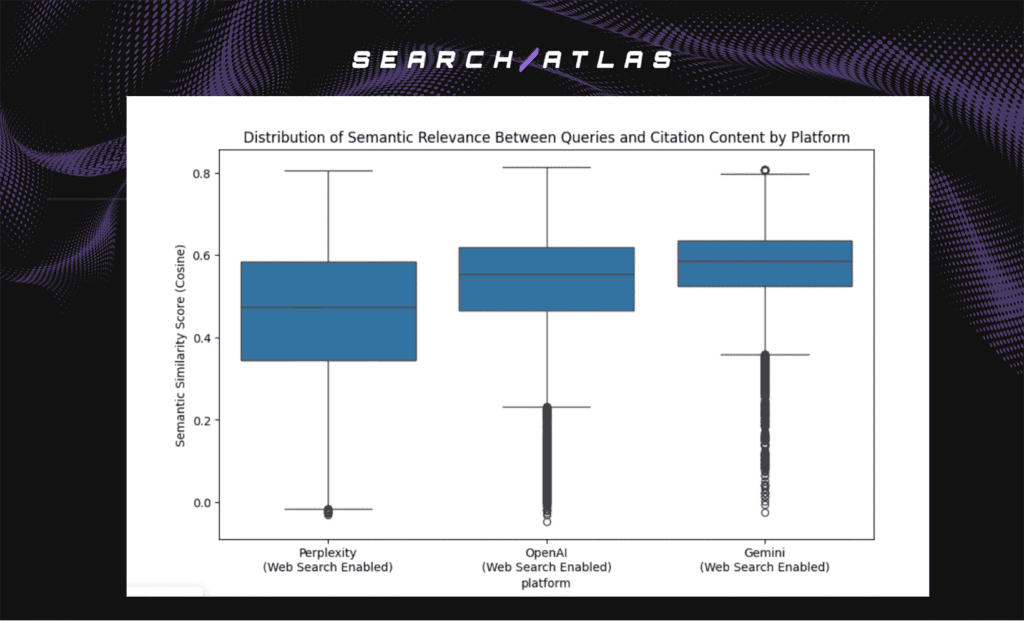

Below is the average semantic relevance score for each platform, summarizing overall citation quality.

Below is the distribution of semantic relevance scores for each platform, showing how citation quality varies across queries.

Insights

- Gemini shows the highest semantic relevance overall, with the strongest median and average similarity (≈0.57–0.60) and the tightest, most consistent distribution.

- OpenAI follows closely, with slightly lower similarity scores than Gemini (≈0.52–0.56) but still strong and stable across queries.

- Perplexity has the lowest average similarity (≈0.45–0.48) and the widest spread, indicating more variability. Some citations are highly relevant, but others are significantly less aligned with the query.

- Gemini is both the most relevant and the most consistent model.

- Perplexity is the most variable, and OpenAI sits in the middle on both relevance and stability.

Web Search Disabled

For the search-disabled window, we collected 30,000 samples each for OpenAI, Gemini, and Perplexity. However, because Perplexity’s web retrieval cannot be disabled, its samples were excluded from this analysis.

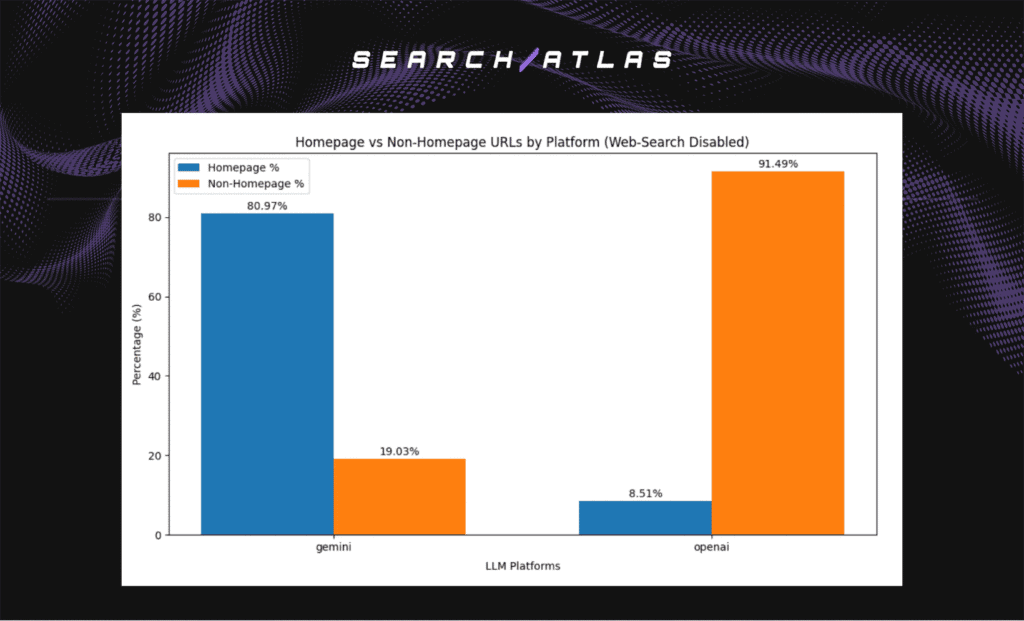

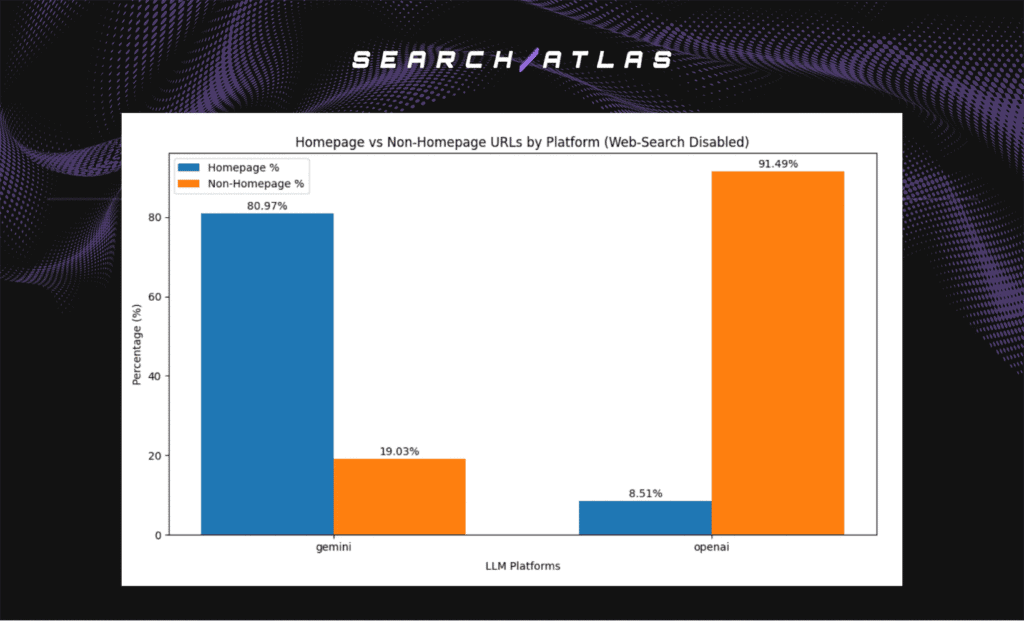

Homepage vs Non-Homepage / Content URLs – Web Search Disabled

During this analysis, we observed the following pattern: Gemini with web search disabled rarely returns URLs that point to specific webpages. Instead, it almost always returns general domain homepages.

The chart below shows the proportion of homepage vs non-homepage URLs cited by each LLM when web search is disabled.

From the chart, we can see that Gemini returns far more homepages than article or content URLs. This shows that without web-search retrieval, Gemini points to root domains rather than individual articles or pages.

In contrast, OpenAI, with web search disabled, still cites mostly content-specific URLs rather than homepages, with over 91 percent falling into the non-homepage / content category.

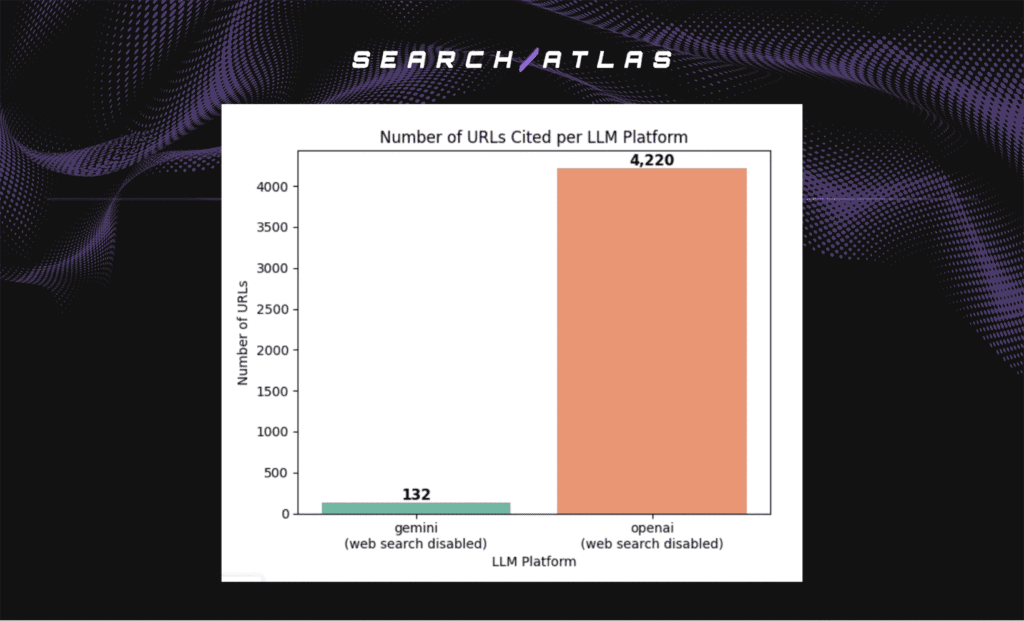

Distribution of Extracted Publication Dates by Platform – Web Search Disabled

Insight

Out of the 30,000 Gemini samples in the web-search-disabled dataset, only 5,710 were non-homepage URLs. From these, our strict publication-date extraction logic was able to retrieve valid dates for just 132 URLs, since we only accept trusted metadata fields and avoid capturing unrelated or ambiguous dates.

OpenAI produced 4,220 extractable publication dates in the disabled dataset, indicating it continues to return real article URLs even without web search.

Overall, the chart shows that web search capability significantly increases the number of article-level URLs with clean publication metadata, and Gemini is the most constrained when retrieval is disabled.

Distribution of Content Age per LLM Platform – Web Search Disabled

This chart shows the distribution of content age for OpenAI and Gemini with web search disabled, within the past 10 years.

Insights

The following are key insights drawn from the analysis:

- OpenAI continues to cite relatively recent content when web search is disabled. OpenAI’s distribution is strongly concentrated between 0 and ~1,200 days (roughly 0–3.3 years), with a pronounced peak under 500 days. This shows that OpenAI still surfaces comparatively recently published content even when retrieval is disabled.

- Gemini cites much older content when web search is disabled. Gemini’s distribution is extremely flat and dominated by citations between 2,500 and 3,500+ days (~7–10 years old). This indicates that without web search, Gemini relies mainly on older, stable information learned during pretraining, rather than material that has been published more recently.

- Homepage Bias Reduces Extractable Publication Dates. OpenAI contributes 4,220 URLs with detectable publication dates, while Gemini contributes only 132, because most Gemini URLs in disabled-search mode are homepage-level and do not expose publication metadata.

Conclusion

Large language models demonstrate distinct patterns in how they cite web content, and the presence or absence of web search plays a defining role in the freshness and quality of their citations.

Web Search Enabled

With search enabled, all platforms exhibit a strong recency bias:

- Most cited URLs are published within a few hundred days of the LLM response.

- Gemini cites the freshest material, showing the highest concentration of near-zero-day content.

- Perplexity sits in the middle, producing many fresh citations but also a moderate spread into older pages.

- OpenAI surfaces the widest range of publication ages, including the largest share of older sources.

Overall, when retrieval is available, all models tend to surface timely, up-to-date content, though Gemini is the most recent-focused and OpenAI is the most age-diverse.

Web Search Disabled

When web search is disabled, the platforms diverge significantly:

- OpenAI continues to cite moderately recent material, returning many article-level URLs and maintaining a meaningful degree of recency even without retrieval.

- Gemini shifts heavily toward homepage-level URLs and older content, relying primarily on its pretraining knowledge rather than recently published pages.

- As a result, Gemini produces far fewer extractable publication dates, and its freshness distribution centers around sources roughly 7–10 years old.

This shows that Gemini’s citation freshness depends strongly on having access to web retrieval, while OpenAI remains more resilient.

Key Takeaways

This analysis shows that web search greatly improves the freshness, specificity, and depth of LLM citations. Below are key takeaways for each model analyzed:

- Perplexity benefits from constant retrieval, consistently surfacing a mix of fresh and mid-aged content.

- Gemini provides the freshest citations when search is enabled, but becomes highly constrained without retrieval.

- OpenAI shows the strongest resilience, continuing to produce relatively recent, article-level citations even with search disabled.

- Gemini produces the most semantically relevant URL citations, while citing fewer URLs than OpenAI and Perplexity.