Large Language Model Optimization (LLMO) is the marketing tactic of tailoring content for visibility in AI-powered search systems. Large Language Model Optimization improves how information appears in large language models like ChatGPT, Claude, and Gemini when users ask questions. LLMO ensures content is accurately retrieved and presented by AI assistants. Large Language Model Optimization combines traditional SEO elements with specialized techniques designed for AI comprehension.

As AI assistants become more popular, LLMO grows in importance relative to traditional SEO. Effective LLMO strategies include building topical authority through content clusters. Optimizing for semantic relationships between concepts improves visibility. Demonstrating expertise through proper citations enhances credibility. Popular AI platforms to optimize for include OpenAI ChatGPT, Anthropic Claude, and Google Gemini. LLMO success metrics include visibility tracking, answer accuracy, and brand mention monitoring in AI responses.

What is LLMO (Large Language Model Optimization)?

LLMO (Large Language Model Optimization) refers to the technique of improving how Large Language Models interpret, generate, and rank content in search engine environments and answer-based systems. LLM optimization directly impacts how AI systems retrieve, evaluate, and surface content as ranked answers on platforms like Google Search, SGE (Search Generative Experience), ChatGPT, Perplexity, and other LLM-powered interfaces.

LLMO helps content appear as authoritative answers when users ask questions through AI interfaces. LLMO focuses on how information is structured and connected to improve retrieval by language models.

LLMO targets the training data and retrieval mechanisms of large language models, while traditional SEO targets web search algorithms. The practice requires understanding how LLMs process information and recognize authoritative sources.

LLMO practitioners optimize for semantic relationships and entity recognition. They structure information hierarchically for better AI comprehension. Successful LLM SEO combines technical understanding of models with content expertise. LLMO tactics ensure that content becomes a preferred source for AI-generated responses.

How do LLMs (Large Language Models) work?

LLMs (Large Language Models) process and generate text based on patterns learned from massive training datasets. LLMs analyze relationships between words and concepts to understand context. LLMs use transformer architecture with attention mechanisms to focus on important parts of the input text.

Large language models process language using a multi-stage architecture based on tokenization, vector encoding, and probabilistic prediction. Each input splits into tokens. Tokens become vectors that represent meaning. Transformers process these vectors using self-attention to determine which inputs matter most.
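The tokenize, encode, and attend stages can be illustrated with a toy example. The following is a minimal sketch, not how any production model is implemented: the whitespace tokenizer, the two-dimensional `toy_embeddings`, and the identity projections (no learned query, key, or value matrices) are all simplifying assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (toy)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score the query token against every token in the input.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted mix of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Step 1: split the input into tokens (assumed whitespace tokenizer).
tokens = "llmo improves visibility".split()
# Step 2: map tokens to vectors (hand-written values, not learned embeddings).
toy_embeddings = {"llmo": [1.0, 0.0], "improves": [0.0, 1.0], "visibility": [1.0, 1.0]}
vectors = [toy_embeddings[t] for t in tokens]
# Step 3: let every token attend to every other token.
outputs, weights = self_attention(vectors)
```

In this toy setup, each row of `weights` shows how much one token draws on the others. Real models add learned projections, many attention heads, and many layers on top of this basic operation.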

The output sequence reflects the most likely continuation or completion of the user query. During AI answer generation, models draw on internal memory (knowledge encoded during training) or external memory (documents fetched through retrieval-augmented generation) and select high-confidence text segments.

Training data defines what models learn and recall during generation. Core datasets include Common Crawl, Wikipedia, and high-authority domains. These sources supply the language structure and factual basis used in inference. Models assign higher confidence to content that mirrors this structure.

LLMs assign higher weight to content that includes clear structure, defined entities, and compressed information density. Content that lacks clarity, formatting, or semantic alignment fails to surface in generative responses, even when indexed in traditional web search.

What is the Importance of LLM Optimization?

LLM Optimization ensures content visibility as users increasingly use AI assistants instead of traditional search engines. Content optimized for LLMs appears more frequently in AI-generated answers. LLM visibility directly impacts brand awareness, traffic potential, and information distribution.

The primary importance of LLM optimization is maintaining presence in AI assistant responses. Brands and publishers who ignore LLM optimization risk becoming invisible when users ask questions through ChatGPT or Claude. Content that aligns with LLM retrieval mechanisms is far more likely to appear in responses, so visibility now depends on that alignment.

LLM optimization for AI visibility delivers specific benefits for businesses. LLMO increases brand mentions in AI-generated responses, which creates connections with potential customers. Optimized content becomes a citation source for factual information, which builds authority in knowledge domains. Companies using LLMO strategies see improved information accuracy when AI systems discuss their products or services.

The benefits of LLM optimization include reduced misinformation about brands and products. It ensures facts remain accurate when AI systems reference company information. LLMO creates ways for customers to discover businesses through conversational interfaces. Optimizing for LLMs protects against competitors who might otherwise dominate AI-generated responses in your industry.

For websites already investing in SEO, LLM optimization provides another distribution channel without requiring excessive additional work. Many optimization principles remain similar, though LLMO needs specific adjustments for AI comprehension. Companies with comprehensive LLMO strategies position themselves well for current and future search technology developments. They secure visibility in both traditional search results and AI assistant responses.

How to Optimize Content for LLMs and AI Answers?

To optimize content for AI answers, apply LLMO strategies that improve content retrievability, semantic clarity, and entity-level alignment. Recommended LLM optimization for AI visibility includes building topical authority, optimizing for semantic SEO, using proper faceted navigation, demonstrating EEAT, and more.

The 6 best LLM optimization strategies for AI visibility are listed below.

1. Build Topical Authority

Topical authority is the depth and breadth of content coverage on a specific subject, structured around a central entity and its related concepts. A domain gains topical authority by publishing interrelated content that covers all dimensions of a topic, including definitions, applications, problems, solutions, and comparisons.

Topical authority improves LLM content retrieval across prompt variations, subtopic expansions, and multi-turn conversations. Language models assign higher weight to domains that demonstrate complete knowledge around an entity because structured topical coverage increases embedding surface and entity co-occurrence frequency.

The steps to build topical authority are listed below.

- Create articles that cover each subtopic and use case connected to the main entity.

- Use semantic headings that mirror user questions and prompt phrasing.

- Interlink articles using anchor text that repeats the core entity or its attributes.

- Expand each page with definitions, lists, examples, and references to related concepts.

- Reinforce coverage by clustering content under a dedicated URL structure or content hub.

A domain targeting AI in education should include separate articles on LLM bias in grading, student-facing AI tools, prompt design for learning outcomes, and privacy laws related to education data. When these pages link to each other using semantically aligned anchor segments, the model retrieves them more often as grouped sources in generative responses.

Topical authority improves visibility in platforms such as Perplexity and Gemini, which prioritize cluster density and site-wide relevance when selecting multi-source summaries.
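The interlinking step above can be checked mechanically. The sketch below is one possible audit, assuming you already have a map of each cluster page to the internal pages it links to; the `cluster` data and the URL paths are hypothetical and follow the AI-in-education example.

```python
def find_unlinked_pages(cluster):
    """Return cluster pages that no other page in the cluster links to."""
    inbound = {page: 0 for page in cluster}
    for page, links in cluster.items():
        for target in links:
            # Count only links between different pages inside the cluster.
            if target in inbound and target != page:
                inbound[target] += 1
    return sorted(page for page, count in inbound.items() if count == 0)

# Hypothetical content hub: page path -> set of internal links on that page.
cluster = {
    "/ai-in-education/": {
        "/ai-in-education/llm-bias-in-grading/",
        "/ai-in-education/student-facing-ai-tools/",
    },
    "/ai-in-education/llm-bias-in-grading/": {"/ai-in-education/"},
    "/ai-in-education/student-facing-ai-tools/": {
        "/ai-in-education/",
        "/ai-in-education/llm-bias-in-grading/",
    },
    "/ai-in-education/privacy-laws/": {"/ai-in-education/"},
}

unlinked = find_unlinked_pages(cluster)
```

Here the privacy-laws article links out to the hub but receives no inbound links, so it is the page to add to the hub and sibling articles.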

2. Optimize For Semantic SEO

Semantic SEO is the process of structuring content based on entities, attributes, and relationships to improve meaning-based retrieval in language models. Semantic SEO replaces keyword stuffing with entity alignment, SPO formatting, and concept-level clarity that supports how LLMs encode and rank information.

Semantic SEO improves token match, embedding compatibility, and factual recognition during prompt resolution. Language models prioritize content that mirrors entity structures, maintains predicate consistency, and repeats concepts across context windows.

The methods to optimize for semantic SEO are listed below.

- Start each section by introducing a named entity and defining it using attributes or relationships.

- Repeat the named entity or a directly related attribute in the next sentence to establish entity chains.

- Write each paragraph using subject-predicate-object sentence structures to improve sentence retrievability.

- Add factual statements using exact measurements, comparisons, or dates to support token salience.

- Reuse synonyms or partial synonyms of entities and predicates to strengthen semantic layering.

An article on retrieval-augmented generation should define the term at the top, describe how it connects to vector databases, reference OpenAI and Meta as system providers, and compare RAG to standard transformer output. If the page repeats “retrieval system,” “external memory,” and “document store” in structured positions, semantic compatibility with model embeddings increases.

Semantic SEO supports answer inclusion in AI tools such as ChatGPT and Claude, which select content blocks based on vector proximity to user prompts, not string-matching keywords.
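Vector proximity is commonly measured with cosine similarity. The following is a minimal sketch of ranking content blocks against a prompt; the three-dimensional vectors are hand-written placeholders, since real embeddings come from a model and have hundreds or thousands of dimensions, and the block names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Placeholder embedding for a prompt about retrieval-augmented generation.
prompt_vector = [0.9, 0.1, 0.3]

# Placeholder embeddings for two content blocks on a site.
blocks = {
    "rag_definition": [0.8, 0.2, 0.4],
    "pricing_page": [0.1, 0.9, 0.2],
}

# Rank blocks by closeness to the prompt, closest first.
ranked = sorted(
    blocks,
    key=lambda name: cosine_similarity(prompt_vector, blocks[name]),
    reverse=True,
)
```

The block whose vector points in nearly the same direction as the prompt ranks first, even if it shares no exact keywords with the prompt.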

3. Use Proper Faceted Navigation

Faceted navigation is the structuring of content by multiple dimensions such as topic, intent, audience, or format to support precise retrieval by language models. Faceted systems create logical pathways for both users and LLMs to isolate relevant segments based on query signals.

Faceted navigation improves intent matching, content discoverability, and query resolution in AI-generated answers. Language models surface content more frequently when headings, URLs, and metadata reflect distinct layers of topic organization.

The actions to implement faceted navigation are listed below.

- Group content using topic-based subfolders (e.g., /llm-seo/, /llm-seo/tools/) or dedicated subdomains.

- Assign each page metadata that reflects its use case, target audience, and content type.

- Link between pages only after naming shared entities or relationships to build anchor continuity.

- Separate content by search intent using headings such as “How to…”, “What is…”, or “Best tools for…”.

- Limit each page to a specific facet, such as tutorial, definition, comparison, or case study.

A site that publishes LLM-related content can divide navigation into instructional content (e.g., “How to fine-tune a language model”), tool reviews (e.g., “Best embedding visualizers for LLMs”), and strategic overviews (e.g., “Why prompt engineering matters for generative SEO”). When this structure reflects real user segmentation, LLMs retrieve answers with higher precision and include multiple facets in multistep outputs.

ChatGPT browsing and Perplexity lookup functions both prioritize scoped URLs and clearly segmented page formats when returning citations in response to layered prompts.
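A scoped URL structure can be audited with a short script. This sketch assumes a hypothetical `/<topic>/<facet>/<slug>/` convention and example.com URLs; adapt the rule to whatever structure your site actually uses.

```python
from collections import defaultdict
from urllib.parse import urlparse

def group_by_facet(urls):
    """Group URLs by the facet segment of an assumed /topic/facet/slug/ path."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        # Paths shorter than topic/facet/slug are treated as overview pages.
        facet = segments[1] if len(segments) >= 3 else "overview"
        groups[facet].append(url)
    return dict(groups)

# Hypothetical URLs mirroring the instructional, review, and overview facets.
urls = [
    "https://example.com/llm-seo/tutorials/fine-tune-a-language-model/",
    "https://example.com/llm-seo/tools/best-embedding-visualizers/",
    "https://example.com/llm-seo/",
]

facets = group_by_facet(urls)
```

Pages that land in an unexpected group, or facets with only one page, point to places where the navigation does not yet reflect a distinct layer of topic organization.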

4. Demonstrate EEAT

EEAT stands for Experience, Expertise, Authoritativeness, and Trust, and it signals to large language models whether a source qualifies as reliable for answer generation. EEAT strengthens source credibility across AI systems that prioritize verifiable information and structured attribution when generating responses.

Demonstrating EEAT improves the likelihood of citation, quote inclusion, and factual alignment in AI-generated outputs. Language models assign higher confidence scores to content that includes author metadata, trusted sources, and consistent formatting across a domain.

The best techniques to demonstrate EEAT are listed below.

- Add author bylines with full names, credentials, and links to contributor profile pages.

- Use Person and Organization schema to structure author and publisher metadata.

- Cite third-party sources with clear domain trust, such as government sites, academic journals, or industry-leading blogs.

- Include publication dates and update timestamps to signal recency and version control.

- Maintain formatting consistency across all articles to reinforce brand identity and editorial quality.

An article about LLM hallucinations that cites an AI study (for example, an arXiv preprint or a peer-reviewed paper), includes an author bio linking to academic work, and marks the content with ScholarlyArticle schema ranks higher for trust-based inclusion. Platforms such as Claude and Gemini prioritize those pages when resolving prompts that include scientific, medical, or legal terms.

EEAT reinforces domain-level trust when multiple pages within a site demonstrate consistent author structure, source formatting, and topical depth across related concepts.
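Author bylines, publisher metadata, and publication dates are typically exposed as JSON-LD using schema.org types. The following is a minimal sketch that builds such markup; the helper name `article_jsonld`, the author, the URLs, and the dates are all placeholders.

```python
import json

def article_jsonld(headline, author_name, author_url, publisher_name, published, modified):
    """Build schema.org Article markup with Person and Organization metadata."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "publisher": {"@type": "Organization", "name": publisher_name},
        "datePublished": published,  # ISO 8601 date
        "dateModified": modified,    # update timestamp to signal recency
    }

# Placeholder values for illustration only.
markup = article_jsonld(
    headline="What Are LLM Hallucinations?",
    author_name="Jane Doe",
    author_url="https://example.com/authors/jane-doe/",
    publisher_name="Example Publisher",
    published="2024-01-15",
    modified="2024-06-01",
)

# Embed the JSON-LD in the page head.
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(markup, indent=2)
    + "</script>"
)
```

Generating the markup from one helper keeps author and publisher fields consistent across every article on the domain.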

5. Reinforce Brand Mentions Using Entity Framing

Entity framing is the structured repetition of a brand name within clear, attribute-based contexts that increase visibility inside large language models. Using entity framing for brand optimization improves how models understand the brand’s role, category, and relevance in response generation.

A brand is a named entity, and LLMs treat it as such. Language models identify and retrieve branded content by detecting entity signals (such as name repetition, relationships, and factual attributes) that match entries in their internal or external data sources.

Brand optimization through entity framing improves citation rates, answer inclusion, and contextual association in AI tools.

The techniques to reinforce brand mentions using entity framing are listed below.

- Introduce the brand with a full name and describe its function or category in the first sentence.

- Repeat the brand using a related attribute, such as tool, solution, founder, or product line.

- Connect the brand to recognized concepts, technologies, or entities using fact-based statements.

- Include references to industries, users, or use cases that strengthen brand relationships.

- Apply Organization schema and sameAs links to associate the brand with official profiles and external sources.

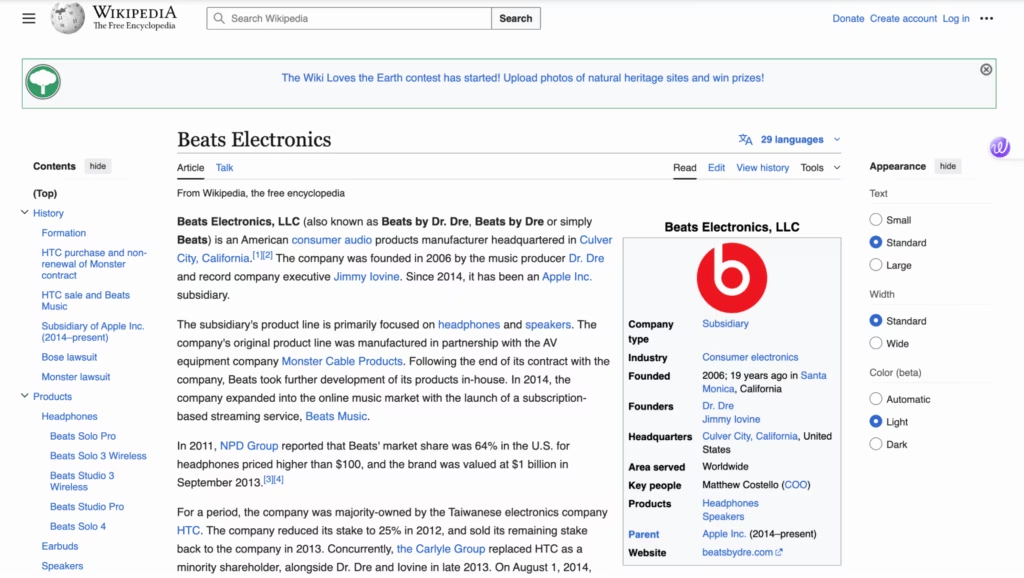

Wikipedia is the highest-performing brand entity model in LLM training and retrieval. Each Wikipedia page defines the entity by name, type, origin, and function in the opening paragraph. Subsequent sections repeat the name and connect it to other known entities using measurable, verifiable statements.

Wikipedia entries maintain consistent formatting, attribute layering, and relationship mapping that allow LLMs to extract accurate brand facts and associations.

A branded page that introduces Search Atlas as “a platform for AI-powered SEO tools,” repeats “Search Atlas platform” in descriptions of its features, and connects the brand to known tasks such as link building and technical audits increases the visibility of Search Atlas in AI-generated responses.
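The Organization schema and sameAs technique from the list above can be sketched as follows. The brand name, description, and profile URLs are hypothetical placeholders; replace them with the brand's real official profiles.

```python
import json

def organization_jsonld(name, url, description, same_as):
    """Build schema.org Organization markup that ties a brand to its profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists external pages that describe the same entity.
        "sameAs": list(same_as),
    }

# Hypothetical brand used only for illustration.
brand_markup = organization_jsonld(
    name="Example Brand",
    url="https://example.com/",
    description="Example Brand is a platform for AI-powered SEO tools.",
    same_as=[
        "https://www.linkedin.com/company/example-brand",
        "https://en.wikipedia.org/wiki/Example_Brand",
    ],
)

brand_json = json.dumps(brand_markup, indent=2)
```

The `description` follows the entity framing pattern: full brand name first, then its function or category in the same sentence.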

6. Match User Intent with Answer-Focused Formatting

Answer-focused formatting presents information in structures that align with how LLMs extract, summarize, and deliver content during generative responses. When content reflects user intent through layout, sentence structure, and semantic headings, language models retrieve it more reliably and rank it higher for inclusion in direct answers.

Matching user intent improves alignment between prompts and content blocks, increases answer accuracy, and supports full-sentence extraction in zero-click environments. Structured formatting allows models to identify which section of a page resolves a given query, especially when the format mimics common prompt structures.

The methods to match user intent with answer-focused formatting are listed below.

- Use question-based H2 or H3 headers that mirror real user prompts (e.g., “What is vector embedding?” or “How does prompt tuning work?”).

- Start each answer with a clear, complete sentence that directly resolves the query stated in the heading.

- Limit paragraphs to 2 to 4 sentences and separate each idea with line breaks or subheadings.

- Use bullet points and numbered lists for steps, benefits, comparisons, or criteria that match list-type prompts.

- Place the most important sentence first in each section, then follow with supporting facts or context.

Pages that follow the “question → definition → expansion → example” structure perform best inside platforms such as Perplexity and ChatGPT. These formats match how models chunk text into retrievable spans, especially when each block resolves a single intent.

Wikipedia demonstrates this by structuring entries with short definitions followed by context and citations. Reddit, in contrast, often ranks through conversational tone, but fails to segment answers cleanly, making it less reliable for factual prompts but more useful for open-ended or exploratory queries in LLMs.
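To see why question-headed blocks are easy to extract, consider a simple chunker. This is an illustrative sketch under the assumption that headings end with a question mark and sit on their own line; it does not represent how any specific AI platform chunks pages.

```python
def chunk_by_question(text):
    """Split plain text into blocks that each start with a question heading."""
    chunks = []
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.endswith("?"):
            # A question heading opens a new retrievable block.
            current = {"question": line, "body": []}
            chunks.append(current)
        elif current is not None:
            current["body"].append(line)
    return chunks

# Hypothetical page that follows the question -> definition -> expansion pattern.
page = """What is vector embedding?
A vector embedding is a list of numbers that represents meaning.
Embeddings place related concepts close together.

How does prompt tuning work?
Prompt tuning adjusts a small set of learned prompt parameters.
"""

chunks = chunk_by_question(page)
# The first body line of each block should answer the heading directly.
direct_answers = {c["question"]: c["body"][0] for c in chunks if c["body"]}
```

When the first sentence under each heading fully resolves the question, each block can be lifted out as a standalone answer.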

Which LLM Platforms and Chatbots should you Optimize For?

The most important LLM platforms and AI chatbots to optimize for are those that influence generative search visibility, zero-click answers, and real-time content retrieval.

The 7 main LLM platforms and chatbots you should optimize for are listed below.

- ChatGPT (OpenAI). ChatGPT generates answers from its pre-trained model and, in some modes, from Bing-powered live web access. Optimizing for ChatGPT increases brand exposure across millions of user queries per day.

- Google Gemini (formerly Bard). Gemini uses content from the web, Google’s Knowledge Graph, and internal systems such as Search and Maps. Structured formatting and EEAT signals increase visibility in AI Overviews (formerly SGE).

- Claude (Anthropic). Claude integrates high-context prompts and emphasizes structured, human-like reasoning. Content that demonstrates topical authority, factual compression, and clear attribution performs best.

- Perplexity AI. Perplexity ranks pages based on live retrieval relevance. Bullet lists, short paragraphs, and citation-ready structures increase inclusion. Pages from Wikipedia and high-EEAT blogs frequently appear.

- Microsoft Copilot (Bing Chat). Copilot uses OpenAI models with Bing search infrastructure. Technical SEO, page speed, and clean content formatting influence citation likelihood.

- Meta Llama (LLaMA 3). Meta’s models power the Meta AI assistant and are widely used in open-source applications and experimental UIs. Brands should prepare for integration by allowing CCBot access and using structured data.

- You.com. You.com uses AI to generate search and chatbot-style responses. Content formatted with clean Q&A and clear headings increases visibility in their answer modules.

How to Measure the Success of LLMO?

The success of LLM optimization depends on how frequently large language models retrieve, rank, and cite your content in AI-generated outputs. Unlike traditional SEO, which relies on SERP rankings and CTR, LLMO success is measured by content visibility in zero-click answers, branded mentions in generated summaries, and accurate factual inclusion.

The success metrics for LLMO are based on three measurable outcomes: visibility, accuracy, and consistency. Each outcome reflects how effectively content aligns with token-level retrieval, embedding compatibility, and source attribution logic used by LLMs.

The 6 main metrics for measuring LLMO success are listed below.

- AI Answer Inclusion Frequency. Track how often your content appears inside answers from ChatGPT, Gemini, Claude, or Perplexity. Use direct prompt testing and real-time response audits to identify citation or paraphrase frequency.

- Branded Mentions in AI Outputs. Measure how consistently the brand name appears in generated responses. Branded inclusion indicates strong entity recognition and high source trust. Include structured brand references in prompt tests to assess surface match.

- Accuracy of Cited Facts. Evaluate whether AI systems reproduce your content’s claims accurately. High factual fidelity shows effective structuring, proper semantic alignment, and successful token compression for LLM retrieval.

- Contextual Recall Across Related Prompts. Run prompt variations across tools to check whether LLMs recall your content in follow-up, long-tail, or reformulated questions. This tests embedding-level alignment and topical authority.

- Citations and URL Mentions in Source-Aware Platforms. Monitor Perplexity, Bing Chat (Copilot), and Gemini for direct citations or source URL inclusion. These systems reveal which formats, headers, or schema structures result in citation-level trust.

- Traffic and Referral Signals from AI Interfaces. Use analytics tools, Bing Webmaster Tools, and Google Search Console to identify new referrers from LLM-powered interfaces. Look for shifts in branded impressions and AI search terms.

A page that includes structured definitions, entity-rich headings, and consistent formatting for related topics is more likely to be retrieved by systems such as ChatGPT and Claude. When these LLMs cite the source by name, reproduce its facts, or return the same content across multiple prompts, the LLMO strategy is successful.
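Two of the metrics above, branded mentions and citation frequency, reduce to simple ratios once prompt-test results are logged. The sketch below assumes a hypothetical log format (a list of dicts with `text` and `citations` keys); the sample entries are made-up placeholders, not real model output.

```python
from urllib.parse import urlparse

def mention_rate(responses, brand):
    """Share of responses whose text mentions the brand (case-insensitive)."""
    if not responses:
        return 0.0
    hits = sum(1 for r in responses if brand.lower() in r["text"].lower())
    return hits / len(responses)

def citation_rate(responses, domain):
    """Share of responses citing at least one URL on the domain or a subdomain."""
    if not responses:
        return 0.0

    def cites(response):
        for url in response["citations"]:
            host = (urlparse(url).hostname or "").lower()
            if host == domain or host.endswith("." + domain):
                return True
        return False

    return sum(1 for r in responses if cites(r)) / len(responses)

# Placeholder audit log for illustration only.
audit = [
    {"text": "Options include Example Brand and others.",
     "citations": ["https://www.example.com/tools/"]},
    {"text": "LLMO tailors content for AI answers.", "citations": []},
    {"text": "example brand offers visibility tracking.",
     "citations": ["https://other.test/review/"]},
    {"text": "Several vendors track AI citations.", "citations": []},
]

brand_share = mention_rate(audit, "Example Brand")
cited_share = citation_rate(audit, "example.com")
```

Running the same prompt set on a schedule and comparing these ratios over time gives a rough view of consistency across prompts and platforms.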

What software exists for LLM optimization?

LLM optimization software includes platforms that track answer visibility, map LLM data sources, and influence citation pathways in generative outputs. Relevant LLM optimization software includes Search Atlas QUEST, Diffbot, OpenPage.ai, and Market Brew.

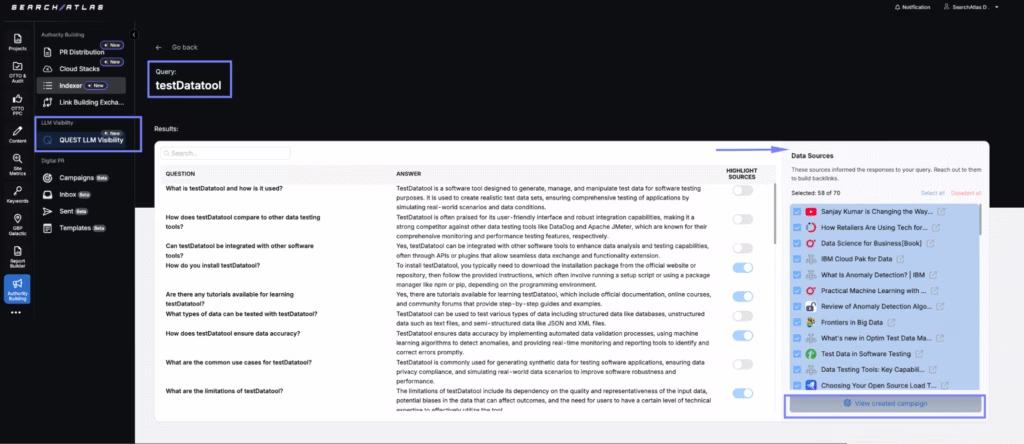

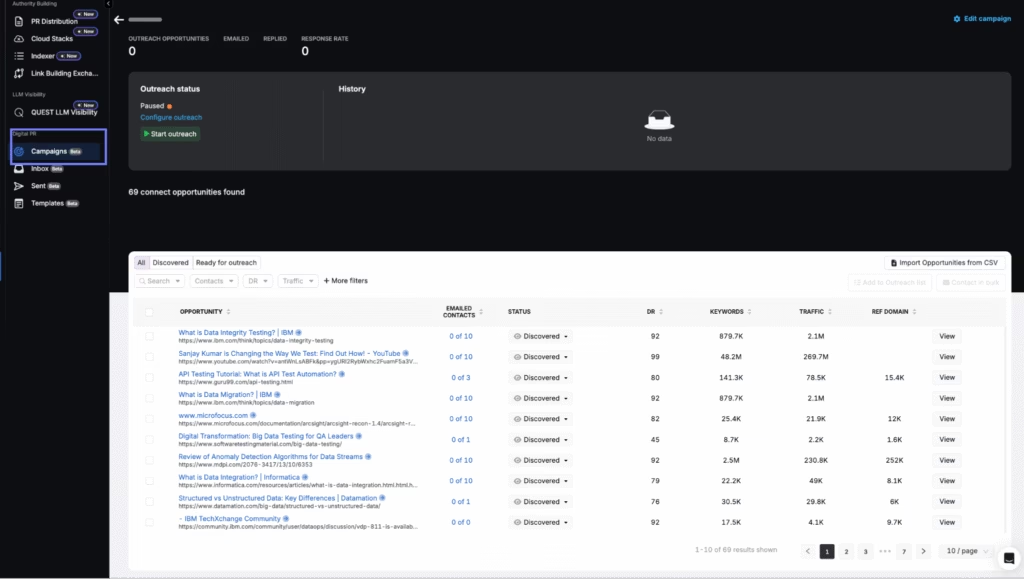

Search Atlas QUEST is top-rated LLM optimization software for AI. Search Atlas QUEST operates as an interrogation system that uncovers how large language models generate answers. QUEST identifies the documents that influence AI responses and enables users to shape those responses through targeted outreach. QUEST queries AI systems with high-volume prompts, extracts citations, and matches those URLs to real-time documents. Users gain direct access to the source layer behind LLM responses.

After QUEST identifies the source documents, it links each to the associated editors, publishers, or authors. The Search Atlas SEO platform directly supports outreach campaigns with built-in scraping, contact extraction, and email automation.

Users send page-specific requests to modify, update, or supplement data that LLMs reuse during inference. The Search Atlas QUEST tool uses known ingestion pathways (such as Bing SERP, Common Crawl, and custom crawlers) to increase visibility across AI outputs.

Other LLM optimization software options, like Diffbot, emphasize knowledge graph construction. OpenPage.ai monitors source overlap across AI platforms. Market Brew models ranking and visibility from a search engine simulation standpoint.

Other LLM optimization software platforms offer graph-based visibility monitoring, but do not support reverse-engineering or direct document targeting like Search Atlas QUEST does. QUEST uniquely enables citation control through real-world editorial targeting.

Is It Possible to Track Traffic from LLMs?

Yes, it is possible to track traffic from LLMs, but only under specific conditions.

Traffic from large language models (LLMs) becomes trackable when AI systems include real-time web retrieval and direct users to source URLs. Tools like Perplexity and ChatGPT with browsing mode attribute citations and allow outbound clicks, which generate referral traffic visible in analytics. You can detect this traffic by inspecting referral paths, user agents (e.g., ChatGPT-User), or session behavior from platforms like Bing or DuckDuckGo with AI overlays.

However, most LLMs, including Claude and Gemini in default modes, generate answers without attribution or linking, which prevents click-through tracking. In these cases, visibility remains measurable only through tools like Search Atlas QUEST, which reverse-engineer model outputs to infer content influence without direct referral data.
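Where referral data exists, it can be separated from other traffic by inspecting the referrer and user agent of each request. The following is a rough sketch: the hostname list is an assumption to verify against your own logs, and `ChatGPT-User` is the only user-agent token taken from the text above.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames for AI interfaces; confirm against real log data.
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "perplexity.ai",
    "copilot.microsoft.com",
    "gemini.google.com",
}
# User-agent tokens that indicate an AI system fetching a page for a user.
AI_USER_AGENT_TOKENS = ("ChatGPT-User",)

def classify_hit(referrer, user_agent):
    """Label a request as an AI referral, an AI fetch, or other traffic."""
    host = (urlparse(referrer).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in AI_REFERRER_HOSTS:
        return "ai_referral"  # a person clicked a link inside an AI answer
    if any(token.lower() in user_agent.lower() for token in AI_USER_AGENT_TOKENS):
        return "ai_fetch"  # an AI system requested the page directly
    return "other"
```

For example, `classify_hit("https://www.perplexity.ai/search?q=llmo", "Mozilla/5.0")` returns `"ai_referral"`, while a request with no referrer and a user agent containing `ChatGPT-User` returns `"ai_fetch"`.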

What is the difference between LLMO and SEO?

LLMO (Large Language Model Optimization) focuses on influencing AI-generated answers, while SEO (Search Engine Optimization) focuses on ranking content in search engine results.

Large Language Model Optimization, or LLMO, aims to make content retrievable, referenceable, and quotable by generative engines like ChatGPT, Perplexity, and Gemini. The goal of Large Language Model Optimization is to be selected as a source when LLMs generate answers. For example, adding entity-rich answers, proper schema, and allowing CCBot access increases the chance that a product list appears in a ChatGPT answer when a user asks, “What are the best protein powders for women?”

Search Engine Optimization, or SEO, improves a website’s visibility in traditional search engines like Google by optimizing for crawling, indexing, and keyword relevance. The goal of search engine optimization is to earn organic traffic from search engine results pages (SERPs). For example, optimizing a blog post with title tags, internal links, and structured data helps it rank for “best protein powders for women” on Google.

SEO impacts click-through from SERPs. LLMO influences visibility inside AI-generated answers. Both SEO and LLMO require technical access, topical authority, and content clarity, but they operate in different discovery systems.

Is LLMO replacing SEO?

No, LLMO is not replacing SEO. LLMO complements SEO as part of a broader AI visibility strategy. Traditional SEO still governs how content ranks in Google Search, Bing, and other engines with indexed results. Search engines remain dominant traffic sources for transactional and informational queries. However, generative engines powered by LLMs now intercept user intent earlier in the journey, especially for discovery, research, and summarization.

What is the difference between LLMO and AI SEO?

LLMO optimizes content for inclusion in LLM-generated answers. AI SEO uses AI tools to automate or enhance traditional SEO workflows.

Large Language Model Optimization focuses on making content visible, retrievable, and quotable by generative engines. The goal of LLMO is to influence answer selection. For example, allowing CCBot to crawl a product list and formatting it with schema improves inclusion in AI product recommendation outputs.

AI SEO applies artificial intelligence to improve how users execute SEO strategies. AI SEO tools like Search Atlas OTTO SEO automate optimization recommendations. For example, AI SEO platforms like OTTO SEO may suggest internal links, rewrite headlines, or optimize paragraph length based on ranking correlation models.

LLMO optimizes for AI visibility inside generative platforms. AI SEO uses AI to optimize for search engine rankings.

What is the difference between LLMO and AEO?

LLMO (Large Language Model Optimization) influences how AI generates answers. AEO (Answer Engine Optimization) focuses on how search engines serve direct answers to queries.

While AEO focuses specifically on appearing in direct question-answer scenarios, LLMO addresses how content functions within the complete knowledge processing capabilities of language models.

AEO structures content for search engines that provide zero-click answers, such as Google’s AI Overviews or Featured Snippets. The goal of AEO is to get selected by the engine answer layer. For example, AEO increases the chance that a paragraph appears in Google’s AI snapshot for “how to clean suede shoes.”

Large Language Model Optimization focuses on LLM memory and retrieval-augmented generation (RAG) systems. Answer engine optimization relies on retrieval and ranking logic in the search engine.

What is the difference between LLMO and GEO?

LLMO (Large Language Model Optimization) makes content more accessible to language models. GEO (Generative Engine Optimization) shapes how language models generate branded, product-driven answers.

LLMO techniques include allowing crawler access (e.g., CCBot), improving entity clarity, and formatting data for retrievability. The goal of LLMO is inclusion in the generative engine source layers. For example, LLMO helps a how-to guide become a source that Claude cites in its summary.
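Crawler access is controlled through robots.txt, and the rules can be verified before deployment. This is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def crawler_allowed(robots_txt, user_agent, url):
    """Check whether a robots.txt body lets the given crawler fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Hypothetical robots.txt that explicitly allows Common Crawl's CCBot.
ROBOTS_TXT = """\
User-agent: CCBot
Allow: /

User-agent: *
Disallow: /private/
"""

# A rule set that would block CCBot entirely, shown for contrast.
BLOCKING_ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /
"""

guide_open = crawler_allowed(ROBOTS_TXT, "CCBot", "https://example.com/guides/how-to/")
guide_blocked = crawler_allowed(BLOCKING_ROBOTS_TXT, "CCBot", "https://example.com/guides/how-to/")
```

Running this check against the live robots.txt for each crawler you care about catches accidental blanket `Disallow` rules that would keep content out of crawl-based datasets.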

GEO influences product mentions and brand placements inside LLM-driven shopping, affiliate, or recommendation outputs. The goal of GEO is to get product URLs surfaced during commercial queries. For example, GEO helps an eCommerce brand appear in ChatGPT’s suggestions when users ask for “best wireless earbuds under $100.”

GEO builds on LLMO. Generative engine optimization focuses on commercial queries, product discovery, and outbound influence using tools like QUEST.