Log file analysis examines web server records capturing every request to your website, including IP addresses, user agents, URLs, timestamps, HTTP status codes, and referrers. This first-party data reveals how search engines and users interact with your site, enabling identification of technical issues, crawl budget waste, orphan pages, broken links, redirect problems, and performance bottlenecks. Unlike third-party tools, server logs provide unfiltered, comprehensive data on every search engine crawler that visits your site.

Analysis involves accessing logs through hosting dashboards, FTP, or automated tools like OTTO SEO, then examining status code errors, bot activity patterns, crawling trends, and non-indexable URL waste. Regular monitoring prevents SEO damage, optimizes crawler efficiency, ensures important pages receive attention, and provides complete intelligence beyond Google Search Console’s (GSC) limited perspective.

What is a Log File?

A log file is a computer-generated record that automatically captures and stores chronological information about events, activities, or transactions within a system, application, or network. These files serve as digital audit trails, systematically documenting what happened, when it happened, and often who or what initiated the action. Log files are essential for monitoring system performance, troubleshooting issues, security analysis, and maintaining historical records of system behavior.

In general computing contexts, log files track everything from user logins and system errors to application performance metrics and security events.

What is a Log File in Search Engine Optimization?

In the context of search engine optimization (SEO), log files specifically refer to web server log files that record every request made to a website. These files are goldmines of data for SEO professionals because they provide unfiltered, first-hand information about how both users and search engines interact with a website.

The information that SEO log files contain is listed below.

- IP Addresses. They help identify the source of each request, which allows you to distinguish between regular users and search engine bots like Googlebot, Bingbot, or other crawlers.

- User Agents. They reveal the type of device, browser, or crawler making the request, which is crucial for understanding which search engines are visiting your site and how often.

- Requested URLs. They show exactly which pages, images, CSS files, JavaScript, and other assets were accessed, including attempts to reach non-existent pages (broken links).

- Timestamps. They provide precise timing of when each request occurred, which enables the analysis of crawl patterns and user behavior over time.

- HTTP Status Codes. They indicate the server’s response to each request (200 for successful page loads, 404 for pages not found, 301 for permanent redirects, etc.).

- Referrers. They show the source that directed users to specific pages, which helps track traffic sources and internal linking effectiveness.
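To show how these fields map onto a raw log entry, here is a minimal Python sketch that parses one line in the widely used Apache/Nginx "combined" log format. It assumes your server writes that format. The pattern, the `parse_line` helper, and the sample line are illustrative, and custom formats need a different pattern.

```python
import re
from datetime import datetime

# Assumed layout ("combined" format):
# %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-Agent}i"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) \S+" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one combined-format line, or None if it doesn't match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    entry = m.groupdict()
    # Timestamps look like 10/Oct/2024:13:55:36 +0000
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    return entry

# Hypothetical sample line
sample = ('66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /blog/log-files HTTP/1.1" '
          '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
entry = parse_line(sample)
print(entry["ip"], entry["url"], entry["status"])  # prints: 66.249.66.1 /blog/log-files 200
```

Lines that don't fit the assumed format return `None`, so you can count and inspect them rather than silently misreading them.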

Log files are usually kept for only a short time, typically a few weeks to a few months, depending on your hosting provider’s retention settings and how much traffic your site gets. High-traffic websites fill their log files faster, so older entries are rotated out sooner.

What is Log File Analysis?

Log file analysis is the step-by-step process of downloading, reviewing, and checking server log files to find technical SEO problems, crawling issues, and performance slowdowns before they become serious. Log file analysis involves looking at the raw data that tracks every request made to your server to understand how search engines and visitors interact with your website.

Why is Log File Analysis Important?

Log file analysis is important because it provides direct insight into how search engines crawl and interact with your website. The main benefits of log file analysis are listed below.

- First-party Data Source. Gives you raw, unfiltered information directly from your server without relying on outside tools, providing complete control over your data.

- Crawl Pattern Discovery. Shows which pages search engines visit most and least often, helping you understand content prioritization and identify neglected important pages.

- Crawl Budget Optimization. Finds low-quality pages that waste search engine resources, allowing you to redirect attention to important content.

- Technical Issue Detection. Spots errors like broken links, redirect problems, and server issues that prevent search engines from reaching your pages.

- Page Speed Monitoring. Identifies slow-loading pages that hurt search rankings and user experience, provided your server is configured to record response times.

- Orphan Page Identification. Discovers pages with no internal links pointing to them, which search engines cannot reach by following links within your site.

- Crawl Activity Tracking. Monitors sudden increases or decreases in search engine visit frequency, which signals potential problems or changes.

- Search Engine Accessibility. Confirms search engines are able to reach and read your most important pages.

- Performance Optimization. Provides clear data for making informed decisions about improving website search performance.
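Page speed monitoring from logs only works if your server records how long each request took. Default combined-format logs do not include this, but you can add it (for example, Nginx’s `$request_time` in seconds or Apache’s `%D` in microseconds). The sketch below assumes the response time in seconds is appended as the last field of each line and flags URLs whose median time exceeds a threshold. The `slow_urls` name and the 1-second default are hypothetical choices.

```python
from collections import defaultdict
from statistics import median

def slow_urls(lines, threshold_s=1.0):
    """Return {url: median seconds} for URLs slower than threshold_s.

    Assumes combined-format lines with the response time in seconds appended
    as the final space-separated field (as Nginx's $request_time would be).
    """
    times = defaultdict(list)
    for line in lines:
        try:
            request = line.split('"')[1]           # e.g. 'GET /page HTTP/1.1'
            url = request.split()[1]
            seconds = float(line.rsplit(" ", 1)[1])
        except (IndexError, ValueError):
            continue  # skip lines that don't fit the assumed format
        times[url].append(seconds)
    medians = {url: median(vals) for url, vals in times.items()}
    return {url: m for url, m in medians.items() if m > threshold_s}

line = '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /slow HTTP/1.1" 200 512 "-" "Googlebot" 2.5'
print(slow_urls([line]))  # {'/slow': 2.5}
```

Using the median rather than the mean keeps one unusually slow request from flagging an otherwise fast page.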

How to Perform Log File Analysis?

To perform log file analysis, follow the steps outlined below.

1. Access the Log File

To access the log file, use one of the paths listed below.

- Hosting Account Dashboard. Log in to your hosting account. Look for a section called “Log Manager,” “Access Logs,” or “File Manager.” Click on it and browse to find folders named “logs” or “.logs.” Click to download the log files you need.

- File Transfer Protocol (FTP). Download and install FTP software like FileZilla on your computer. Get your server connection details from your hosting provider. Connect to your server using the software. Browse to the logs folder and download the files to your computer.

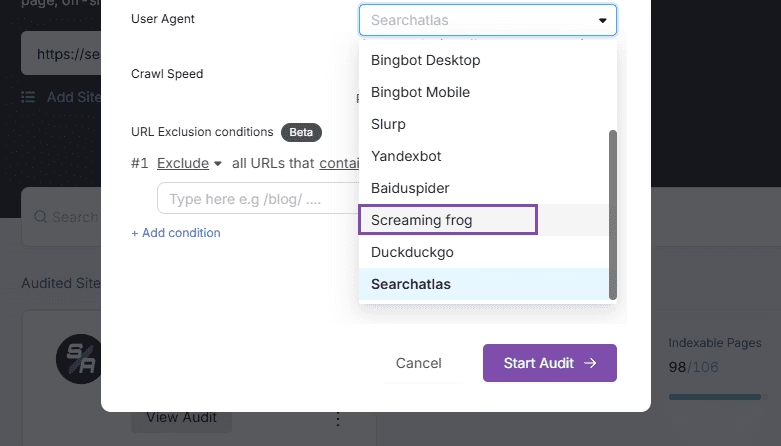

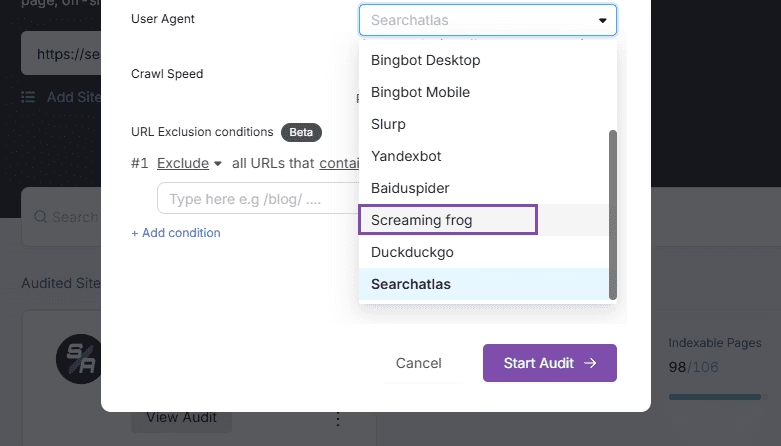

- Analysis Tools Like Screaming Frog. Upload or import your log files into the tool. The tool automatically parses and processes them. Review the analysis reports the tool creates for you.

- AI Tools Like OTTO SEO. Connect your website to the platform by installing its pixel code. The tool automatically monitors your server and collects log data. View real-time analysis and reports without having to download files manually.

2. Download and Prepare Log Files

Log files come in different server formats that sometimes need conversion for analysis tools. Many free tools and basic analysis software only work with specific formats like CSV or standard Apache logs. Sometimes you need to convert or clean up the files first if your server uses a custom or less common format.

Premium tools like OTTO SEO are built to handle multiple formats automatically so you don’t need to worry about converting or cleaning up your raw log files before analysis.
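If you prepare files yourself, converting them to CSV makes them usable in spreadsheets and most basic tools. Below is a minimal Python sketch, assuming combined-format logs that may be gzip-compressed (rotated logs such as `access.log.1.gz` often are). The `log_to_csv` name and the column names are illustrative, and lines that don't match the pattern are counted and skipped rather than converted.

```python
import csv
import gzip
import re

# Assumed: Apache/Nginx "combined" format. Adjust the pattern for custom formats.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) \S+" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)
FIELDS = ["ip", "time", "method", "url", "status", "size", "referrer", "user_agent"]

def log_to_csv(log_path, csv_path):
    """Convert a combined-format access log (plain or .gz) to CSV.

    Returns (rows_written, lines_skipped).
    """
    opener = gzip.open if log_path.endswith(".gz") else open
    written = skipped = 0
    with opener(log_path, "rt", encoding="utf-8", errors="replace") as src, \
         open(csv_path, "w", newline="", encoding="utf-8") as dst:
        writer = csv.DictWriter(dst, fieldnames=FIELDS)
        writer.writeheader()
        for line in src:
            m = COMBINED.match(line)
            if m is None:
                skipped += 1  # custom or malformed line
                continue
            writer.writerow(m.groupdict())
            written += 1
    return written, skipped

# Example (hypothetical paths):
# written, skipped = log_to_csv("access.log.1.gz", "access_log.csv")
```

A high skipped count is a sign that your server uses a custom format and the pattern needs adjusting.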

3. Analyze the Log Files

You have three options for analyzing your log files: manual analysis, specialized software, or fully automated analysis.

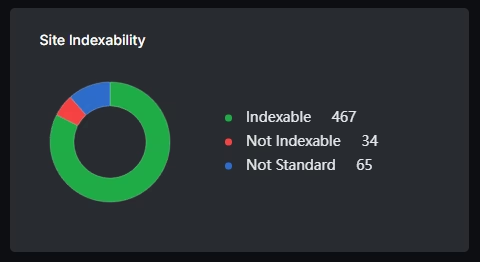

For manual analysis, import the data into Google Sheets for basic review. This approach usually means reviewing tens or hundreds of thousands of rows of data by hand. Specialized software is more efficient because it handles that work automatically, although you still need to locate, and sometimes prepare, the log files yourself. These tools report on your website’s traffic, crawl depth, indexability, internal links, and status codes to provide comprehensive insights.
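As a middle ground between scrolling through a spreadsheet and adopting dedicated software, a short script can summarize which URLs a crawler requests most often. This sketch assumes combined-format lines and identifies the bot by a user-agent substring, which anyone can spoof. `top_crawled_urls` is a hypothetical helper, and it does not handle escaped quotes inside fields.

```python
from collections import Counter

def top_crawled_urls(lines, bot="Googlebot", n=10):
    """Count requests per URL whose user agent contains `bot`; return the n most frequent."""
    counts = Counter()
    for line in lines:
        parts = line.split('"')
        # Combined format splits into: prefix, request, status/size, referrer, ' ', user agent, ...
        if len(parts) < 6 or bot not in parts[5]:
            continue
        request = parts[1].split()  # e.g. ['GET', '/page', 'HTTP/1.1']
        if len(request) >= 2:
            counts[request[1]] += 1
    return counts.most_common(n)

# Example (hypothetical file name):
# with open("access.log", encoding="utf-8", errors="replace") as f:
#     for url, hits in top_crawled_urls(f):
#         print(hits, url)
```

Comparing this list with the pages you consider most important is a quick way to spot neglected content.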

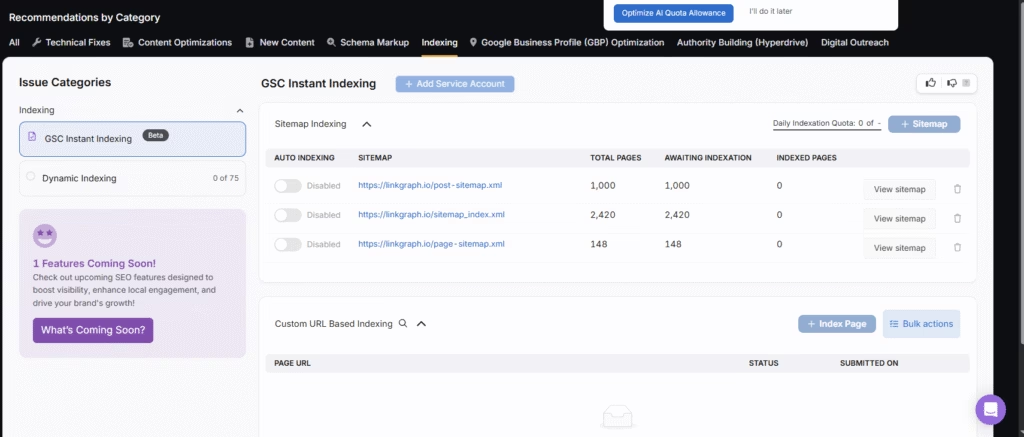

Search Atlas provides a fully automated solution through its AI agent, OTTO SEO. Once you install the agent, no manual work is required because it processes everything automatically. The OTTO&Audit section of the dashboard shows crawl monitoring and log file insights.

The key points for log file analysis are listed below.

- Status Code Errors. Examine status codes and identify requests that return errors instead of a 200 response, such as 404 Not Found, 410 Gone, or 5xx server errors, which prevent search engines from accessing your content.

- Crawl Budget Waste. Note where search engines spend requests on non-indexable URLs, such as redirects, pages marked noindex, or parameter URLs canonicalized to another page, since these crawls provide no SEO value.

- Bot Activity Patterns. Check which search engine bots crawl your website most frequently to understand crawler preferences and priorities. Search Atlas lets you set up which bot you want to use.

- Crawling Trends. Monitor crawling patterns over time and identify increases or decreases in crawling activity that may signal site changes or technical problems.

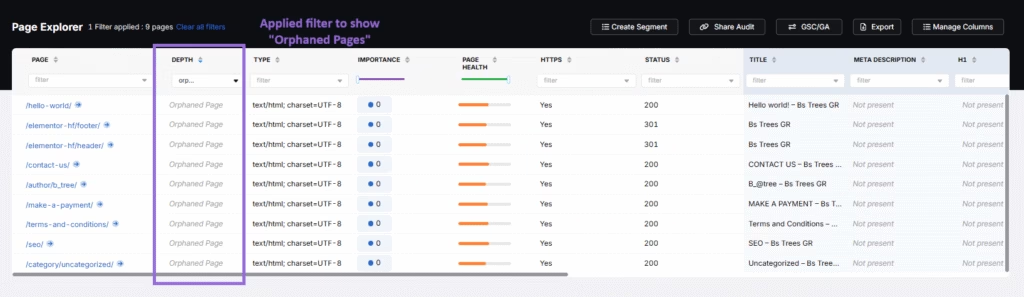

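- Orphan Pages. Look for orphan pages: URLs that appear in your logs but have no incoming internal links from other pages on your site. Search engines can still reach them through sitemaps, external links, or old links, but without internal links they are harder to discover and tend to be crawled and indexed less reliably.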
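The status code and orphan page checks reduce to simple counting and set operations once log entries are parsed. This sketch assumes entries are dicts with `url`, `status` (int), and `user_agent` keys, and that `linked_urls` is a list of URLs your own site crawl found through internal links (for example, exported from a crawler). Both helper names are hypothetical. In practice, normalize URLs (trailing slashes, query strings) on both sides before comparing, or the orphan list will contain false positives.

```python
from collections import Counter

def bot_errors(entries, bot="Googlebot"):
    """Count (status, url) pairs for 4xx/5xx responses served to `bot`.

    Change the condition to `e["status"] != 200` to include redirects as well.
    """
    return Counter(
        (e["status"], e["url"])
        for e in entries
        if bot in e["user_agent"] and e["status"] >= 400
    )

def orphan_candidates(entries, linked_urls, bot="Googlebot"):
    """URLs the bot requested that the site crawl did not find via internal links."""
    crawled_by_bot = {e["url"] for e in entries if bot in e["user_agent"]}
    return sorted(crawled_by_bot - set(linked_urls))

entries = [
    {"url": "/old", "status": 404, "user_agent": "Googlebot/2.1"},
    {"url": "/landing", "status": 200, "user_agent": "Googlebot/2.1"},
]
print(bot_errors(entries))                 # Counter({(404, '/old'): 1})
print(orphan_candidates(entries, ["/old"]))  # ['/landing']
```

Treat the orphan output as candidates to review, since assets, feeds, and retired URLs will also show up there.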

Search Atlas OTTO SEO and Site Auditor monitor your website 24/7 and send alerts for any technical SEO issues. OTTO SEO differs from other tools on the market because it can fix issues in one click, directly from the dashboard.

What are Common Misconceptions About Log File Analysis?

We list common misconceptions about log file analysis below.

Small Websites Don’t Need Log File Analysis

Your site’s size doesn’t determine whether Google visits important pages or wastes time on unnecessary URLs. Understanding crawler activity helps any business optimize its search presence and avoid indexation problems.

Only Analyze Logs When Problems Occur

Waiting for problems means catching damage after it hurts your SEO performance. Regular monitoring prevents issues from getting worse and provides comparison data when problems arise. Consistent analysis helps you understand normal crawler patterns, making problems easier to spot. Schedule regular log reviews like other SEO maintenance tasks.
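One way to make "normal crawler patterns" concrete is to count bot hits per day and flag days that sit far from the average. This is a simple mean-and-standard-deviation heuristic, not a method any particular tool is known to use. The `unusual_crawl_days` name, the input of one `date` per bot request, and the `k=2.0` threshold are assumptions to tune against your own data.

```python
from collections import Counter
from datetime import timedelta
from statistics import mean, pstdev

def unusual_crawl_days(hit_dates, k=2.0):
    """Return {day: hits} for days whose hit count is more than k population
    standard deviations away from the mean daily count.

    hit_dates: one datetime.date per bot request (assumed input shape).
    Days between the first and last date with no hits are counted as 0.
    """
    daily = Counter(hit_dates)
    if not daily:
        return {}
    first, last = min(daily), max(daily)
    days = [first + timedelta(days=i) for i in range((last - first).days + 1)]
    counts = [daily[d] for d in days]  # Counter returns 0 for missing days
    if len(counts) < 2:
        return {}
    mu, sigma = mean(counts), pstdev(counts)
    if sigma == 0:
        return {}  # perfectly flat activity: nothing stands out
    return {d: c for d, c in zip(days, counts) if abs(c - mu) > k * sigma}

# Example, with entries parsed from your logs (hypothetical):
# hit_dates = [e["time"].date() for e in entries if "Googlebot" in e["user_agent"]]
# print(unusual_crawl_days(hit_dates))
```

Running this on every review gives you a record of flagged days to compare against deployments, migrations, or server incidents.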

Google Search Console Eliminates the Need for Server Logs

The enhanced Crawl Stats dashboard convinces many that server logs are obsolete. However, Search Console shows only Google’s own perspective, as aggregated totals and sample requests. Server logs capture comprehensive activity from multiple search engines plus granular details about every server interaction. Combining both resources provides complete crawler intelligence rather than partial insights.
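Because logs record every crawler, you can break activity down by search engine, which Crawl Stats cannot do. The sketch below matches a few well-known user-agent tokens (an illustrative, incomplete list). User agents are easy to fake, so it also includes a reverse-then-forward DNS check of the kind Google documents for verifying Googlebot. The function names are hypothetical, and the DNS resolvers are parameters so you can test the logic without network access.

```python
import socket
from collections import Counter

# User-agent substrings for a few well-known crawlers (illustrative, not exhaustive).
CRAWLER_TOKENS = {
    "Google": "googlebot",
    "Bing": "bingbot",
    "Yandex": "yandexbot",
    "Baidu": "baiduspider",
    "DuckDuckGo": "duckduckbot",
    "Apple": "applebot",
}

def hits_by_search_engine(user_agents):
    """Count requests per search engine by case-insensitive user-agent matching."""
    counts = Counter()
    for ua in user_agents:
        ua_lower = ua.lower()
        for engine, token in CRAWLER_TOKENS.items():
            if token in ua_lower:
                counts[engine] += 1
                break
        else:
            counts["Other"] += 1  # browsers, tools, and unlisted bots
    return counts

def is_verified_googlebot(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname_ex):
    """Reverse-resolve ip, check the hostname's domain, then forward-resolve
    the hostname and confirm it maps back to the same ip.

    Note: gethostbyname_ex returns IPv4 addresses only; IPv6 crawler IPs
    would need socket.getaddrinfo for the forward lookup.
    """
    try:
        host = reverse(ip)[0]
    except OSError:  # no PTR record or lookup failure
        return False
    if not host.endswith((".googlebot.com", ".google.com")):
        return False
    try:
        return ip in forward(host)[2]
    except OSError:
        return False
```

Verifying only the IPs that claim to be Googlebot keeps the number of DNS lookups manageable on large logs.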