Technical SEO (Search Engine Optimization) refers to optimizing a website’s infrastructure to enhance its organic search performance. Technical SEO ensures that search engines can efficiently crawl, understand, and index a site’s content, ultimately improving visibility and rankings. Unlike content-focused or off-page SEO strategies, technical SEO focuses on site architecture, speed, mobile usability, and overall website health. Technical SEO plays a critical role in ensuring that a website is not only discoverable by search engines but also easy and convenient for people to use.

A well-optimized technical foundation enhances website performance, strengthens security, and can increase conversion rates. Key technical SEO factors include crawlability, site structure, HTTPS (SSL/TLS certificate), HTTP status codes, page speed, and mobile-friendliness. To meet these requirements, websites should follow technical SEO best practices such as optimizing Core Web Vitals, refining site structure, and implementing proper structured data markup. A strong technical foundation supports complementary aspects of SEO, such as content strategy, link building, and local optimization, creating a solid framework for sustainable organic visibility and website performance.

What Is Technical SEO?

Technical SEO is the process of optimizing a website’s backend infrastructure to ensure efficient crawling, rendering, and indexing by search engines. Technical search engine optimization has three main components: search engine discoverability, website performance, and structured data implementation.

Unlike on-page SEO, which enhances content relevance, and off-page SEO, which emphasizes external trust signals like backlinks, technical SEO optimizes the website’s server configuration, resource management, and structured data so that search engines can process the site efficiently.

What Is Technical Optimization in Overall SEO Strategy?

Technical optimization in an overall SEO strategy is the refinement of a website’s structural and performance elements so that search engines and users can interact with the site without friction.

In the broader SEO strategy, technical SEO serves as the foundation that supports both on-page and off-page efforts. Content relevance and backlink authority cannot reach their full ranking potential without a well-structured backend. Many consider technical SEO an advanced discipline, as it requires in-depth knowledge of search engine behavior, web performance factors, and structured data implementation.

Technical optimization bridges the gap between SEO and user experience (UX) by improving responsiveness, load speed, and interaction signals, making it a critical component of modern SEO marketing strategies.

What Is the Importance of Technical SEO?

Technical SEO is essential for ensuring search engines can accurately access, interpret, and rank a website’s content without encountering structural inefficiencies while enhancing the user experience.

A technically well-optimized website helps search engines allocate crawl resources, prioritize indexation, and assess relevance. Without it, websites may suffer from crawl inefficiencies, rendering errors, and indexing delays, leading to ranking fluctuations and reduced organic visibility.

As websites grow, a scalable technical SEO strategy ensures that new pages, content, and features integrate without disrupting search engine accessibility. Beyond search engine accessibility, technical SEO directly impacts website stability and user engagement. A fast-loading, mobile-friendly website with structured navigation improves usability, reduces bounce rates, and drives conversions.

For businesses targeting global audiences, technical SEO is crucial for implementing hreflang tags, sending clear geo-targeting signals, and ensuring proper indexation across different regions and languages using localized URL structures (ccTLDs, subdomains, or subdirectories).

What Are the Most Important Technical SEO Ranking Factors?

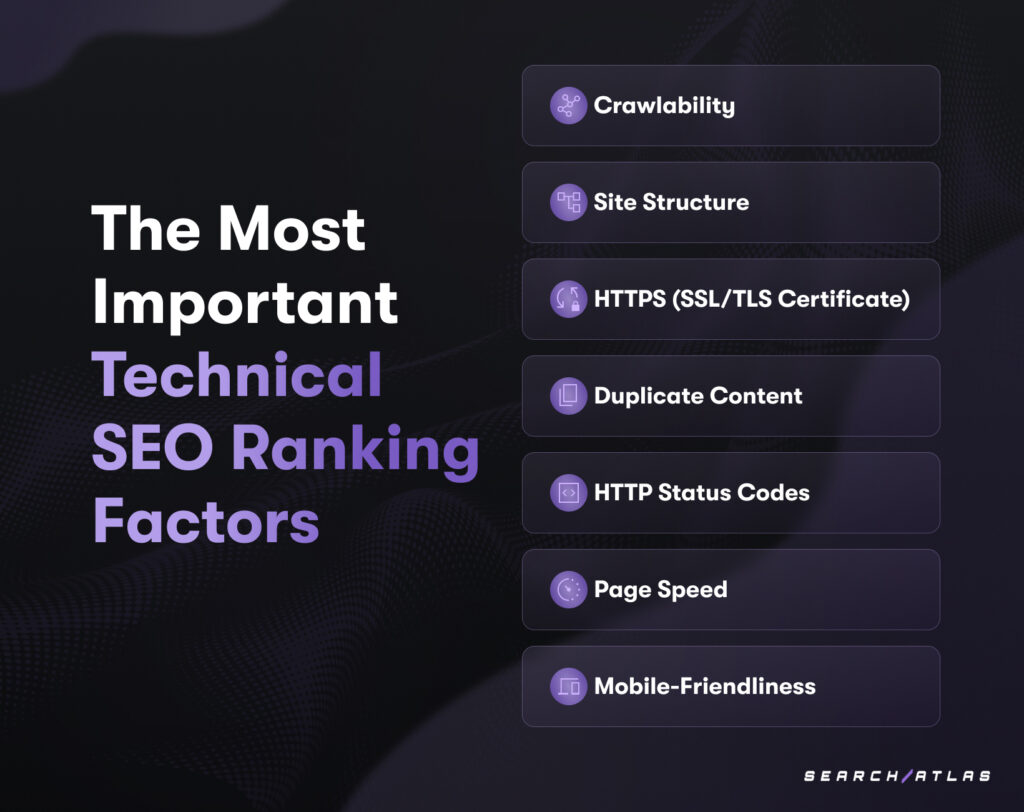

Technical SEO ranking factors are metrics and specifications that search engines use to evaluate a website’s technical performance, accessibility, and structure for processing, indexing, and ranking purposes. The most important technical SEO ranking factors are listed below.

- Crawlability. Determines how efficiently search engines discover and access web pages. Well-structured XML sitemaps, optimized robots.txt files, canonical tags, and internal linking help distribute crawl activity efficiently, preventing orphan pages and index bloat.

- Site Structure. Refers to how content is organized, linked, and categorized within a website. A well-optimized site structure improves indexation efficiency, helping search engines understand website topics and their context for better ranking.

- HTTPS (SSL/TLS Certificate). Uses SSL/TLS encryption to prevent data interception and protect data integrity between users and servers, and signals site credibility. Non-secure websites can lose ranking benefits and the trust of search engines and users, as browsers flag HTTP pages as “Not secure.”

- HTTP Status Codes. Directly affect how Googlebot processes and ranks web pages. Proper handling of 301 redirects, 404 errors, and 5xx server errors prevents crawl inefficiencies and ensures uninterrupted content retrieval and ranking stability.

- Page Speed. Affects user experience and search rankings. Google’s Core Web Vitals measure loading speed (Largest Contentful Paint, LCP), interactivity (Interaction to Next Paint, INP, which replaced First Input Delay in March 2024), and visual stability (Cumulative Layout Shift, CLS).

- Mobile-Friendliness. Ensures the website is fully responsive and optimized for all devices. Under mobile-first indexing, Google primarily uses the mobile version of a page for indexing and ranking, so adaptive layouts, touch-friendly navigation, and optimized mobile interactions matter for both search rankings and user retention.

Addressing these ranking factors ensures that search engines like Google can properly process and retrieve content without encountering barriers to visibility and performance.

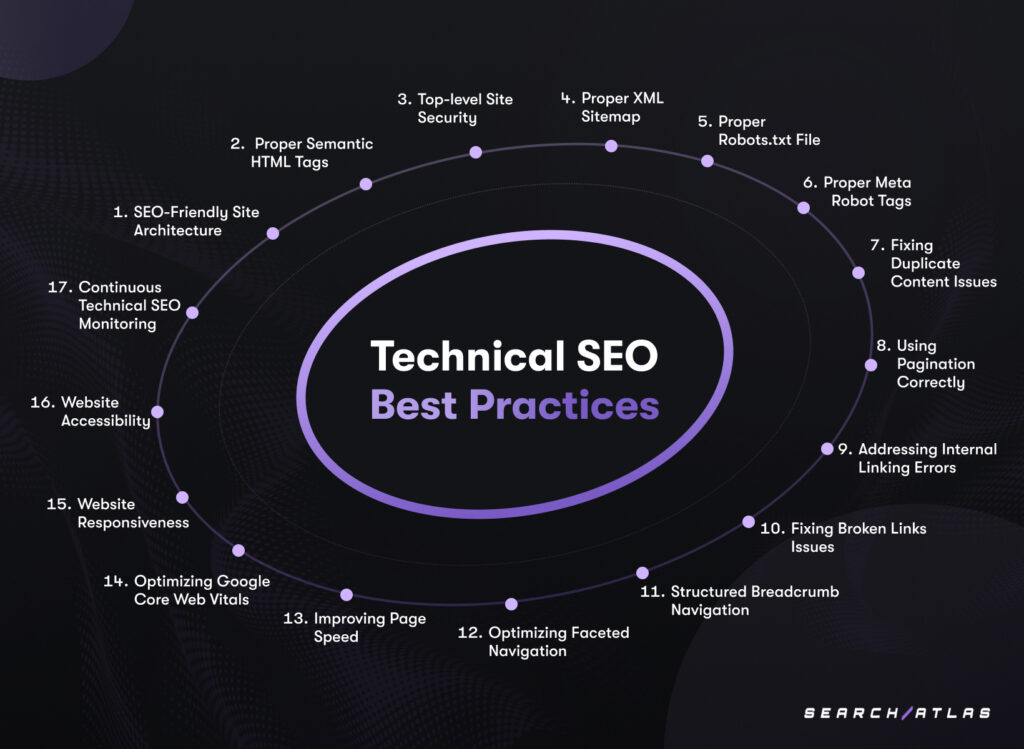

What Are the Technical SEO Best Practices?

There are 16 technical SEO best practices that improve website performance, user engagement, and long-term rankings. The technical SEO best practices are listed below.

1. Create an SEO-Friendly Site Architecture

Site architecture refers to the way a website’s pages are structured and connected. An SEO-friendly site architecture makes it easier for search engines to discover and rank content effectively while users navigate the website intuitively.

The main benefits of an optimized site structure are improved crawl efficiency, better distribution of ranking signals, and an enhanced user experience.

A strong site architecture follows a clear hierarchy, logical internal linking, consistent URL structures, and minimal crawl depth.

- Clear hierarchy. Homepage → Category Pages → Subcategories → Individual Pages.

- Logical internal linking. Enhances discoverability and indexation.

- Consistent URL structures. E.g., example.com/category-name/product-name.

- Minimal crawl depth. Ensures that key pages remain accessible within a few clicks.

To create an SEO-friendly site architecture, start by mapping out a hierarchical structure that follows a logical flow from the homepage to category pages and subpages. Your website should be structured to minimize click depth, ensuring all important pages are accessible within three clicks from the homepage.
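The three-click guideline can be checked programmatically once you have an internal link graph, for example one exported from a crawler. The sketch below is a minimal, hypothetical helper: it runs a breadth-first search from the homepage, so the first time a page is reached is also its shortest click path. The `links` dictionary format and the `click_depths`/`audit_depth` names are assumptions for illustration, not part of any SEO tool.

```python
from __future__ import annotations

from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: minimum clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first time BFS reaches a page = shortest click path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_depth(links: dict[str, list[str]], all_pages: list[str], max_depth: int = 3, home: str = "/"):
    """Return (pages deeper than max_depth, pages unreachable from the homepage)."""
    depths = click_depths(links, home)
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    unreachable = sorted(set(all_pages) - set(depths))  # likely orphan pages
    return too_deep, unreachable
```

Pass every known URL (for example, from your sitemap) as `all_pages`; anything that never appears in the search result has no internal path from the homepage.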

2. Use Proper Semantic HTML Tags

Semantic HTML refers to using HTML5 elements that clearly define the role and meaning of content on a webpage. Semantic HTML is a key practice for semantic SEO, improving content structure and helping search engines better understand your site. Semantic tags like <header>, <section>, <article>, and <nav> help search engines and assistive technologies understand page structure instead of relying on generic <div> and <span> elements.

A well-structured page should use semantic elements to define each section’s role.

- <header>. Contains the site title, logo, or navigation links.

- <nav>. Holds primary navigation menus.

- <main>. Wraps the core content of the page.

- <section>. Groups related content under a heading.

- <article>. Represents standalone pieces of content like blog posts.

- <aside>. Holds supplementary information such as sidebars or widgets.

- <footer>. Contains contact details, links, or copyright information.

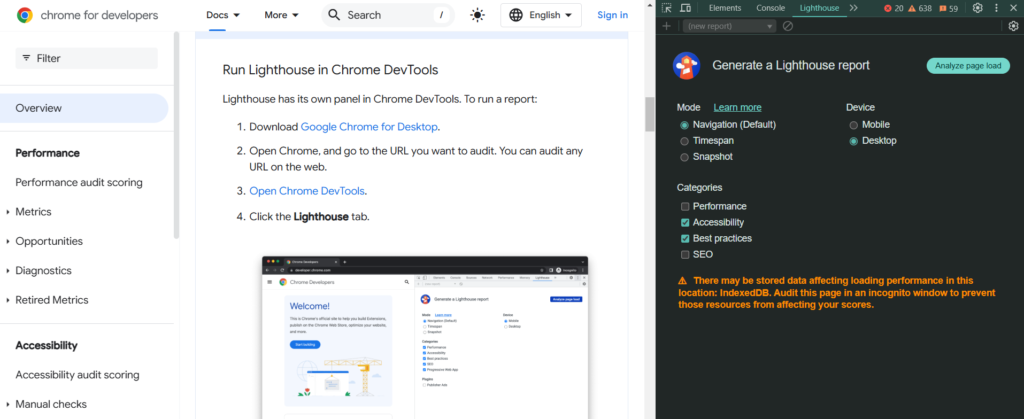

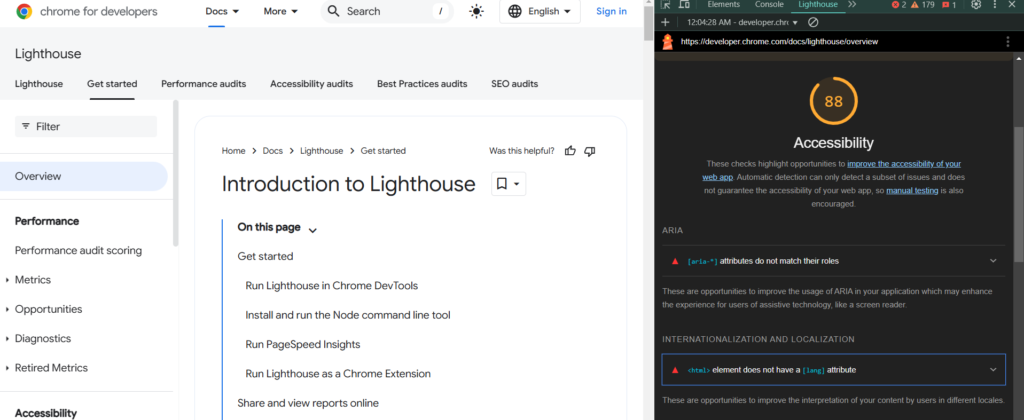

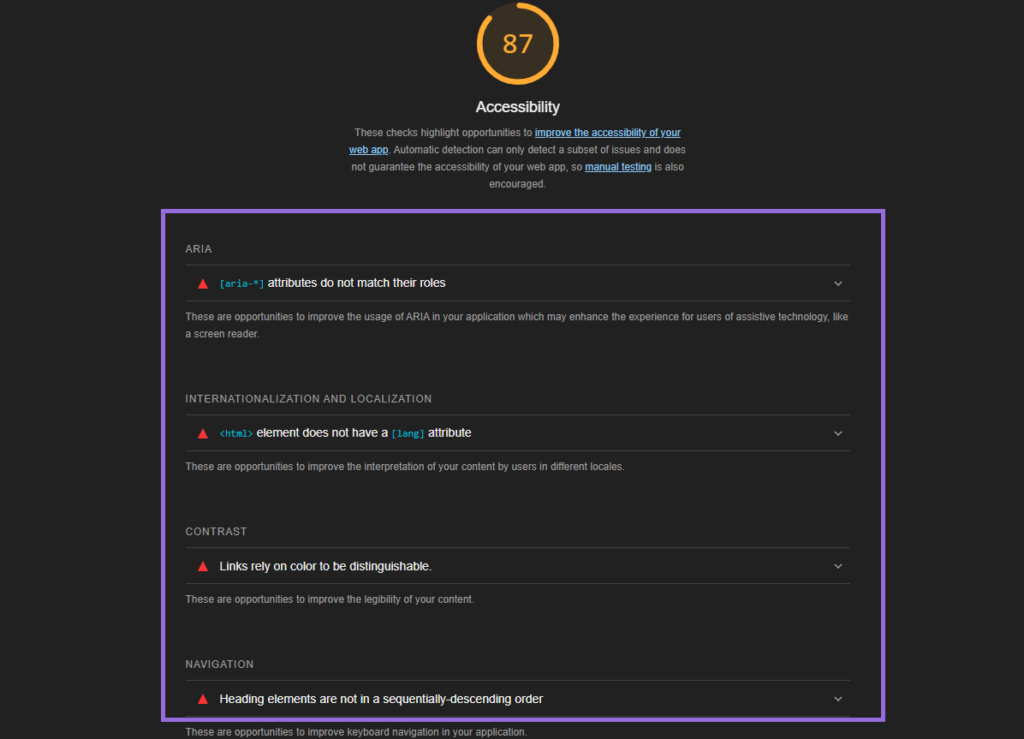

Run a Google Lighthouse audit (Chrome DevTools) under the “Best Practices” and “Accessibility” categories.

This highlights the incorrect use of HTML elements and missing landmark tags.
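Lighthouse audits one page at a time. To spot missing landmarks across many templates, a small script can scan raw HTML with Python's standard `html.parser`. This is a minimal sketch: the set of landmarks it requires (`header`, `nav`, `main`, `footer`) is an assumption you should adapt per template, and it reads the served HTML, not the JavaScript-rendered DOM that Lighthouse inspects.

```python
from html.parser import HTMLParser

# Landmarks this sketch expects on every page (an assumption; adjust per template).
REQUIRED_LANDMARKS = {"header", "nav", "main", "footer"}


class LandmarkCounter(HTMLParser):
    """Counts how many times each start tag appears."""

    def __init__(self):
        super().__init__()
        self.counts = {}

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so <MAIN> and <main> count the same.
        self.counts[tag] = self.counts.get(tag, 0) + 1


def landmark_report(html):
    parser = LandmarkCounter()
    parser.feed(html)
    parser.close()
    return {
        "missing": sorted(REQUIRED_LANDMARKS - set(parser.counts)),
        # Only one visible <main> element is allowed per page.
        "multiple_main": parser.counts.get("main", 0) > 1,
    }
```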

Once you’ve implemented semantic HTML, use Google’s URL Inspection Tool or Rich Results Test to view the rendered HTML that Googlebot sees; the Rich Results Test also validates any structured data on the page. A clear, semantic page structure can improve the chances of appearing in featured snippets, rich results, and voice search queries.

3. Use Top-Level Site Security

Top-level site security involves implementing measures to protect your website from cyber threats, data breaches, and unauthorized access. Site security involves using HTTPS encryption, setting secure HTTP headers, and regularly monitoring for vulnerabilities.

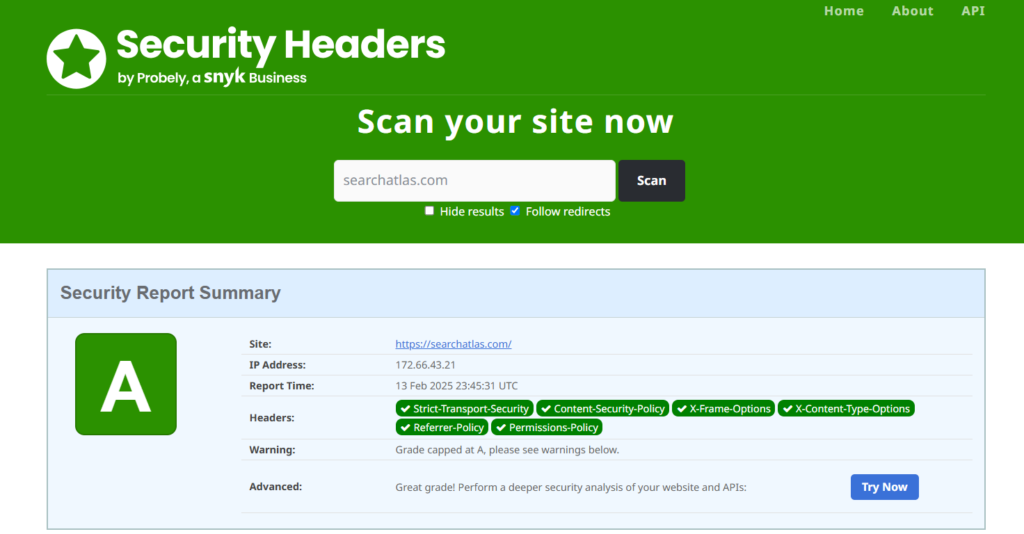

To secure your website, acquire an SSL/TLS certificate from a trusted Certificate Authority (CA) or use a free service like Let’s Encrypt. Install it on your web server to enable HTTPS.

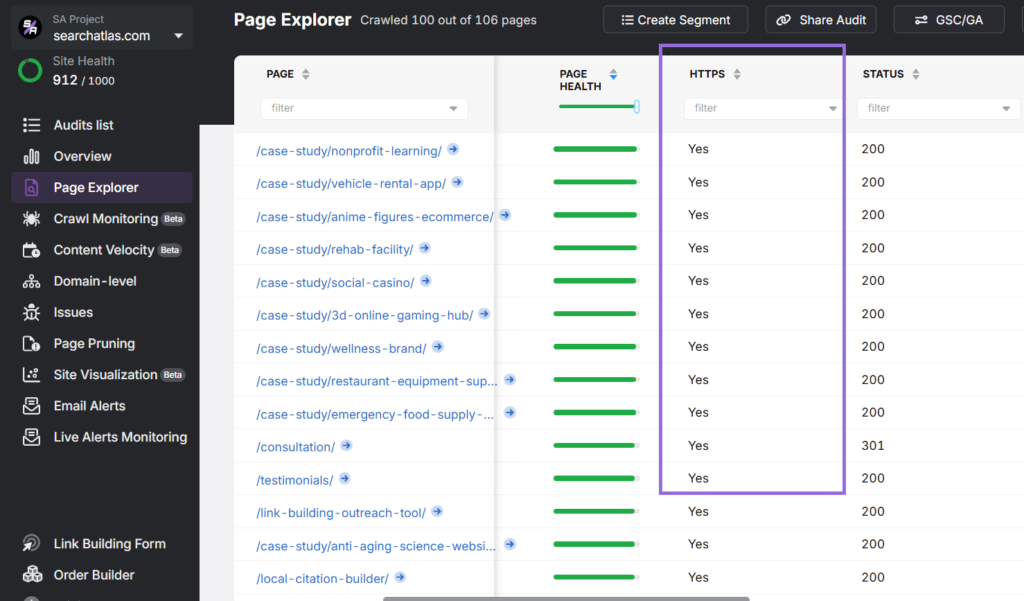

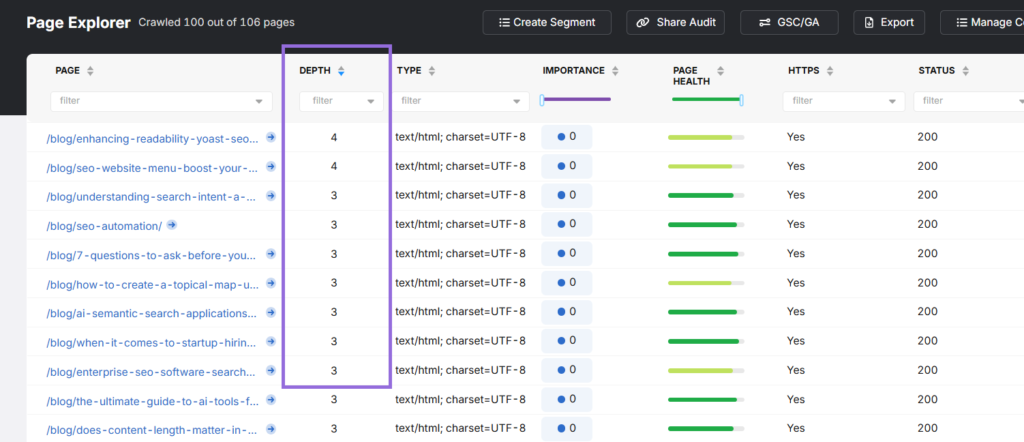

Use tools like the Search Atlas Site Audit Tool and navigate to the Page Explorer feature to confirm all pages are served over HTTPS and to identify any mixed content issues.

To configure secure HTTP headers, implement headers such as Content-Security-Policy, Strict-Transport-Security, and X-Content-Type-Options to protect against common vulnerabilities.

Utilize SecurityHeaders.com to scan your website and receive a report on your HTTP header configurations.
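For a quick check from your own scripts or CI pipeline, the headers named above can be compared against a live response with Python's standard library. This is a minimal sketch, not a replacement for SecurityHeaders.com: it only checks that the three headers are present and that `X-Content-Type-Options` is `nosniff`, and `header_problems`/`fetch_headers` are hypothetical helper names.

```python
from urllib.request import Request, urlopen

# Headers named in this section; X-Content-Type-Options should always be "nosniff".
RECOMMENDED_HEADERS = (
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
)


def header_problems(headers: dict) -> list:
    """Compare a response's headers (names are case-insensitive) against the list above."""
    lowered = {name.lower(): value for name, value in headers.items()}
    problems = [f"missing {name}" for name in RECOMMENDED_HEADERS if name.lower() not in lowered]
    xcto = lowered.get("x-content-type-options")
    if xcto is not None and xcto.strip().lower() != "nosniff":
        problems.append("X-Content-Type-Options should be 'nosniff'")
    return problems


def fetch_headers(url: str) -> dict:
    """Fetch response headers with a HEAD request (some servers only answer GET correctly)."""
    with urlopen(Request(url, method="HEAD"), timeout=10) as response:
        return dict(response.headers.items())
```

Usage: `print(header_problems(fetch_headers("https://example.com/")))` prints an empty list when all three headers are in place.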

To monitor for malware, employ services like Google Search Console’s Security Issues Report to identify and address any malware or security issues flagged by Google.

4. Submit Your XML Sitemap to Google Search

An XML (Extensible Markup Language) sitemap is a file that lists the canonical, indexable URLs on your website, helping search engines discover, crawl, and index your content promptly. A sitemap can also provide metadata such as last-modified dates; Google uses the lastmod value when it is accurate but ignores priority and changefreq values.

Tools like Yoast SEO can generate an XML sitemap automatically if using WordPress. For custom websites, create a sitemap using free generators like XML-Sitemaps.com.
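For custom sites without a CMS plugin, a sitemap can also be generated as part of the build. The sketch below uses Python's standard `xml.etree.ElementTree` and emits only `loc` and `lastmod`, since Google ignores `priority` and `changefreq`. The input format, a list of `(url, lastmod)` pairs, is an assumption for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute URL, last-modified date as 'YYYY-MM-DD' or None)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" as "&amp;"
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Write the result to the site root, for example `pathlib.Path("sitemap.xml").write_text(build_sitemap(pages), encoding="utf-8")`, so it is served at example.com/sitemap.xml.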

To verify sitemap coverage, follow the tips listed below.

- Open your sitemap in a browser by visiting example.com/sitemap.xml.

- Make sure it includes all essential pages while excluding unnecessary or duplicate URLs (e.g., login pages).

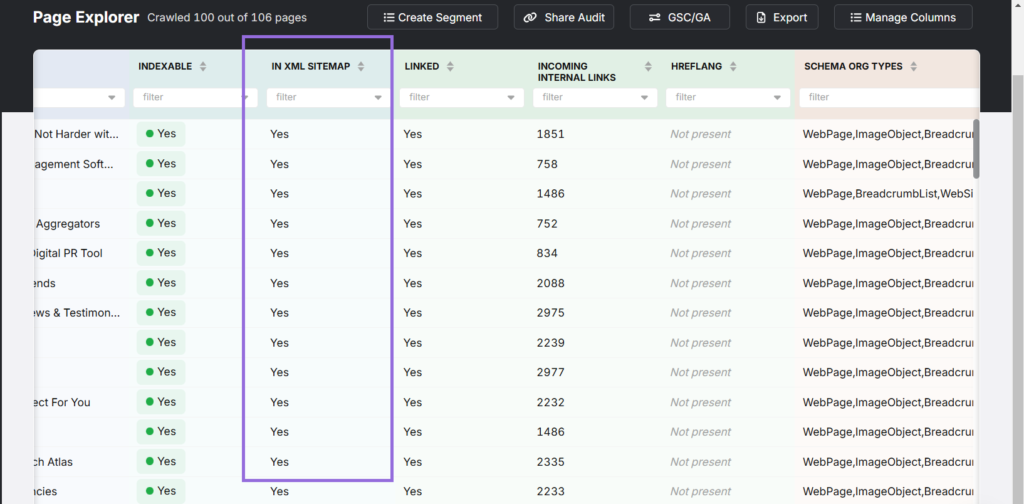

- Use the Search Atlas Page Explorer Tool to check whether all indexable pages are included in the sitemap. The “IN XML SITEMAP” column (as shown in the image) helps confirm which pages are properly listed.

To submit your sitemap to Google Search Console manually, follow the steps below.

- Go to Google Search Console → Indexing → Sitemaps.

- Enter your sitemap URL (e.g., https://example.com/sitemap.xml) and click Submit.

- Google will process the submission and display any errors encountered.

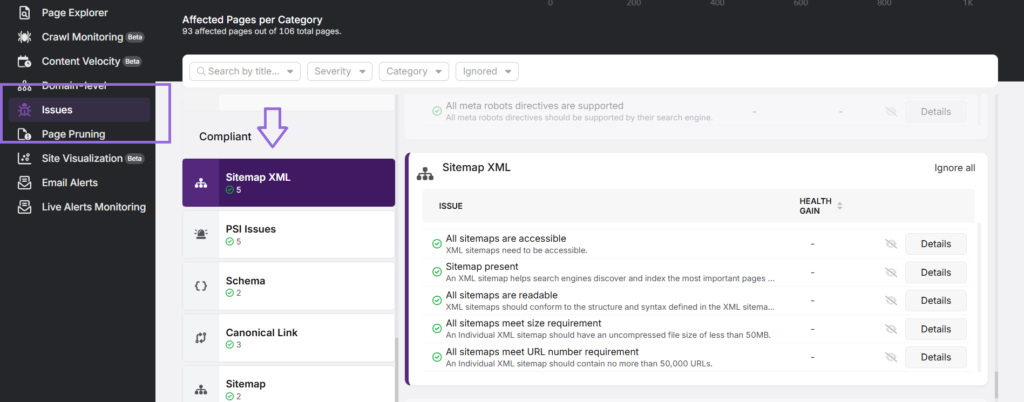

After submission, use the Search Atlas Site Audit Tool to check sitemap accessibility, readability, and compliance with Google guidelines.

Navigate to Issues, then the Sitemap XML section, to confirm the factors shown in the image.

Sitemaps generated by a CMS or SEO plugin typically update automatically when new pages are added.

For static sitemaps, periodically regenerate and resubmit them in Google Search Console to reflect website changes.

5. Ensure Proper Robots.txt File Guidelines

The robots.txt file is a simple text file located in the root directory of a website that provides crawling instructions to search engine bots. The robots.txt file tells search engines which pages or directories they are allowed or disallowed from crawling.

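A properly configured robots.txt file prevents search engines from wasting crawl budget on unimportant pages, keeps crawlers out of areas such as admin paths, and guides search engines toward relevant content. Note that robots.txt is not an access control: blocked URLs remain publicly reachable and can still be indexed if other pages link to them.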

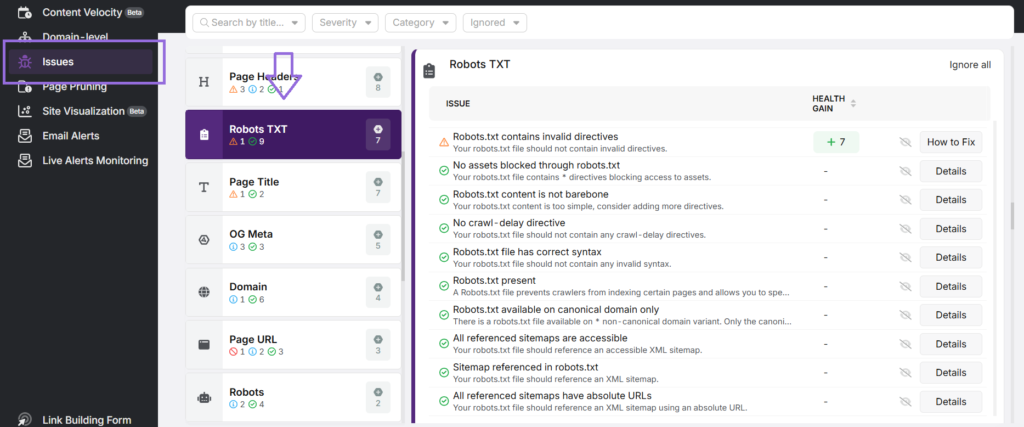

To locate and review your robots.txt file, follow the steps listed below.

- Open your browser and visit the robots.txt file at https://example.com/robots.txt.

- Check if important directives are correctly implemented and ensure no critical pages are blocked accidentally.

- Use the Search Atlas Site Audit Tool and navigate to the Issues section to analyze your robots.txt file and detect potential crawlability issues.
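To confirm that no critical pages are blocked by accident, a list of must-crawl URLs can be tested against your robots.txt with Python's standard `urllib.robotparser`. Treat this as a quick sanity check only: the standard-library parser follows the original robots exclusion rules (plain prefix matching, first matching rule wins, no `*` or `$` wildcards), while Google uses longest-match precedence and supports wildcards, so confirm edge cases in Google Search Console's robots.txt report. `blocked_urls` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs that the given robots.txt content disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

In practice, feed it the text of https://example.com/robots.txt and the URLs from your sitemap; any sitemap URL in the result is a conflict worth fixing.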

6. Ensure Proper Meta Robot Tags Implementation

Meta robots tags are HTML directives that control how search engines crawl and index individual web pages. As part of technical SEO basics, appropriate meta robots tags tell search engines whether a page should be indexed, whether its links should be followed, or whether it should be excluded from search results.

To identify and review current meta robots tags, open the HTML source code of a page and look for a tag such as <meta name="robots" content="index, follow">.

In Google Search Console, go to the URL Inspection Tool to check if Google is indexing the page as expected.

To apply the correct meta robots directives, you should choose one of the options below.

- Allow full indexing and crawling.

<meta name="robots" content="index, follow">

(Best for most public pages like blog posts, service pages, and landing pages.)

- Block indexing but allow link crawling.

<meta name="robots" content="noindex, follow">

(Useful for internal search result pages or outdated content you don’t want indexed but still want to pass link equity.)

- Prevent both indexing and link crawling.

<meta name="robots" content="noindex, nofollow">

(Best for private pages like login portals, thank-you pages, and test environments.)

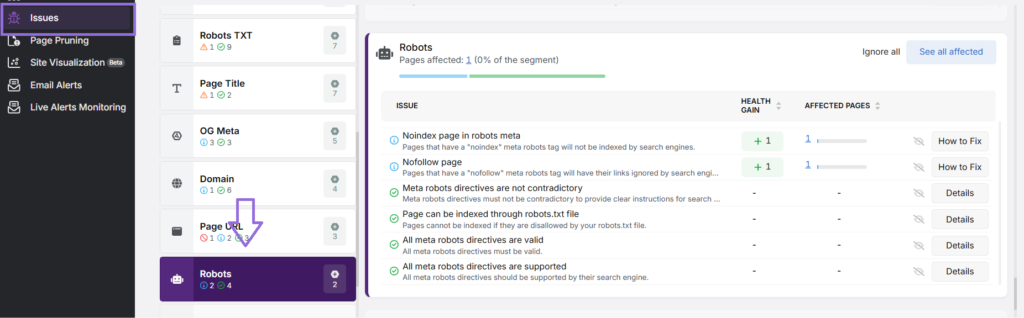

To detect and fix meta robots issues, open the Search Atlas Site Audit Tool, navigate to Issues, and go to the Robots section.

This will highlight pages mistakenly set to noindex that should be indexed, nofollow directives on pages that should pass link equity, and conflicting or invalid meta robots directives. Make sure canonical tags and meta robots tags do not conflict (e.g., avoid using noindex on canonical pages).
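The conflicts described above can also be caught in a pre-release check. This minimal sketch parses a page's HTML with Python's standard `html.parser`, merges `robots` and `googlebot` meta directives into one set, and flags contradictory pairs plus `noindex` on a page whose canonical points to itself. The helper names and the exact rules are assumptions for illustration, not how any audit tool works internally.

```python
from html.parser import HTMLParser


class HeadDirectives(HTMLParser):
    """Collects meta robots directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            # Directives are comma-separated, e.g. content="noindex, follow".
            self.robots.update(d.strip().lower() for d in a.get("content", "").split(",") if d.strip())
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")


def robots_conflicts(html, page_url):
    """Flag the conflicting combinations described in this section."""
    parser = HeadDirectives()
    parser.feed(html)
    parser.close()
    issues = []
    if {"index", "noindex"} <= parser.robots:
        issues.append("contradictory index/noindex")
    if {"follow", "nofollow"} <= parser.robots:
        issues.append("contradictory follow/nofollow")
    if "noindex" in parser.robots and parser.canonical == page_url:
        issues.append("noindex on a self-canonical page")
    return issues
```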

Regularly review Google Search Console’s Page Indexing Report to confirm your pages appear correctly in search results.

7. Use Pagination Correctly

Pagination is the practice of splitting large sets of content across multiple pages, commonly used for category pages, blog archives, and product listings. Proper pagination guarantees that all paginated pages are discoverable and indexed properly, preventing ranking dilution and improving the user experience for multi-page content.

To use pagination correctly, implement self-canonicalization on paginated pages. Each paginated page (page=2, page=3) should have a self-referencing canonical tag to prevent duplication.

<link rel="canonical" href="https://example.com/category/page-2/">

Google recommends that websites allow search engines to index paginated pages rather than blocking them with noindex. Check Google Search Console’s Page Indexing report to confirm that paginated pages are not mistakenly excluded.
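When templates generate paginated pages, the self-referencing canonical can be computed from the page number so that page 2 never canonicalizes to page 1 (Google advises giving each page in a sequence its own canonical URL). This sketch assumes a hypothetical URL pattern matching the example above: page 1 at the category root and page N at `page-N/`.

```python
def paginated_canonical(base_url: str, page: int) -> str:
    """Self-referencing canonical tag for one page of a paginated series.

    Hypothetical URL pattern: page 1 lives at base_url, page N at base_url + "page-N/".
    """
    if page < 1:
        raise ValueError("page numbers start at 1")
    url = base_url if page == 1 else f"{base_url}page-{page}/"
    return f'<link rel="canonical" href="{url}">'
```

For example, `paginated_canonical("https://example.com/category/", 2)` produces the same tag shown above for page 2.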

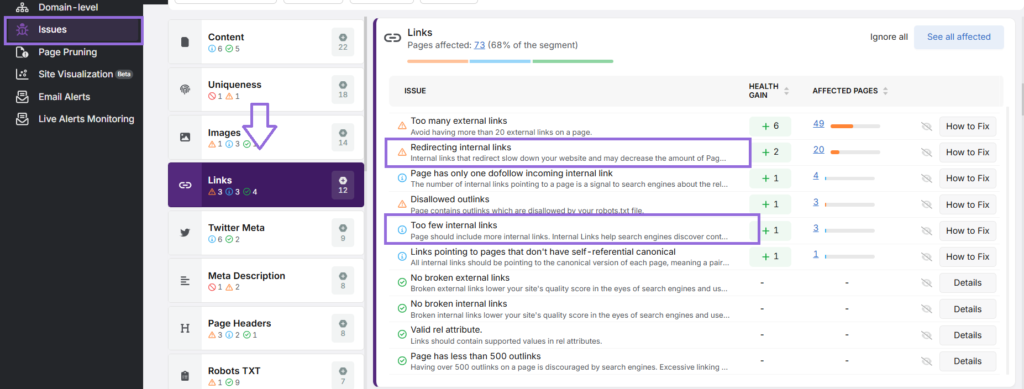

8. Address Internal Linking Errors

Internal linking errors occur when links within your website lead to incorrect, redirected, or orphaned pages, disrupting navigation and SEO. Internal linking errors can prevent search engines from effectively crawling your site and result in a poor user experience.

To address internal linking errors, first identify issues using the Search Atlas Site Audit Tool. The tool scans for broken links (pointing to deleted or non-existent pages), redirect chains (links that pass through multiple redirects before reaching their destination), and orphan pages (important pages with no internal links).

Once identified, fix internal linking errors by updating outdated or broken links to point to the correct URLs and eliminating redirect chains by linking directly to the final destination page.

Use the Search Atlas Page Explorer Tool to find orphaned pages (pages without internal links), then connect them by linking from relevant pages. Reduce deeply buried pages (those requiring more than three clicks to reach) by placing links to them on high-authority pages like the homepage or main categories.
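Eliminating redirect chains means replacing each internal link with the final URL at the end of its chain. The sketch below assumes you have exported your redirect rules as a simple `source -> target` mapping (a hypothetical format); it follows each chain, reports loops, and returns the link replacements to make.

```python
def final_destination(url, redirects, max_hops=10):
    """Follow a redirect map (source -> target); return (final URL, number of hops)."""
    chain = [url]
    while url in redirects:
        url = redirects[url]
        if url in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [url]))
        chain.append(url)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects starting at {chain[0]}")
    return url, len(chain) - 1


def link_fixes(hrefs, redirects):
    """Map each internal href that passes through redirects to its final destination."""
    fixes = {}
    for href in hrefs:
        target, hops = final_destination(href, redirects)
        if hops:  # zero hops means the link already points at the final page
            fixes[href] = target
    return fixes
```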

9. Find and Fix Broken Link Issues

Broken links occur when a web page links to a URL that no longer exists, resulting in a 404 error. These can be internal (within your site) or external (pointing to another domain).

To find broken links, use the Search Atlas Site Audit Tool to detect the elements below.

- Internal broken links leading to missing or deleted pages.

- External broken links pointing to dead third-party pages.

- HTTP response errors (e.g., 404 client errors or 500 server errors).

To fix broken links, apply the technical SEO steps listed below.

- For internal links → Update them to point to an existing page or apply a 301 redirect if the page was permanently moved.

- For external links → Find and link to an alternative resource, or remove the broken link if no suitable replacement exists.

- For deleted pages with backlinks → Implement a 301 redirect to a relevant page to preserve SEO value.

Regularly audit your site with the Search Atlas Site Audit Tool and track 404 errors in Google Search Console’s Crawl Stats Report to maintain a seamless user experience and strong link structure.
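Between full audits, a list of link targets can be spot-checked with Python's standard library. This minimal sketch sends `HEAD` requests (which `urlopen` follows through redirects) and groups URLs by the fix they need; the status lookup is injectable so it can run against stored crawl data. Some servers reject `HEAD`, so a production version might retry with `GET`; `fetch_status` and `classify_links` are hypothetical names.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10):
    """HTTP status after following redirects, or None if the host cannot be reached."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def classify_links(urls, get_status=fetch_status):
    """Group link targets by the action they need."""
    report = {"ok": [], "broken": [], "server_error": [], "unreachable": []}
    for url in urls:
        status = get_status(url)
        if status is None:
            report["unreachable"].append(url)
        elif status >= 500:
            report["server_error"].append(url)
        elif status >= 400:
            report["broken"].append(url)
        else:
            report["ok"].append(url)
    return report
```

URLs under `broken` map to the fixes listed above (update, redirect, or remove); `server_error` entries are worth rechecking before changing any links.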

10. Use Structured Breadcrumb Navigation

Breadcrumb navigation is a hierarchical navigation system that helps users and search engines understand the structure of your website. Structured breadcrumb navigation displays a clear path of where a page is located within the site, improving navigation and crawlability.

To implement structured breadcrumb navigation, make sure that each page follows a logical site hierarchy. Breadcrumbs should follow the site structure (e.g., Home > Category > Subcategory > Page), use anchor links to make navigation smooth, and be consistent across the site to avoid confusion.

Google Search Console’s Enhancements → Breadcrumbs Report can highlight issues with breadcrumb structured data.
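Breadcrumb structured data is usually expressed as a Schema.org `BreadcrumbList` in JSON-LD, with one `ListItem` per level of the trail. This sketch builds that markup from a list of `(name, url)` pairs, which is a hypothetical input format; it also escapes `</` so a page title cannot close the script tag early.

```python
import json


def breadcrumb_jsonld(trail):
    """trail: list of (name, absolute URL) from the homepage down to the current page."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    # "\/" is a valid JSON escape, so this keeps the JSON intact inside <script>.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"
```

For a page at Home > Category > Subcategory > Page, pass four pairs in that order; positions are numbered from 1 automatically.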

11. Optimize Faceted Navigation

Faceted navigation allows users to filter content by categories, attributes, or tags, commonly used in e-commerce and large content sites. While faceted search is helpful for users, improper faceted navigation can create crawl inefficiencies and indexing issues for search engines.

To optimize faceted navigation, confirm that it does not generate unnecessary, low-value URL variations that dilute ranking signals. Use the Search Atlas Site Audit Tool to identify excessive or duplicate indexed pages caused by faceted filters.

Control indexing with the steps listed below.

- Use canonical tags to consolidate similar filtered URLs.

<link rel="canonical" href="https://example.com/category/">

- Disallow low-value faceted pages in robots.txt to prevent Google from crawling unnecessary filter combinations.

User-agent: *
Disallow: /category?color=red&size=large

- Use noindex for specific filtered pages that should not appear in search results.

<meta name="robots" content="noindex, follow">
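To consolidate filtered URLs, templates need a rule for mapping any filtered view back to its canonical URL. This minimal sketch strips known facet parameters with Python's `urllib.parse` and keeps everything else, such as pagination. The parameter names in `FACET_PARAMS` are hypothetical; replace them with the filters your site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter parameters; list the facet names your own site uses.
FACET_PARAMS = {"color", "size", "sort", "price"}


def canonical_for(url: str, facet_params=FACET_PARAMS) -> str:
    """Drop facet parameters (and any fragment) so filtered views share one canonical URL."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in facet_params]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
```

The returned URL goes into the page's `<link rel="canonical">` tag, so every color and size combination of a category points to the same unfiltered page.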

12. Improve Page Speed

Page speed is a core web performance metric that impacts user engagement, search rankings, and conversion rates. Faster-loading pages reduce bounce rates, improve dwell time, and enhance the likelihood of ranking in Google’s Featured Snippets.

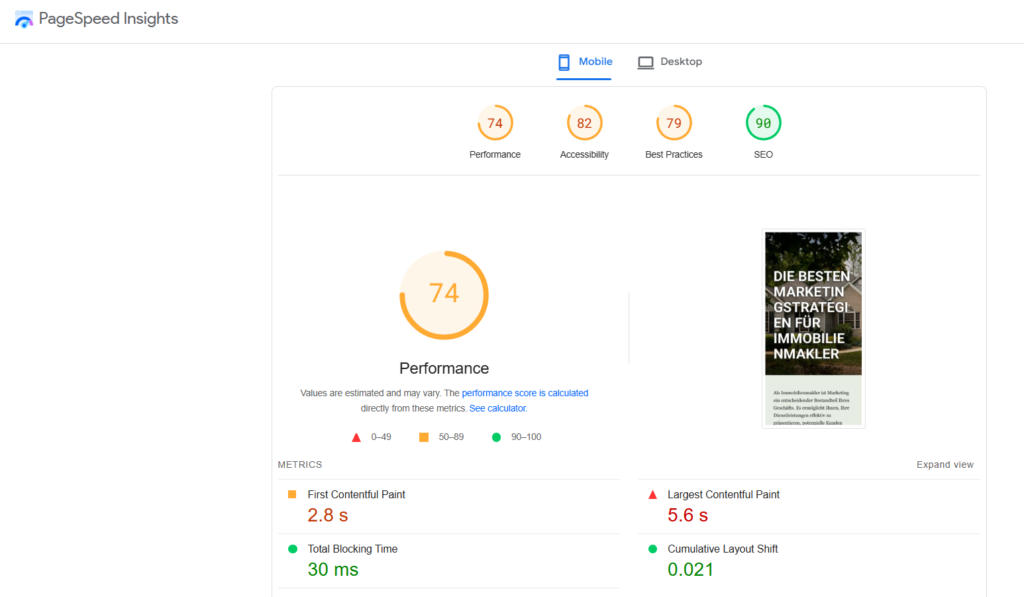

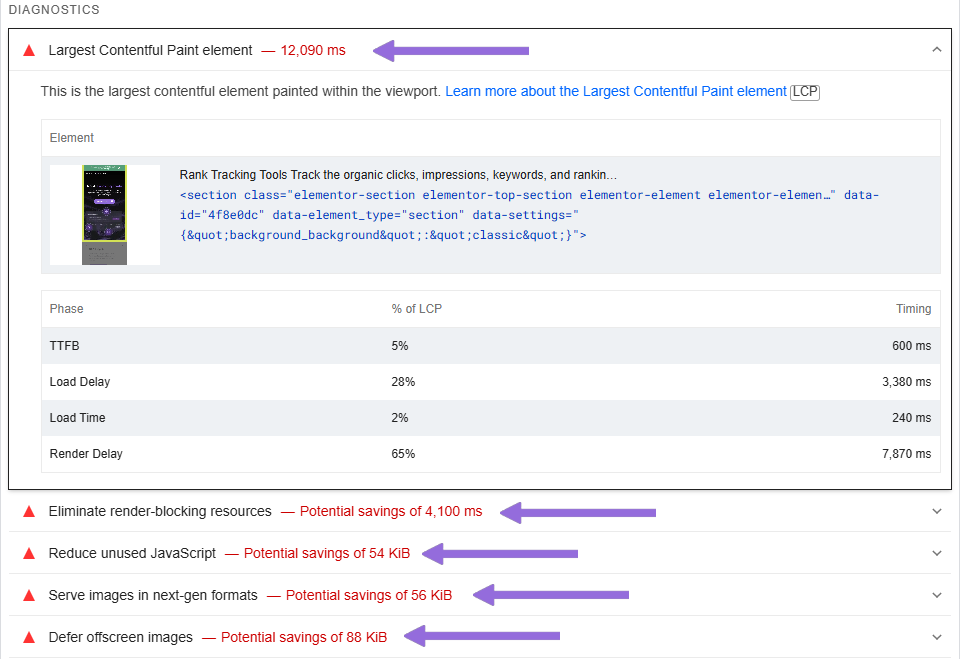

To optimize page speed, analyze your site using Google PageSpeed Insights to identify performance bottlenecks. Common issues include unoptimized images, render-blocking JavaScript, and excessive server requests.

PageSpeed Insights highlights slow-loading elements and suggests optimizations.

Implement the page speed improvements listed below.

- Enable browser caching to store static files locally and reduce repeated load times.

Cache-Control: max-age=31536000

- Minify HTML, CSS, and JavaScript to eliminate unnecessary code.

- Use a Content Delivery Network (CDN) to serve static assets from geographically distributed servers.

- Improve server response times (for example, by upgrading hosting) and reduce the number of HTTP requests each page makes.
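A one-year `Cache-Control` lifetime is only safe for files whose URL changes whenever their content changes. This sketch assumes static assets are fingerprinted (for example, `app.3f9a1c.css`) and gives them a long, `immutable` lifetime, while HTML gets `no-cache` so browsers revalidate it on each visit. It uses Python's built-in development server purely for illustration; in production this policy normally lives in your web server or CDN configuration.

```python
from http.server import SimpleHTTPRequestHandler
from pathlib import PurePosixPath

# Assumption: these file types are fingerprinted (e.g. app.3f9a1c.css), so a new
# deploy produces a new URL and a one-year cache can never serve stale content.
LONG_LIVED_SUFFIXES = {".css", ".js", ".woff2", ".webp", ".avif", ".png", ".jpg", ".svg"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control value from the request path."""
    suffix = PurePosixPath(path.split("?", 1)[0]).suffix.lower()
    if suffix in LONG_LIVED_SUFFIXES:
        return "public, max-age=31536000, immutable"
    return "no-cache"  # HTML and everything else: revalidate on each visit


class CachingHandler(SimpleHTTPRequestHandler):
    """Static file handler that adds a Cache-Control header to every response."""

    def end_headers(self):
        self.send_header("Cache-Control", cache_control_for(self.path))
        super().end_headers()
```

To try it locally, run `http.server.ThreadingHTTPServer(("127.0.0.1", 8000), CachingHandler).serve_forever()` from the site's build directory and inspect the response headers in Chrome DevTools.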

13. Optimize Google Core Web Vitals

Google Core Web Vitals are key performance metrics that measure real-world user experience based on loading speed, interactivity, and visual stability. Google Core Web Vitals directly influence search rankings, particularly for mobile-first indexing and Page Experience signals.

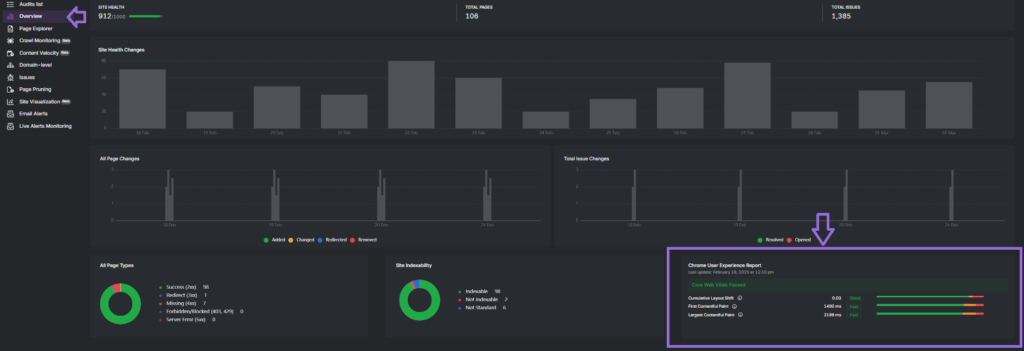

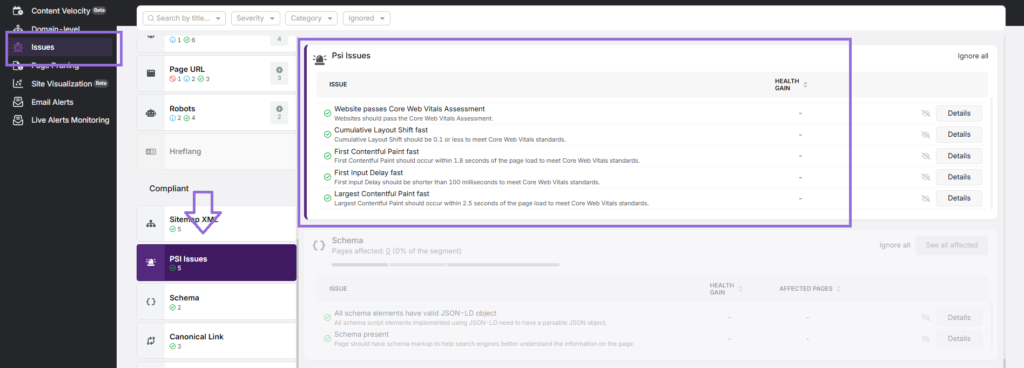

To optimize Core Web Vitals, analyze your website using the Search Atlas Site Audit Tool, navigating to the Overview Section to identify bottlenecks affecting performance.

The three key metrics to improve are Cumulative Layout Shift (CLS), Interaction to Next Paint (INP), and Largest Contentful Paint (LCP). INP replaced First Input Delay (FID) as a Core Web Vital in March 2024. The tips for improving Core Web Vitals metrics are below.

- Cumulative Layout Shift. Measures visual stability during page load.

- Set explicit width and height attributes for images and ads to prevent layout shifts.

- Use CSS to reserve space for dynamic content.

- Interaction to Next Paint (formerly First Input Delay). Measures responsiveness and interactivity.

- Reduce JavaScript execution time and defer non-essential scripts.

<script defer src="script.js"></script>

- Largest Contentful Paint. Measures the loading speed of the largest visible element.

- Optimize hero images and background media.

- Enable lazy loading for offscreen images, but do not lazy-load the LCP image itself, because that delays it.

<img src="offscreen-image.webp" loading="lazy" alt="Offscreen image">

- Use a CDN to speed up content delivery.

After making improvements, monitor changes in the Search Atlas Site Audit Tool to confirm compliance with Google’s Page Experience signals. Any issues that arise will be visible in the Issues Section of the audit, namely PSI Issues (PageSpeed Insights issues).

Refining Core Web Vitals enhances site usability, increases rankings, and improves conversion rates.
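Google rates each Core Web Vital against fixed thresholds applied to the 75th percentile of real page loads: LCP is good at or under 2.5 seconds and poor above 4 seconds, INP (which replaced FID in March 2024) is good at or under 200 ms and poor above 500 ms, and CLS is good at or under 0.1 and poor above 0.25. The sketch below encodes those thresholds so field data exported from your monitoring can be rated automatically; the function names and input format are assumptions for illustration.

```python
# Google's published thresholds: (good upper bound, needs-improvement upper bound).
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, p75_value):
    """Rate one metric's 75th-percentile value."""
    good, poor = THRESHOLDS[metric]
    if p75_value <= good:
        return "good"
    if p75_value <= poor:
        return "needs improvement"
    return "poor"


def assess(p75):
    """Rate every metric; the page passes only if all three are present and good."""
    ratings = {metric: rate(metric, value) for metric, value in p75.items()}
    passed = set(ratings) == set(THRESHOLDS) and all(r == "good" for r in ratings.values())
    return ratings, passed
```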

14. Ensure Your Website Is Responsive on All Devices

A responsive website automatically adapts to different screen sizes, ensuring a seamless experience on desktops, tablets, and mobile devices. Google uses mobile-first indexing, meaning a poorly optimized mobile site can negatively impact rankings.

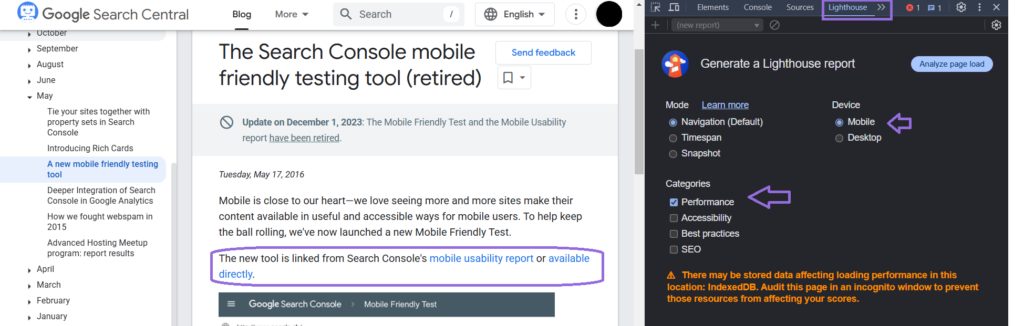

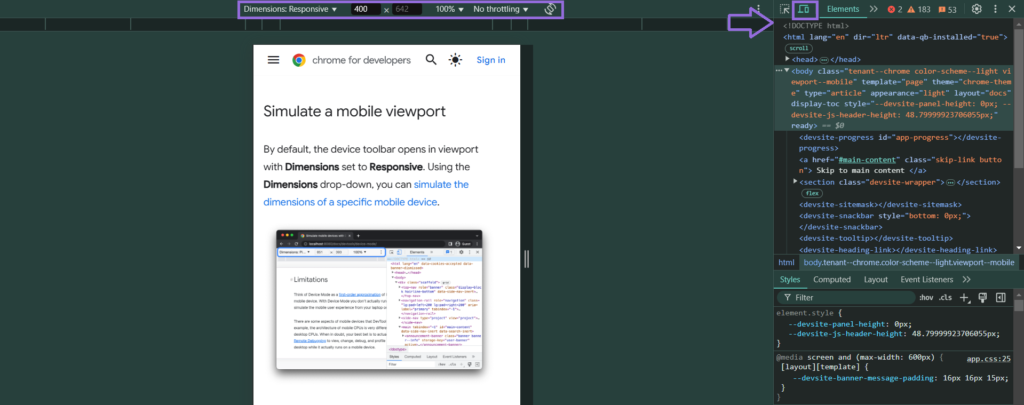

To verify responsiveness, test your site using Google Lighthouse (via Chrome DevTools) for performance, layout, and touch target analysis.

Chrome Developer Tools’ Device Mode allows you to preview your site on multiple screen sizes.

To improve responsiveness, use the technical SEO expert tips listed below.

- Implement flexible grid layouts instead of fixed-width elements.

- Optimize images with srcset to serve appropriate sizes based on the user’s device.

<img src="image-small.jpg" srcset="image-large.jpg 1024w, image-medium.jpg 768w" alt="Responsive image">

- Make sure the viewport is correctly set for mobile scaling.

<meta name="viewport" content="width=device-width, initial-scale=1">

15. Ensure Your Website Is Accessible to Everyone

Website accessibility ensures that all users, including those with disabilities and different language preferences, can navigate and interact with your site. Accessibility supports the usability that Google’s Page Experience guidance emphasizes, and compliance with the Web Content Accessibility Guidelines (WCAG) is critical for usability.

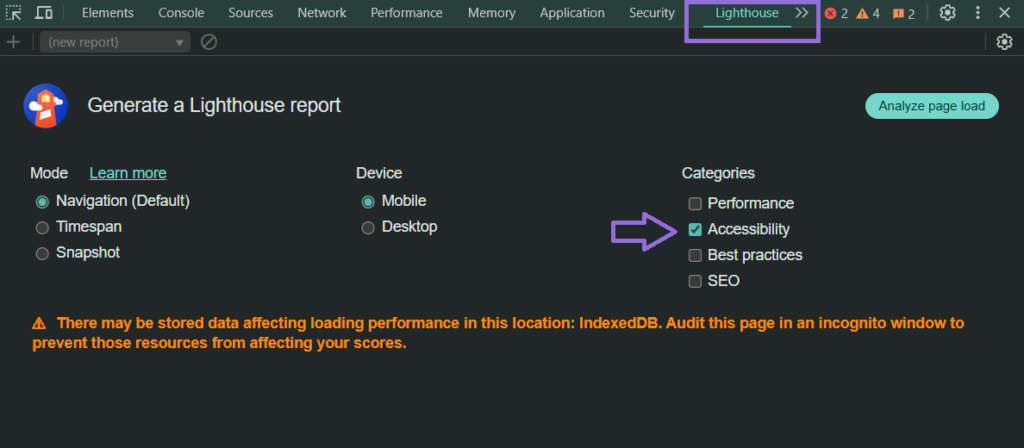

To improve accessibility, use Google Lighthouse to generate a report.

A Lighthouse report will detect issues with the accessibility factors listed below.

- ARIA attributes to enhance screen reader interactions.

<button aria-label="Submit Form">Submit</button>

- Missing language attributes to signal the default language.

- Color contrast ratios to improve readability for visually impaired users.

- Keyboard navigation to validate that all functions can be accessed without a mouse.

- Alt text for images to assist screen readers.

<img src="example.jpg" alt="Detailed description of image">
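Two of the Lighthouse checks above, the page language attribute and image alt text, are easy to run across a whole site with Python's standard `html.parser`. This is a minimal sketch, not a WCAG audit: it treats `alt=""` as valid (the correct markup for decorative images) and flags only images with no `alt` attribute at all. `a11y_issues` is a hypothetical helper name.

```python
from html.parser import HTMLParser


class A11yScanner(HTMLParser):
    """Records the <html lang> value and images that have no alt attribute."""

    def __init__(self):
        super().__init__()
        self.lang = None
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "html":
            self.lang = (a.get("lang") or "").strip() or None
        elif tag == "img" and "alt" not in a:
            # alt="" is valid for decorative images, so only a missing attribute is flagged.
            self.missing_alt.append(a.get("src") or "(no src)")


def a11y_issues(html):
    scanner = A11yScanner()
    scanner.feed(html)
    scanner.close()
    issues = []
    if scanner.lang is None:
        issues.append("<html> has no lang attribute")
    issues += [f"img without alt: {src}" for src in scanner.missing_alt]
    return issues
```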

16. Monitor Technical SEO Continuously

Technical SEO requires ongoing monitoring to guarantee that search engines can crawl, index, and rank your content effectively. As websites evolve, new errors such as broken links and slow-loading pages can appear, affecting rankings.

To maintain optimal performance, make sure you practice the tips below.

- Run the Search Atlas Site Audit Tool regularly to detect new issues related to crawlability, indexation, and Core Web Vitals.

- Use the Search Atlas Crawl Monitoring Tool for continuous bot tracking, as it provides real-time data on which pages Googlebot is crawling, how often, and whether important pages are being ignored.

- Monitor Google Search Console’s Page Indexing report (formerly the Coverage report) for indexing errors.

- Set up real-time alerts for critical errors, such as downtime or missing pages.

By following these best technical SEO practices, structuring your site properly, fixing broken links, optimizing speed, and improving accessibility, you create a site that search engines trust and users enjoy, setting yourself up for sustainable growth.

What Are the Advanced Technical SEO Techniques?

Advanced technical SEO techniques go beyond basic optimizations, ensuring that large websites are efficiently crawled, indexed, and ranked while maintaining fast performance and structured content. The advanced technical SEO techniques are listed below.

1. Optimize Crawl Budget for Your Website

Crawl budget optimization ensures that search engines focus on crawling and indexing high-value pages instead of wasting resources on duplicate, thin, or outdated content.

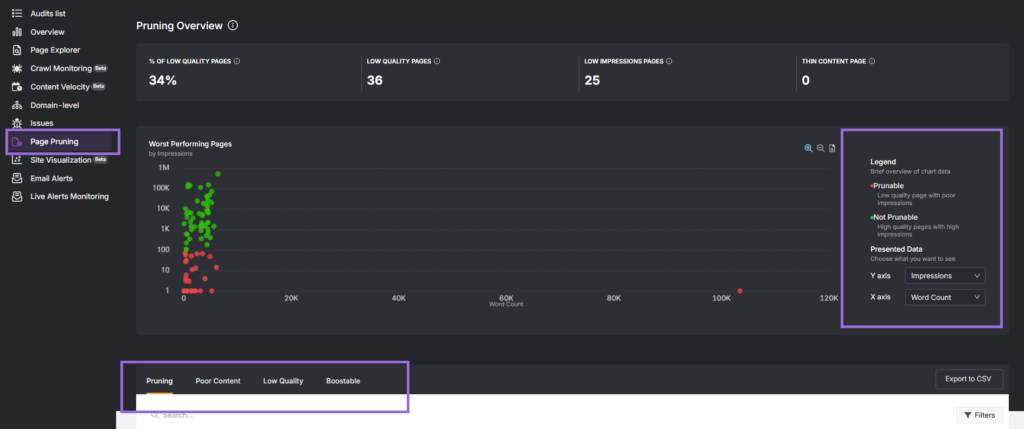

Remove low-quality or outdated content using the Search Atlas Page Content Pruning Tool.

The Search Atlas Page Pruning Tool helps you identify thin or underperforming pages that should be deindexed, consolidated, or redirected. It also highlights boostable pages, meaning content that can be improved to retain SEO value instead of being removed.

After pruning, apply additional optimizations for crawl efficiency listed below.

- Implement noindex for non-essential pages such as internal search results and outdated promotional pages.

- Use 301 redirects to consolidate ranking signals from outdated pages to their most relevant counterparts.

- Optimize JavaScript-dependent rendering by deferring non-critical scripts and ensuring key content is visible in the initial HTML.

- Reduce excessive parameterized URLs by managing faceted navigation and blocking unimportant query strings in robots.txt.

2. Conduct Advanced Site Performance Checks

Advanced site performance checks analyze server-side and client-side processes beyond basic page speed optimizations. They help ensure that critical assets load efficiently by improving server response times, JavaScript execution, and caching strategies.

To achieve the best results for site performance, follow the steps listed below.

- Optimize JavaScript assets for page speed.

- Reduce render-blocking JavaScript by deferring or asynchronously loading non-essential scripts.

<script async src="script.js"></script>

- Minify and bundle JavaScript files to reduce HTTP requests.

- Use code-splitting to load only necessary scripts on each page.

- Manage resource order and the number and size of requests.

- Prioritize above-the-fold content for LCP.

- Optimize the critical rendering path by reducing the number of external requests.

- Compress and cache resources to minimize redundant network requests.

- Leverage Edge SEO for faster content delivery.

- Use a Content Delivery Network (CDN) to distribute assets closer to users, reducing latency.

- Implement serverless functions at the edge to dynamically rewrite requests for faster page loads.

- Optimize preloading and prefetching strategies to improve perceived speed.

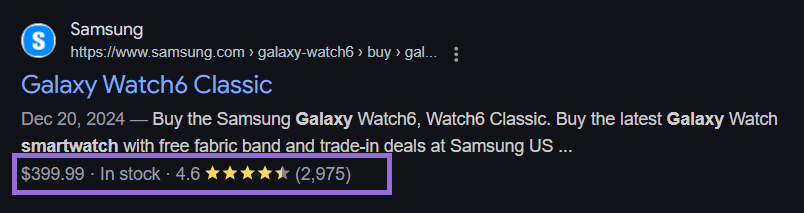

3. Implement Structured Data

Structured data, known as schema markup, is a type of code that helps search engines understand your content better. By adding structured data, you can enhance how your pages appear in search results with rich snippets, which display additional information like ratings, product details, or event dates.

To implement structured data, first choose the right schema type. Google supports different types of structured data, including article schema, product schema, FAQ schema, and event schema.

- Article schema for blog posts and news.

- Product schema for e-commerce pages.

- FAQ schema for frequently asked questions.

- Event schema for event listings.

Use a structured data generator to create the correct JSON-LD format. For example, here’s a basic product schema for a smartwatch.

<script type="application/ld+json">
{
  "@context": "https://schema.org/",
  "@type": "Product",
  "name": "Galaxy Smartwatch 6",
  "image": "galaxy-smartwatch.jpg",
  "brand": {
    "@type": "Brand",
    "name": "Samsung"
  },
  "offers": {
    "@type": "Offer",
    "priceCurrency": "USD",
    "price": "399.99",
    "availability": "https://schema.org/InStock"
  },
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": "4.6",
    "reviewCount": "2975"
  }
}
</script>

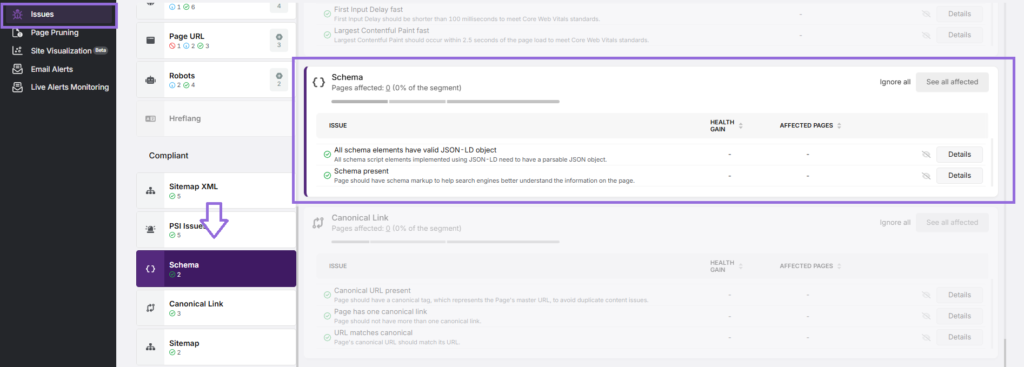

Use the Search Atlas Site Audit Tool. Navigate to the Issues Section to check if your schema elements are valid.

To monitor and validate structured data, go to Google Search Console, open the report for your schema type under Enhancements (for example, Breadcrumbs), and check whether your structured data is recognized.
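Many structured data failures are plain JSON mistakes, such as a trailing comma or a number written as "2,975", that break the whole block. Before deploying, JSON-LD can be extracted and parsed with Python's standard library. This minimal sketch checks only that each block is valid JSON and carries `@context` and `@type` (or an `@graph`); it does not apply Google's rich result requirements, which the Rich Results Test covers.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").strip().lower()
        if tag == "script" and script_type == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data  # script text may arrive in several chunks

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False


def check_jsonld(html):
    """Report JSON syntax errors and missing @context/@type keys (not Google's full rules)."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    problems = []
    for number, raw in enumerate(parser.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            problems.append(f"block {number}: invalid JSON ({err.msg})")
            continue
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                problems.append(f"block {number}: expected a JSON object")
                continue
            required = ("@context",) if "@graph" in item else ("@context", "@type")
            problems += [f"block {number}: missing {key}" for key in required if key not in item]
    return problems
```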

4. Perform Log File Analysis

Log file analysis helps you understand how often Googlebot and other crawlers visit your pages, identify crawl errors such as 404 pages, redirects, and disallowed URLs, and optimize crawl budget by ensuring search engines focus on the most important pages.

Log files are stored on your server and contain records of each request made by search engine bots. You can find them via the tools listed below.

- Your web hosting provider’s log manager.

- Server control panels like cPanel, or the Apache and Nginx access logs directly.

- Third-party tools such as Screaming Frog’s SEO Log File Analyser.

Check for the pages that are crawled most frequently, pages that rarely or never get crawled (potential indexing issues), and bots wasting crawl budget on unimportant URLs (e.g., faceted navigation, thin content).

Three effective ways to fix crawl inefficiencies are listed below.

- Block unnecessary pages in robots.txt to avoid wasting crawl budget.

- Use internal linking to direct crawlers toward priority pages.

- Resolve repeated redirect chains to avoid crawl delays.

Regular log file analysis helps confirm that Google is crawling the right pages, maximizing indexing and search visibility.
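If you prefer scripting to a dedicated log analyser, Apache and Nginx access logs in the common "combined" format can be summarized with Python's standard library. This sketch counts Googlebot requests per path and per status code. The regular expression assumes the combined format, so adjust it if your server logs differently. User-agent strings can be spoofed, so Google recommends verifying real Googlebot traffic with a reverse DNS lookup before trusting the counts.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format; adjust the pattern if your server logs differently.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count Googlebot requests per path and per status code."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # User-agent strings can be spoofed; verify IPs before trusting these counts.
        if not match or "Googlebot" not in match["agent"]:
            continue
        paths[match["path"]] += 1
        statuses[match["status"]] += 1
    return paths, statuses
```

`paths.most_common(20)` shows the most-crawled URLs, and `sum(n for p, n in paths.items() if "?" in p)` estimates how much crawl activity goes to parameterized URLs such as faceted navigation.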

5. Use Hreflang Tags in Multilingual Pages

The hreflang tag is an HTML attribute that tells search engines which language and regional version of a page to serve to users based on their location and language preferences. Proper hreflang implementation in multilingual pages guarantees that users see the most relevant version of your site.

To implement hreflang tags, use the correct format in the <head> section of each language version. For example, here’s how to set hreflang attributes for a website with a German version.

<link rel="alternate" hreflang="de" href="https://example.com/de/" />

Use x-default to mark the fallback version, such as a global homepage or language selector, that search engines should show to users who do not match any listed language or region.

Three best practices for correct hreflang implementation are listed below.

- Make hreflang annotations bidirectional: every language version should reference all other versions, as well as itself.

- Use the correct ISO (International Organization for Standardization) codes: ISO 639-1 for languages and, optionally, ISO 3166-1 alpha-2 for regions (e.g., en-gb for British English, fr-ca for Canadian French).

- Check for incorrect hreflang mapping that might cause search engines to ignore the tags.
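The bidirectional rule is easiest to satisfy when every version's hreflang block is generated from one shared mapping. The minimal Python sketch below assumes a hypothetical mapping of hreflang codes to URLs for a single page. Because every version emits the same complete set, including itself and an x-default fallback, the annotations are reciprocal by construction, and a small helper checks a page map for missing return links.

```python
from html import escape

# Hypothetical URLs for every language/region version of one page.
ALTERNATES = {
    "en-gb": "https://example.com/uk/",
    "fr-ca": "https://example.com/fr-ca/",
    "de": "https://example.com/de/",
    "x-default": "https://example.com/",  # fallback for unmatched users
}


def hreflang_links(alternates):
    """Build the link tags that go in the head of EVERY version listed."""
    return "\n".join(
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in alternates.items()
    )


def is_reciprocal(pages):
    """Return True if every page's hreflang set references all pages, itself included.

    `pages` maps each page URL to the set of URLs its hreflang tags point to.
    """
    all_urls = set(pages)
    return all(all_urls <= referenced for referenced in pages.values())


print(hreflang_links(ALTERNATES))

# Emitting the same full set on every version makes the mapping reciprocal.
pages = {url: set(ALTERNATES.values()) for url in ALTERNATES.values()}
print(is_reciprocal(pages))  # True
```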

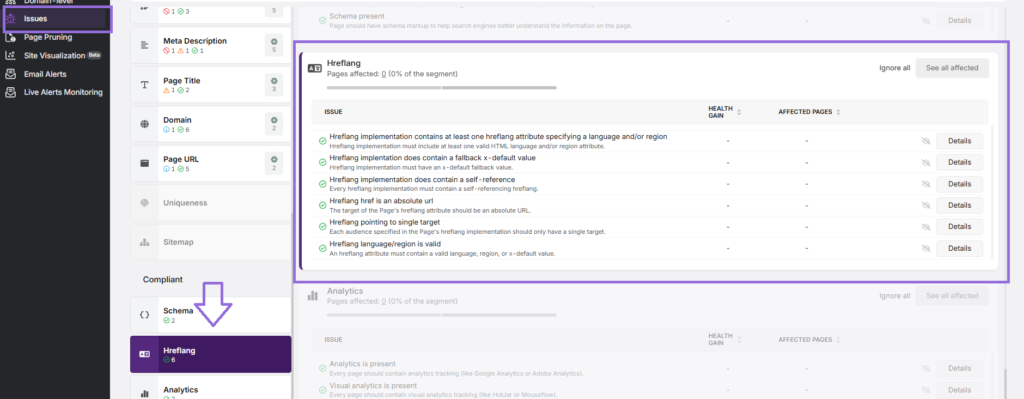

Use the Search Atlas Site Audit Tool and open the Issues section to detect missing or incorrect hreflang tags.

Google Search Console retired its International Targeting report in 2022, so monitor hreflang tags through regular site audits and use the URL Inspection tool’s live test to check the rendered HTML of individual pages.

6. Assess Current CMS Limitations for SEO and Perform a Migration if Needed

A content management system (CMS) plays a critical role in a website’s SEO performance. Some CMS platforms have technical limitations that can hurt search engine visibility, slow down page loads, or restrict customization. If a CMS fails to meet SEO requirements, migrating to a more SEO-friendly CMS may be necessary.

To assess CMS limitations for SEO, analyze your CMS’s capabilities in the areas listed below.

- URL Structure & Customization. Does the CMS allow clean, keyword-rich URLs (example.com/category/product) instead of complex query parameters (example.com/?p=123)?

- Page Speed Optimization. Does the CMS enable caching, minification, and image compression for better Core Web Vitals?

- Indexation Control. Can you edit robots.txt, meta robots tags, and canonical URLs easily?

- Schema Markup Integration. Does the CMS support structured data without manual coding?

- Redirect Management. Does the CMS let you create and manage 301 redirects to avoid broken links and ranking drops when URLs change?

If your CMS has serious SEO limitations that prevent optimization, consider migrating to a more SEO-friendly platform like WordPress, Shopify (for e-commerce), or Webflow.
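Whatever platform you choose, a migration usually ends with a redirect map from old URLs to new ones. The minimal Python sketch below assumes the map is a plain dictionary of hypothetical source and target paths. It follows each chain to its final destination so every old URL can 301 directly there, and it flags loops (or overly long chains) for manual review instead of following them forever.

```python
def flatten_redirects(redirects, max_hops=10):
    """Resolve each source URL to its final destination.

    Returns (flat_map, loops): flat_map points every source straight at its
    final target; loops lists sources whose chain cycles or exceeds max_hops.
    """
    flat, loops = {}, []
    for source in redirects:
        seen = {source}
        target = redirects[source]
        hops = 1
        while target in redirects:  # the target itself redirects: keep following
            if target in seen or hops >= max_hops:
                loops.append(source)
                break
            seen.add(target)
            target = redirects[target]
            hops += 1
        else:  # chain ended at a URL that does not redirect
            flat[source] = target
    return flat, loops


# Hypothetical redirect map left behind by two rounds of URL changes.
redirect_map = {
    "/old-shoes": "/shoes",
    "/shoes": "/products/shoes",  # chain: /old-shoes -> /shoes -> /products/shoes
    "/a": "/b",
    "/b": "/a",                   # loop that needs a manual fix
}

flat, loops = flatten_redirects(redirect_map)
print(flat)
print(loops)
```

The flattened map is what you would load into the new CMS’s redirect manager, so no old URL needs more than one hop.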

How to Improve Technical SEO Using Search Atlas OTTO SEO?

OTTO SEO automates technical SEO optimizations in seconds, ensuring that websites are fully crawlable, indexable, and structurally sound for search engines. Users can apply fixes site-wide or individually, allowing precise control over technical SEO improvements while ensuring continuous monitoring and optimization.

The most influential technical SEO categories that Search Atlas OTTO SEO optimizes automatically are listed below.

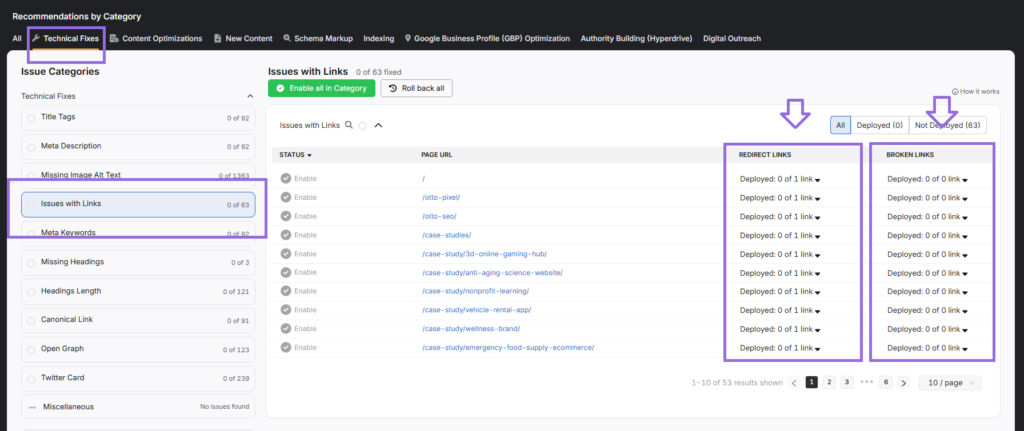

- Issues with Links. OTTO SEO’s Issues with Links module helps streamline internal and external link optimization by identifying redirected internal links that need updates and suggesting replacements for broken external links.

OTTO makes certain that pages link directly to their final destinations, eliminating unnecessary redirects and improving site structure.

When an internal link points to a redirected URL, it forces an extra redirect hop that slows down crawling and user navigation. OTTO detects these outdated internal links and suggests replacing them with the final destination URL.

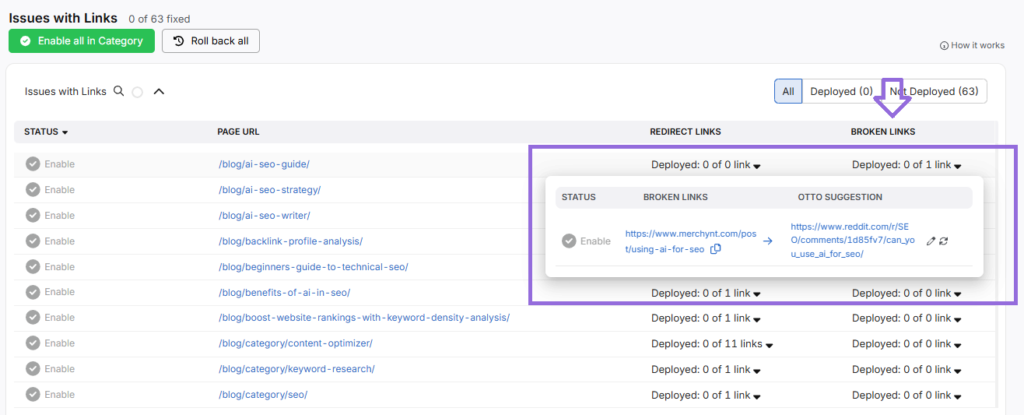

OTTO detects broken external links (leading to 404 pages) and suggests replacement URLs to maintain link integrity.

Users can deploy the suggestion, request a new recommendation, or manually edit and replace the link.

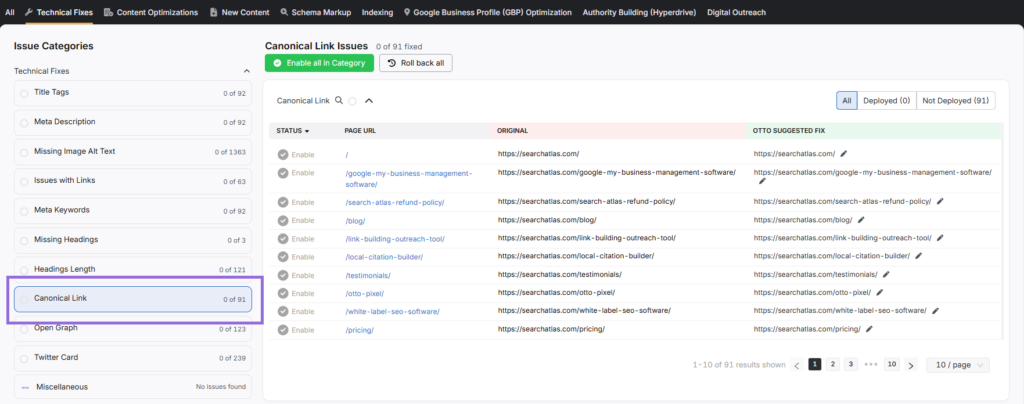

- Canonical Issues. OTTO SEO’s Canonical Link Issues module helps search engines understand which version of a page is the authoritative URL, improving indexation.

OTTO automatically detects incorrect, missing, or outdated canonical tags and suggests updates for better indexation.

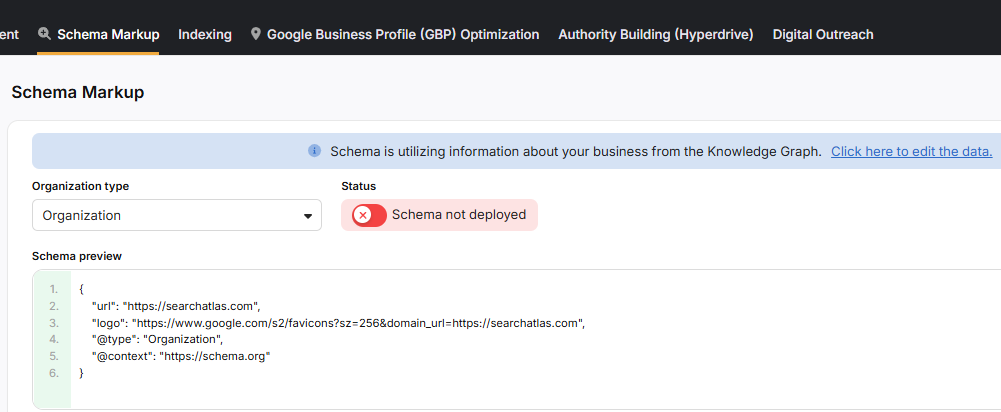

- Schema Markup. OTTO SEO’s Schema Markup module helps implement organization-level structured data, making it easier for search engines to understand and display key business details in search results.

OTTO automatically generates organization-level schema by extracting data from the Knowledge Graph, helping search engines receive accurate, up-to-date business information without manual input.
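For reference, organization-level structured data is commonly published as a JSON-LD script that uses the schema.org Organization type. The Python sketch below shows what such markup might look like; it is not OTTO’s output format, and the business details are placeholders.

```python
import json

# Placeholder business details; replace them with your organization's real data.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Co.",
    "url": "https://example.com/",
    "logo": "https://example.com/logo.png",
    "sameAs": [
        "https://www.linkedin.com/company/example",
        "https://x.com/example",
    ],
}


def to_json_ld_script(data):
    """Wrap structured data in the script element that is embedded in the page's HTML."""
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(to_json_ld_script(organization))
```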

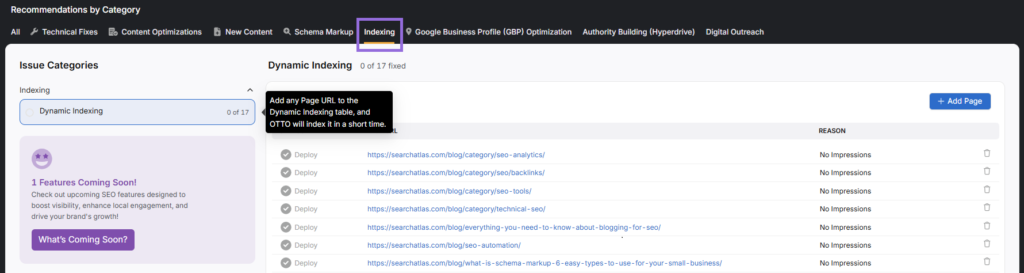

- Indexing. OTTO SEO accelerates the indexing process, helping new pages and updates get discovered and indexed sooner instead of waiting for Googlebot’s next crawl cycle.

OTTO detects low-impression pages and automatically resubmits them for indexing using Dynamic Indexing to enhance search presence. For those looking for URL indexing tools, Search Atlas also offers AI-driven solutions such as Instant Indexing, which submits URLs for crawling and indexing, reducing the delay between publishing and ranking.

Beyond resolving issues with links, canonical tags, schema markup, and indexing, OTTO SEO automates other fundamental technical SEO fixes. The platform optimizes title tags, meta descriptions, and Open Graph tags and fills in missing image alt text, ensuring consistent metadata for better search visibility and social media performance. It also detects missing headings, headings of incorrect length, and meta keyword tags, refining page structure and content clarity.

For improved crawlability and content distribution, OTTO SEO also identifies Twitter Card errors, Open Graph misconfigurations, and problematic robots.txt directives, making technical SEO more efficient and scalable. By addressing these areas in real time, OTTO SEO helps maintain a well-optimized, high-performing website.

How to Conduct a Technical SEO Site Audit

A technical SEO audit evaluates a website’s technical infrastructure and performance to identify optimization opportunities and issues affecting search engine visibility. Conducting a technical audit involves a systematic examination of various website elements using specialized tools and methodologies.

The 4 essential steps to conduct a technical SEO audit are listed below.

- Run a complete site crawl. Perform a comprehensive website crawl using the Search Atlas Site Audit Tool to gather data about technical elements, such as redirects, status codes, and meta information.

- Analyze technical issues. Review crawl data to identify problems like broken links, missing meta tags, improper redirects, and page speed issues.

- Optimize based on best practices. Apply technical SEO best practices to resolve identified issues, following search engine guidelines and current web standards.

- Track and report results. Monitor changes through the Site Audit tool and create reports to document improvements and ongoing optimization needs.
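The "analyze technical issues" step is essentially a filtering pass over the crawl export. The minimal Python sketch below assumes each crawled page is a dictionary with hypothetical `url`, `status`, `title`, and `meta_description` fields (real crawlers name and structure these differently) and groups pages by the issue types mentioned above.

```python
from collections import defaultdict


def classify_issues(pages):
    """Group crawled pages by technical issue type."""
    issues = defaultdict(list)
    for page in pages:
        status = page["status"]
        if 400 <= status < 500:
            issues["broken (4xx)"].append(page["url"])
        elif status >= 500:
            issues["server error (5xx)"].append(page["url"])
        elif 300 <= status < 400:
            issues["redirect (3xx)"].append(page["url"])
        else:
            # Only check metadata on pages that actually returned content.
            if not page.get("title"):
                issues["missing title"].append(page["url"])
            if not page.get("meta_description"):
                issues["missing meta description"].append(page["url"])
    return dict(issues)


# Hypothetical crawl export.
crawl = [
    {"url": "/", "status": 200, "title": "Home", "meta_description": "Welcome"},
    {"url": "/about", "status": 200, "title": "About", "meta_description": ""},
    {"url": "/old", "status": 301},
    {"url": "/gone", "status": 404},
]

for issue, urls in classify_issues(crawl).items():
    print(f"{issue}: {urls}")
```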

A technical SEO audit report helps SEO specialists understand current performance and track progress while maintaining optimal visibility in search results. Regular technical analysis helps websites stay competitive and meet search engine requirements.

What Are the Best Technical SEO Tools?

The best technical SEO tools provide features for crawling, analyzing, monitoring, and optimizing a website’s technical performance. These tools identify technical issues that affect search visibility and help resolve them, so the choice of tools directly shapes how effective the optimization process is.

The best technical SEO tools are the official Google SEO tools, such as Google Search Console, and AI-powered SEO tools like the Search Atlas SEO software platform. These tools provide different technical SEO insights for website optimization and monitoring processes. The best technical SEO tools are listed below.

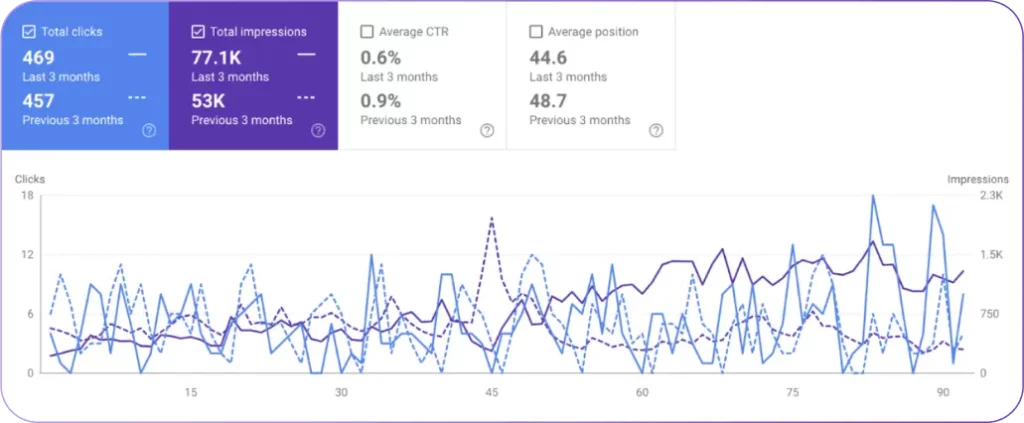

Google Search Console (GSC) serves as the primary technical SEO monitoring tool. GSC’s Page indexing report (formerly the Index Coverage report) shows which pages are indexed and why others are not. GSC’s Performance report displays clicks, impressions, click-through rate, and average position for the queries and pages that appear in Google Search. GSC’s URL Inspection tool shows the indexing status of a specific URL and can run a live test of the page.

Google Analytics 4 (GA4) measures website performance through user interaction metrics. GA4 tracks user engagement patterns, conversion paths, and behavioral flows across pages. The enhanced measurement features of GA4 monitor scroll depth, outbound clicks, and site search usage. GA4 provides real-time monitoring for immediate performance insights and technical issue detection.

PageSpeed Insights (PSI) focuses on performance optimization opportunities. PSI reports the Core Web Vitals: Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay in March 2024), and Cumulative Layout Shift (CLS). The lab data section of PSI, powered by Lighthouse, identifies specific technical improvements for better performance. PSI provides separate scores and recommendations for mobile and desktop.
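Google publishes "good" and "poor" thresholds for each Core Web Vital, assessed at the 75th percentile of page loads: LCP is good at 2.5 seconds or less and poor above 4 seconds, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. The minimal Python sketch below rates 75th-percentile field values against those thresholds. The sample values are made up, and the function does not fetch data from PSI itself.

```python
# Google's published Core Web Vitals thresholds: (good up to, poor above).
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # Largest Contentful Paint, seconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def rate(metric, p75_value):
    """Rate a 75th-percentile field value as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if p75_value <= good:
        return "good"
    if p75_value > poor:
        return "poor"
    return "needs improvement"


# Hypothetical 75th-percentile values for one page.
page = {"LCP": 3.1, "INP": 180, "CLS": 0.3}
for metric, value in page.items():
    print(metric, value, rate(metric, value))

# A page passes the Core Web Vitals assessment only if all three metrics are good.
passes = all(rate(metric, value) == "good" for metric, value in page.items())
print("Passes Core Web Vitals assessment:", passes)
```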

Search Atlas SEO Software Platform serves as an all-in-one AI-driven SEO tool. Search Atlas tools enhance technical optimization processes through multiple features, leveraging AI-driven automation for site architecture analysis, internal linking, Core Web Vitals, schema markup, and JavaScript rendering. Its intelligent monitoring system detects technical changes, security issues, and performance shifts in real-time, ensuring peak search visibility.

Combining Google’s tools and Search Atlas technical SEO tools creates a complete technical SEO optimization system.

What Are the Different Types of Technical SEO?

Different types of technical SEO have emerged to address specific challenges related to performance, rendering, and accessibility.

The types of technical SEO are listed below.

- Mobile SEO. Optimizes websites for mobile-first indexing by ensuring responsive design, fast loading speeds, and mobile-friendly navigation. Mobile SEO uses Google’s Lighthouse and Core Web Vitals assessments to enhance usability.

- JavaScript SEO. Ensures JavaScript-based content is crawlable and indexable by search engines. JavaScript SEO focuses on server-side rendering (SSR), dynamic rendering, and pre-rendering to prevent content hidden behind JavaScript from being missed.

- Accessibility SEO. Enhances website accessibility for users with disabilities by improving keyboard navigation, alt text for images, ARIA attributes, and color contrast. Accessibility SEO uses Google Lighthouse and WCAG (Web Content Accessibility Guidelines) compliance checks.

- React SEO. Focuses on optimizing React-based websites. React SEO leverages server-side rendering (SSR) with Next.js, optimizes hydration, and ensures Googlebot can properly index dynamic components.

- Next.js SEO. Specializes in Next.js applications. Next.js SEO ensures efficient routing, lazy loading, dynamic imports, and structured data implementation to enhance search visibility.

- Headless SEO. Involves optimizing headless CMS setups where the frontend and backend are decoupled. Headless SEO requires proper API structuring, dynamic rendering solutions, and metadata optimization to maintain strong SEO performance.

- Edge SEO. Uses CDN-based optimizations and serverless functions at the edge. Edge SEO improves load speed, reduces latency, and executes SEO optimizations in real-time before content reaches the user’s browser.

Each type of technical SEO ensures that websites are search-friendly, fast, and accessible. Choosing the right approach depends on the website’s framework, technology stack, and user experience goals.

What Industries Need Advanced Technical SEO the Most?

Advanced technical SEO is crucial for industries using websites with complex structures, high traffic volumes, or dynamically generated content.

The four industries that need advanced technical SEO the most are listed below.

- E-commerce Businesses. E-commerce businesses rely heavily on SEO for revenue generation, making technical SEO essential for e-commerce platforms such as Shopify, WooCommerce, and Magento. Large product catalogs, dynamically generated URLs, and faceted navigation can lead to index bloat and crawl budget inefficiencies. Implementing structured data for rich snippets, optimizing pagination, and managing canonical tags help product pages rank.

- Large Websites & Publishers. Large websites with millions of pages, such as news portals, job boards, and enterprise sites, need robust technical SEO platforms to smoothly manage indexing. Log file analysis, XML sitemap optimization, and crawl budget management are critical to ensuring search engines prioritize high-value content. Technical SEO for WordPress-based news sites involves optimizing for Google Discover, AMP (Accelerated Mobile Pages), and Core Web Vitals to improve search visibility.

- SaaS Companies. SaaS companies rely on content marketing, landing pages, and programmatic SEO to attract leads. Many SaaS websites use JavaScript frameworks like React or Next.js, which require server-side rendering (SSR) or pre-rendering to make sure search engines index content properly. Technical SEO for SaaS includes optimizing API documentation, improving site speed with Edge SEO, and using structured data to enhance knowledge panels and search result features.

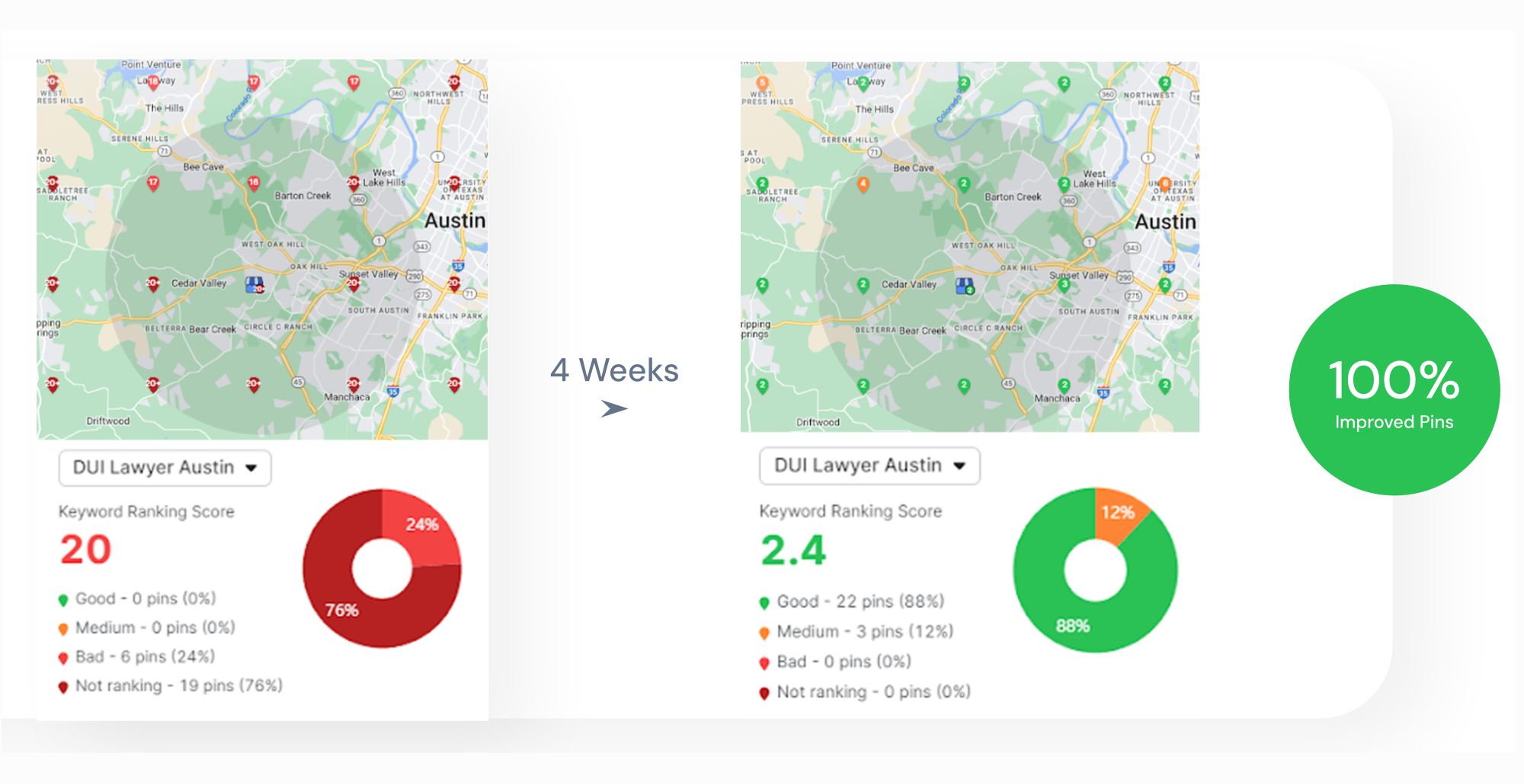

- Law Firms. Law firms depend on local SEO, schema markup, and strong site structure to attract clients in competitive markets. Implementing technical SEO for WordPress-based law firm sites includes optimizing local business schema, ensuring mobile responsiveness, and setting up hreflang tags for multilingual content. Site speed, security (HTTPS), and clear navigation also strengthen these sites in competitive local searches, making technical SEO a critical factor for success.
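For the e-commerce case, one common tactic is to point faceted and tracking-parameter variants of a category URL at a single canonical URL. The minimal Python sketch below uses `urllib.parse`. Which parameters count as facets (here `color`, `size`, `sort`, `sessionid`, and `utm_*`) is a hypothetical policy that differs for every store; pagination (`page`) is deliberately kept so each paginated page stays its own canonical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical policy: parameters that create duplicate views of the same content.
IGNORED_PARAMS = {"color", "size", "sort", "sessionid"}
IGNORED_PREFIXES = ("utm_",)


def canonical_url(url):
    """Strip facet/tracking parameters and sort the rest for a stable canonical URL."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith(IGNORED_PREFIXES)
    )
    # Lowercase the host and drop any #fragment, which search engines ignore anyway.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the canonical link element for a (possibly faceted) URL."""
    return f'<link rel="canonical" href="{canonical_url(url)}" />'


print(canonical_tag("https://Example.com/shoes?color=red&sort=price&page=2&utm_source=mail"))
```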

Advanced technical SEO keeps these industries’ websites search-friendly, scalable, and high-performing. By leveraging structured data, optimized site architecture, and JavaScript SEO strategies, businesses can maximize their search visibility and drive sustainable organic growth.
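For large publishers, XML sitemap optimization starts with the protocol’s limits: each sitemap file may list at most 50,000 URLs and be no larger than 50 MB uncompressed, so bigger sites split their URLs across several files tied together by a sitemap index. The minimal Python sketch below uses the standard-library `xml.etree.ElementTree` module. The URLs and file names are hypothetical, and the file-size limit is not checked.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file URL limit from the sitemaps.org protocol


def build_sitemaps(urls, base="https://example.com/", chunk_size=MAX_URLS):
    """Split URLs into sitemap XML strings plus one sitemap index that lists them."""
    files = {}
    for start in range(0, len(urls), chunk_size):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc in urls[start:start + chunk_size]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = loc
        name = f"sitemap-{start // chunk_size + 1}.xml"
        files[name] = ET.tostring(urlset, encoding="unicode")

    # The index points search engines at every individual sitemap file.
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name in files:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = base + name
    files["sitemap-index.xml"] = ET.tostring(index, encoding="unicode")
    return files


# Hypothetical article URLs; a small chunk_size keeps the demo output short.
articles = [f"https://example.com/news/story-{i}" for i in range(5)]
for name, content in build_sitemaps(articles, chunk_size=2).items():
    print(name, len(content), "characters")
```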

How to Start a Technical SEO Agency?

Starting a technical SEO agency involves ten essential steps that help you build expertise, attract clients, and scale operations effectively. The steps are listed below.

- Develop technical SEO expertise. Master key areas like crawling, indexing, Core Web Vitals, structured data, and site architecture by staying updated with Google’s algorithm changes and using industry tools like Google Search Console and Search Atlas SEO Platform.

- Define your niche. Specialize in a specific industry or type of business (e.g., e-commerce, SaaS, local businesses) to stand out from competitors.

- Set up your business structure. Register your agency, create a professional website, and optimize your brand presence using structured data, a clear service page, and case studies showcasing results.

- Invest in essential SEO tools. Use Search Atlas for site audits, indexing management, and automation, alongside Google Search Console, Google Analytics 4, PageSpeed Insights, and Google Lighthouse for performance optimization.

- Create a scalable SEO audit process. Develop a streamlined method for conducting technical SEO audits, generating reports, and delivering insights that clients can act on.

- Develop service packages. Structure well-defined technical SEO service packages that cater to your target clients’ needs. Leverage a white-label SEO solution from an established SEO platform, such as Search Atlas White-Label SEO, to offer fully branded, customizable reports and services under your agency’s name.

- Build a client acquisition strategy. Use content marketing, LinkedIn outreach, cold email campaigns, and PPC ads to attract high-value clients looking for technical SEO consulting.

- Optimize for enterprise-level clients. Leverage enterprise-grade tools and automation to manage complex site structures and enhance search visibility at scale. Develop custom SEO frameworks for large-scale websites using Search Atlas Enterprise SEO to establish crawl budget optimization, log file analysis, and schema markup implementation.

- Automate SEO processes. Use AI-powered tools like Search Atlas OTTO SEO to automate redirect management, internal linking optimization, and schema deployment, improving efficiency.

- Scale your agency with retainer clients. Offer monthly SEO retainers that cover ongoing technical optimizations, algorithm monitoring, and continuous performance improvements for long-term client success.

How to Become a Technical SEO Specialist?

Becoming a technical SEO specialist involves eight essential steps that help you develop technical SEO skills, gain practical experience, and build a career in technical SEO. The steps are listed below.

- Understand the role of a technical SEO specialist. A technical SEO specialist works at the intersection of search engine algorithms, web development, and site architecture to ensure that search engines can efficiently process and rank web pages.

- Know the core principles of technical SEO. Study crawling, indexing, Core Web Vitals, structured data, page speed optimization, site architecture, and security protocols. Master Google’s Search Essentials and documentation on ranking factors.

- Familiarize yourself with technical SEO tools. Gain hands-on experience with Google Search Console, Google Analytics 4, PageSpeed Insights, Google Lighthouse, Search Atlas, Chrome DevTools, and Schema Markup Validators to analyze site health and detect technical errors.

- Develop a strong foundation in web technologies. Learn HTML, CSS, JavaScript, and server-side concepts like HTTP status codes, redirects, and CDNs to troubleshoot and implement technical fixes.

- Gain practical experience through SEO audits. Conduct technical SEO audits on real websites, identifying indexing errors, redirect chains, mobile usability issues, and schema markup opportunities using site audit tools.

- Understand website migrations and URL structure. Learn how to execute domain migrations, manage redirects, and optimize URL structures to prevent ranking losses during major site changes.

- Stay updated with algorithm changes and SEO trends. Follow Google algorithm updates, Core Web Vitals changes, and advancements in AI-driven search to continuously adapt your SEO strategies.

- Build a portfolio with case studies. Document successful technical SEO projects, audits, and performance improvements to showcase your expertise when applying for jobs or freelance work.

By following these steps, you can learn SEO from a technical perspective, specialize in solving complex site performance and indexing issues, and become a sought-after tech SEO specialist.

What is the Difference Between Technical SEO and On-Page SEO?

Technical SEO focuses on optimizing a website’s underlying architecture. The primary goal of technical SEO is to eliminate technical obstacles such as broken links or misconfigured redirects that could hinder search engines from properly indexing your pages.

On-page SEO focuses on optimizing individual pages. On-page optimization involves keyword placement, meta tags, internal linking, header tags, and content structure to enhance readability and engagement. The goal of on-page SEO is to improve a webpage’s content and structure to make it more relevant for specific search queries.

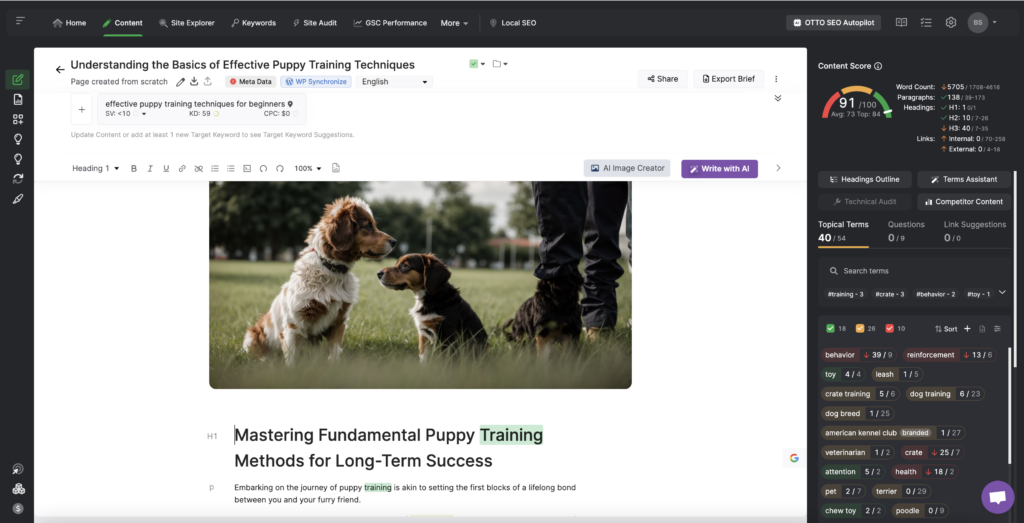

Technical SEO relies on tools like PageSpeed Insights for load time optimization, the Search Atlas Site Audit Tool for comprehensive website audits, and Google Lighthouse for in-depth performance analysis. On-page SEO utilizes tools such as Yoast SEO for optimizing meta tags and readability, Search Atlas OTTO for keyword and content scoring as well as structured data implementation, and the Search Atlas On-Page Audit Tool for evaluating and improving on-page elements.

What is the Difference Between Technical SEO and Content SEO?

Technical SEO is dedicated to optimizing the structural aspects of a website to facilitate efficient crawling and indexing by search engines. Content SEO, on the other hand, revolves around the creation and optimization of high-quality, relevant content that meets the needs of your audience. The goal of content SEO is to provide valuable, well-optimized content that meets user search intent, improves engagement, and ranks well in search results.

Technical SEO uses tools like Google Search Console, Screaming Frog, and the Search Atlas Site Audit Tool to ensure adherence to technical best practices. Content SEO relies on tools such as Search Atlas OTTO and the Content Genius Tool to develop optimized content with strategic keyword integration, internal linking, and engaging formats. It also incorporates insights from Google Search Console and Google Analytics 4 to refine content strategies based on changes in search performance and user behavior.