Meta robots tags and X-robots tags are directives that control how search engines crawl and index pages. Meta robots tag and X-robots tag help manage crawl budget, eliminate duplicate content issues, and improve index control across different file types. They prevent low-value pages from diluting your site’s authority and ensure that only the most relevant URLs appear in search results.

To apply meta robots tag and x-robots tag, you must understand when to use HTML vs. header-level tags, maintain directive consistency, and regularly audit your implementation using tools like the Search Atlas Site Audit Tool. The wrong meta robots tag and X-robots tag configuration silently blocks valuable content and confuses crawlers, which hurts your SEO performance.

What Is a Meta Robots Tag?

A meta robots tag is an HTML tag or a directive that tells search engine crawlers how to index and follow a web page. Meta robots tags provide specific instructions to search engine crawlers about whether to index a page, follow links, or cache content. Meta robots tags appear in the HTML head section of web pages and communicate directly with search engine bots.

Search engines recognize meta robots tags as authoritative directives for page handling. The robots meta tag uses name=”robots” as the primary identifier. Web developers place these tags between the opening and closing head tags of HTML documents.

Meta robots tags control three primary functions of indexing permissions, link following behavior, and content caching rules. These directives help website owners manage search engine visibility and crawl budget allocation across their site architecture.

What Are the Types of Meta Robots Tag Values?

Meta robots tag values define specific indexing and crawling behaviors for a page. Search engines read the meta robots tag values to apply access rules as shown below.

- index. Index the page and display it in search results.

- noindex. Exclude the page from search results.

- follow. Follow the links on the page.

- nofollow. Do not follow links on the page.

- noarchive. Do not store a cached copy of the page.

- nosnippet. Do not show a snippet in the SERP.

- max-snippet:-1. Show unlimited snippet length.

- max-image-preview:large. Show large image previews.

Where Do You Place a Meta Robots Tag?

The meta robots tag must appear inside the <head> section of an HTML document. If placed in the <body>, Google and other crawlers ignore it during the indexing process.

Search engines read the head section before rendering the page body. Incorrect placement causes the tag to be missed entirely, which results in unintended indexing. To avoid crawl waste or accidental exposure, always insert the robots tag between the <head> and </head> tags.

The example of proper meta robots tag placement is below.

<head>

<meta name="robots" content="noindex, follow">

</head>

How Do Meta Robots Tags Affect SEO?

Meta robots tags directly affect SEO by telling crawlers what to index and follow.

Incorrect use of noindex or nofollow removes pages from search results. Correct configurations help manage crawl budgets, hide low-value content, and pass link equity. Meta robots tags are one of the most crucial SEO considerations as they greatly support clean indexing strategies for large websites.

What Is an X-Robots Tag?

An x-robots tag is an HTTP header directive that serves the same function as meta robots tags but operates at the server level. X-robots tags provide identical crawling and indexing instructions through HTTP response headers instead of HTML markup. X-Robots tags work for all file types, including PDFs, images, and non-HTML documents.

Server administrators configure X-Robots tags through web server settings or application code. The X-Robots-Tag header appears in HTTP responses before browsers or crawlers process page content, and it’s an important component of SEO for PDF. This server-level implementation offers more flexibility than HTML-based meta tags.

X-Robots tags use identical directive values as meta robots tags.

How to Configure an X-Robots-Tag?

Configure an X-Robots-Tag in Apache, Nginx, or through server-side scripting as listed below.

Apache (.htaccess) configuration example is below.

<FilesMatch "\.pdf$">

Header set X-Robots-Tag "noindex, noarchive"

</FilesMatch>

Nginx configuration example is below.

location ~* \.pdf$ {

add_header X-Robots-Tag "noindex, noarchive";

}

PHP header configuration example is below.

header("X-Robots-Tag: noindex, noarchive");

This implementation supports selective control by file type, directory, or response condition.

Should You Use Meta Robots Tag or X-Robots Tag?

Use a meta robots tag when you need to control the behavior of individual pages inside a CMS.

Use an X-Robots tag when you need to set indexing directives for assets or non-HTML URLs handled through the server or CDN.

When Should You Use Noindex Directives?

Use noindex for duplicate content pages, thin content sections, and user-specific areas that provide no search value. Noindex directives prevent index bloat and focus crawl budget on valuable content.

E-commerce sites benefit from noindex on filter pages, search result pages, and pagination sequences. Ecommerce SEO involves pages that often contain duplicate or minimal content that dilutes search visibility. Noindex directives concentrate ranking signals on product pages and category pages.

Internal search results, login pages, and checkout processes require noindex implementation. These functional pages serve user needs but provide no search value. Noindex directives prevent these pages from competing with content pages for search visibility.

Staging environments and development pages need noindex protection during active development. This prevents incomplete or test content from appearing in search results. Remove noindex directives only after the content review and approval processes are complete.

When Should You Use Nofollow Directives?

Use nofollow directives on pages with untrusted outbound links, user-generated content sections, or low-value internal pages. Nofollow directives control link equity distribution and crawler navigation patterns. Nofollow directives preserve link authority for high-priority content areas.

Comment sections, forum posts, and user profiles often require nofollow technical SEO implementation. User-generated content frequently contains spam links or irrelevant external references. Nofollow directives prevent these links from affecting site authority distribution.

Pagination pages, archive sections, and tag pages benefit from selective nofollow application. These pages serve organizational purposes but should not receive significant link equity. Nofollow directives redirect crawler attention to content pages.

Internal search results and filter pages require nofollow consideration for technical SEO optimization. Dynamically generated pages create infinite crawl paths that waste crawler resources. Nofollow directives establish clear site architecture boundaries.

How to Audit Meta Robots and X-Robots Tags?

To audit meta robots and X-Robots tags, follow a structured process that reviews both HTML directives and HTTP headers.

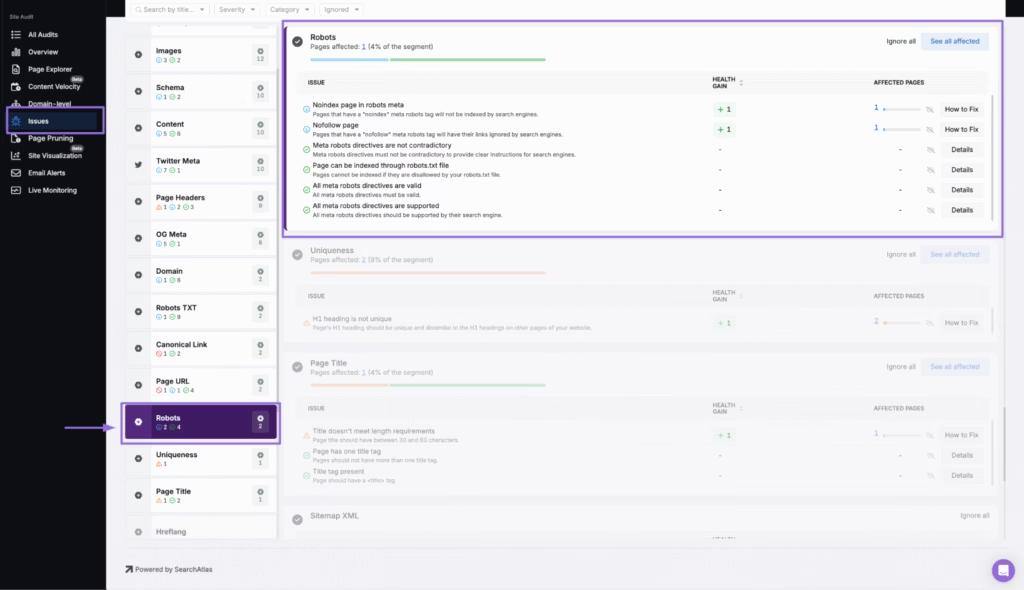

Use the Search Atlas Site Audit Tool to find, fix, and monitor issues related to meta robots and X-Robots tag directives. After running a site-wide crawl, open the Issues panel and navigate to the Robots category.

To perform a complete audit of robot tags using the Search Atlas Site Auditor, follow the 6 steps listed below.

- Open the Robots section in the Issues tab. From the left navigation panel, select “Issues” and scroll to the “Robots” card. This section surfaces all robot-based indexing issues across the site.

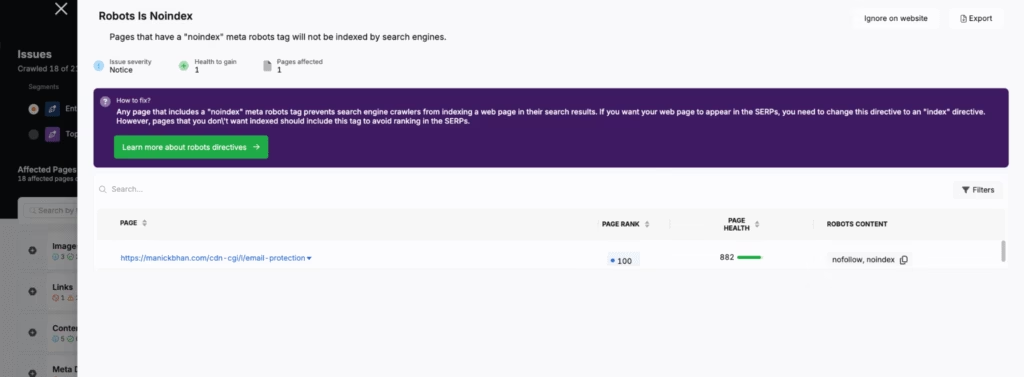

- Review flagged pages with noindex or nofollow. Click on each listed issue, like “Noindex page in robots meta” or “Nofollow page,” to view affected pages. These directives block pages from appearing in search results or from passing link equity.

- Check that directive usage matches intent. Open each affected URL and verify if it truly requires a

noindexornofollow. Pages such as admin panels or gated content may require exclusion, but important product or content pages should not. - Inspect for conflicts or unsupported directives. Review warnings like “Meta robots directives are not contradictory” or “All meta robots directives are valid.” Conflicting or unsupported tags confuse crawlers and disrupt indexing.

- Validate robots.txt impact on indexability. Check for the issue “Page can be indexed through

robots.txtfile.” A page blocked by robots.txt cannot apply meta or X-Robots-Tag directives because search engines skip reading the content. Adjust robots.txt or move the directive to headers if needed. - Use ‘How to Fix’ for guided resolution. Click the “How to Fix” button next to each issue. Follow the Search Atlas instructions to adjust meta tags in the page source or update HTTP headers via server configuration.

Search Atlas SEO platform provides precise visibility into robot directives through structured issue flags, affected page counts, and health impact scoring. The Search Atlas Site Audit Tool helps eliminate unintentional deindexing and supports accurate indexing control.

What Are Common Mistakes with Meta Robot Tags and X-Robot Tags?

The common mistakes with meta robot tags and X-Robots tags cause crawl waste, indexation failures, and link equity loss. The mistakes with robot meta tags and X-robot tags often go unnoticed because crawlers follow conflicting signals or skip blocked resources silently.

The five most common mistakes with meta robot tags and X-Robots are below.

1. Blocking pages in robots.txt that include noindex tags. Search engines cannot read meta robots or X-Robots tags on pages blocked in robots.txt. When the page is disallowed, crawlers skip the entire document, which prevents noindex from being processed. Always leave crawl access open if you use noindex.

2. Placing meta robots tags outside the <head> section. Google and other bots ignore meta tags placed in the <body>. The meta robots tag must appear inside the <head> or it will not be executed.

3. Using contradictory directives (e.g., noindex + canonical). Adding noindex to a page while canonicalizing it to another URL causes index confusion. Canonical signals consolidation, while noindex requests removal. Use one directive per goal to avoid misinterpretation.

4. Applying X-Robots tags to all file types without MIME targeting. Global X-Robots settings often block assets unintentionally. PDFs, scripts, or CSS files may be excluded from indexing or caching if MIME-specific headers are not configured. Use precise rules in .htaccess or NGINX location blocks.

5. Forgetting to audit dynamically generated or non-HTML files. X-Robots tags apply to images, PDFs, and scripts, but many sites skip them during audits. If search results show unexpected file indexing, check your server headers for missing or misapplied X-Robots instructions.

The Search Atlas Site Auditor scans for these conflicts. The audit tool highlights unindexed pages, skipped headers, and contradictory directives across HTML and server levels, which ensures robots control signals align with your SEO goals.

Why Should You Avoid Mixing Canonical and Noindex Signals?

A canonical tag signals that a page should pass its value to another URL, while a noindex tag tells search engines to exclude the current page from the index entirely. When combined, canonical tags and noindex directives send conflicting instructions. Google may ignore the canonical or delay deindexation. It undermines both index hygiene and signal consolidation, especially on large-scale sites with templated content.

How Do Meta Robot Tags and X-Robot Tags Support Crawl Budget Optimization?

Meta robot tags and x-robot tags prevent search engines from wasting crawl budget on low-priority, duplicate, or non-indexable pages. By applying noindex and nofollow to filter pages, internal search results, and other thin content, you focus the crawl budget on high-value URLs. This improves the frequency and depth of crawling for important pages, which enhances index freshness and ranking stability.

What Role Do Robots Tags Play in International SEO and JavaScript SEO?

In international SEO, the meta robots tag and X-robots tag help manage localized page variants by controlling which language or regional versions appear in the search. Incorrect tag usage in international SEO may block alternate Hreflang targets or cause duplicate content indexing.

In JavaScript SEO, the meta robots tag and X-robots tags control crawler behavior before JavaScript execution. Since search engines may index pre-rendered versions, precise robot tagging ensures that dynamic content or user-specific states in JavaScript SEO do not dilute the index or expose unwanted URLs.