Large language models (LLMs) increasingly act as decision engines for local-intent queries, reshaping how people discover nearby businesses. Queries like “plumber near me,” “restaurants near me,” and “urgent care near me” now return ranked local business recommendations inside AI-generated answers across search interfaces and assistants. These citations matter because they determine which local businesses gain visibility before users engage with maps, listings, or traditional search results.

Local SEO historically relied on proximity, Google Business Profile (GBP) prominence, citations, reviews, and domain authority to influence rankings. LLMs now mediate local discovery, but uncertainty remains around whether these systems apply the same signals or operate under a different ranking logic. The missing piece is large-scale evidence that identifies which factors influence how LLMs select, order, and cite local businesses in response to “near me” queries.

This study analyzes 104,855 URL citations generated across OpenAI, Gemini, Perplexity, Grok, Copilot, and Google AI Mode between October 27 and December 3, 2025. The analysis evaluates how businesses are selected and ranked for “near me” queries and measures how different classes of signals (semantic relevance, content freshness, GBP metrics, domain authority, and schema markup) correlate with LLM ranking position.

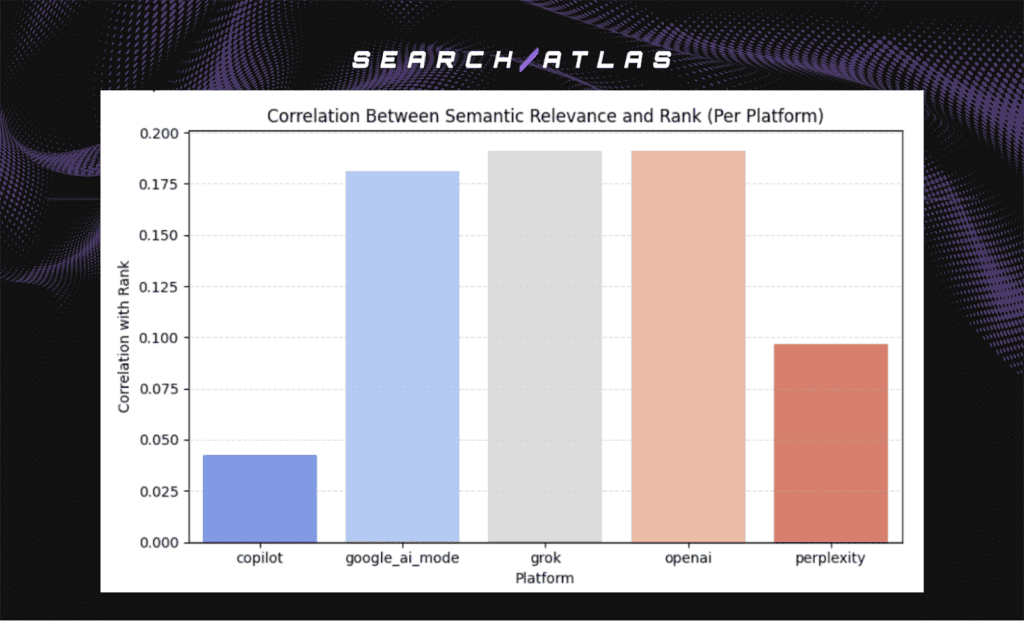

The results show that semantic relevance drives local LLM ranking behavior more strongly than Google Maps and traditional local SEO authority signals. Citations that closely match user intent consistently rank higher across most platforms, while domain authority, structured data, and map-based visibility exert inconsistent influence. Freshness and GBP quality contribute modestly and vary by model design. Taken together, these patterns indicate that LLMs prioritize how closely a page matches local intent over how a business appears inside traditional search systems.

Methodology – How Was Local LLM Ranking Measured?

This experiment measures how LLMs rank and cite local businesses for “near me” queries. The analysis evaluates whether LLMs apply traditional local SEO signals or rely on a different set of ranking drivers when ordering business citations inside AI-generated answers.

This experiment matters because local discovery increasingly occurs inside LLM responses rather than maps or organic listings. Understanding which signals influence citation order reveals whether local visibility inside AI systems follows proximity and authority models or shifts toward semantic and contextual relevance.

The study draws on one primary local-intent citation dataset, described below.

LLM Local Citation Dataset. The dataset contains 104,855 URL citations generated by OpenAI, Gemini, Perplexity, Grok, Copilot, and Google AI Mode between October 27 and December 3, 2025. All citations originate from queries containing the phrase “near me,” selected to reflect broad local discovery intent rather than branded or niche searches.

Each citation record includes the fields listed below.

- Query metadata. Query title, inferred intent, search volume, and timestamp.

- Citation metadata. Domain, URL path, LLM ranking position, mentions, and share of voice.

- Platform metadata. LLM platform, model identifier, token usage, and response creation timestamp.

This dataset forms the foundation for all ranking factor analyses in this study.

Analytical Framework

The analysis tests 5 classes of signals to determine how each relates to LLM citation ranking position. The 5 classes of signals are listed below.

1. Domain Metrics Analysis.

The analysis enriched each cited domain with Domain Power (DP), Domain Authority (DA), and Domain Rating (DR) values. The analysis retained only the most recent metric per domain and excluded domains without valid measurements. This step enables direct comparison between traditional SEO authority signals and LLM citation ranking behavior across platforms.
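A minimal sketch of this deduplication step follows, assuming each metric record carries a domain, a measurement date, and DP, DA, and DR values on a 0 to 100 scale. The record layout, the field names, and the validity rule (all three values present and within range) are illustrative assumptions, not the study's actual pipeline.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DomainMetric:
    domain: str
    measured_on: date
    dp: Optional[float]  # Domain Power
    da: Optional[float]  # Domain Authority
    dr: Optional[float]  # Domain Rating


def is_valid(metric):
    """Assumed validity rule: all three scores present and within 0-100."""
    return all(v is not None and 0 <= v <= 100 for v in (metric.dp, metric.da, metric.dr))


def latest_valid_metrics(records):
    """Keep the most recent record per domain, then drop domains without valid scores."""
    latest = {}
    for record in records:
        current = latest.get(record.domain)
        if current is None or record.measured_on > current.measured_on:
            latest[record.domain] = record
    return {domain: m for domain, m in latest.items() if is_valid(m)}
```

Applying the validity check after selecting the latest record means a domain whose newest measurement is incomplete is excluded rather than silently falling back to an older value; the reverse order is an equally defensible choice.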

2. Schema Markup Coverage Analysis.

The analysis merged a large-scale HTML schema dataset to measure structured data adoption across cited domains. It assigned a schema detection flag to each cited URL and calculated schema coverage as the proportion of URLs per domain containing valid structured data. This process produced a domain-level schema score used to evaluate schema influence on LLM ranking.
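The coverage score described above reduces to a per-domain ratio. This sketch assumes the schema dataset has already produced a (URL, has_valid_schema) flag for each cited URL; the function names and the choice to fold `www.` hosts into the bare domain are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse


def domain_of(url):
    """Normalize a URL to its host, folding a leading 'www.' (an assumption)."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def schema_coverage(url_flags):
    """Share of each domain's cited URLs that carry valid structured data.

    url_flags: iterable of (url, has_valid_schema) pairs.
    """
    totals = defaultdict(int)
    with_schema = defaultdict(int)
    for url, has_schema in url_flags:
        domain = domain_of(url)
        totals[domain] += 1
        if has_schema:
            with_schema[domain] += 1
    return {domain: with_schema[domain] / totals[domain] for domain in totals}
```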

3. Google Business Profile Signal Integration.

The analysis merged a dedicated GBP dataset to evaluate local prominence signals among cited businesses. It examined GBP Score, heatmap rank, keyword coverage, citation count, posts, review volume, ratings, reply behavior, and questions. The analysis removed rows with missing or zero values to maintain reliable correlation results.
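One way to implement the missing-or-zero filter is pairwise, per metric, so that a gap in one GBP field does not discard a row's other fields. The sketch below assumes dictionary rows with hypothetical keys such as `gbp_score` and `llm_rank`; the study does not state whether it filtered per metric or across all fields at once.

```python
def usable_pairs(rows, metric, rank_key="llm_rank"):
    """Collect (metric value, rank) pairs, skipping missing or zero metric values.

    rows: list of dicts, one per cited business (hypothetical layout).
    """
    pairs = []
    for row in rows:
        value = row.get(metric)
        rank = row.get(rank_key)
        # Missing (None or absent) and zero values are excluded, mirroring the described filter.
        if value is None or value == 0 or rank is None:
            continue
        pairs.append((value, rank))
    return pairs
```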

4. Semantic Relevance Analysis.

The analysis measured semantic relevance using vector embeddings generated from query text and cited page content. It calculated cosine similarity between these embeddings to produce a Semantic Relevance Score for each citation. This score quantifies intent alignment and enables evaluation of semantic relevance as a primary ranking driver across LLM platforms.
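The cosine similarity step can be written in a few lines. The study does not name its embedding model, so this sketch takes two precomputed vectors of equal length; any vectors used with it here are placeholders, not real embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)
```

For example, `cosine_similarity([1.0, 0.0], [1.0, 0.0])` returns `1.0`, while orthogonal vectors such as `[1.0, 0.0]` and `[0.0, 1.0]` return `0.0`. Because the score ignores vector length, a long page and a short query can still score highly if they point in the same semantic direction.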

5. URL Freshness Analysis.

The analysis scraped cited URLs to retrieve page content and publication timestamps while excluding homepages and non-HTML assets. It extracted dates from standardized metadata fields and removed invalid timestamps. The analysis calculated freshness as the time difference between publication and citation, retaining 9,805 URLs with verified dates for evaluation.
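A sketch of the date extraction and age calculation follows. It reads Open Graph `article:published_time` and schema.org `datePublished` meta tags as examples of standardized metadata fields; the study's exact field list is not given, JSON-LD blocks are not handled here, and treating timezone-less dates as UTC and discarding publication dates later than the citation are assumptions.

```python
from datetime import datetime, timezone
from html.parser import HTMLParser

# Example metadata keys only; the study's exact field list is not published.
DATE_KEYS = {"article:published_time", "datepublished"}


class PublishedDateParser(HTMLParser):
    """Capture the first publication-date <meta> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.raw_date = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.raw_date is not None:
            return
        attributes = dict(attrs)
        key = (attributes.get("property") or attributes.get("name")
               or attributes.get("itemprop") or "").lower()
        if key in DATE_KEYS and attributes.get("content"):
            self.raw_date = attributes["content"]


def parse_timestamp(raw):
    """Parse an ISO 8601 string; return None for invalid values."""
    try:
        parsed = datetime.fromisoformat(raw.strip().replace("Z", "+00:00"))
    except ValueError:
        return None
    # Assumption: dates without a timezone are treated as UTC.
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def content_age_days(html, cited_at):
    """Days between publication and a timezone-aware citation time, or None."""
    parser = PublishedDateParser()
    parser.feed(html)
    if parser.raw_date is None:
        return None
    published = parse_timestamp(parser.raw_date)
    if published is None or published > cited_at:
        return None  # assumption: future-dated pages count as invalid
    return (cited_at - published).total_seconds() / 86400
```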

What Is the Final Takeaway?

The analysis demonstrates that local LLM rankings are driven primarily by semantic relevance rather than traditional local SEO signals. The study shows that citation ordering for “near me” queries depends on how closely content aligns with user intent, not on proximity, domain authority, structured data, or strength inside Google Maps and link-based search systems.

Semantic relevance produces the strongest and most consistent relationship with ranking across platforms. OpenAI, Grok, and Google AI Mode show similar positive alignment between relevance and rank, which confirms that intent matching drives citation order inside these systems. Perplexity shows weaker alignment, while Copilot displays minimal sensitivity to relevance signals.

Traditional authority and markup signals contribute little to ranking outcomes. Domain authority metrics and schema markup adoption show weak or inconsistent relationships with citation position, which indicates that LLMs do not prioritize link equity or structured metadata when ranking local results.

Freshness and GBP quality introduce secondary, platform-dependent effects. Gemini favors recently published content, while OpenAI and Grok balance fresh pages with evergreen sources. GBP quality metrics correlate modestly with ranking, signaling supportive context rather than primary ranking control.

The direction of these findings remains consistent. Semantic alignment defines local visibility inside LLMs, while authority, proximity, and citations recede in importance. LLMs select local businesses based on how well content satisfies the user’s request rather than how powerful a business appears inside traditional search indexes.

Local SEO now requires alignment with how LLMs interpret intent, with semantic relevance and LLM citation visibility serving as the new benchmarks for local discoverability in AI-generated search environments.

How Do Ranking Signals Appear in LLM Citations?

I, Manick Bhan, together with the Search Atlas research team, analyzed 104,855 LLM-generated URL citations to understand how different ranking signals appear inside local “near me” responses. The analysis evaluates whether LLMs rely on traditional local SEO indicators or surface citations based on alternative relevance-driven signals.

The breakdown below shows how domain authority, schema markup, GBP signals, and semantic relevance appear across platforms.

Domain Metrics Analysis

The domain-level analysis measures whether traditional SEO authority metrics influence LLM citation ranking. This signal matters because local SEO historically treats domain strength as a proxy for trust and visibility.

The headline results are shown below.

- OpenAI, Grok, Google AI Mode. Strong negative correlation with domain authority.

- Perplexity. Weaker but still negative association with domain authority.

- Overall pattern. No consistent advantage for higher-authority domains.

Across most platforms, DA, DP, and DR show negative correlations with ranking position. Higher-authority domains do not consistently appear in higher-ranked citations. This pattern indicates that LLMs decouple local ranking behavior from classical link-based authority and instead prioritize other contextual signals.
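The per-platform coefficients reported in this section can be approximated with a rank correlation. The study does not say whether it used Pearson or Spearman correlation, so this sketch assumes Spearman (Pearson computed on tie-averaged ranks). The record keys `platform` and `rank` are hypothetical, and the sign of each coefficient depends on how rank is coded.

```python
import math
from collections import defaultdict


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when either input is constant
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def correlation_by_platform(records, signal, rank_key="rank", min_n=3):
    """Group citation records by platform and correlate one signal with rank."""
    grouped = defaultdict(lambda: ([], []))
    for record in records:
        xs, ys = grouped[record["platform"]]
        xs.append(record[signal])
        ys.append(record[rank_key])
    return {
        platform: spearman(xs, ys)
        for platform, (xs, ys) in grouped.items()
        if len(xs) >= min_n
    }
```

A rank-based coefficient is a reasonable default for authority scores because DA, DP, and DR are bounded, skewed scales where monotonic relationships matter more than linear ones.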

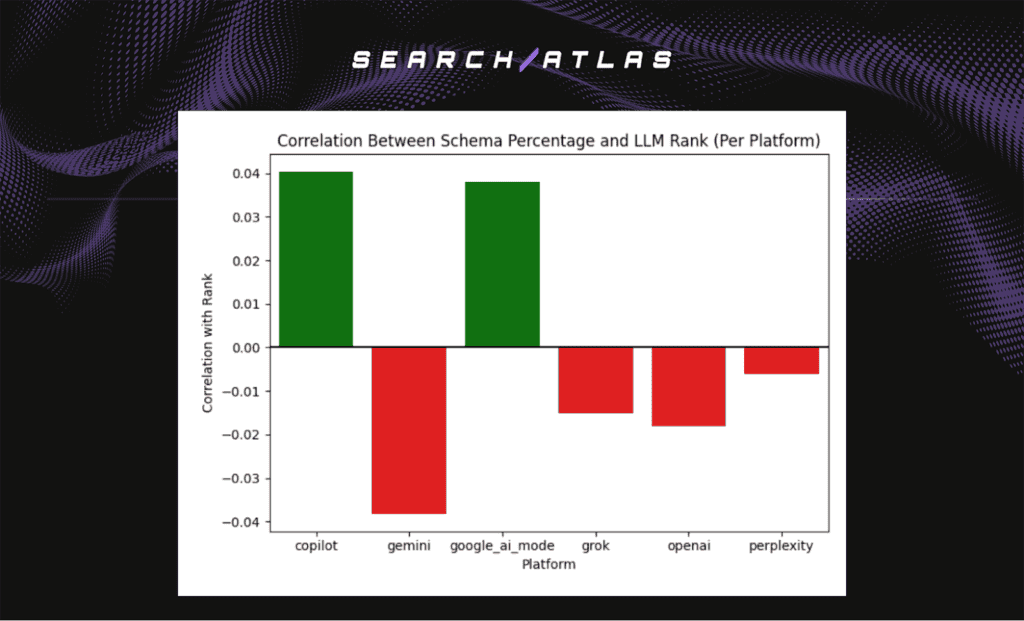

Schema Markup Analysis

The schema analysis measures whether structured data adoption correlates with higher ranking positions in LLM-generated responses. Structured data historically improves visibility inside search engines, which makes its role inside LLMs an open question.

The headline results are shown below.

- OpenAI, Gemini, Grok, Perplexity. Neutral to negative correlation with schema usage.

- Copilot and Google AI Mode. Small positive correlation with schema adoption.

- Overall pattern. Minimal influence across all platforms.

Schema markup shows a weak and inconsistent relationship with ranking position. Platforms closely integrated with traditional search infrastructure show slight sensitivity to schema adoption, while foundational LLM platforms show no measurable benefit. This divergence reflects system design differences rather than a universal ranking rule.

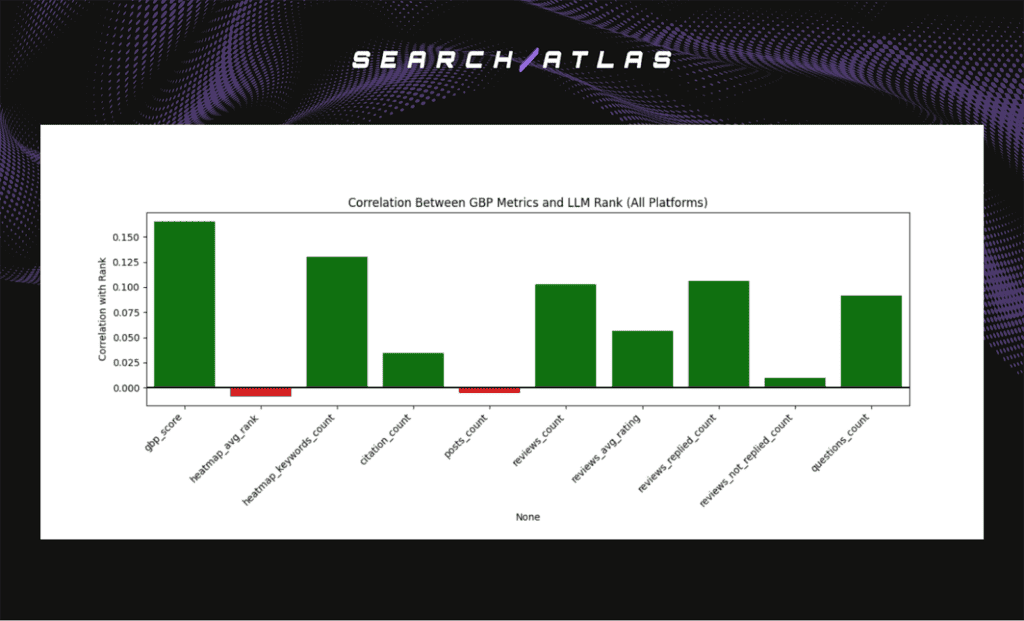

Google Business Profile (GBP) Signal Analysis

The GBP analysis evaluates whether local prominence and reputation signals influence LLM citation ranking. GBP signals matter because they anchor traditional local SEO performance through reviews, engagement, and map visibility.

The headline results are shown below.

- GBP Score. Strongest positive correlation at approximately +0.16.

- Review quality and replies. Small but positive correlation.

- Map rank and posting frequency. Neutral to negative correlation.

LLMs show modest sensitivity to GBP quality signals, especially composite and engagement-oriented metrics. Review quality and responsiveness correlate more strongly than raw volume or posting activity. Heatmap rank and map-based visibility contribute little, which indicates that LLMs treat GBP as supporting context rather than a primary ranking engine.

Semantic Relevance Analysis

The semantic relevance analysis measures how closely cited URLs align with local “near me” query intent. This signal matters because LLMs generate answers by matching meaning rather than retrieving results based on proximity or authority alone.

The analysis measures cosine similarity between vector embeddings generated from each query and the content of every cited URL.

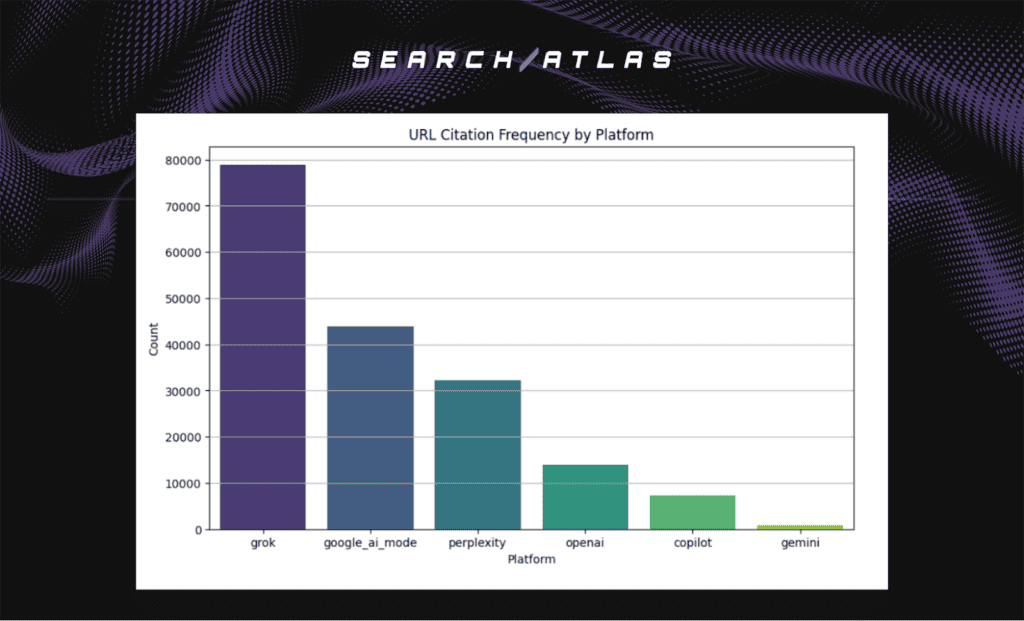

URL Citation Frequency by Platform

Citation volume varies significantly by platform, which shapes every relevance pattern observed in the dataset. The headline distribution is shown below.

- Perplexity. Largest share of total citations.

- OpenAI and Google AI Mode. Moderate and stable citation volume.

- Gemini, Grok, Copilot. Smaller but consistent citation pools.

Perplexity dominates citation volume, likely because it retrieves and cites sources continuously. Other platforms contribute fewer citations but still display consistent relevance patterns within their respective outputs.

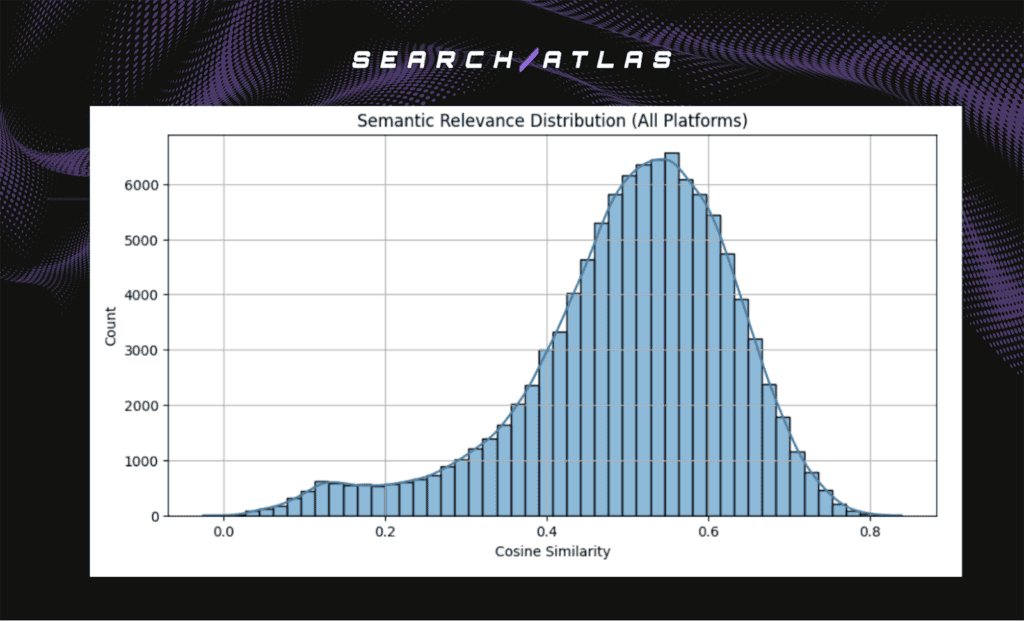

Distribution of Semantic Relevance

Semantic relevance scores cluster strongly across all platforms. The headline results are shown below.

- Primary cluster. Scores between approximately 0.35 and 0.75.

- Secondary pattern. Gradual left tail extending toward lower relevance scores.

- No distinct low-relevance cluster.

Most citations show meaningful alignment with user intent. The absence of a distinct low-relevance cluster indicates that LLMs rarely surface irrelevant sources; instead, scores taper off gradually toward the low end.

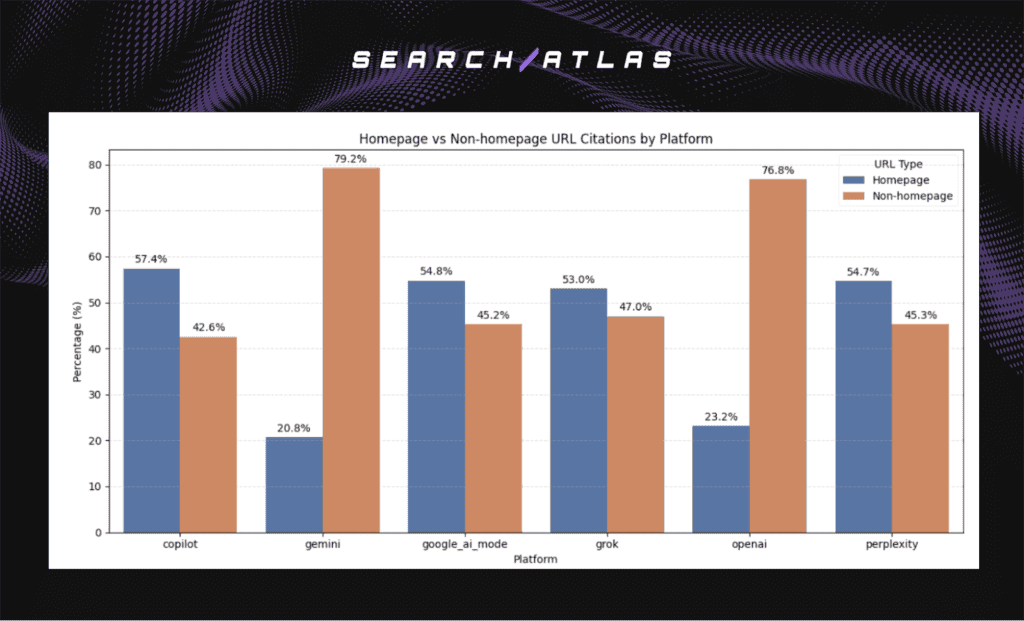

Homepage vs Non-homepage URL Citations by Platform

Further analysis shows that lower relevance scores originate primarily from homepage-level citations rather than content-specific pages. Homepage URLs contain broad navigational and multi-topic content, which reduces semantic alignment with narrowly defined local queries.

The headline patterns are shown below.

- Gemini and OpenAI. Higher share of non-homepage citations.

- Copilot and Google AI Mode. Balanced mix of homepage and page-level citations.

- Perplexity. Higher reliance on homepage-level domain citations.

Gemini and OpenAI prioritize deeper, page-specific content that closely matches intent. Perplexity surfaces authoritative domains more frequently, even when homepage content dilutes relevance. Copilot and Google AI Mode blend domain-level authority with page-level relevance.
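The homepage split depends on how a homepage is defined. This sketch uses a simple URL-path heuristic (an empty path, `/`, or a common index file) as an assumption; the study does not publish its exact rule, and the function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Heuristic assumption: these paths are treated as a site's homepage.
HOMEPAGE_PATHS = {"", "/index.html", "/index.php"}


def is_homepage(url):
    path = urlparse(url).path.rstrip("/")
    return path.lower() in HOMEPAGE_PATHS


def homepage_share_by_platform(citations):
    """Fraction of homepage citations per platform.

    citations: iterable of (platform, url) pairs.
    """
    totals, homepages = Counter(), Counter()
    for platform, url in citations:
        totals[platform] += 1
        homepages[platform] += is_homepage(url)  # True counts as 1
    return {platform: homepages[platform] / totals[platform] for platform in totals}
```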

Which Signals Influence LLM Ranking Position?

This section evaluates which signals influence the position of citations inside LLM-generated local responses. The analysis focuses on semantic relevance and URL freshness to determine whether LLMs consistently rank higher-quality or more recent content above less aligned sources.

Semantic Relevance

Semantic relevance measures how closely cited URLs match the intent of a local “near me” query. Differences in score distributions across platforms reveal whether a platform prioritizes precise page-level alignment or broader domain-level interpretation when generating local recommendations.

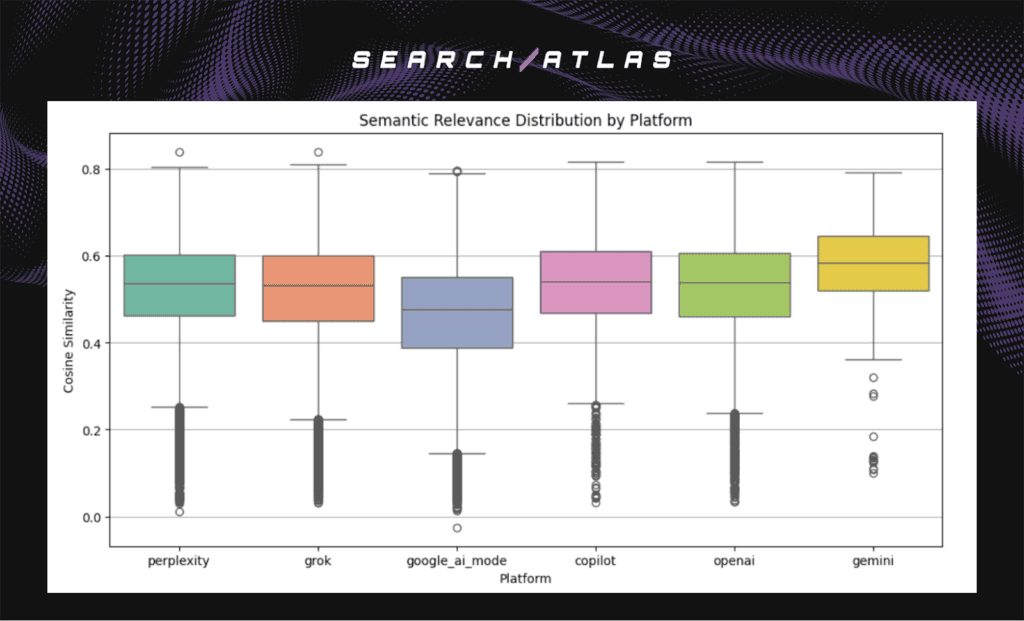

The distribution of semantic relevance scores across platforms is shown below.

- Overall range. Scores concentrate between 0.40 and 0.70 across platforms.

- Gemini. Highest median relevance, indicating stronger alignment with query intent.

- OpenAI and Copilot. Most consistent relevance, with narrow mid-to-high distributions.

- Perplexity and Grok. Wider spread with heavier lower tails.

- Google AI Mode. Lower median relevance with moderate dispersion.

Low-relevance outliers appear across all platforms and originate primarily from homepage or domain-level citations rather than content-specific pages.
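The median and spread comparisons above can be summarized per platform with the standard library. This sketch assumes scores are already grouped by platform name and uses the interquartile range as the dispersion measure; the study's charts may rely on a different spread statistic.

```python
import statistics


def relevance_summary(scores_by_platform):
    """Median and interquartile range of semantic relevance scores per platform."""
    summary = {}
    for platform, scores in scores_by_platform.items():
        q1, _, q3 = statistics.quantiles(scores, n=4)
        summary[platform] = {
            "median": statistics.median(scores),
            "iqr": q3 - q1,
        }
    return summary


def highest_median(summary):
    """Name of the platform with the highest median relevance."""
    return max(summary, key=lambda platform: summary[platform]["median"])
```

A narrow IQR corresponds to the "most consistent relevance" pattern described above, while a low first quartile relative to the median points to a heavier lower tail.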

Correlation Between Semantic Relevance and LLM Rank

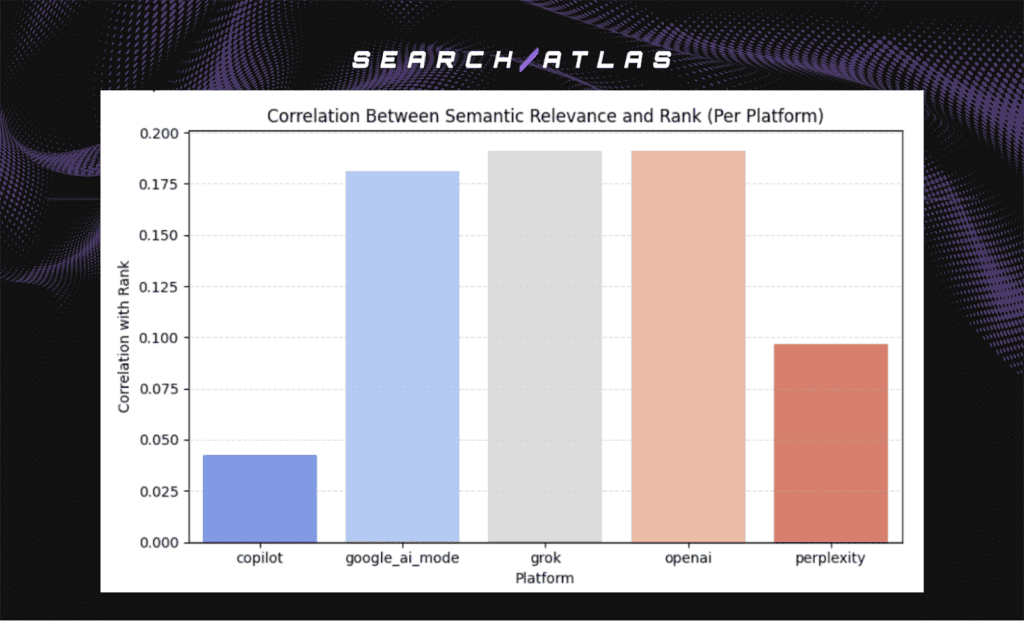

The analysis measured whether higher relevance scores correspond to higher ranking positions. The headline results are shown below.

- OpenAI, Grok, Google AI Mode. Strongest positive correlations, around 0.18 to 0.19.

- Perplexity. Weaker but positive correlation near 0.10.

- Copilot. Minimal correlation around 0.04.

These results show that several platforms tend to rank more semantically relevant citations higher. The similar correlation strength across OpenAI, Grok, and Google AI Mode suggests a shared relevance-driven ordering logic.
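A worked example of the sign convention helps when reading these coefficients. The study does not state how rank is coded: if raw list positions are used (1 = first citation), a preference for relevant sources at the top appears as a negative coefficient, so a positive value implies a coding where larger numbers mean more prominent placement. The helper `prominence` and the toy scores below are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def prominence(positions):
    """Flip list positions so larger values mean higher placement (hypothetical coding)."""
    return [-p for p in positions]


# Hypothetical answer: relevance falls as the citation moves down the list.
relevance = [0.72, 0.65, 0.58, 0.41]
positions = [1, 2, 3, 4]

print(round(pearson(relevance, positions), 3))
print(round(pearson(relevance, prominence(positions)), 3))
```

The two printed coefficients have the same magnitude and opposite signs, so the direction of a reported correlation carries meaning only once the rank coding is known.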

URL Freshness Analysis

URL freshness measures the age of cited content relative to the LLM response timestamp. This signal matters because some systems prioritize recent information while others favor established sources.

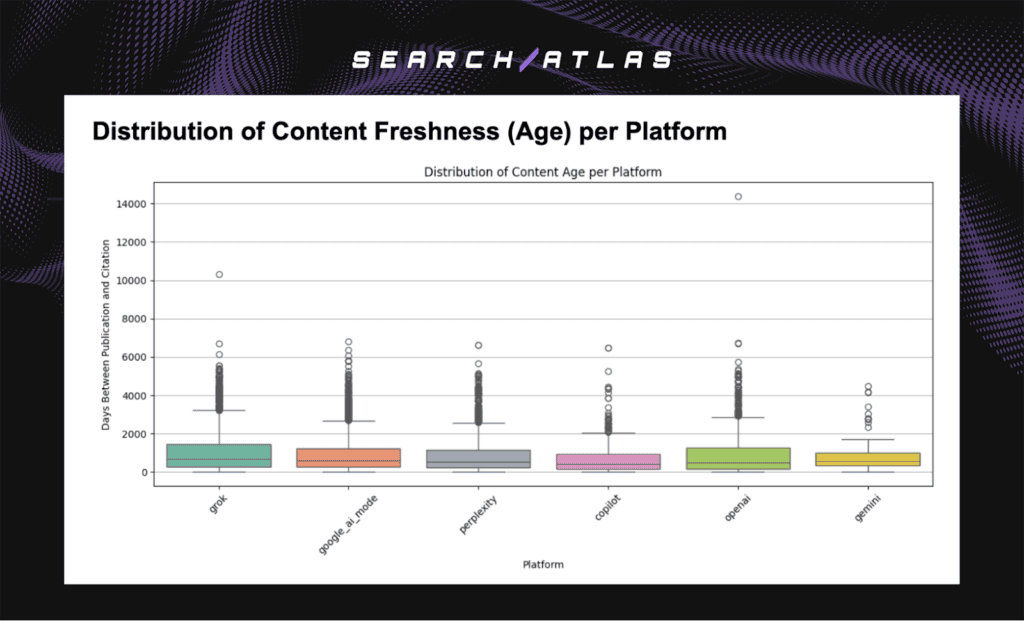

Distribution of Content Freshness (Age) per Platform

Content age varies substantially across platforms. The headline patterns are shown below.

- Gemini. Freshest citations overall.

- OpenAI and Grok. Mix of recent and evergreen sources.

- Perplexity, Copilot, Google AI Mode. Balanced distribution across content ages.

- Overall median. Approximately 3 to 4 years, with a long tail of older citations.

These patterns indicate that most platforms combine recency with historical content rather than enforcing strict freshness thresholds.

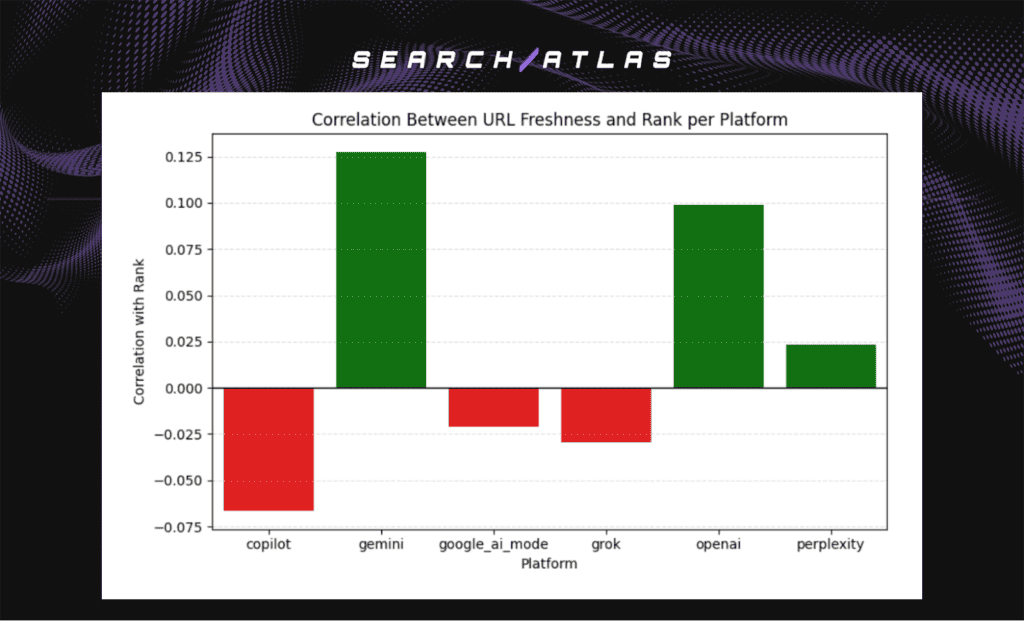

Correlation Between URL Freshness and Rank per Platform

The analysis measured whether fresher content appears higher in ranking order. The headline results are shown below.

- Gemini. Strongest positive correlation around 0.13.

- OpenAI. Moderate positive correlation near 0.09.

- Perplexity. Weak positive correlation near 0.02.

- Copilot, Grok, Google AI Mode. Negative correlations.

These results show that freshness influences ranking most on Gemini, moderately on OpenAI, and minimally or negatively on other platforms. Freshness acts as a platform-specific modifier rather than a universal ranking driver.

Which Local SEO Signals Actually Matter in LLMs?

This analysis evaluates which local SEO signals explain ranking behavior inside LLM-generated responses. The study focuses on semantic relevance, freshness, and traditional authority indicators to determine which factors meaningfully influence citation order for “near me” queries.

Each signal contributes differently. Semantic relevance measures how closely cited content matches user intent. Freshness captures content recency at the time of citation. Traditional signals such as domain authority, schema markup, and Google Business Profile metrics reflect credibility inside search-driven ecosystems.

Semantic relevance emerges as the strongest and most consistent ranking signal across platforms. OpenAI, Grok, and Google AI Mode show similar positive alignment between relevance and ranking position, confirming that intent matching drives citation order inside these systems. Perplexity shows weaker alignment, while Copilot shows minimal sensitivity, which reflects platform-specific ranking logic rather than uniform behavior.

Freshness acts as a secondary, platform-dependent modifier. Gemini favors recently published content more strongly than other platforms. OpenAI and Grok balance fresh pages with evergreen sources, while Perplexity, Copilot, and Google AI Mode show limited or inconsistent sensitivity to recency. Freshness influences ranking in some environments but does not define ordering universally.

Traditional authority and structured data signals play a limited role. Domain authority metrics and schema markup show weak or inconsistent relationships with ranking position, indicating that LLMs do not prioritize link equity or markup depth when ordering local citations. Google Business Profile quality metrics correlate modestly with ranking, which positions GBP as supporting context rather than a primary ranking engine.

Together, these findings show that LLMs rank local businesses primarily through semantic alignment. Authority, proximity, and citations recede in importance. Local visibility inside LLMs now depends on how clearly content satisfies user intent rather than performance inside traditional search indexes.

What Should Local Businesses and SEOs Do With These Findings?

The results show that local visibility inside LLMs follows different rules than traditional local SEO. Semantic relevance drives citation order, while authority, proximity, and map-based signals play supporting roles. These patterns require a shift in how local businesses evaluate and optimize visibility inside AI-generated environments.

- Treat LLM Visibility as a Core Local Performance Layer. LLM-generated answers surface local businesses before users engage with Maps or organic search. Tracking citation presence and ranking position reveals which businesses appear, how often they surface, and where visibility gaps exist across “near me” queries.

- Optimize Pages for Semantic Precision. Pages that clearly describe services, locations, and use cases in natural language align more strongly with local intent. Service-specific and intent-matched content increases the likelihood that LLMs select and rank a business for commercial local searches.

- Benchmark Visibility Across LLM Platforms. OpenAI, Gemini, Perplexity, and Google AI Mode apply different weighting to relevance and freshness. Monitoring citation behavior across platforms reveals uneven exposure patterns and identifies where optimization strategies need adjustment.

- Expand Content Coverage. Ranking control remains limited, but content coverage is controllable. Service pages, location-aware explanations, and intent-driven content improve semantic match even without authority or map dominance.

What Should Local Businesses Do If They Cannot Control LLM Ranking?

Local businesses need to focus on visibility resilience rather than ranking control. LLMs apply platform-specific logic that businesses cannot fully influence, but repeated citation presence still determines whether a brand appears inside AI-generated local answers.

Content clarity remains the most reliable lever. Clear service descriptions, location-specific explanations, and intent-matched pages increase semantic alignment with “near me” queries and improve selection probability even without ranking dominance.

Entity consistency across the web reinforces recognition. Accurate business information and aligned descriptions across owned pages and major directories improve inclusion inside LLM responses, shifting success from rank position to sustained local visibility.

Tracking LLM visibility becomes essential. LLM Visibility features monitor citation presence and page selection across platforms, revealing where a business appears, which intents trigger inclusion, and where gaps exist. Local discoverability inside AI systems now depends on sustained semantic presence rather than guaranteed ranking position.

What Are the Study’s Limitations?

Every empirical analysis carries limitations. The limitations of this study are listed below.

- Query Scope. The dataset includes only queries containing the phrase “near me,” which reflects broad local discovery intent but excludes branded and highly specific local searches.

- Platform Coverage. The analysis evaluates six major LLM platforms, but model behavior evolves rapidly, and future updates may alter ranking dynamics and citation patterns.

- Publication Date Availability. Freshness analysis relied on URLs with extractable publication dates, which reduced the sample size and excluded some valid citations.

- Local Signal Granularity. Google Business Profile data reflects available aggregate metrics rather than real-time user interactions or proximity signals.

- Model Design Differences. Retrieval-enabled and reasoning-based systems apply fundamentally different ranking logic, which limits direct comparability across platforms.

Despite these constraints, the analysis establishes a clear baseline for understanding how LLMs rank local businesses for “near me” queries. The findings confirm that semantic relevance drives ranking behavior more consistently than traditional local SEO signals.

Future studies need to expand query coverage, extend time horizons, and track longitudinal shifts in local LLM visibility across evolving platforms.