Crawlability and indexability are core technical properties. Crawlability and indexability determine whether your website appears in search results.

Crawlability means search engines can access and follow internal links. Crawlability improves when your website uses a logical structure and clean links. A website with good crawlability supports search engine navigation by search engine bots. This navigation ensures that website pages are fully crawlable.

Indexability refers to a web page’s ability to be interpreted and stored in the search engine’s index for an effective retrieval process in search results. Indexability measures if meta tags, headers, and canonical rules are correctly applied. Indexable pages can appear in Google search results, leading to increased traffic and higher conversion rates.

What Is Crawlability?

Crawlability is the ability of search engine bots to access and navigate a website’s content. Crawlability allows bots like Googlebot to discover pages through internal and external links. Crawlers follow each path from the homepage and extend through linked content to explore new or updated pages.

Search engines use crawlability to decide what content gets discovered. Visibility in search results depends on successful crawling. Pages that aren’t crawled cannot be indexed.

How often a website is crawled depends on its authority, updates, and content quality. Websites with strong authority or frequent updates are more crawlable.

What Is Indexability?

Indexability is the process that allows a search engine to store a webpage in its database and show it in the results. Search engines crawl a page first, then decide whether to include it in their index. Indexing means the engine analyzes the page content, evaluates technical signals, and stores the page in the Google index.

Indexed pages become eligible to appear when users search for related topics. Pages that allow crawling but block indexing will not appear in search results.

How do Search Engines Handle Crawlability and Indexability?

Search engines handle crawlability and indexability by deploying automated crawlers that scan the web through internal links, XML sitemaps, and referring domains to assess crawlability. Crawlers follow website structure and obey robots.txt directives to determine access.

Google assigns specific tasks to dedicated crawlers as listed below.

- Googlebot. Googlebot crawls desktop and mobile pages for Google Search

- Googlebot Image. Googlebot Images scans image URLs for Google Images and visual results

- Googlebot News. Googlebot News collects news content for Google News

- Googlebot Video. Googlebot Video processes video files and pages with embedded media

- Google StoreBot. Google StoreBot crawls product listings, cart flows, and checkout paths

After verifying crawlability, Google proceeds to indexability. Google processes HTML, on-page text, metadata, images, and structured data. Only pages that meet technical standards and content quality signals enter the search index.

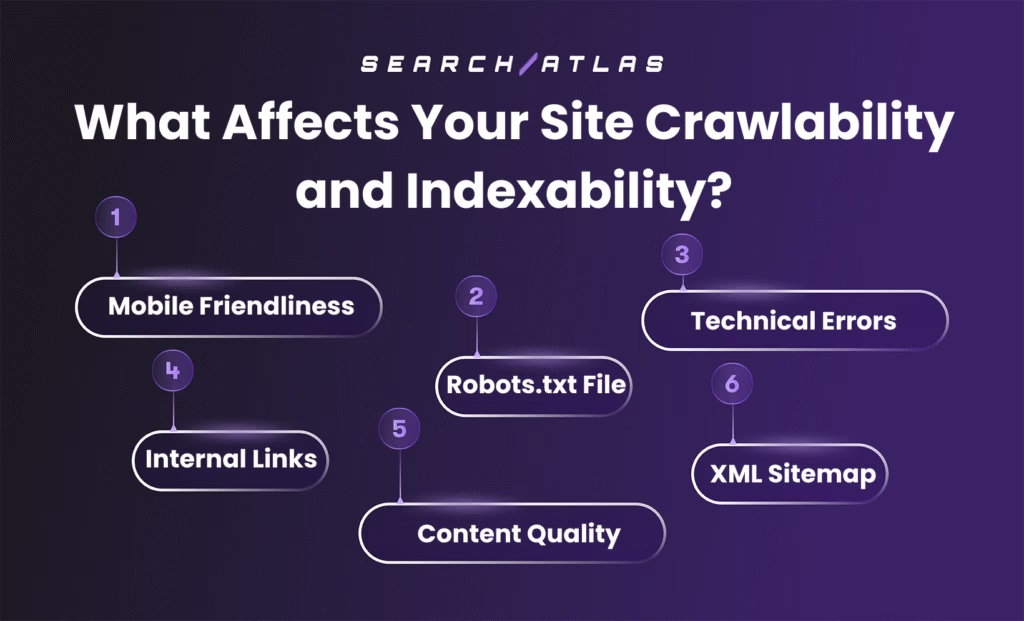

What Affects Your Website Crawlability and Indexability?

There are 6 main issues that affect your site’s crawlability and indexability. These are listed below.

1. Mobile Friendliness

Mobile indexability refers to the ability of mobile-accessible content to be crawled and indexed by search engines. Mobile indexability depends on visibility through mobile rendering, which defines how search engines evaluate and process content.

In July 2024, Google completed its transition to mobile-first indexing. Mobile-first indexing means that Google now uses only the mobile version of a website’s content for indexing and ranking. Websites with content that is not accessible at all on mobile devices will no longer be indexable.

Search engines review mobile versions to assess content quality, identify crawl paths, and evaluate page value. They expect mobile SEO standards like functional layouts, visible elements, high-quality content, and consistent page structures.

Even if a website isn’t optimized for mobile SEO, as long as the content is accessible and displays correctly on mobile devices, it remains eligible for indexing.

2. Robots.txt File

The robots.txt file controls which parts of your website search engine crawlers will access. The robots.txt file sits at the root of your domain and sends instructions to crawl bots before they start crawling your pages. A misconfigured robots.txt file blocks important sections of a website.

Crawl control does not equal index control. Blocking a page in robots.txt stops the crawl, but does not always prevent indexing if the page is linked elsewhere. With robot.txt configured correctly, it helps manage crawl traffic and protect sensitive directories from unnecessary exposure.

3. Technical Errors

Technical errors or technical SEO failures interrupt how search engines access, evaluate, and store content. Each issue interferes with specific mechanisms search engines use for crawlability and indexability.

The main technical SEO failures that interrupt crawlability and indexability are below.

- Broken Links. Broken links trigger 404 errors and stop crawlers. Dead links cut off discovery paths and leave pages hidden.

- Redirect Loops. Redirect loops force crawlers into endless redirects. Loops between URLs prevent bots from reaching a final page and drain the crawl budget.

- Orphan Pages. Orphan pages provide no internal path for discovery. Without incoming links, crawlers can’t reach these pages even if they’re in the websitemap.

- Slow Server Response. Slow server response delays page loads past crawl time limits. Search engines skip slow pages to maintain efficiency, reducing crawl frequency and visibility.

- Incorrect Canonical Tags. Incorrect canonical tags create confusion about the preferred version. Conflicting canonicals lead search engines to index the wrong page or ignore all variants.

Crawlability drops if crawlers can’t reach or complete page loads. Indexability fails if duplicate signals or incorrect directives distort content interpretation.

4. Internal Links

Internal links define how search engines crawl and understand a website. Crawlers follow internal links to reach content, evaluate page relationships, and build a complete picture of website structure. A missing path in internal links blocks discovery. A page that lacks incoming internal linking remains disconnected and outside the crawl sequence.

Crawlability relies on internal navigation. Each anchor tag acts as a signal, passing context from one page to another. This structure allows crawlers to identify related topics, prioritize important sections, and maintain an efficient crawl flow across the domain. All these signals directly influence what enters the index and how it ranks.

Indexability requires full discovery. Crawlers cannot make the indexability of what they do not reach, even when a page appears in the websitemap. Internal linking removes that barrier by connecting content to the main crawl route, which allows search engines to evaluate and include it in results.

5. Content Quality

Content quality influences how search engines prioritize pages for crawling and indexing. Pages with high-quality content help search engine bots understand value, while weak or thin content often gets ignored.

Thin content includes short, generic text that adds little information. Examples of thin content include copied product descriptions, low-effort blog posts, or duplicate content. Pages with thin content offer limited user value, so search engines crawl them less frequently or skip indexing entirely.

Relevance builds on quality. Content that aligns with user intent, covers a topic deeper, shows authority, and provides a good user experience signals reliability. This alignment makes it easier for search engines to evaluate purpose, group related pages, and decide what belongs in the index.

6. XML Sitemap

An XML sitemap is a structured file that lists essential URLs and provides crawl metadata to guide search engines to content on your website. This file defines the website’s primary content, highlights update frequency, and assigns relative priority. While internal links guide contextual flow, XML sitemaps ensure no priority page remains hidden.

XML sitemaps support crawlability because they present a clear inventory of pages. Sitemaps flag sections that lack strong internal navigation, which includes isolated updates, newly added pages, or media-rich formats. Even if a page has few internal links or backlinks, its presence in the XML sitemap increases its chances of being crawled and evaluated.

How to Improve Crawlability and Indexability for SEO

To improve crawlability and indexability for SEO follow the 9 steps listed below.

1. Fix Technical Errors

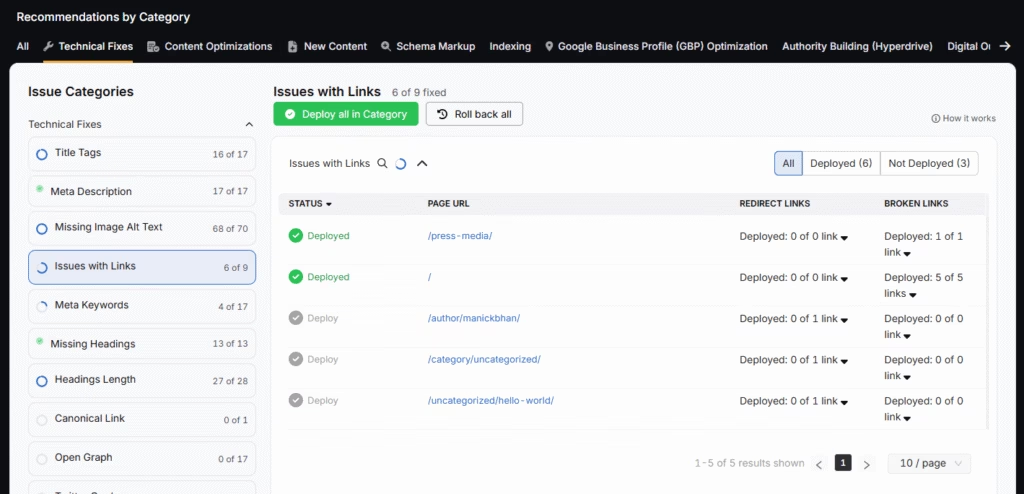

Fixing technical errors is the process of optimizing a website’s technical structure to improve crawlability and indexability. Fixing technical errors improves search visibility by ensuring search engines efficiently access and evaluate all intended pages.

Improve crawl efficiency by optimizing performance as listed below.

- Reduce page load times by compressing images, enabling browser caching, and minimizing unnecessary requests.

- Apply responsive design to support different devices and screen sizes, prioritizing mobile speed improvements.

- Fix broken links and redirect chains promptly to prevent crawl interruptions and ensure smooth navigation.

- Monitor technical metrics regularly to keep your website crawlable, indexable, and ready for discovery.

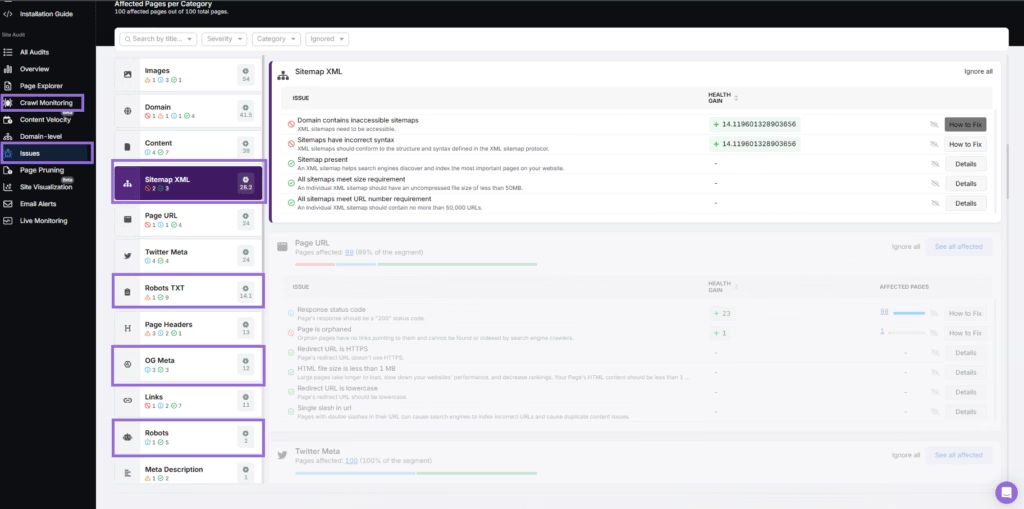

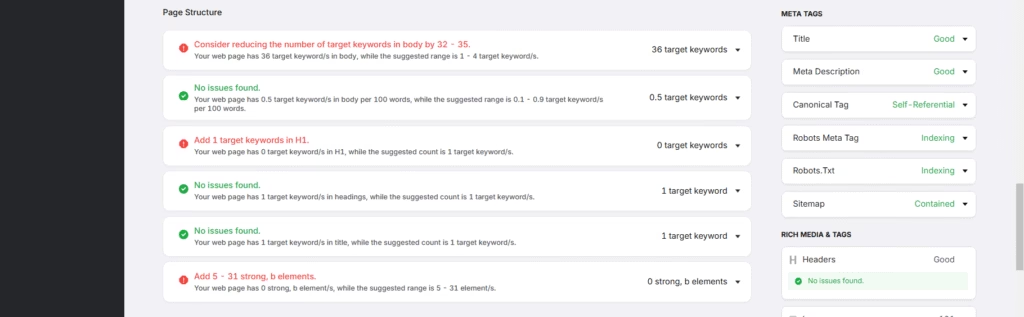

Search Atlas Site Auditor Tool scans your domain, configures the crawl depth and frequency, and generates a report. Review the output to identify non-indexable pages, then follow the tool’s targeted recommendations to resolve any flagged issues. Each recommendation in the Search Atlas Site Auditor Tool includes direct links to affected URLs, streamlining the resolution process.

2. Create a Robots.txt File

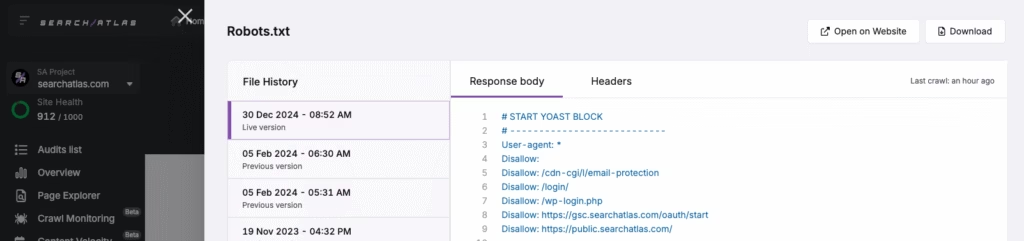

Robots.txt is a text file stored at the root of your domain. A robots.txt file defines which parts of a website search engines will access. It uses directives to allow or disallow crawlers from visiting specific paths of your pages.

To improve crawlability, check that the robots.txt file does not block essential resources. Avoid disallowing folders that contain CSS, JavaScript, or mobile content. These resources support proper rendering and user experience, which search engines evaluate during crawling.

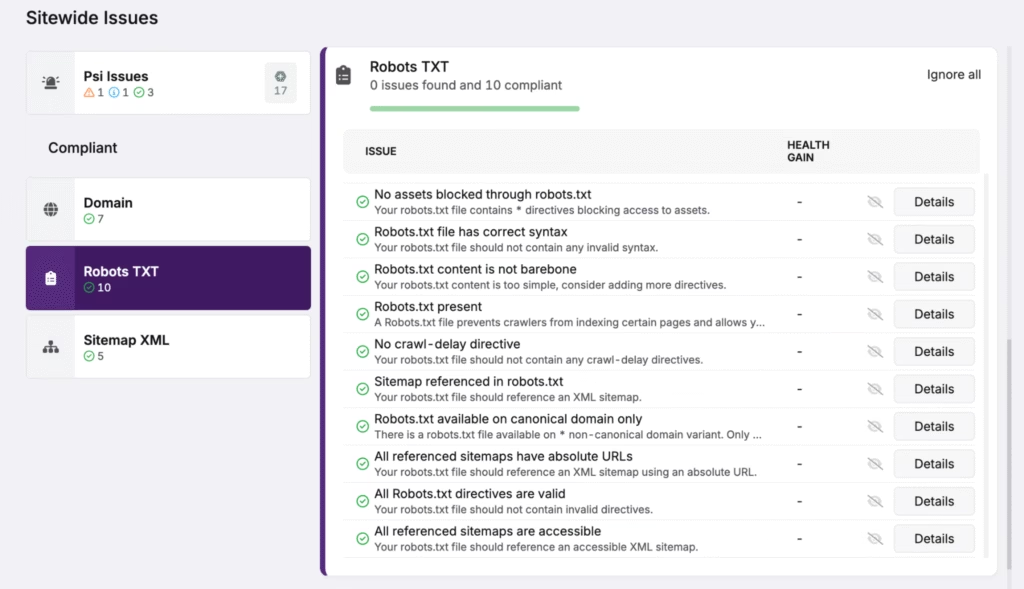

Analyze your robots.txt setup with the Search Atlas Site Auditor. The tool identifies blocked URLs, evaluates crawl permissions, and tracks past edits. Review the Page Indexing Report to ensure updates result in accurate coverage.

Use this visibility to remove unintentional blocks and confirm important sections remain crawlable. A properly configured robots.txt file guides crawlers efficiently and supports broader indexing strategies.

3. Verify Robots Meta Tags

Robots meta tags refer to page-level HTML directives that guide search engines on indexing and link following. Robots meta tags operate through the <meta name=”robots”> element placed in a page’s <head>. Robot meta tags affect how search engines treat individual pages during crawling and indexing.

To optimize indexability, review your use of index and no-index directives. Remove no-index tags from any page you want to appear in search results. Pages with no-index tags remain crawlable but cannot enter the index.

Use follow and no-follow directives to control whether search engines pass link equity through the page. Limit the use of no-follow to utility pages like login forms or admin areas to preserve website authority flow.

Check robots meta tag implementation with the Search Atlas On-Page Audit Tool. The Search Atlas On-Page Audit Tool highlights each page’s indexability status and flags unexpected no-index or no-follow tags. Confirm alignment with your SEO goals and remove restrictive tags where necessary. Maintain consistent meta directives across both mobile and desktop versions.

4. Set Canonical Tags Correctly

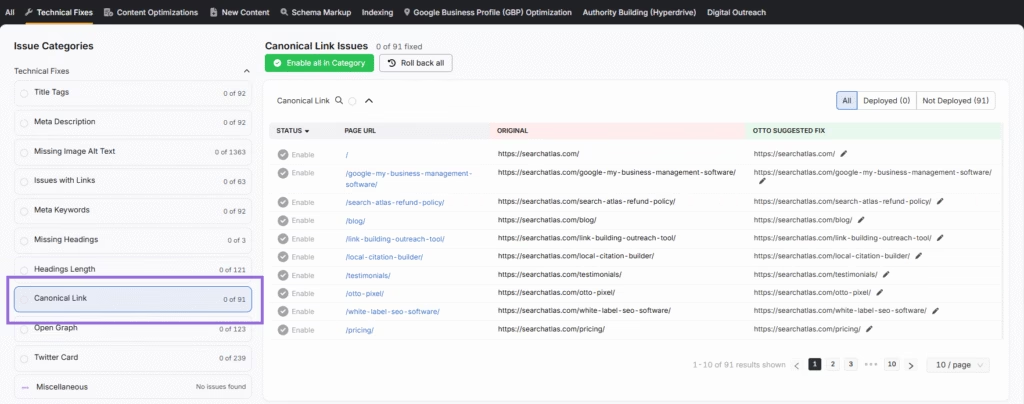

Canonical tags are the HTML elements that signal the preferred version of a page when duplicates or near-duplicates exist. Canonical tags help Google understand which version will be indexed and ranked.

Add a self-referencing canonical tag to every indexable page. This confirms to search engines that the current URL is the authoritative version, preventing dilution across duplicates.

Use the Search Atlas Site Auditor Tool to detect canonical tag issues at scale. The tool identifies missing tags, broken references, and tag-page mismatches that affect index consistency. Fixing these ensures crawlers focus on the right versions of your content.

Avoid conflicts between canonical tags and robots meta tags. A page marked as canonical should never include a no-index directive. This type of signal mismatch prevents indexing and lowers crawl efficiency.

For multilingual websites, assign canonical tags by language and region. Match each version of the page with a self-referencing canonical in its own language to support international indexing and relevance.

5. Create an XML Sitemap

XML sitemap refers to a structured file that lists important URLs on your website. A sitemap improves crawlability by which helps search engines locate and prioritize content for indexing.

Visit yoursite.com/sitemap.xml to check for an existing sitemap. Create one and place it in the root directory if no file appears. This gives search engines a complete reference of your indexed content.

Use the Search Atlas WordPress plugin to generate and maintain your sitemap automatically. The plugin supports real-time updates, which help you keep your sitemap aligned with published content, redirects, and removed URLs.

Use ChatGPT to generate your sitemap automatically and Search Atlas WordPress plugin to support real-time updates. This helps you keep your XML sitemap aligned with published content, redirects, and removed URLs.

Monitor it regularly to confirm coverage accuracy, discover crawl errors, and verify that your most important pages are indexed correctly. Filter out duplicate, redirected, or noindex-tagged pages.

Use canonical tags to consolidate mobile and desktop URLs. Include only the preferred version in the sitemap. This avoids duplication and strengthens crawl efficiency.

6. Use Hreflang Tags to Avoid Duplicate Content

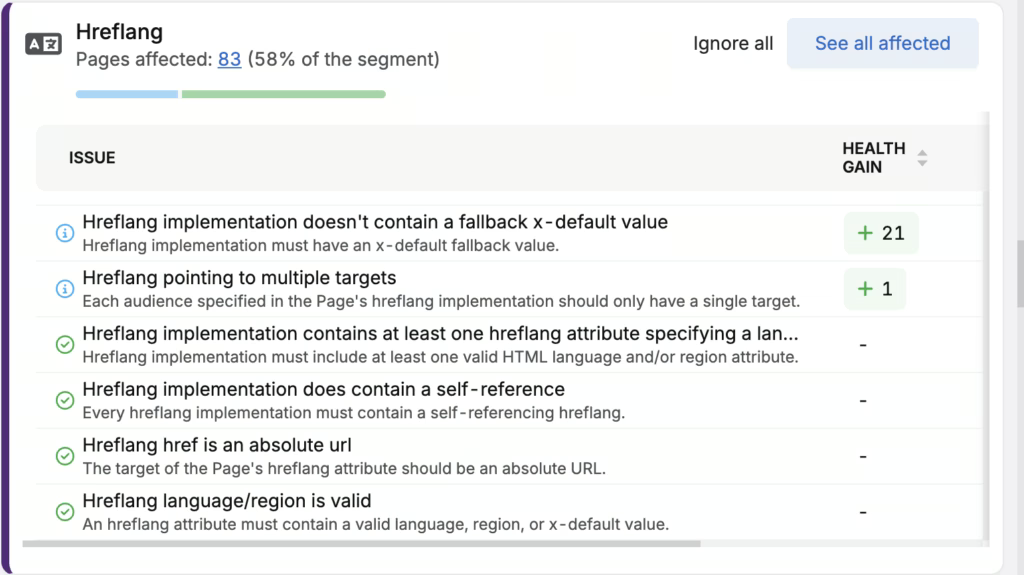

Hreflang tags tell search engines which version of a page to show based on the user’s language and region. They stop engines from treating translated or region-specific pages as duplicates.

Each version must include hreflang references to all others, including itself. This setup confirms intent and reduces indexing errors.

Stick to the hreflang tags implementation rules listed below.

- Set crawl priorities. Highlight important region-specific pages in your sitemap or server logs.

- Ensure consistency. Add hreflang tags using the same format across pages—either in HTML or HTTP headers.

- Include self-referencing tags. Ensure each version has a tag pointing to itself to confirm its purpose.

- Use XML websitemaps. Add hreflang info to your sitemap to reduce crawl strain for large websites.

Search Atlas OTTO SEO Tool flags missing hreflang tags and duplicate content issues. Use the Search Atlas OTTO SEO Tool to check for gaps and automate fixes across large websites. GSC tracks hreflang indexation. Keyword Planner and Trends help tailor keywords by market. Analytics reports on performance by region.

7. Create High-Quality Content and Keep It Updated

High-quality content refers to original, relevant, and well-structured pages that meet technical requirements and follow Google’s content policies. Content improves crawlability if it’s clearly organized, consistently updated, and aligned with search intent.

Use proper HTML formatting, which includes clear headings and subheadings. Structure your paragraphs with short, readable sentences to help search engine bots process content efficiently.

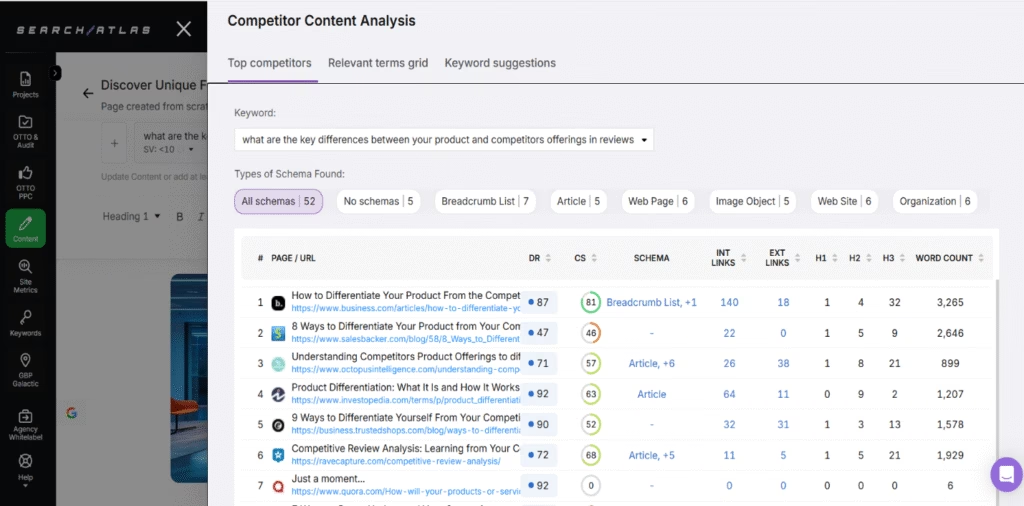

Compare your content with top-ranking competitors to find gaps. Adjust your material to meet search intent. Use Search Atlas Competitor Content Analysis to see which pages and keywords of your competitor drive traffic and find new content opportunities.

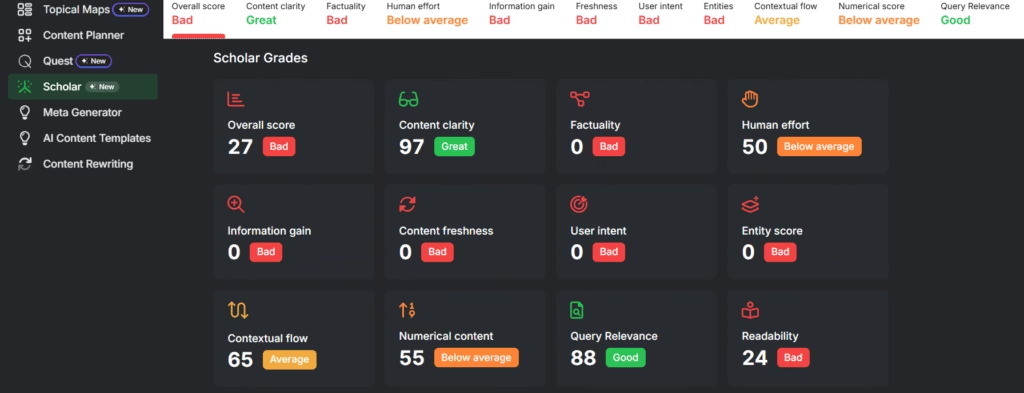

Run a content quality review using the Search Atlas SCHOLAR Tool. The Search Atlas SCHOLAR Tool evaluates your page based on Google’s Page Quality Algorithm, checking clarity, structure, keyword usage, and relevance.

Confirm factual accuracy, check keyword placement, avoid repetition, and get a full-quality report with optimization recommendations. Pages that match user intent, load quickly, and follow clear logic are more likely to rank and remain indexed.

Refresh content regularly by adding new insights or expanding existing pages. Consistent updates signal to search engines that your website is active and authoritative. Updated pages get crawled more often, which increases the chances of re-indexing and improved visibility.

8. Implement Structured Data and Schema Markup

Structured data refers to standardized code that explains content to search engines. Schema markup is one format for structured data that helps bots interpret your website content and display rich results in search.

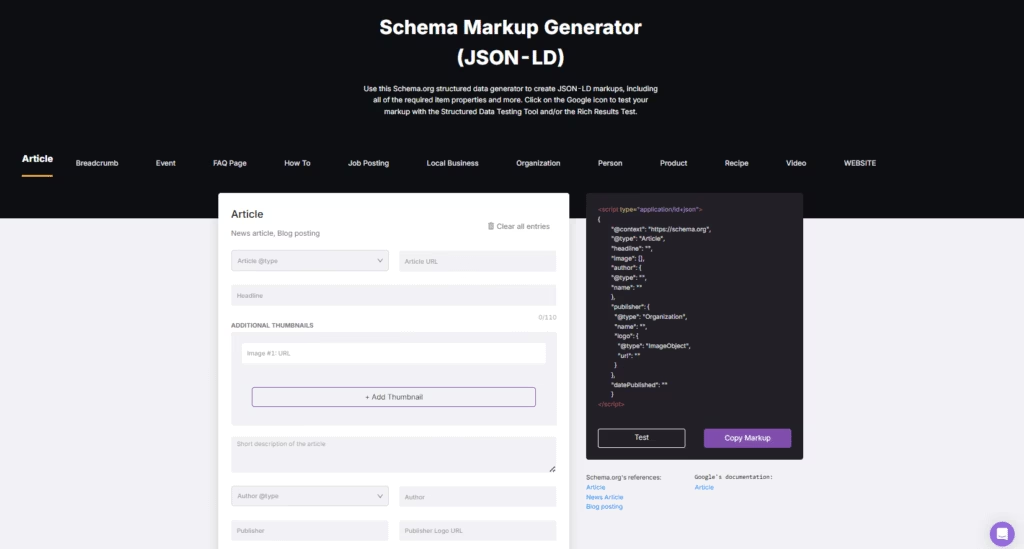

Add schema markup using JSON-LD to describe elements like articles, products, events, or reviews. Structured data improves crawlability if the markup is accurate and complete. Bots categorize your content, increasing the chances of enhanced visibility in search engine results more easily.

Accurate markup supports faster indexing by improving how search engines interpret your pages. Use the Search Atlas Schema Markup Generator Tool to create valid JSON-LD code. The Search Atlas Schema Markup Generator Tool reduces errors and improves markup quality. It includes links to both validators to quickly confirm your implementation.

After applying structured data, test your markup with Google’s Structured Data Testing Tool. Structured data alone doesn’t guarantee rich results, but it increases the likelihood.

9. Strengthen Internal Links

Strengthening internal links is a method to enhance crawlability and indexability by guiding search engine bots through your website using structured connections between related pages. The internal linking strategy involves placing navigational, contextual, and footer links across your website to help crawlers discover content and pass link equity.

A well-structured internal linking approach ensures no page stands alone and every important URL is accessible from multiple touchpoints. This allows search engines to efficiently interpret the website’s hierarchy and prioritize valuable content.

The core techniques to improve crawlability and indexability with internal linking are listed below.

- Link each page to other relevant content to prevent dead ends.

- Use keyword-rich anchor text that clearly indicates the destination topic.

- Add contextual links within the content body to guide crawlers naturally.

- Keep consistent navigation menus, footers, and sidebars across all pages.

- Fix orphaned pages by linking them to appropriate categories or related content.

- Visualize internal link flow to audit crawl depth and uncover content gaps.

- Highlight key pages by linking to them frequently from high-context sections.

- Organize content into thematic categories and connect them to related pages.

- Build topical maps by grouping related pages and linking them together to show topical depth.

Search Atlas OTTO SEO maps your internal link flow, detects weak points, and highlights crawl depth issues. Rely on Search Atlas OTTO SEO to automatically identify linking opportunities, suggest relevant anchors, and deploy internal links with one click.

How to Know if Your Website Is Crawled and Indexed

Crawl and index monitoring relies on diagnostic tools like Google Search Console (GSC) and specialized crawl tracking features to provide visibility into how search engine bots interact with your website. The crawl and index monitoring process ensures that your technical improvements, including internal linking and structural updates, result in actual search visibility.

Google Search Console (GSC) is one way to verify if pages are indexed and see the last crawl date. The URL Inspection Tool allows you to check whether a page has been indexed and displays the last crawl date.

Search Atlas SEO platform simplifies this process by consolidating data from GSC, Google Analytics 4, and Google Business Profile directly into its platform. You don’t need to switch tools to verify index status or monitor crawler behavior.

The Site Auditor Crawl Monitoring feature tracks how bots move through your pages, reveals crawl gaps, and helps adjust your link structure to improve discoverability. With integrated data and real-time analysis in one place, the Search Atlas SEO platform lets you manage visibility, crawl activity, and performance without manual cross-platform checks.

What Is The Difference Between Crawled and Indexed?

A page is crawled when search engine bots find and read it. A page is indexed when it’s added to the search engine’s database and can appear in search results. Crawling confirms discovery while Indexing confirms eligibility for search.

How Easy SEO Tools Reveal Crawl and Index Block Issues?

SEO tools show crawl and index issues using labeled reports and page-level filters. They detect noindex tags, robots.txt blocks, and skipped crawls. Each issue links to the affected URL with a short explanation. Many platforms integrate GSC to submit indexing requests. This combines issue detection and reindexing in one workflow.

How to Fix Pages Marked as “Crawled – Currently Not Indexed” and “Discovered – Currently Not Indexed”?

Fix Crawled, currently not indexed by improving content, removing noindex tags, and resolving technical blockers. Fix Discovered, currently not indexed by strengthening internal links, submitting URLs, and improving page accessibility. Both require clear value and unrestricted crawling to encourage indexing.

How Long Until Google Indexes Discovered Pages?

Google indexes discovered pages in 3 to 14 days. Indexing speed depends on site authority, content quality, and technical setup. JavaScript-heavy content delays the process but prerendering helps search engines access content faster.