Choosing the right LLMRefs alternative ultimately comes down to finding a platform built for Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO)—tools that offer real LLM visibility, reliable automation, and clear reporting. This guide breaks down why GEO and AEO are now essential for brand discoverability across ChatGPT, Gemini, Perplexity, and Google AI Overviews. It also explains how modern platforms surface and optimize the signals LLMs rely on when generating answers.

You’ll see what capabilities matter most, how to evaluate LLM visibility tools, the steps involved in improving performance, and how pricing models connect to actual ROI. The article also compares leading alternatives, outlines how SearchAtlas stacks up against other solutions, and provides checklists and practical workflows for improving brand presence in AI-generated answers.

What Are the Leading LLMRefs Alternatives for AI Search Optimization?

The leading LLMRefs alternatives are platforms that combine LLM tracking, automation, integrations, and reporting to help brands appear more consistently in AI-generated answers. What sets them apart is how broadly they track LLMs, how often they monitor results, the strength of their automation, and how clearly they surface the sources that models pull from.

Evaluating these tools means looking closely at GEO/AEO features, the ease of connecting them to your CMS and analytics stack, and whether they deliver real workflows rather than dumping raw mentions without context. Below is a quick snapshot of common platform types, along with simple differentiators to help you focus on the options best suited for improving AI search visibility.

Top LLMRefs alternatives generally fall into four groups: all-in-one AI SEO suites, visibility trackers, GEO-focused platforms, and prompt or mention-monitoring tools:

- All-in-one AI SEO platforms: Offer integrated automation, content optimization, and visibility tracking across multiple LLMs.

- Visibility-first trackers: Prioritize measuring brand presence in AI answers and surfacing the underlying sourcing signals.

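- GEO-specialized tools: Focus on structured data, authoritative source mapping, and guided answer-optimization workflows.

- Prompt and mention-monitoring tools: Track brand mentions and the answers returned for specific prompts across AI answer engines.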

These categories make it easier to evaluate platforms based on business needs—whether you’re consolidating enterprise tools or looking for deeper analytical detail.

The table below outlines which platform archetypes typically include LLM tracking, automation, and consolidation features, along with their usual pricing formats. It’s a quick way for teams to understand fit before diving into a full evaluation.

| Platform Type | LLM Tracking | Automation | All-in-One | Typical Pricing Model |
| --- | --- | --- | --- | --- |
| All-in-one AI SEO | Yes (multi-LLM) | High (audit and content workflows) | Yes (integrated suite) | Tiered subscription |
| Visibility Tracker | Yes (emphasis on mentions) | Low to medium | No (focused tool) | Usage or subscription |
| GEO Specialist | Yes (source mapping) | Medium | Partial (focused on GEO) | Custom or tiered |

This table makes it easier to see how each approach balances breadth and depth, helping teams decide whether it’s better to consolidate everything into one platform or pair together a few specialized tools. The next section breaks down how a unified platform performs compared to more fragmented setups and introduces a specific alternative that illustrates what an integrated solution looks like.

Which platforms compete with LLMRefs in Generative Engine Optimization?

Platforms competing in GEO are built to map authoritative sources, strengthen structured signals, and optimize content so it’s more likely to be selected by LLM heuristics. These GEO tools vary widely: some put most of their weight on source provenance and excerpt sourcing, while others focus on technical elements like structured data and schema hygiene. Effective GEO platforms pull in search analytics, monitor answer excerpts, and recommend specific content or markup adjustments based on observed LLM behavior. For most teams, the main goals are increasing excerpt inclusion, boosting source prominence, and shortening the lag between publishing a content update and seeing it reflected in AI-generated answers.

A practical GEO evaluation centers on whether a tool can automate source scoring, highlight content gaps where LLMs already pull answers, and translate those findings into clear optimization tasks that content teams can actually implement. This turns GEO into something systematic: discover sources → optimize content or markup → measure shifts in LLM visibility. Understanding how this workflow operates helps teams estimate time-to-impact and align GEO tasks with existing SEO roadmaps—topics covered in more detail later in the optimization steps section.
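Highlighting content gaps where LLMs already pull answers can start as a simple set comparison. The sketch below is a minimal illustration, assuming you already export answer snapshots as records of prompt, engine, and cited URLs; the `AnswerSnapshot` record, the `find_content_gaps` helper, and the example domains are all hypothetical and do not correspond to any vendor's API.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class AnswerSnapshot:
    """One captured AI answer (hypothetical record shape; adapt to your export)."""
    prompt: str
    engine: str  # e.g. "chatgpt", "perplexity"
    cited_urls: list[str] = field(default_factory=list)


def domain(url: str) -> str:
    """Host of a URL, lowercased, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def find_content_gaps(snapshots, our_domain, competitor_domains):
    """Return (engine, prompt, competitors) where a competitor is cited and we are not."""
    competitors = set(competitor_domains)
    gaps = []
    for snap in snapshots:
        cited = {domain(u) for u in snap.cited_urls}
        rivals = cited & competitors
        if rivals and our_domain not in cited:
            gaps.append((snap.engine, snap.prompt, sorted(rivals)))
    return gaps


# Hypothetical example data.
snapshots = [
    AnswerSnapshot("best crm for startups", "perplexity",
                   ["https://www.rival.com/crm-guide", "https://example.org/list"]),
    AnswerSnapshot("crm pricing comparison", "chatgpt",
                   ["https://ourbrand.com/pricing", "https://rival.com/pricing"]),
]
gaps = find_content_gaps(snapshots, "ourbrand.com", ["rival.com"])
print(gaps)
```

A prompt appears in the output only when a competitor is cited and your domain is not, which gives content teams a concrete list of answers to target.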

How do SearchAtlas, AthenaHQ, and Geostar differ in AI content tracking?

SearchAtlas, positioned as an integrated AI SEO automation platform, focuses on LLM visibility tracking paired with automation workflows. In contrast, many other tools spread tracking and execution across separate systems. SearchAtlas blends measurement with automated on-page recommendations and link-building automation, creating a closed-loop GEO process that moves from detection to direct optimization. Other specialist platforms may provide deeper insights for certain LLMs, but they typically require manual coordination to turn those findings into content updates.

When comparing tracking models, look at three core dimensions: coverage (which LLMs are monitored), reporting detail (excerpt-level insights vs. broader trend data), and actionability (whether the tool suggests fixes or automates tasks). SearchAtlas is built around transforming visibility insights into repeatable SEO actions, which helps close the gap between understanding a problem and acting on it—ultimately speeding up iteration cycles and improving GEO and AEO results.

How Does SearchAtlas Compare to Other LLMRefs Alternatives?

SearchAtlas positions itself as an all-in-one AI SEO automation platform that brings together LLM Visibility and autonomous SEO workflows. Its main components include LLM Visibility (which tracks and measures brand reach inside AI-generated answers), OTTO SEO (an AI autopilot for technical audits, content optimization, and link building), and OTTO Google Ads. The goal is to replace scattered tool stacks by offering measurement, automation, and reporting in a single environment. For buyers, this means fewer handoffs between analytics and optimization teams and faster test-and-learn cycles across GEO and AEO programs.

SearchAtlas delivers a suite-level value proposition centered on automation and measurable LLM visibility. The platform highlights automation that “automates up to 90 percent of manual SEO tasks” and offers LLM-specific dashboards that show how content appears inside ChatGPT, Gemini, Perplexity, and Google AI Overviews. Recent milestones—from an acquisition that strengthens integration capabilities to recognition from industry awards—serve as signals of product maturity and broader market validation. For practitioners, these attributes make SearchAtlas appealing as a single vendor when consolidation and rapid impact are priorities.

What unique AI automation features does SearchAtlas offer?

SearchAtlas’s OTTO SEO automates core SEO tasks and moves teams from detection to execution through scripted workflows. OTTO runs technical audits that identify schema issues or canonical errors, recommends content improvements based on LLM excerpting patterns, and coordinates link-building actions to strengthen page-level authority signals. By handling repetitive work, OTTO shortens experiment cycles across large page sets and helps teams scale GEO initiatives without piling on manual tasks.

Automation still fits neatly into human workflows through review queues, ranked recommendations, and optional approval steps—so teams keep control while increasing output. The result is faster iteration and more consistent application of GEO/AEO best practices. With OTTO managing routine optimizations and tracking results, teams can focus on strategy and developing stronger content.

How does SearchAtlas’s LLM Visibility tool enhance brand presence in AI answers?

LLM Visibility shows where and how a brand appears inside AI-generated answers, tracking excerpt sourcing, mention prominence, and which LLMs surface those excerpts. It collects data on mention frequency, prominence within the answer, and the likely source URL or knowledge base the model is referencing. With this information, teams can prioritize updates to content, structured data, or citation reinforcement to improve their chances of being selected as an authoritative source.

A typical workflow looks like this: spot a low-prominence mention → analyze the excerpt’s context and source signals → apply targeted content or markup changes → measure shifts in LLM excerpting and answer prominence. This feedback loop transforms visibility data into meaningful AEO improvements, helping brands earn stronger placements in AI-generated answers over time.
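To make mention frequency and prominence concrete, here is one possible way to compute them from the raw text of a single AI answer. The prominence definition used here (how early the brand first appears, from 1.0 at the start of the answer toward 0.0 at the end) is an illustrative assumption, not how SearchAtlas or any other vendor scores it.

```python
import re


def mention_stats(answer_text: str, brand: str) -> dict:
    """Count brand mentions and score how early the first one appears.

    Hypothetical prominence: 1 - (offset of first mention / answer length),
    so a mention at character 0 scores 1.0 and no mention scores 0.0.
    Assumes the brand starts and ends with a letter or digit, so \\b applies.
    """
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    matches = list(pattern.finditer(answer_text))
    if not matches:
        return {"mentions": 0, "prominence": 0.0}
    first_offset = matches[0].start()
    prominence = 1 - first_offset / len(answer_text)
    return {"mentions": len(matches), "prominence": round(prominence, 3)}


answer = "Acme is a leading choice; many teams pick acme for reporting."
print(mention_stats(answer, "Acme"))  # {'mentions': 2, 'prominence': 1.0}
```

Running it on each captured answer over time yields the time series needed to measure shifts after a content or markup change.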

What Are the Key Features to Evaluate in LLMRefs Alternatives?

When comparing LLMRefs alternatives, focus on the features that directly influence GEO and AEO performance: LLM coverage, tracking granularity, automation depth, CMS and analytics integrations, and reporting built specifically for answer engines. Each of these ties to a tangible outcome—coverage expands monitoring reach, granular tracking exposes actionable excerpt context, automation speeds execution, and integrations ensure that optimizations can be deployed and measured reliably. Teams should weigh these features against broader priorities like speed, scale, and technical bandwidth.

Below is a concise checklist outlining the five features that matter most in GEO/AEO evaluations and how each one affects results:

- LLM Coverage: Broad monitoring ensures visibility across ChatGPT, Gemini, Perplexity, and Google AI Overviews.

- Tracking Granularity: Excerpt-level visibility shows exactly what text LLMs cite and the surrounding context.

- Automation & Workflows: Automated audits and optimization playbooks turn insights into scalable actions.

- Integrations: CMS and analytics integrations make continuous deployment and KPI measurement possible.

- Reporting & Measurement: Dashboards connecting visibility to traffic and conversions validate GEO investments.

These pillars form the backbone of any GEO-capable platform and help teams prioritize vendors that match their technical resources and strategic goals. The next section highlights which tools offer strong LLM visibility and how to identify weak implementations.
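One way to apply these five pillars is a weighted scorecard. The weights, the 1 to 5 ratings, and the vendor names below are placeholder assumptions for illustration; set them from your own priorities and trial findings.

```python
# Hypothetical weights over the five pillars; they should sum to 1.0.
WEIGHTS = {
    "llm_coverage": 0.25,
    "tracking_granularity": 0.20,
    "automation": 0.20,
    "integrations": 0.15,
    "reporting": 0.20,
}


def weighted_score(ratings: dict) -> float:
    """Combine 1-5 ratings per pillar into one weighted score on the same scale."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(WEIGHTS[k] * ratings[k] for k in WEIGHTS), 2)


# Placeholder ratings gathered during hypothetical trials.
vendors = {
    "vendor_a": {"llm_coverage": 5, "tracking_granularity": 4, "automation": 5,
                 "integrations": 4, "reporting": 4},
    "vendor_b": {"llm_coverage": 4, "tracking_granularity": 5, "automation": 2,
                 "integrations": 3, "reporting": 4},
}
ranked = sorted(vendors, key=lambda v: weighted_score(vendors[v]), reverse=True)
for name in ranked:
    print(name, weighted_score(vendors[name]))
```

Raising a weight, for example automation for a small team, shows quickly whether the ranking is robust or hinges on a single pillar.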

Which tools provide comprehensive LLM visibility and brand mention tracking?

Robust LLM visibility platforms gather mention counts, excerpt sources, prominence levels, and sentiment signals to help teams prioritize what needs attention. Strong implementations offer time-series trends, excerpt snapshots, and clear source provenance so teams can focus on the highest-impact fixes. Warning signs include tools that only surface raw mentions without context, infrequent crawling of LLM outputs, or narrow LLM coverage that misses major answer engines.

When evaluating tools, look for features like excerpt capture, link-to-source mapping, prominence scoring, and alerts for new high-impact mentions. These indicators make visibility actionable and support a prioritization framework for content and markup updates—both essential for improving measurable AEO outcomes.
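Alerts for new high-impact mentions reduce to a rule applied across consecutive tracking snapshots. This minimal sketch assumes each snapshot maps a tracked prompt to a prominence score between 0.0 (not mentioned) and 1.0; the 0.5 threshold and the prompts are hypothetical, not any tool's defaults.

```python
def high_impact_alerts(previous: dict, current: dict, threshold: float = 0.5) -> list:
    """Return prompts whose mention is new or newly crosses the prominence threshold.

    `previous` and `current` map prompt -> prominence (0.0 = not mentioned).
    Results are sorted so the most prominent mentions come first.
    """
    alerts = []
    for prompt, score in current.items():
        before = previous.get(prompt, 0.0)
        if score >= threshold and before < threshold:
            kind = "new mention" if before == 0.0 else "prominence gain"
            alerts.append((prompt, kind, before, score))
    return sorted(alerts, key=lambda a: a[3], reverse=True)


# Hypothetical weekly snapshots.
last_week = {"best crm for startups": 0.0, "crm pricing comparison": 0.3}
this_week = {"best crm for startups": 0.8, "crm pricing comparison": 0.6, "crm with ai": 0.2}
alerts = high_impact_alerts(last_week, this_week)
for alert in alerts:
    print(alert)
```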

How important is automation and all-in-one platform integration?

Automation and consolidation are key for teams aiming to scale without increasing headcount. Automated workflows reduce manual effort across audits, content updates, and link-building, while CMS and analytics integrations ensure that optimizations roll out smoothly and can be measured. Fragmented stacks, on the other hand, create handoff delays, add coordination overhead, and widen the gap between detection and execution.

Organizations should balance the productivity benefits against potential vendor lock-in and ensure that automated workflows still preserve human oversight through review steps and audit trails. When implemented well, automation and all-in-one integration accelerate GEO programs and make AEO initiatives sustainable across large content portfolios.

How Can You Optimize Your Brand Visibility Using LLMRefs Alternatives?

Improving brand visibility in AI-generated answers requires a structured process: audit your current presence, set up continuous tracking, make targeted content and markup updates, and measure the results against visibility KPIs. This creates a repeatable loop that turns raw LLM mentions into prioritized optimization tasks. In practice, teams follow a series of steps to move from detection to stronger placement across models.

Five steps to operationalize GEO and AEO programs:

- Conduct an initial GEO audit: Identify where your brand appears, which excerpts cite your content, and which LLMs surface those mentions.

- Implement continuous LLM visibility monitoring: Track excerpt frequency, prominence, and source signals over time.

- Prioritize remediation tasks: Use prominence and traffic impact to rank content, schema, and authority improvements.

- Apply targeted optimizations: Update content, reinforce citations, and enhance structured data on priority pages.

- Measure and iterate: Connect the visibility changes to downstream metrics and refine your playbook.
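The prioritization step can be expressed as a single score per task. This sketch uses a hypothetical heuristic, priority = monthly traffic × (1 - current prominence) / effort, with effort as a rough 1 to 5 estimate; it is one reasonable way to combine prominence and traffic impact, not a standard formula, and the pages and numbers are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Task:
    page: str
    fix: str              # e.g. "schema", "content", "authority"
    monthly_traffic: int  # organic sessions to the page, from your analytics
    prominence: float     # current AI-answer prominence, 0.0-1.0
    effort: int           # rough estimate, 1 (easy) to 5 (hard)

    @property
    def priority(self) -> float:
        # Hypothetical heuristic: more traffic and more headroom raise priority,
        # more effort lowers it.
        return self.monthly_traffic * (1 - self.prominence) / self.effort


tasks = [
    Task("/pricing", "schema", 4000, 0.6, 1),
    Task("/guide/crm", "content", 9000, 0.2, 4),
    Task("/blog/old-post", "authority", 500, 0.0, 3),
]
ranked = sorted(tasks, key=lambda task: task.priority, reverse=True)
for t in ranked:
    print(f"{t.priority:8.1f}  {t.page}  ({t.fix})")
```

Dividing by effort lets a cheap schema fix on a moderately visible page compete with a larger content project on a high-traffic page.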

This cycle creates a data-driven feedback loop where measurement leads to action and results validate investment. The following subsections detail GEO’s role in ranking and how tracking tools support stronger AEO performance.

What role does Generative Engine Optimization play in AI content ranking?

Generative Engine Optimization (GEO) focuses on the signals that influence whether an LLM chooses a piece of content as a source: factors like content authority, schema markup, and source provenance. The premise is that LLMs, and the retrieval systems that feed them, favor sources with clear, recognizable signals when assembling concise answers, so strengthening those signals improves the odds that your content will be selected.

Prioritizing GEO tasks leads to quick wins—such as fixing schema issues or clarifying citations—and longer-term gains through building topical authority with targeted content and link-building. These priorities align directly with the optimization steps outlined earlier and help teams allocate resources efficiently across AEO programs.
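Fixing schema issues frequently means publishing accurate JSON-LD. The snippet below renders a schema.org `Organization` block using only Python's standard `json` module; the brand name, URLs, and profile links are placeholders, and whether this markup influences any particular answer engine is an assumption to test rather than a guarantee.

```python
import json


def organization_jsonld(name: str, url: str, logo: str, same_as: list[str]) -> str:
    """Render a schema.org Organization as a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": same_as,  # reference pages that identify the same organization
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


snippet = organization_jsonld(
    name="Example Brand",  # placeholder values
    url="https://www.example.com",
    logo="https://www.example.com/logo.png",
    same_as=["https://www.linkedin.com/company/example"],
)
print(snippet)
```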

How do AI content tracking tools improve Answer Engine Optimization?

AI content tracking tools bridge the gap between observation and action by showing exactly how a brand is referenced in AI-generated answers. They highlight mention contexts, excerpt boundaries, and whether citations appear with prominence or are buried deeper in the response. Teams use this visibility to inform content updates, structured data improvements, or link-building that strengthens authority signals and makes content more excerptable.

A straightforward feedback loop captures the value: track mentions to identify weak pages → analyze context to determine specific improvements → implement changes and monitor visibility gains. This measurable approach to AEO ensures that limited resources go toward high-impact fixes rather than broad, unfocused updates.

What Are the Pricing and Value Differences Among Top LLMRefs Alternatives?

Pricing models for LLMRefs alternatives generally fall into three categories: tiered subscriptions, usage-based plans, and enterprise or custom contracts. Each option has trade-offs—tiered subscriptions offer predictable pricing and bundled features, usage-based models align cost with monitoring volume, and enterprise contracts provide customization and stronger support commitments. Choosing the right model depends on monitoring scale, automation needs, and whether consolidating tools lowers overall overhead.

To evaluate value, map each pricing model’s features to expected ROI in time saved, reduced tool sprawl, and visibility-driven traffic growth. The table below outlines common pricing structures and qualitative ROI signals to help teams compare overall value without relying on exact list prices.

This table summarizes typical pricing models, trial availability indicators, and qualitative ROI cues that buyers should consider when assessing platform value.

| Pricing Model | Trial / Entry Availability | Value Indicators / ROI Notes |
| --- | --- | --- |
| Tiered Subscription | Common (feature-limited trials) | Predictable costs and bundled automation; good for teams needing consolidation |
| Usage-Based | Sometimes (usage credits or allowances) | Flexible scaling, but costs rise with monitoring volume; suits variable workloads |
| Enterprise/Custom | Typically available on request | Custom integrations and SLAs; best for complex enterprise requirements |

This comparison helps decision-makers align their purchasing choices with expected operational gains and coverage needs. The next paragraphs break down how subscription models differ and how teams can frame ROI in practical, measurable terms.
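Choosing between a tiered and a usage-based plan often comes down to where costs cross over at your monitoring volume. All quotas, prices, and the per-prompt rate below are hypothetical placeholders, not any vendor's list prices, and measuring volume as tracked prompts per month is an assumption.

```python
def tiered_cost(tracked_prompts: int, tiers: list[tuple[int, float]]) -> float:
    """Monthly price of the cheapest tier whose quota covers the volume.

    `tiers` is a list of (included_prompts, monthly_price), sorted by quota.
    """
    for quota, price in tiers:
        if tracked_prompts <= quota:
            return price
    raise ValueError("volume exceeds the largest tier; request an enterprise quote")


def usage_cost(tracked_prompts: int, price_per_prompt: float) -> float:
    """Monthly price when every tracked prompt is billed individually."""
    return tracked_prompts * price_per_prompt


TIERS = [(500, 199.0), (2000, 499.0), (10000, 1499.0)]  # hypothetical plans
RATE = 0.30  # hypothetical price per tracked prompt per month

for volume in (300, 1500, 6000):
    t, u = tiered_cost(volume, TIERS), usage_cost(volume, RATE)
    cheaper = "tiered" if t < u else "usage-based"
    print(f"{volume:>6} prompts: tiered ${t:,.0f} vs usage ${u:,.0f} -> {cheaper}")
```

With these made-up inputs, usage-based pricing is cheaper at 300 and 1,500 prompts, while the tiered plan wins at 6,000; rerunning it with real quotes shows where your own crossover sits.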

How do subscription models vary across SearchAtlas and competitors?

Subscription models range from standard tiered plans designed for agencies and in-house teams to fully customized enterprise agreements for organizations with complex integration requirements. When reviewing tiers, buyers should look closely at what each level includes—user seats, LLM coverage, automation limits, and support—and how trial or demo access showcases the platform’s real capabilities. Trials matter because they let teams validate LLM coverage, test automation workflows, and see how well the platform integrates with their CMS and analytics setup.

As you compare plans, pay attention to whether automation caps might bottleneck your GEO program and whether onboarding support includes essentials like tracking setup and configuration. These factors play a major role in time-to-value and should come up early in vendor conversations.

What ROI improvements can users expect from AI SEO automation platforms?

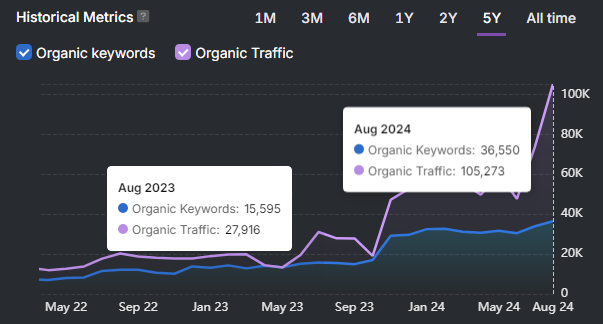

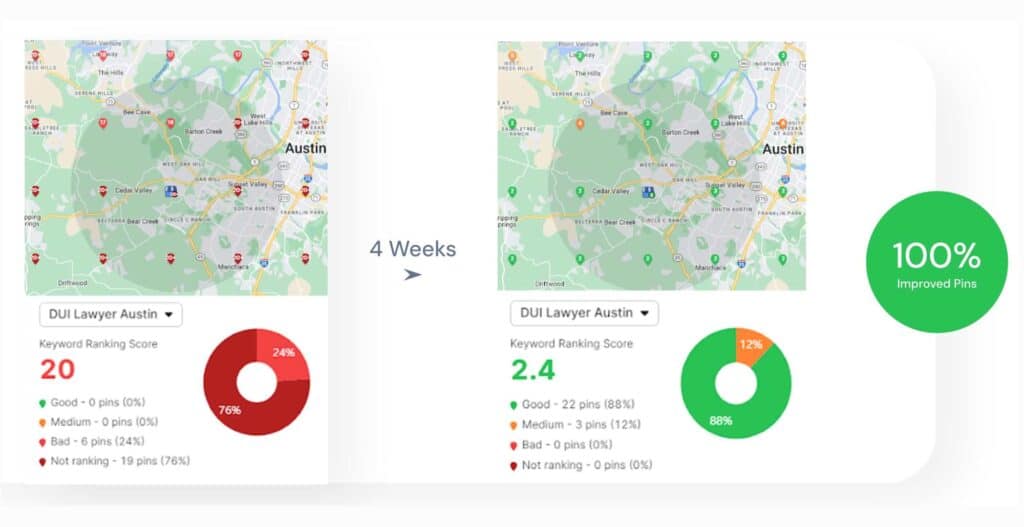

AI SEO automation platforms typically deliver ROI through time savings, reduced tool redundancy, faster experimentation, and stronger visibility that can drive more organic and referral traffic from AI-generated answers. Industry reporting often highlights significant automation potential—some platforms claim to “automate up to 90 percent of manual SEO tasks.” When teams validate these capabilities in pilot projects, the outcome is usually reallocated hours that can be invested in strategy, creative development, and higher-value initiatives. Case studies and proven results are the best way to calibrate expectations and set meaningful KPIs.

To measure ROI effectively, establish baseline metrics for visibility, answer excerpt frequency, organic traffic, and conversion performance before adopting a platform. Use those baselines to track gains tied directly to automation and improved LLM visibility, then refine the workflows that drive the strongest marginal returns.
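Baseline comparison and a conservative ROI estimate take only a few lines. Every number below (baseline and month-three metrics, hours saved, hourly cost, platform cost) is hypothetical, and the ROI formula, (value of hours saved - platform cost) / platform cost, deliberately ignores traffic-driven revenue.

```python
def pct_change(baseline: float, current: float) -> float:
    """Percentage change from a pre-adoption baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


def automation_roi(hours_saved: float, hourly_cost: float, platform_cost: float) -> float:
    """Simple monthly ROI from reallocated hours only (excludes traffic gains)."""
    return (hours_saved * hourly_cost - platform_cost) / platform_cost


# Hypothetical baseline captured before adoption, and the same metrics later.
baseline = {"excerpt_frequency": 40, "organic_sessions": 12000, "conversions": 180}
month_3 = {"excerpt_frequency": 58, "organic_sessions": 13200, "conversions": 195}

for metric, base in baseline.items():
    print(f"{metric}: {pct_change(base, month_3[metric]):+.1f}%")

roi = automation_roi(hours_saved=60, hourly_cost=75.0, platform_cost=1500.0)
print(f"automation ROI: {roi:.0%}")
```

With these inputs the script reports +45.0% excerpt frequency, +10.0% organic sessions, +8.3% conversions, and a 200% automation ROI.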

Where Can You Find Reliable Resources and Support for LLMRefs Alternatives?

Vendors that offer substantial educational resources, case studies, and clear onboarding materials make it far easier to unlock value from GEO and AEO programs. Look for providers that offer technical guides for Google AI Overviews, how-to content for ChatGPT brand tracking, visibility case studies, webinars, and hands-on demos. Strong documentation reduces ramp time and helps teams adopt best practices quickly.

SearchAtlas acts as both a platform and a resource hub, offering feature pages, a blog, case studies, and solutions tailored for agencies and enterprises. These resources help teams understand LLM Visibility and OTTO-driven automation while validating real-world workflows. As you evaluate vendors, request demos and sample case studies to confirm that their recommended playbooks align with your content operations and technical environment.

What educational materials and case studies do SearchAtlas and competitors provide?

The most useful vendor resources include step-by-step guides for optimizing content for specific LLMs, visibility case studies, dashboard walkthroughs, and recorded webinars that explain GEO concepts in practical terms. These assets help teams turn high-level theories into operational playbooks and identify vendors whose methodologies align with internal processes. Case studies should clearly document the challenge, the playbook used, and the measurable visibility or traffic outcomes.

When reviewing these materials, prioritize those that include dashboards, reproducible workflows, and transparent explanations of how visibility improvements were achieved. This level of detail separates marketing content from practical guidance you can actually apply.

How do customer support and onboarding compare among AI search optimization platforms?

Customer support and onboarding vary significantly across platforms. Some vendors rely on self-serve documentation and community forums, while others provide dedicated customer success managers and professional services for integration and migration. Key onboarding deliverables typically include initial tracking setup, data migration, CMS and analytics integration, and team training. Vendors that combine hands-on support with solid documentation usually help teams reach meaningful GEO impact more quickly.

Your need for professional services depends on team capacity and technical complexity. For large-scale deployments or integrations involving multiple data systems, professional onboarding reduces risk and accelerates AEO results. Ask vendors for example onboarding timelines during evaluation to set accurate expectations.

- Educational resources to request: product guides, GEO playbooks, case studies.

- Onboarding deliverables to expect: tracking configuration, integrations, team training.

- Support channels to verify: dedicated CSMs, technical support SLAs, self-serve documentation.

These considerations help teams choose vendors that offer both the tools and the operational support needed to make GEO and AEO investments successful in 2025. For teams ready to explore an integrated platform, scheduling a vendor demo and reviewing case studies are the most practical next steps to confirm fit and expected ROI.