SEOs have been on high alert this past year with Google rolling out one core update after another. Now, Google is requiring JavaScript to access its search results, a move aimed at enhancing security against bots and scrapers.

This change has raised a lot of questions: Why is Google doing this? And how does it affect SEO tools and strategies? 🤔

As data gathering becomes more resource-intensive, traditional tools like Semrush and Ahrefs—already known for their premium pricing—could become even more expensive.

But not all tools are affected. OTTO, for example, is completely immune to this change. 😎

So, what makes OTTO different? It has always used JavaScript to find legitimate and accurate data. Let’s dive into the specifics and explore the fascinating technology behind it, as well as what this shift means for the future of SEO.

What is JavaScript?

JavaScript (JS) is a programming language that makes websites interactive. It powers elements like clickable buttons, real-time updates, and smooth animations.

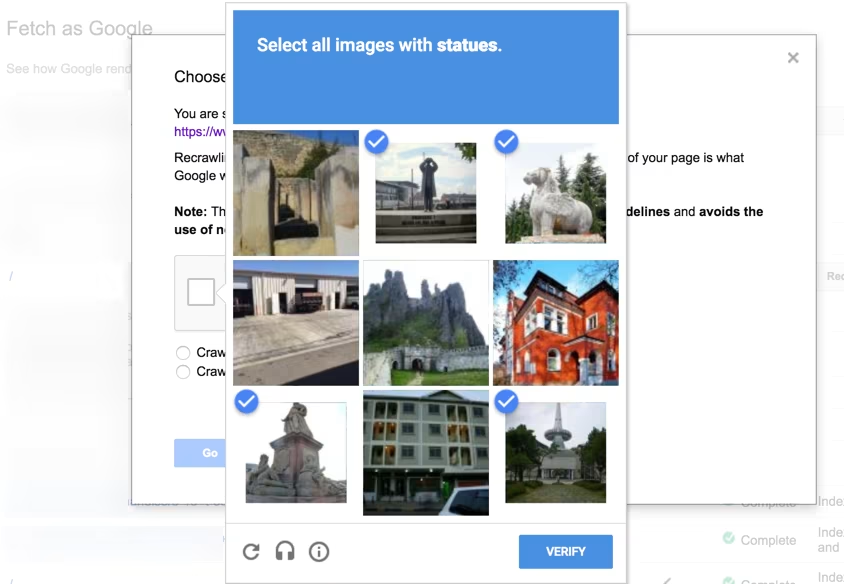

This is what you’ll see if you try searching on Google without JavaScript enabled.

Now, who might be browsing the web without JavaScript? Probably no one with a modern device.

Almost all traffic that reaches Google Search without JavaScript comes from bots: automated programs that scrape data from search results.

It’s not real users, just bots. 🤖
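To make the difference concrete, here is a minimal Python sketch of what a client that never executes JavaScript ends up with: only the fallback text inside `<noscript>`, while the script that would render the results is never run. The `SAMPLE_PAGE` markup and the `noscript_fallback` helper are hypothetical illustrations, not Google's actual page source.

```python
from html.parser import HTMLParser


class NoScriptExtractor(HTMLParser):
    """Collects the text found inside <noscript> elements."""

    def __init__(self):
        super().__init__()
        self._depth = 0          # greater than 0 while inside <noscript>
        self.fallback_text = []  # text fragments found inside <noscript>

    def handle_starttag(self, tag, attrs):
        if tag == "noscript":
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "noscript" and self._depth > 0:
            self._depth -= 1

    def handle_data(self, data):
        # Script bodies also arrive here, but they sit outside <noscript>,
        # so they are ignored, just as a non-JS client never runs them.
        if self._depth > 0 and data.strip():
            self.fallback_text.append(data.strip())


def noscript_fallback(html: str) -> str:
    """Return the message a client without JavaScript would be left with."""
    parser = NoScriptExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.fallback_text)


# Hypothetical page: results are rendered by a script, with a fallback notice.
SAMPLE_PAGE = """
<html><body>
  <noscript><p>Please enable JavaScript to continue searching.</p></noscript>
  <div id="results"></div>
  <script>renderResults();</script>
</body></html>
"""

print(noscript_fallback(SAMPLE_PAGE))
```

A simple scraper that only downloads HTML sees the notice and an empty results container, which is why it now needs a full browser engine to get anything useful.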

Why is Google Requiring JavaScript?

Google’s new requirement for search results is designed to block suspicious activities like spam, bots, and platform misuse.

Competitors like Perplexity and ChatGPT have already restricted access, and now Google is following suit.

Why? They want to end “free-for-all” data scraping—the automated process of using software to extract data from websites.

Bots have been clogging Google’s systems for years. For example, a bot can run 3,000 “site:www.example.com [query]” searches in Google Search, and each search costs Google compute power.

What is Google’s Goal?

This shift reflects Google’s evolving priorities around resource management and protecting its ecosystem.

To combat scraping, Google has not only started requiring JavaScript-enabled browsers but has also started showing CAPTCHAs to IP addresses that exceed roughly 25 to 30 queries.

Via Medium

Important: Failing a CAPTCHA results in a “black mark” on the IP, making future requests from that address more likely to be flagged. 🏴‍☠️
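The threshold-plus-black-mark behavior described above can be modeled with a small Python sketch. This is a simplified illustration, not Google's implementation: the `IpGate` class, the threshold of 25 (the lower end of the range quoted above), the counter reset after a passed CAPTCHA, and the rule that a flagged IP is always challenged are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Assumption: lower bound of the "around 25-30 queries" figure quoted above.
CHALLENGE_THRESHOLD = 25


@dataclass
class IpGate:
    """Hypothetical per-IP gate: count queries, challenge heavy or flagged IPs."""

    threshold: int = CHALLENGE_THRESHOLD
    query_counts: dict = field(default_factory=lambda: defaultdict(int))
    flagged: set = field(default_factory=set)

    def handle_query(self, ip: str) -> str:
        """Return "serve" or "captcha" for one incoming query."""
        self.query_counts[ip] += 1
        if ip in self.flagged or self.query_counts[ip] > self.threshold:
            return "captcha"
        return "serve"

    def record_captcha(self, ip: str, passed: bool) -> None:
        """Reset the counter on success; leave a "black mark" on failure."""
        if passed:
            self.query_counts[ip] = 0  # assumption: a pass clears the count
        else:
            self.flagged.add(ip)       # simplification: always challenged later


gate = IpGate()
bot_ip = "203.0.113.7"  # documentation-only address
outcomes = [gate.handle_query(bot_ip) for _ in range(26)]
print(outcomes[-1])  # the 26th query is challenged
```

In this model a single IP burns through its allowance almost immediately at scraping volumes, which is why large-scale scrapers spread requests across many addresses.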

Google previously tolerated bots to keep its platform open. However, with the introduction of AI Overviews, continuing to give bots unrestricted access would significantly increase Google’s costs.

JavaScript also helps Google personalize search results, ensuring users get the most relevant and up-to-date information based on their needs.

Why This Spells Trouble for Conventional SEO Tools

Conventional SEO tools like Semrush and Ahrefs rely on scraping Google’s search results at scale to collect keyword and traffic data. They gather vast amounts of data by continuously pulling results from Google.

This move is tied to rate limiting, meaning Google is capping the number of pages that can be accessed in a given time.
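For readers unfamiliar with rate limiting, the sketch below shows one common way to cap requests per time window, a sliding-window counter, in Python. It is a generic illustration; the article does not say which algorithm or limits Google uses, and the `SlidingWindowLimiter` class and its parameters are hypothetical.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_requests within any window_seconds span."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()  # times of recently allowed requests

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` is within the cap."""
        # Drop requests that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False


# Example: 3 requests per 60 seconds, with times given in seconds.
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0)
print([limiter.allow(t) for t in (0, 1, 2, 3)])
```

Passing the timestamp in explicitly keeps the example deterministic; a production limiter would typically read a monotonic clock instead.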

Google has sent these tools a clear message: adapt or face much higher operating costs.

Scrapers and bots are now forced to mimic legitimate user behavior using residential proxies, advanced browser emulation, and stricter CAPTCHA handling.

What does this mean for conventional SEO tools?

- Higher operational costs: More servers, more power, more money.

- Price increases for users: As scraping gets more expensive, subscription prices will rise.

- Slower and limited data: Google’s rate-limiting could restrict the breadth and depth of available data.

These tools are in a tough spot and must innovate to find compliant, cost-effective ways to gather data.

Why Are Search Atlas and OTTO Not Affected By This Shift?

Unlike traditional tools, Search Atlas uses first-party data directly from Google Search Console (GSC).

We’ve embraced browser emulation and invested in robust infrastructure to analyze and extract valuable data.

Our method has always had JavaScript enabled to ensure accurate, up-to-date data—unlike other tools that rely on approximations and estimates.

This commitment ensures we can still deliver the insights our clients need while respecting Google’s increasing focus on data security and authenticity.

Simply put: Search Atlas and OTTO have direct access to Google’s data—no barriers, no hassles.

Here’s how Search Atlas stands out:

- Efficiency: We’ve used JavaScript since the beginning, so our features remain fast and reliable.

- Stable pricing: The pricing remains consistent, regardless of changes to Google’s JavaScript requirements or algorithms.

- Value-focused: Unlike other SEO tools that replicate keyword data or provide estimates, Search Atlas offers real, accurate data directly from GSC.

Frequently Asked Questions

We’ll tackle the most common concerns and explain what these updates mean for your strategies.

How Do These Changes Impact Google Ads?

Google is restricting access to ad data, including CPCs, competitor ads, and click activity, making ad intelligence harder to obtain.

For example, ad visibility is now limited for non-residential or “low-quality” IPs, highlighting Google’s focus on delivering ad impressions to verified users. Additionally, click activity and behavior data are now more strictly filtered based on origin and intent to maintain data quality.

Marketers need AI-powered tools to optimize resources and reduce Google queries—OTTO PPC offers a solution.

How Do These Changes Affect Agencies?

For SEO agencies, the immediate impact may be minor, but over time, it will become harder to manipulate rankings.

Google is now prioritizing real user signals and quality backlinks: genuine links from active sites with real traffic, which Search Atlas measures with its Domain Power metric.

This shift means focusing on authentic content, strong backlinks, and user experience will be increasingly important. It’s about building real authority, not relying on quick tricks.

Do These Changes Affect Google’s View of My Website?

These changes don’t affect how Google views the web, only how your browser displays search results.

Those affected weren’t using the best tools for their SEO. For Search Atlas users? Zero impact. We checked when the news hit, and everything ran smoothly.

If you’re curious about how search engines and AI tools are crawling your website, we’ve added real-time crawl monitoring in OTTO.

You can track when Google, Bing, or even AI tools like ChatGPT last crawled your site. If you notice an increase in crawl activity, it can be a sign that a traffic boost is on the way.
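If you want a rough idea of what crawl monitoring involves, the Python sketch below pulls the most recent visit per crawler out of a web server access log. It is not how OTTO works internally, which this article does not describe. It assumes logs in the common "combined" format and matches on the `Googlebot`, `bingbot`, and `GPTBot` user-agent tokens; the `last_crawl_times` helper and the sample lines are made up for illustration.

```python
import re
from datetime import datetime

# User-agent substrings these crawlers announce themselves with.
CRAWLER_TOKENS = {
    "Google": "Googlebot",
    "Bing": "bingbot",
    "ChatGPT": "GPTBot",
}

# Combined Log Format: ip - - [timestamp] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'\[(?P<ts>[^\]]+)\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def last_crawl_times(log_lines):
    """Map crawler name -> datetime of its most recent request in the log."""
    latest = {}
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        seen_at = datetime.strptime(match["ts"], "%d/%b/%Y:%H:%M:%S %z")
        user_agent = match["ua"].lower()
        for name, token in CRAWLER_TOKENS.items():
            if token.lower() in user_agent:
                if name not in latest or seen_at > latest[name]:
                    latest[name] = seen_at
    return latest


# Made-up sample lines using documentation-only IP addresses.
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Jan/2025:08:15:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [12/Jan/2025:09:30:00 +0000] "GET /blog HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.4 - - [11/Jan/2025:14:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '203.0.113.7 - - [12/Jan/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for crawler, seen_at in last_crawl_times(SAMPLE_LOG).items():
    print(crawler, seen_at.isoformat())
```

Keep in mind that user-agent strings can be spoofed, so a log-based check like this is only a first approximation of real crawler activity.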

What This Means for the Future of SEO Tools

Google’s tighter data access is part of a wider trend across Amazon, search engines, and AI tools, all of which are making scraping harder in order to protect their valuable data.

These changes are aimed at securing their platforms and controlling data, so expect more updates in this direction. Traditional tools will need to adapt or face rising costs and technical challenges.

Search Atlas is already ahead, focusing on acceleration, accurate data, and value, giving us—and you—a major edge as Google evolves. 🌟

Experience firsthand how we’re ahead of the curve—no strings attached! Try OTTO and explore the rest of Search Atlas’ innovative features with your free trial!