Crawlability issues silently block search engines from indexing your site, leading to fewer clicks, lost traffic, and missed growth. Search engines that fail to access or interpret your content exclude your pages from results, regardless of quality.

Understanding crawlability unlocks growth through SEO. Fixing technical barriers drives faster ranking improvements and stronger site performance. This guide explains crawlability problems, their impact, and how to identify and fix 20 common issues that prevent visibility.

What Are Crawlability Problems?

Crawlability problems block search engine bots like Googlebot from navigating and accessing your website. A page that bots cannot crawl will not be indexed. Without indexing, the page never appears in search results. This kills SEO performance.

Search engines use crawlers to scan websites, follow internal links, and collect content for indexing. This process defines how pages rank for search queries. Bots that hit roadblocks—broken links, misconfigured tags, or slow-loading pages—fail to complete the crawlability and indexability process.

There are different common crawlability errors. The different common crawlability errors are listed below.

- Pages blocked in robots.txt

- Nofollow internal links

- Redirect loops

- Broken navigation paths

- JavaScript-based links that bots cannot reach

Picture your website as a library and Googlebot as the librarian. Locked doors, misfiled books, and missing labels stop the librarian from finding anything.

Crawlability issues often go unnoticed but restrict your visibility. Identifying and fixing these barriers drives better indexing and stronger SEO performance.

How Does Search Engine Crawling Work?

Crawling starts the process of content discovery and organization by search engines. Automated bots, known as crawlers or spiders, move from link to link, collecting data from each page they access.

Search engines usually begin with your homepage, then follow internal and external links to find more content.

The crawling depends on three key elements. The three key elements that crawling depends on are listed below.

- Internal links that guide bots through your site

- XML sitemaps that list all important URLs

- Robots.txt files that define allowed or disallowed areas

A page with a 404 error, blocked by robots.txt, or hidden behind JavaScript gets skipped. Even minor technical errors block crawlers and limit indexing. These crawl barriers stop search engines from discovering your pages.

Common Site Crawler Tools

Google uses Googlebot as its main crawler and switched to Mobile Googlebot as the default user agent on July 5, 2024. It views and evaluates websites as if accessed from a smartphone, so mobile optimization shapes what Google sees.

There are different Googlebot crawlers. The different Googlebot crawlers are given below.

- Googlebot Image crawls images for Google Images

- Googlebot News crawls news articles

- Googlebot Video crawls video content

- Google StoreBot crawls product and e-commerce pages

On the SEO side, tools like Screaming Frog act as third-party crawlers that simulate bot behavior across your site. These tools support audits and detect broken links, redirect loops, and blocked resources before Google finds them.

How Do Crawlability Problems Impact SEO?

Crawlability issues rarely show clear warnings, but they directly impact SEO performance.

There are different ways crawlability problems impact SEO. The different ways crawlability problems impact SEO are listed below.

- Reduced Indexing. Crawlability issues stop search engines from discovering important pages. Pages blocked by robots.txt, buried in poor navigation, or loaded through unreadable JavaScript never reach the index. Unindexed pages remain invisible in search results, no matter how useful or optimized they are. Indexing comes first. Without it, nothing ranks.

- Loss of Organic Traffic. Missing pages in search results eliminate chances to reach active searchers. Fewer indexed pages mean lower click-through rates, fewer leads, and lost conversions. Crawl errors cut off organic traffic at the source.

- Lower Search Rankings. Indexed pages still lose value with crawlability problems. Broken or weak internal links confuse Google and hide page importance. High crawl budgets wasted on duplicate or error pages stop bots before reaching valuable content. Technical errors lower site structure quality and trust, pushing rankings down.

- Lost Link Equity. Links distribute authority across your site. A broken, blocked, or redirected link stops that flow. This weakens internal linking and lowers ranking strength. Backlinks lose value at crawl dead ends, hurting your top pages and making it harder to compete.

What Are the 20 Common Crawlability Problems and How to Fix Them?

Crawlability forms the foundation of technical SEO. Crawlers that hit barriers fail to access your content, leaving even your best pages out of search results. Below are 20 common crawlability problems. Each includes an explanation, how to identify it, and how to fix it. Resolve these issues to maintain visibility and stay competitive in search.

1. Pages Blocked in Robots.txt

Your robots.txt file uses Disallow rules to restrict crawler access to certain URLs or directories. This file helps manage crawl behavior, but misconfigured rules block important pages from indexing.

Blocked pages—like homepages, products, blog posts, or JS/CSS directories—stay invisible in search. Google skips these pages, ignores their content, and breaks internal link signals.

Check if your pages block crawlers

Visit yourdomain.com/robots.txt and review any Disallow: lines. Example:

User-agent: *Disallow: /blog/This blocks all bots from the blog section.

Use Google Search Console (GSC):

- Go to Settings >> Crawlers >> robots.txt Tester

- Paste a URL and test crawl status

- Use the URL Inspection Tool for crawl feedback like “Blocked by robots.txt”

Fix blocked crawler access

Avoid blocking key directories like /blog/, /product/, or /services/. Remove or refine the Disallow lines.

Example below:

User-agent: *Disallow: /private/Allow: /private/press-release/This blocks general access but allows a specific folder.

Unblock essential rendering resources

Allow: /wp-content/themes/Allow: /wp-content/plugins/After updates, retest in Search Console or Search Atlas. Confirm that the page is crawlable.

2. JavaScript Resources Blocked in Robots.txt

Your robots.txt file blocks critical JavaScript files, which stops search engines from fully rendering and understanding your pages. Modern websites use JavaScript to load content, structure layouts, and enable interactivity. Blocked JS files lead to incomplete indexing and lower visibility.

Identify blocked JavaScript resources

Visit yourdomain.com/robots.txt and look for lines like:

Disallow: /js/ Disallow: /assets/Use the URL Inspection Tool in Google Search Console to check if key resources are blocked.

Unblock JavaScript resources

Remove or adjust Disallow rules that restrict access. Example:

User-agent: * Allow: /js/Re-test affected pages using the URL Inspection Tool or Search Atlas. Confirm that Googlebot renders the full content.

Unblock essential JavaScript files to ensure Googlebot sees the complete page and indexes it accurately. This action improves how your site appears in search.

3. URLs Blocked in Webmaster Tools

Certain URLs on your site are manually blocked using tools like the Removals Tool from Google Search Console, which overrides crawlability and limits their visibility in search results. These temporary or accidental blocks prevent important content from appearing in Google Search, impacting both traffic and visibility.

To identify the issue, go to Google Search Console (GSC), navigate to “Removals” under the “Index” section, and check for any active requests. Review who on your team submitted them and whether they were intentional. Use the URL Inspection Tool to verify if a page was excluded due to a removal.

To resolve the issue, cancel any outdated or incorrect requests in the Removals section, and then resubmit the URL for indexing using the URL Inspection Tool.

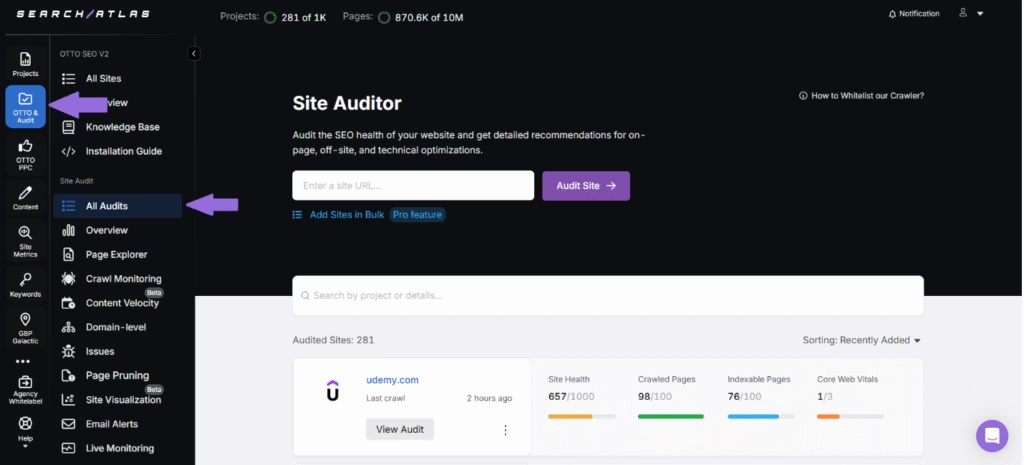

For ongoing visibility tracking, the Search Atlas Site Auditor tool helps monitor changes to crawl and index status, offering real-time insights by consolidating data from GSC and other key sources. Regular review of removal requests prevents unintentional SEO setbacks.

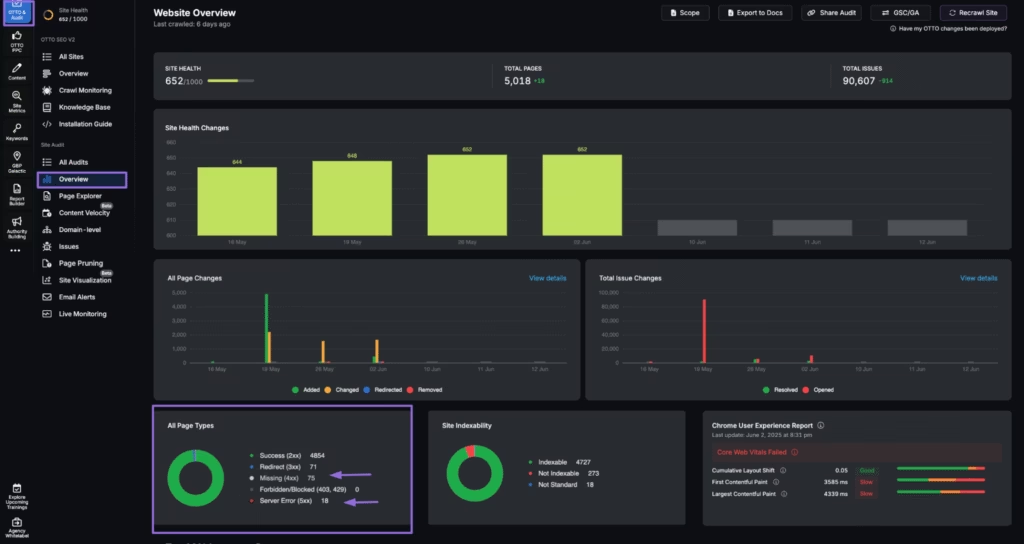

4. Server (5xx) Errors

5xx errors are server-side issues that occur when your server fails to respond to requests from search engine crawlers or users. Common types include 500, 502, 503, and 504 errors. Googlebot that repeatedly encounters these issues reduces the crawl frequency or temporarily stops crawling your site. This delays indexing and negatively impacts your rankings.

Use Crawl Stats from Google Search Console to detect spikes in 5xx errors and review server logs for timing and causes. Tools like the Search Atlas Site Auditor flag pages returning 5xx status codes. Resolve the issue by collaborating with your hosting provider or development team to pinpoint the root cause, such as server overload, PHP misconfiguration, or database timeouts.

Improve infrastructure with caching, load balancing, and uptime monitoring. For planned downtime, use a 503 status with a “Retry-After” header to notify crawlers. Consistent monitoring prevents disruptions that impact visibility and user experience.

5. Not Found (404) Errors

A 404 error occurs when a user or crawler requests a URL that does not exist on your server. This happens when pages get deleted, renamed, or linked incorrectly.

Widespread 404 errors waste crawl budgets, damage user experience, and lose link equity when backlinks point to broken pages.

Identify 404 pages with the steps below.

- Check the Coverage report in Google Search Console for a list of URLs returning 404 errors.

- Use the Search Atlas Site Auditor to identify broken internal or inbound links.

- Look for referral traffic going to non-existent pages in Google Analytics (GA4).

Fix 404 broken pages with the steps below.

- Redirect important 404 pages with backlinks or traffic to a relevant, live page using 301 redirects.

- Remove or update broken internal links pointing to deleted pages.

- Customize your 404 page to help users navigate to working content and reduce bounce rate.

- Avoid redirecting all 404s to your homepage, which confuses both bots and users.

Fixing 404s protects crawl efficiency, preserves link equity, and keeps users and search engines moving in the right direction.

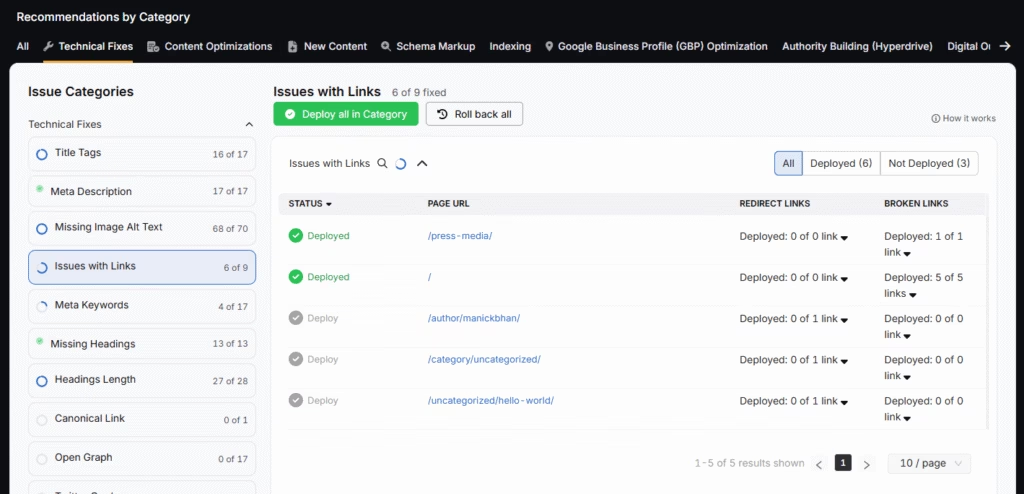

6. Internal Broken Links

Internal broken links occur when one page on your website links to another internal page that no longer exists or has moved without a redirect.

Broken internal links disrupt user experience, waste crawl budget, and block search engines from navigating and indexing your site. They fragment site authority and hurt SEO.

Identify broken links in your site with the steps below.

- Use Search Atlas Site Auditor to scan your website for 404 status codes in internal links.

- Monitor Google Search Console Coverage report for crawl anomalies.

- Audit high-traffic pages to ensure internal links remain functional.

Fix internal broken links by following the steps below.

- Update or remove links pointing to deleted or moved pages.

- Redirect old URLs to relevant new content using 301 redirects if the original page was removed.

- Maintain a process for internal link checks during content updates or URL changes.

Fixing internal links improves crawl efficiency and keeps users on track as they explore your content.

7. Redirect Loops

Redirect loops happen when two or more URLs point to each other in a way that creates an infinite loop. For example, Page A redirects to Page B, which then redirects back to Page A.

These loops block search engines and users from reaching the intended content. Crawlers abandon the page entirely, and users see browser errors instead of valuable content. This hurts rankings and damages user trust.

Identify redirect loops on your site with the steps below.

- Use Search Atlas Site Auditor to detect redirect chains and loops in its generated report.

- Look for browser errors like “ERR_TOO_MANY_REDIRECTS.”

- Check server logs or crawl reports for repeated redirect patterns.

Remove redirect loops by following the steps below.

- Map out your redirect chains to understand the flow.

- Eliminate unnecessary redirects by pointing URLs directly to their final destination.

- Update internal links and canonical tags to reflect the correct, final URLs.

Fixing redirect loops improves crawlability, speeds up page delivery, and ensures both search engines and users reach your content without roadblocks.

8. Access Restrictions (IP, Password, Firewalls)

Some websites block search engine crawlers through server-level restrictions such as IP blocks, required login credentials, or firewall rules that prevent bots from accessing the site.

Crawlers like Googlebot that face these restrictions fail to view or index content, even when it is publicly available. This removes pages from search results and reduces organic traffic.

Check the Crawl Stats report in Google Search Console for access errors. Run a crawl with the Search Atlas Site Auditor Tool to flag any 401 (Unauthorized) or 403 (Forbidden) responses. Review server logs to see where bots get denied.

To fix it, adjust firewall or server settings to avoid blocking search engine IPs. Remove authentication requirements from pages intended for indexing. Use noindex tags or HTTP authentication only for staging environments.

9. URL Parameters

URL parameters like ?sort=price or &category=shoes create multiple variations of the same page. These help users but confuse search engine crawlers by generating duplicate or near-duplicate URLs.

Crawlers that waste resources on similar URLs with slight differences trigger duplicate content issues and dilute ranking signals. This blocks important pages from discovery and bloats your index with unnecessary URLs.

Check for crawl efficiencies by following the steps below.

- In Google Search Console, go to the Crawl Stats and Page Indexing reports to see which URLs are indexed and whether parameters are causing excess variations.

- Run a crawl in Screaming Frog and search for URLs with query strings.

- Mitigate crawler problems caused by excessive URL variants.

- Use canonical tags on parameterized pages to point back to the main version of the content.

- Configure parameter handling in Google Search Console (under legacy tools) to define how Google treats specific parameters.

- Switch to clean URLs using static filtering paths where possible (e.g., /shoes/men instead of ?gender=male).

- Manage URL parameters with canonicals and Google Search Console settings to avoid crawl waste, duplicate content, and diluted SEO signals.

10. JavaScript Links / Dynamically Inserted Links

Links or content generated entirely through JavaScript is not visible to search engine crawlers, depending on how the site renders and when the links appear.

Critical navigation links or internal references inserted after the initial page load using JavaScript get missed during crawling. This blocks discovery of key pages and disrupts your internal linking structure.

Discover missing links on your site with the steps below.

- Use the URL Inspection Tool in Google Search Console to view how Googlebot renders a page and whether it detects your links.

- Run a JavaScript rendering test in Screaming Frog using the “JavaScript Rendering” mode to see which links appear after rendering.

Steps to fix these issues are listed below

- Use server-side rendering (SSR) or dynamic rendering for important links to ensure they appear in the initial HTML.

- Avoid adding crucial internal links exclusively through client-side JavaScript.

- Ensure navigation menus and sitemaps are rendered in the HTML whenever possible.

- Use OTTO SEO to spot missing links and compare rendered vs. raw HTML. OTTO SEO resolves the issues for you in one click.

- Expose JavaScript-generated links in the initial HTML to ensure crawlability and support proper indexing.

11. Rendering Issues

Search engines struggle to render your pages when they rely on JavaScript or complex client-side elements. Key content gets missed entirely if it does not appear in the rendered HTML.

Googlebot renders pages to understand dynamic content. Crucial elements like text, links, or images that fail to load during rendering do not get indexed. This creates content gaps, ranking losses, and missed keyword opportunities.

Identify rendering issues in your site with the steps below.

- Use Google Search Console URL Inspection Tool to see how Googlebot renders a page.

- Compare the rendered HTML to the original source code to find missing content.

- Test your pages with JavaScript disabled in your browser to see which content disappears.

This reflects how search engines with limited rendering view your site.

Fix rendering errors by following the instructions below.

- Load important content server-side or early in the client-side lifecycle.

- Avoid hiding critical elements behind user interactions like tabs or dropdowns.

- Use structured data and fallback content when applying advanced scripts.

- Ensure third-party scripts load fast and remain reliable.

Search engines that fail to see your content during rendering will not index or rank it. Visibility begins with accessibility.

12. Site Structure

A disorganized or overly complex site architecture, such as deeply buried pages, tangled navigation, or orphaned content, confuses search engines and users. Pages hidden several clicks deep, disconnected from internal links, or grouped inconsistently create crawling challenges. This wastes crawl budget, leaves key pages out of the index, and spreads link equity across low-priority URLs.

Search engines rely on logical site structure and internal links to understand relationships between pages and prioritize what to index. A flat, hierarchical structure helps crawlers access and rank your most valuable content efficiently.

Identify a bad site architecture with the steps below.

- Create a visual sitemap of your website structure to see how pages connect and spot navigation bottlenecks or isolated content.

- Look for orphaned pages, inconsistent URL patterns, or navigation paths that lead to dead ends.

- Check if top-performing or strategic pages stay reachable within 3 clicks from the homepage.

Fix the site architecture of your website with the steps below.

- Create a clear, siloed hierarchy (e.g., Home > Category > Subcategory > Article).

- Flatten the structure so important pages stay within 3–4 clicks of the homepage.

- Build hub pages for major sections and interlink related content.

- Use contextual internal links, breadcrumb navigation, and an HTML sitemap to guide crawlers and users.

A well-structured site improves crawling, boosts indexing efficiency, and ensures your most valuable content earns visibility.

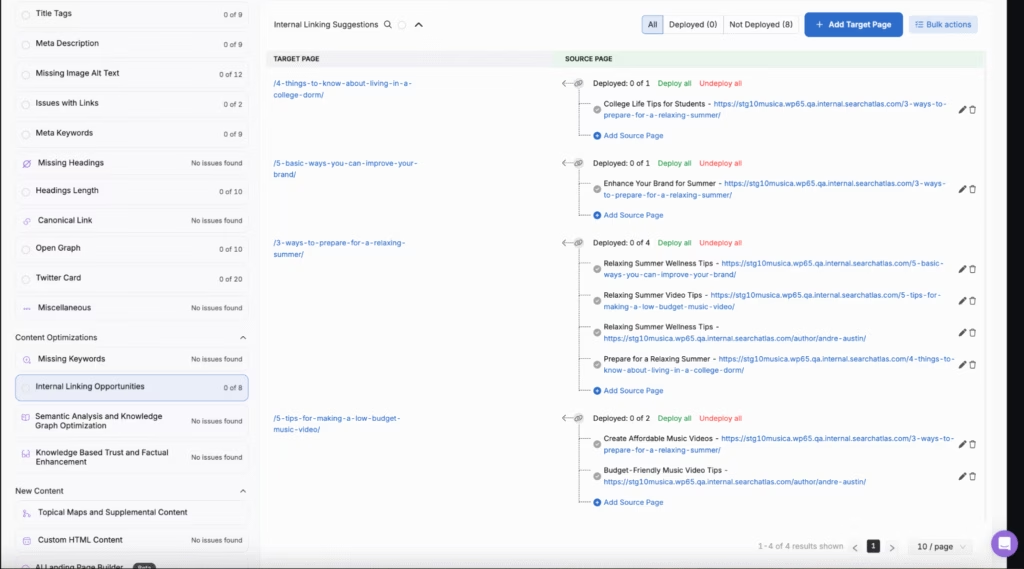

13. Lack of Internal Links

Crawlers never find them or assign them low importance when pages lack internal links. This leaves valuable content disconnected and difficult to index.

Internal links help search engines navigate your site, understand content relationships, and prioritize which pages to crawl and rank. Without enough internal links, pages become “orphans,” receive no link equity, and stay buried in the crawl queue. It weakens topical relevance across your site.

Verify that you are using internal links correctly on your site with the steps below.

- Use the Search Atlas Site Auditor to get a detailed report of all orphaned pages in your website.

- Review your content and identify high-value pages with few or no internal links pointing to them.

- Analyze anchor text usage and linking patterns to ensure logical connections between content.

Resolve internal linking problems with the steps below

- Use Search Atlas OTTO SEO to automatically spot linking opportunities, suggest relevant anchors, and deploy internal links with one click for systematic link distribution.

- Add contextual links from blog posts, service pages, or related content to underlinked pages.

- Ensure each new page gets linked from at least one existing page during content publishing.

- Use keyword-rich anchor text to strengthen topical map signals.

- Audit and update older posts to include internal links to newer content.

Strong internal linking requires deliberate action. Treat it like a strategy to shape what search engines crawl, rank, and understand.

14. Thin Content

Thin content refers to pages that contain little to no valuable information for users or search engines. These pages are too short, duplicate other content, or lack original insight.

Search engines prioritize high-quality, comprehensive pages that satisfy user intent. Thin content fails to meet these expectations, making it less likely to be crawled, indexed, or ranked. Too much thin content dilutes domain authority and wastes crawl budget on low-value pages.

Identify thin content issues by following the steps below.

- Use the Search Atlas On-page SEO Audit tool to get a content score for your web pages. Compare your pages to top competitors.

- Check for duplicate or near-duplicate content across your site.

- Review bounce rates and engagement metrics. Thin pages often show low time-on-page and high exit rates.

Mitigate thin content issues by following the steps below.

- Generate comprehensive, keyword-targeted content with Search Atlas OTTO Content Generator to transform thin pages into valuable resources.

- Expand underdeveloped pages with original content that satisfies search intent.

- Combine several thin pages into a single, more valuable resource if they target similar topics.

- Use canonical tags to prevent indexing of duplicate pages.

- Remove or noindex pages that provide no SEO or user value and cannot be improved.

Thin content brings no value to users or bots. Build depth and originality into every page to secure indexing and search visibility.

15. Duplicate Content (from Technical Issues or Spider Traps)

Duplicate content occurs when identical or nearly identical pages exist at multiple URLs, often unintentionally. Technical issues like URL variations, session IDs, pagination, or misconfigured parameters trigger these duplicates, leading to a spider trap where crawlers endlessly follow loops of duplicate URLs.

Search engines struggle to decide which version to index or rank when they detect duplicate content. This leads to indexing delays, wasted crawl budget, diluted link equity, and ranking suppression. Excessive duplication weakens your entire SEO strategy.

Identify duplicate content problems with the steps below.

- Use Search Atlas OTTO SEO to detect duplicate meta tags (title tags and meta descriptions), and content blocks.

- Check for multiple versions of a page (e.g., http vs https, www vs non-www, or with tracking parameters).

- Review crawl reports for excessive parameter-based URLs or near-identical page copies.

To prevent duplicate content, follow the instructions below.

- Consolidate duplicates using canonical tags (rel=”canonical”) to point to the preferred version.

- Implement 301 redirects to eliminate redundant URLs.

- Use parameter handling in Google Search Console and clean up your URL structure.

- Add noindex tags to duplicate versions when removal is not possible.

- Block spider traps in your robots.txt to prevent unnecessary crawling.

Duplicate content caused by technical flaws confuses crawlers and harms rankings. Fix it with canonicalization, redirects, and clean architecture to stop crawling in circles.

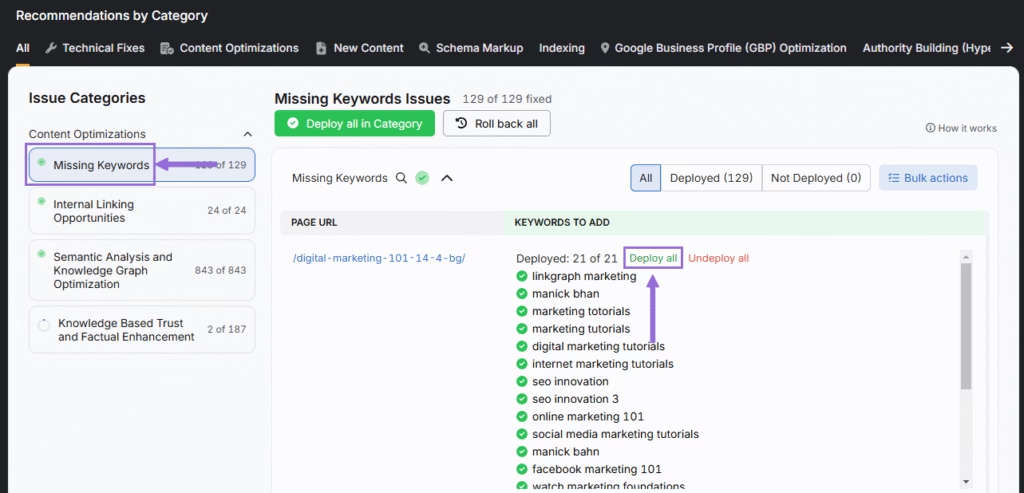

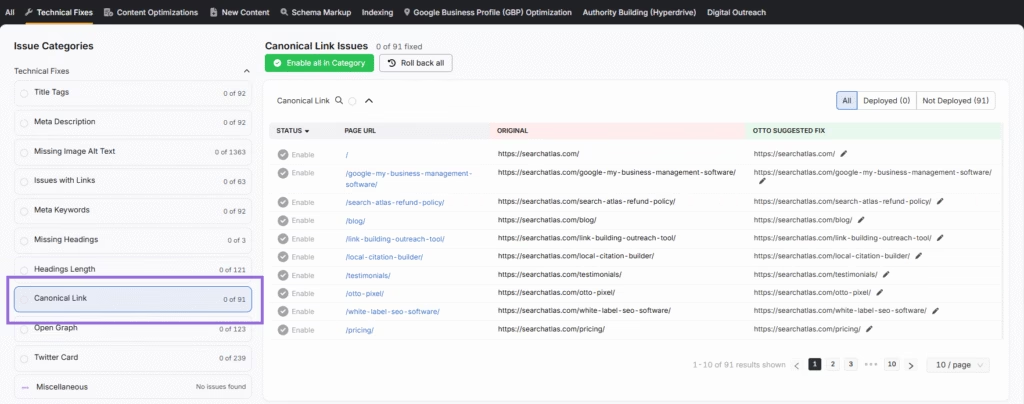

16. SEO Tag Errors (Missing Title, Meta, Canonicals)

Missing or misconfigured SEO tags, like title tags, meta descriptions, and canonical tags, prevent search engines from interpreting, displaying, or prioritizing your content. These tags serve as core signals for relevance and indexing.

Without a unique and descriptive title or meta description, search engines auto-generate subpar snippets in search results, which hurts click-through rates. Missing canonical tags trigger duplicate content issues. Absent or mismatched titles confuse crawlers about page intent and keyword focus.

Take care of SEO tag errors with the instructions below.

- Check for pages lacking canonical tags, especially those with similar or duplicate content.

- Review title tags for keyword clarity and meta descriptions for relevance and uniqueness.

Fix SEO tag errors by following the methods below.

- Use the Search Atlas OTTO SEO to scan and automatically resolve missing, duplicate, or overly long titles and meta tags.

- Add unique, keyword-optimized title tags to every indexable page (55–60 characters is ideal).

- Write clear, concise meta descriptions (up to 160 characters) that entice users and summarize the content.

- Use canonical tags (<link rel=”canonical” href=”…”>) to signal the preferred version of duplicate or similar content.

- Avoid using the same meta tags across multiple pages, even for paginated or filtered content.

Small errors in SEO tags create major indexing problems. Optimizing titles, descriptions, and canonicals guarantees correct interpretation, indexing, and ranking.

17. ‘Noindex’ Tags

A noindex tag tells search engines not to include a page in their index. This works in specific cases but creates problems when applied to pages you want to rank.

Critical pages marked with noindex get crawled by search engines but excluded from search results. These pages disappear from Google, even if they offer high-quality and relevant content. Accidental or excessive use of noindex destroys visibility and breaks your SEO strategy.

Search for noindex tags in your site by following the steps below.

- Use the Search Atlas On-page Audit Tool or Google Search Console to find pages with the noindex directive.

- Check the HTTP headers and page source for

<meta name="robots" content="noindex">. - Monitor drops in indexed pages in Google Search Console Pages report.

Resolve noindex tag issues by following the steps below.

- Remove the noindex tag from any pages intended for indexing and search appearance.

- Audit your CMS and plugin settings to ensure they do not auto-apply noindex to templates or archives.

- Use noindex only on low-value pages like login screens, thank-you pages, or duplicate filters.

- The noindex tag works as a precision tool. Misuse blocks important content from search. Audit and control it to protect visibility.

18. Bad Sitemap Management

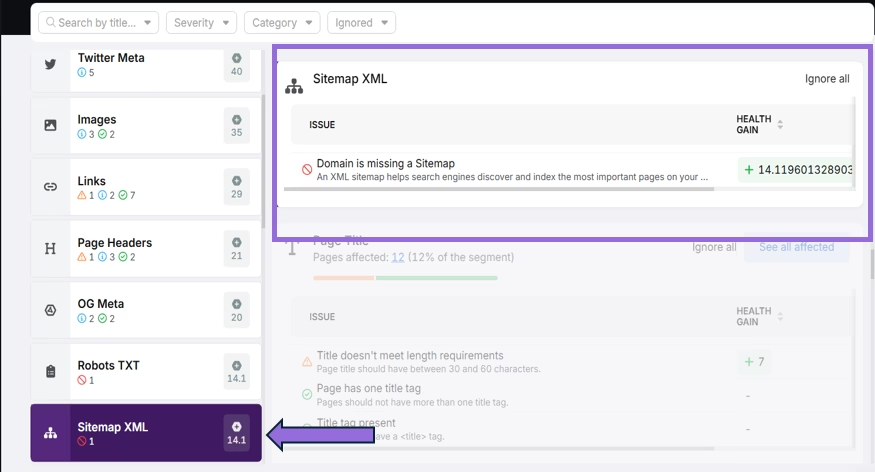

An XML sitemap is a roadmap for search engines, but with missing pages, outdated URLs, or formatting issues, it leads crawlers away from your most important content.

Sitemaps help search engines prioritize and discover your site faster. A broken or inaccurate sitemap misleads crawlers, wastes crawl budget on irrelevant or broken pages, and delays indexing of critical URLs. A sitemap that fails to guide crawlers correctly damages SEO.

Identify inaccurate sitemaps by following the steps below.

- Use the Search Atlas OTTO SEO or Google Search Console Sitemaps report to check for errors or excluded URLs.

- Open your sitemap file (yourdomain.com/sitemap.xml) and scan for broken links, redirects, or outdated content.

- Compare your sitemap against a full crawl of your site to find missing priority pages.

Manage XML sitemaps by following the methods below.

- Keep your sitemap updated automatically using CMS tools or plugins that refresh it when content changes.

- Exclude noindexed, redirected, or canonicalized pages from the sitemap.

- Limit each sitemap file to 50,000 URLs or 50MB uncompressed. Use a sitemap index file for large sites.

- Resubmit the corrected sitemap in Google Search Console to trigger a fresh crawl.

Your sitemap must stay clean and current. Keep it optimized to ensure search engines crawl and index your best content.

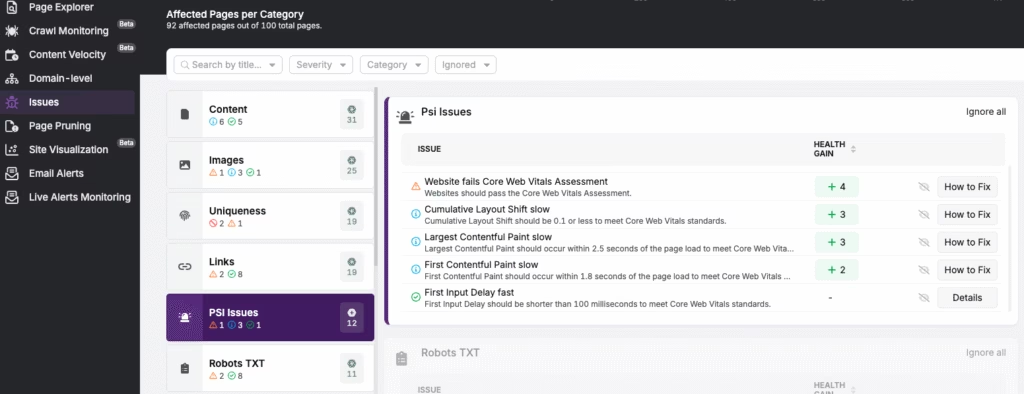

19. Slow Site Speed / Slow Page Load Speed

Pages that take too long to load create friction for users and search engine crawlers. Delays cause bots to abandon crawling sessions before they index your full site.

Google treats page speed as a ranking factor, especially for mobile. Slow-loading sites hurt SEO, raise bounce rates, and limit the number of pages crawled within your crawl budget. A sluggish site sends negative signals to search engines and users.

Test the speed of your site by following the steps below.

- Use Google PageSpeed Insights (PSI) or Lighthouse to test load times and diagnose performance bottlenecks.

- Check Core Web Vitals in Google Search Console to identify real-world speed problems like Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS).

- Review server response times, image sizes, and third-party scripts.

Optimize site speed by following the instructions below.

- Compress and properly size images with modern formats like WebP.

- Minify CSS, JavaScript, and HTML.

- Use lazy loading for images and videos.

- Enable browser caching and use content delivery networks (CDNs).

- Upgrade to a faster hosting provider or use a lightweight theme or framework.

- Reduce the number of third-party scripts and unnecessary plugins.

Fast-loading pages keep users and bots engaged. Prioritize speed to improve crawlability, user experience, and search rankings.

20. Poor Mobile Experience / Mobile Usability

A website that is difficult to use on mobile devices frustrates visitors and causes search engines to deprioritize your pages in mobile search results.

Google uses mobile-first indexing, which means it primarily crawls and indexes the mobile version of your site. Broken, cluttered, or unresponsive mobile experiences affect how Google sees your content and how users interact with it. Poor usability leads to higher bounce rates and lower engagement, which hurt your rankings.

The steps to check for mobile usability issues on your site are listed below.

- Use Google Mobile-Friendly Test or the Mobile Usability report in Search Console to check for touch element spacing, font sizes, and viewport configuration issues.

- Analyze bounce rate and time-on-site metrics from mobile users in Google Analytics.

- Test your site on real devices and emulators to catch layout or navigation issues.

Improve the mobile experience of your site by following the steps below.

- Use a responsive design that adapts to all screen sizes.

- Ensure font sizes are readable on small screens and interactive elements have enough spacing.

- Optimize mobile navigation, use sticky menus, collapsible sections, and clear CTAs.

- Compress images and remove resource-heavy elements to speed up mobile load times.

- Avoid using Flash or popups that interfere with mobile experience.

A poor mobile experience blocks SEO performance and pushes users away. Build for mobile to keep crawlers and visitors engaged.

How To Crawl Your Website and Identify Problems?

To uncover crawlability issues, view your site through the eyes of a search engine. Run a full crawl to simulate how bots, such as Googlebot, navigate pages, follow links, and encounter barriers.

The Search Atlas Site Audit tool simplifies this process by mimicking Googlebot behavior and flagging common issues such as blocked pages in robots.txt, 404 errors, redirect loops, noindex tags, thin content, orphan pages, and weak internal linking. After the crawl, receive a categorized report with filters, exportable data, and clear action steps.

There are different tips for effective crawling. The different tips for effective crawling are listed below.

- Start with the mobile version of Googlebot to reflect mobile-first indexing.

- Check your robots.txt and meta tags before crawling to avoid missing pages.

- Use both crawl maps and data tables to spot structure issues and orphaned pages.

- Re-crawl after fixes to confirm resolution and catch new issues.

Regular crawling with the right tools keeps your site healthy, indexable, and optimized for search engine performance.

How to Ensure Your Website is Crawled and Indexed?

Submitting a sitemap does not guarantee crawling or indexing. Manage how search engines discover your content by taking proactive steps, especially on large or frequently updated sites.

OTTO Dynamic Indexing automates discovery by detecting new or updated pages and triggering real-time crawl signals. This speeds up indexing beyond the standard crawl cycle. Use it for e-commerce catalogs, large-scale websites, or frequently updated blogs.

For faster results, use OTTO Instant Indexing to submit URLs directly to Google and Bing the moment they go live. This reduces visibility delays to minutes. Deploy it for time-sensitive updates like product launches or news content.

Support this process with the Search Atlas Site Auditor Tool. It uses a Googlebot crawler to simulate Google’s site crawl, monitors bot movement, identifies crawl gaps, adjusts scan settings, and flags non-indexable pages. Direct links and next steps simplify issue resolution. Integrated data delivers real-time analysis for visibility and performance management.

Run your first crawl and fix your first issue automatically. Try Search Atlas for FREE today.